I love this game.

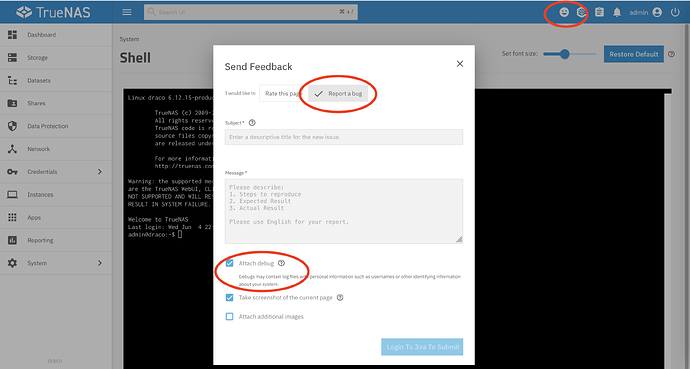

Yes. Click on the smiley face at top right, switch to “Report a bug” and send the debug information to iXsystems.

And please post the full output of zdb -l for all six drives in the pool, not just the supposedly problematic ones. Two failing drives should not be enough to suspend a raidz2.

If you can post the output of zpool import (run without the pool name) in CODE tags, we can see if there are additional problems beyond the 2 REMOVED disks.

If the pool only has 2 REMOVED disks AND is RAID-Z2, then it is possible you just need to force import the pool.
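The redundancy rule these replies rely on can be written down as a tiny check. This is a simplified model, assuming each disk is either fully current or completely unusable; real ZFS also tracks partially stale devices, so treat it as an illustration only. `raidz_can_reconstruct` is a hypothetical helper name.

```python
def raidz_can_reconstruct(total_disks: int, parity: int, unusable: int) -> bool:
    """Return True if a RAID-Z vdev can still rebuild every block.

    Simplified model: each disk is either fully usable or not usable at all,
    and a RAID-Z vdev with `parity` parity disks tolerates that many losses.
    """
    if not 0 <= unusable <= total_disks:
        raise ValueError("unusable must be between 0 and total_disks")
    if not 1 <= parity < total_disks:
        raise ValueError("parity must be at least 1 and less than total_disks")
    return unusable <= parity


# The pool in this thread is a 6-wide RAID-Z2 (nparity: 2 in the zdb labels).
print(raidz_can_reconstruct(6, 2, 2))  # True: two missing disks are survivable
print(raidz_can_reconstruct(6, 2, 3))  # False: a third loss exceeds the parity
```

Under this model, two REMOVED or FAULTED disks alone should leave a RAID-Z2 importable, which is why the suspension looks suspicious.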

This is because ZFS does not want to import a pool that is missing disks: once that happens, the missing disks are no longer up to date. Even if they are good, they would likely need a full resilver, which is both time-consuming and stressful for a pool that is already in a poor state.

What information?

Was there a field identifying which of the four labels it found (0 1 2 3)? If the labels all match, it does not repeat itself 4 times, but it does tell you which labels it found.
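For anyone who wants to check this mechanically, here is a small Python sketch that collects the label indices from the `labels = ...` line(s). It assumes the summary format shown in this thread (`labels = 0 1 2 3`) and, per the post, that mismatching labels are reported in separate blocks with their own line; `labels_found` is a hypothetical helper.

```python
import re
from typing import List, Set


def labels_found(zdb_output: str) -> List[int]:
    """Return the sorted label indices listed on all `labels = ...` lines."""
    found: Set[int] = set()
    for match in re.finditer(r"^\s*labels\s*=\s*([\d ]+)$", zdb_output, re.MULTILINE):
        found.update(int(n) for n in match.group(1).split())
    return sorted(found)


sample = """LABEL 0
version: 5000
name: 'subramanya'
labels = 0 1 2 3
"""
missing = set(range(4)) - set(labels_found(sample))
print(labels_found(sample))             # [0, 1, 2, 3]
print(missing or "all four labels found")
```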

Or you could just post the results here (as a Blockquote).

Me too

root@freenas[~]# zdb -l /dev/sda1

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'subramanya'

state: 0

txg: 36292731

pool_guid: 15005074635607672362

errata: 0

hostid: 1123785772

hostname: 'freenas'

top_guid: 1843460257378462166

guid: 1026282999581038360

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 1843460257378462166

nparity: 2

metaslab_array: 35

metaslab_shift: 37

ashift: 12

asize: 72000790855680

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 12560554049367260037

path: '/dev/disk/by-partuuid/69e33d70-2e29-4440-bafd-0f183720274b'

whole_disk: 0

DTL: 120441

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 3504886088678984596

path: '/dev/disk/by-partuuid/90aea466-1527-4960-ad48-98bc5f0ffd21'

whole_disk: 0

DTL: 104136

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 1026282999581038360

path: '/dev/disk/by-partuuid/ddb020b8-2290-495d-8343-089f94d31ad4'

whole_disk: 0

DTL: 112428

create_txg: 4

children[3]:

type: 'disk'

id: 3

guid: 4494896413352636629

path: '/dev/disk/by-partuuid/4e570c79-6ff8-4ab4-94e3-0fc804c1a3a7'

whole_disk: 0

DTL: 92772

create_txg: 4

aux_state: 'err_exceeded'

children[4]:

type: 'disk'

id: 4

guid: 18426049172646806820

path: '/dev/disk/by-partuuid/e6d7bead-7310-43d8-8236-9aba92acd3c8'

whole_disk: 0

DTL: 133471

create_txg: 4

children[5]:

type: 'disk'

id: 5

guid: 10710542068254203083

path: '/dev/disk/by-partuuid/33870927-1683-4d16-a597-7efee3e7b405'

whole_disk: 0

DTL: 30819

create_txg: 4

expansion_time: 1749000264

removed: 1

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

com.klarasystems:vdev_zaps_v2

labels = 0 1 2 3

root@freenas[~]# zdb -l /dev/sdb1

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'subramanya'

state: 0

txg: 36292731

pool_guid: 15005074635607672362

errata: 0

hostid: 1123785772

hostname: 'freenas'

top_guid: 1843460257378462166

guid: 3504886088678984596

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 1843460257378462166

nparity: 2

metaslab_array: 35

metaslab_shift: 37

ashift: 12

asize: 72000790855680

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 12560554049367260037

path: '/dev/disk/by-partuuid/69e33d70-2e29-4440-bafd-0f183720274b'

whole_disk: 0

DTL: 120441

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 3504886088678984596

path: '/dev/disk/by-partuuid/90aea466-1527-4960-ad48-98bc5f0ffd21'

whole_disk: 0

DTL: 104136

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 1026282999581038360

path: '/dev/disk/by-partuuid/ddb020b8-2290-495d-8343-089f94d31ad4'

whole_disk: 0

DTL: 112428

create_txg: 4

children[3]:

type: 'disk'

id: 3

guid: 4494896413352636629

path: '/dev/disk/by-partuuid/4e570c79-6ff8-4ab4-94e3-0fc804c1a3a7'

whole_disk: 0

DTL: 92772

create_txg: 4

aux_state: 'err_exceeded'

children[4]:

type: 'disk'

id: 4

guid: 18426049172646806820

path: '/dev/disk/by-partuuid/e6d7bead-7310-43d8-8236-9aba92acd3c8'

whole_disk: 0

DTL: 133471

create_txg: 4

children[5]:

type: 'disk'

id: 5

guid: 10710542068254203083

path: '/dev/disk/by-partuuid/33870927-1683-4d16-a597-7efee3e7b405'

whole_disk: 0

DTL: 30819

create_txg: 4

expansion_time: 1749000264

removed: 1

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

com.klarasystems:vdev_zaps_v2

labels = 0 1 2 3

root@freenas[~]# zdb -l /dev/sdc1

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'subramanya'

state: 0

txg: 36292731

pool_guid: 15005074635607672362

errata: 0

hostid: 1123785772

hostname: 'freenas'

top_guid: 1843460257378462166

guid: 18426049172646806820

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 1843460257378462166

nparity: 2

metaslab_array: 35

metaslab_shift: 37

ashift: 12

asize: 72000790855680

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 12560554049367260037

path: '/dev/disk/by-partuuid/69e33d70-2e29-4440-bafd-0f183720274b'

whole_disk: 0

DTL: 120441

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 3504886088678984596

path: '/dev/disk/by-partuuid/90aea466-1527-4960-ad48-98bc5f0ffd21'

whole_disk: 0

DTL: 104136

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 1026282999581038360

path: '/dev/disk/by-partuuid/ddb020b8-2290-495d-8343-089f94d31ad4'

whole_disk: 0

DTL: 112428

create_txg: 4

children[3]:

type: 'disk'

id: 3

guid: 4494896413352636629

path: '/dev/disk/by-partuuid/4e570c79-6ff8-4ab4-94e3-0fc804c1a3a7'

whole_disk: 0

DTL: 92772

create_txg: 4

aux_state: 'err_exceeded'

children[4]:

type: 'disk'

id: 4

guid: 18426049172646806820

path: '/dev/disk/by-partuuid/e6d7bead-7310-43d8-8236-9aba92acd3c8'

whole_disk: 0

DTL: 133471

create_txg: 4

children[5]:

type: 'disk'

id: 5

guid: 10710542068254203083

path: '/dev/disk/by-partuuid/33870927-1683-4d16-a597-7efee3e7b405'

whole_disk: 0

DTL: 30819

create_txg: 4

expansion_time: 1749000264

removed: 1

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

com.klarasystems:vdev_zaps_v2

labels = 0 1 2 3

root@freenas[~]# zdb -l /dev/sdd1

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'subramanya'

state: 0

txg: 36292731

pool_guid: 15005074635607672362

errata: 0

hostid: 1123785772

hostname: 'freenas'

top_guid: 1843460257378462166

guid: 12560554049367260037

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 1843460257378462166

nparity: 2

metaslab_array: 35

metaslab_shift: 37

ashift: 12

asize: 72000790855680

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 12560554049367260037

path: '/dev/disk/by-partuuid/69e33d70-2e29-4440-bafd-0f183720274b'

whole_disk: 0

DTL: 120441

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 3504886088678984596

path: '/dev/disk/by-partuuid/90aea466-1527-4960-ad48-98bc5f0ffd21'

whole_disk: 0

DTL: 104136

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 1026282999581038360

path: '/dev/disk/by-partuuid/ddb020b8-2290-495d-8343-089f94d31ad4'

whole_disk: 0

DTL: 112428

create_txg: 4

children[3]:

type: 'disk'

id: 3

guid: 4494896413352636629

path: '/dev/disk/by-partuuid/4e570c79-6ff8-4ab4-94e3-0fc804c1a3a7'

whole_disk: 0

DTL: 92772

create_txg: 4

aux_state: 'err_exceeded'

children[4]:

type: 'disk'

id: 4

guid: 18426049172646806820

path: '/dev/disk/by-partuuid/e6d7bead-7310-43d8-8236-9aba92acd3c8'

whole_disk: 0

DTL: 133471

create_txg: 4

children[5]:

type: 'disk'

id: 5

guid: 10710542068254203083

path: '/dev/disk/by-partuuid/33870927-1683-4d16-a597-7efee3e7b405'

whole_disk: 0

DTL: 30819

create_txg: 4

expansion_time: 1749000264

removed: 1

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

com.klarasystems:vdev_zaps_v2

labels = 0 1 2 3

root@freenas[~]# zdb -l /dev/sde1

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'subramanya'

state: 0

txg: 36292723

pool_guid: 15005074635607672362

errata: 0

hostid: 1123785772

hostname: 'freenas'

top_guid: 1843460257378462166

guid: 10710542068254203083

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 1843460257378462166

nparity: 2

metaslab_array: 35

metaslab_shift: 37

ashift: 12

asize: 72000790855680

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 12560554049367260037

path: '/dev/disk/by-partuuid/69e33d70-2e29-4440-bafd-0f183720274b'

whole_disk: 0

DTL: 120441

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 3504886088678984596

path: '/dev/disk/by-partuuid/90aea466-1527-4960-ad48-98bc5f0ffd21'

whole_disk: 0

DTL: 104136

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 1026282999581038360

path: '/dev/disk/by-partuuid/ddb020b8-2290-495d-8343-089f94d31ad4'

whole_disk: 0

DTL: 112428

create_txg: 4

children[3]:

type: 'disk'

id: 3

guid: 4494896413352636629

path: '/dev/disk/by-partuuid/4e570c79-6ff8-4ab4-94e3-0fc804c1a3a7'

whole_disk: 0

DTL: 92772

create_txg: 4

children[4]:

type: 'disk'

id: 4

guid: 18426049172646806820

path: '/dev/disk/by-partuuid/e6d7bead-7310-43d8-8236-9aba92acd3c8'

whole_disk: 0

DTL: 133471

create_txg: 4

children[5]:

type: 'disk'

id: 5

guid: 10710542068254203083

path: '/dev/disk/by-partuuid/33870927-1683-4d16-a597-7efee3e7b405'

whole_disk: 0

DTL: 30819

create_txg: 4

expansion_time: 1749000264

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

com.klarasystems:vdev_zaps_v2

labels = 0 1 2 3

root@freenas[~]# zdb -l /dev/sdf1

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'subramanya'

state: 0

txg: 36292727

pool_guid: 15005074635607672362

errata: 0

hostid: 1123785772

hostname: 'freenas'

top_guid: 1843460257378462166

guid: 4494896413352636629

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 1843460257378462166

nparity: 2

metaslab_array: 35

metaslab_shift: 37

ashift: 12

asize: 72000790855680

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 12560554049367260037

path: '/dev/disk/by-partuuid/69e33d70-2e29-4440-bafd-0f183720274b'

whole_disk: 0

DTL: 120441

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 3504886088678984596

path: '/dev/disk/by-partuuid/90aea466-1527-4960-ad48-98bc5f0ffd21'

whole_disk: 0

DTL: 104136

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 1026282999581038360

path: '/dev/disk/by-partuuid/ddb020b8-2290-495d-8343-089f94d31ad4'

whole_disk: 0

DTL: 112428

create_txg: 4

children[3]:

type: 'disk'

id: 3

guid: 4494896413352636629

path: '/dev/disk/by-partuuid/4e570c79-6ff8-4ab4-94e3-0fc804c1a3a7'

whole_disk: 0

DTL: 92772

create_txg: 4

children[4]:

type: 'disk'

id: 4

guid: 18426049172646806820

path: '/dev/disk/by-partuuid/e6d7bead-7310-43d8-8236-9aba92acd3c8'

whole_disk: 0

DTL: 133471

create_txg: 4

children[5]:

type: 'disk'

id: 5

guid: 10710542068254203083

path: '/dev/disk/by-partuuid/33870927-1683-4d16-a597-7efee3e7b405'

whole_disk: 0

DTL: 30819

create_txg: 4

expansion_time: 1749000264

aux_state: 'err_exceeded'

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

com.klarasystems:vdev_zaps_v2

labels = 0 1 2 3

root@freenas[~]# zpool import

pool: FAST

id: 15095281718178502118

state: ONLINE

action: The pool can be imported using its name or numeric identifier.

config:

FAST ONLINE

nvme0n1p2 ONLINE

pool: subramanya

id: 15005074635607672362

state: ONLINE

action: The pool can be imported using its name or numeric identifier.

config:

subramanya ONLINE

raidz2-0 ONLINE

69e33d70-2e29-4440-bafd-0f183720274b ONLINE

90aea466-1527-4960-ad48-98bc5f0ffd21 ONLINE

ddb020b8-2290-495d-8343-089f94d31ad4 ONLINE

4e570c79-6ff8-4ab4-94e3-0fc804c1a3a7 ONLINE

e6d7bead-7310-43d8-8236-9aba92acd3c8 ONLINE

33870927-1683-4d16-a597-7efee3e7b405 ONLINE

root@freenas[~]#

I don’t understand what LABEL means, even after looking at the zdb.8 page of the OpenZFS documentation.

But it’s LABEL 0 for all 6 disks.

Hopefully that would work, all 6 disks appear to be in good shape.

Sorry, I don’t get that part, I can’t find the top right smiley face.

Don’t I have to go to JIRA and fill in a bug report with a debug file?

Two disks have a different TXG than the others.

Good catch. Those two are sde and sdf, which are B002R13D (REMOVED) and B002SNAD (FAULTED) respectively.

For anyone also learning about zdb:

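The txg (transaction group) value in ZFS represents a specific point in time in the state of the storage pool, allowing users to track changes and manage data consistency. Comparing it across the labels of a pool’s disks shows whether any disk missed recent transactions.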
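A minimal Python sketch of that comparison, assuming the `zdb -l` layout posted in this thread (a top-level `txg:` line before the `vdev_tree`). The helper names are hypothetical, and the label text is trimmed to the lines the parser needs.

```python
import re
from typing import Dict


def label_txg(zdb_output: str) -> int:
    """Return the top-level `txg:` value from `zdb -l` output.

    The pattern is anchored to the start of a line, so `create_txg:` lines,
    which also contain "txg:", are skipped.
    """
    match = re.search(r"^\s*txg:\s*(\d+)", zdb_output, re.MULTILINE)
    if match is None:
        raise ValueError("no top-level txg line found")
    return int(match.group(1))


def stale_devices(outputs: Dict[str, str]) -> Dict[str, int]:
    """Map each device whose label txg is behind the newest label to its lag."""
    txgs = {dev: label_txg(text) for dev, text in outputs.items()}
    newest = max(txgs.values())
    return {dev: newest - txg for dev, txg in txgs.items() if txg < newest}


# txg values copied from the zdb -l output in this thread.
reported = {
    "sda1": 36292731, "sdb1": 36292731, "sdc1": 36292731,
    "sdd1": 36292731, "sde1": 36292723, "sdf1": 36292727,
}
outputs = {dev: f"name: 'subramanya'\ntxg: {txg}\ncreate_txg: 4\n"
           for dev, txg in reported.items()}
print(stale_devices(outputs))  # {'sde1': 8, 'sdf1': 4}
```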

Here’s the new process, automating data collection:

I think it could be useful to send to iXsystems a debug from a system where no attempt at recovery has yet been done, to investigate the issue.

sdf is 4 txg behind (20 s)

sde is 8 txg behind (40 s), and this is too much for ZFS to import normally.
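The seconds figures follow from multiplying the txg lag by the txg interval. A sketch, assuming `zfs_txg_timeout` is at its OpenZFS default of 5 seconds; txgs can also close early under heavy write load, so this is only a rough estimate. `approx_seconds_behind` is a hypothetical helper.

```python
# Assumption: zfs_txg_timeout is at its OpenZFS default of 5 seconds.
TXG_TIMEOUT_S = 5


def approx_seconds_behind(txg_lag: int, txg_timeout_s: int = TXG_TIMEOUT_S) -> int:
    """Roughly estimate how much wall-clock time a txg lag represents."""
    if txg_lag < 0:
        raise ValueError("txg_lag must be non-negative")
    return txg_lag * txg_timeout_s


newest = 36292731  # txg on sda1..sdd1
for dev, txg in {"sde1": 36292723, "sdf1": 36292727}.items():
    lag = newest - txg
    print(f"{dev}: {lag} txg behind, roughly {approx_seconds_behind(lag)} s")
# sde1: 8 txg behind, roughly 40 s
# sdf1: 4 txg behind, roughly 20 s
```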

You should still be able to import a degraded pool, and then resilver to the last txg. At worst, the pool could be imported by discarding the last 40 seconds. The first command to try would be:

sudo zpool import -Fn subramanya

And the expected output would be… nothing.

and then…

IF that is the case, AND IF @HoneyBadger or @Arwen confirm that this is the right thing to try, then you would proceed to run the real recovery command without the -n but with extra -R /mnt to import in the right place.
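To make the difference between the dry run and the real recovery explicit, here is a sketch that only builds the two command lines and runs nothing. `zpool_import_argv` is a hypothetical helper; `-F`, `-n` and `-R` are the `zpool import` options named in the post, and `-F -n` is the same as `-Fn`. Prefix with `sudo` as in the post.

```python
from typing import List, Optional


def zpool_import_argv(pool: str, rewind: bool = False, dry_run: bool = False,
                      altroot: Optional[str] = None) -> List[str]:
    """Build (but do not run) a `zpool import` command line.

    -F  recovery mode: discard the last few transactions if needed
    -n  together with -F, only report whether recovery would work
    -R  import under an alternate root such as /mnt
    """
    if dry_run and not rewind:
        raise ValueError("-n only makes sense together with -F")
    argv = ["zpool", "import"]
    if rewind:
        argv.append("-F")
    if dry_run:
        argv.append("-n")
    if altroot is not None:
        argv += ["-R", altroot]
    argv.append(pool)
    return argv


# Step 1: the dry run.
print(" ".join(zpool_import_argv("subramanya", rewind=True, dry_run=True)))
# zpool import -F -n subramanya
# Step 2, only after the dry run is clean and the approach has been confirmed:
print(" ".join(zpool_import_argv("subramanya", rewind=True, altroot="/mnt")))
# zpool import -F -R /mnt subramanya
```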

But let’s move very carefully with that.

Good catch @winnielinnie on the drive’s last ZFS TXG not matching on 2 of the disks.

Because of that, a simple or even forced import will likely fail.

My guess is that during operation something caused them to be disconnected from the server while the pool was imported. Because the vdev is RAID-Z2, ZFS continued normal operation, leaving the 2 REMOVED drives behind in ZFS transactions.

You can try the following:

- Gracefully shut down the server

- Temporarily disconnect disks `sde` and `sdf`

- Power up the server

- Go into command line mode

- Run `zpool import`, which should show 2 missing disks

- Run `zpool import subramanya`, which will probably fail but is pretty harmless to try

- Then try `zpool import -f subramanya`
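The command-line part of those steps can be sketched as a small Python script that stops at the first import that succeeds. This is an illustration under stated assumptions: it must run as root, only after sde and sdf are physically disconnected, and it deliberately does not attempt `-F`. `try_import` and `run_cmd` are hypothetical names; the `run` parameter lets the logic be exercised with a fake runner instead of real disks.

```python
import subprocess
from typing import Callable, List, Optional, Sequence

# A runner takes an argv list and returns the command's exit status.
Runner = Callable[[Sequence[str]], int]


def run_cmd(argv: Sequence[str]) -> int:
    """Run a command, letting its output go to the terminal."""
    return subprocess.run(list(argv), check=False).returncode


def try_import(pool: str, run: Runner = run_cmd) -> Optional[List[str]]:
    """List importable pools, then try a plain import and a forced import.

    Returns the import command that succeeded, or None if both failed.
    """
    run(["zpool", "import"])  # informational: should show 2 missing disks
    for argv in (["zpool", "import", pool], ["zpool", "import", "-f", pool]):
        if run(argv) == 0:
            return argv
    return None
```

Called as `try_import("subramanya")`, it returns the command that worked, which tells you whether the forced import was needed.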

If that works, you will somehow need to deal with the 2 disks. Part of the issue is that ZFS knows they are part of the pool subramanya, and wants to use them during import. But, because they are out of date, ZFS can’t use them.

One possible “fix”, is to mark the 2 missing disks offline:

zpool offline subramanya DEVICE1

zpool offline subramanya DEVICE2

It is possible this won’t work because they are now faulted.

Without the ability to mark the 2 problematic drives as OFFLINE, any pool import attempt with 1 or both attached will likely cause a pool import failure.

Basically, we need to wipe the ZFS labels on both problematic drives and get the pool imported, at which point you can resilver them one at a time. But this can be dangerous if performed on the wrong disk, since you have no more redundancy. A spare or blank disk would be best…
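Because wiping the wrong disk is the main risk here, one safeguard is to confirm a disk’s own label guid before touching it. A minimal Python sketch, assuming the `zdb -l` layout posted in this thread (`pool_guid:` and `top_guid:` first, then the device’s own `guid:`, then the `vdev_tree` children). It only performs the check and clears nothing; `label_guid` and `safe_to_wipe` are hypothetical names.

```python
import re


def label_guid(zdb_output: str) -> int:
    """Return the device's own `guid:` from `zdb -l` output.

    The anchored pattern skips `pool_guid:` and `top_guid:`, so the first
    match is the device's own guid, which precedes the vdev_tree children.
    """
    match = re.search(r"^\s*guid:\s*(\d+)", zdb_output, re.MULTILINE)
    if match is None:
        raise ValueError("no guid line found")
    return int(match.group(1))


def safe_to_wipe(zdb_output: str, expected_guid: int) -> bool:
    """Return True only if this disk's label carries the guid you expect."""
    return label_guid(zdb_output) == expected_guid


# Trimmed from the sde1 output in this thread (children[5], the REMOVED disk).
sde1 = """pool_guid: 15005074635607672362
top_guid: 1843460257378462166
guid: 10710542068254203083
vdev_children: 1
guid: 1843460257378462166
"""
print(safe_to_wipe(sde1, 10710542068254203083))  # True
print(safe_to_wipe(sde1, 4494896413352636629))   # False: that is sdf1's guid
```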

If all goes well, the outcome would be either no data loss or extremely minimal data loss. I say minimal because of 2 factors:

- Any bad block in use by ZFS on any of the remaining 4 drives will likely cause a file to be lost. (But if it is metadata, there is potentially no loss, because metadata is stored redundantly even beyond RAID-Z2.)

- If you have to use “-F” during pool import, some of the most recent changes are thrown out.

Good luck.

Thank you VERY MUCH for the detailed procedure.

I’ll consider purchasing a similar spare HDD; it could be useful in the future for backups.

But I still can’t really make sense of what happened, especially considering the pool is RAIDZ2: with 2 disks faulted/removed, it should have continued to operate, AFAIK.

3:21

New alerts:

Pool subramanya state is DEGRADED: One or more devices has been removed by the administrator. Sufficient replicas exist for the pool to continue functioning in a degraded state.

The following devices are not healthy:

Disk WDC_WD122KRYZ-01CDAB0 B002R13D is REMOVED

3:22

The following alert has been cleared:

Pool subramanya state is DEGRADED: One or more devices has been removed by the administrator. Sufficient replicas exist for the pool to continue functioning in a degraded state.

The following devices are not healthy:

Disk WDC_WD122KRYZ-01CDAB0 B002R13D is REMOVED

3:23

New alerts:

Pool subramanya state is DEGRADED: One or more devices has been removed by the administrator. Sufficient replicas exist for the pool to continue functioning in a degraded state.

The following devices are not healthy:

Disk WDC_WD122KRYZ-01CDAB0 B002R13D is REMOVED

3:24

The following alert has been cleared:

Pool subramanya state is DEGRADED: One or more devices has been removed by the administrator. Sufficient replicas exist for the pool to continue functioning in a degraded state.

The following devices are not healthy:

Disk WDC_WD122KRYZ-01CDAB0 B002R13D is REMOVED

3:25

New alerts:

Pool subramanya state is DEGRADED: One or more devices are faulted in response to persistent errors. Sufficient replicas exist for the pool to continue functioning in a degraded state.

The following devices are not healthy:

Disk WDC_WD122KRYZ-01CDAB0 B002SNAD is FAULTED

Disk WDC_WD122KRYZ-01CDAB0 B002R13D is REMOVED

3:26

New alert:

Pool subramanya state is SUSPENDED: One or more devices are faulted in response to IO failures.

The following devices are not healthy:

Disk WDC_WD122KRYZ-01CDAB0 B002SNAD is FAULTED

Disk WDC_WD122KRYZ-01CDAB0 B002R13D is REMOVED

The following alert has been cleared:

Pool subramanya state is DEGRADED: One or more devices are faulted in response to persistent errors. Sufficient replicas exist for the pool to continue functioning in a degraded state.

The following devices are not healthy:

Disk WDC_WD122KRYZ-01CDAB0 B002SNAD is FAULTED

Disk WDC_WD122KRYZ-01CDAB0 B002R13D is REMOVED

Current alerts:

Pool subramanya state is SUSPENDED: One or more devices are faulted in response to IO failures.

The following devices are not healthy:

Disk WDC_WD122KRYZ-01CDAB0 B002SNAD is FAULTED

Disk WDC_WD122KRYZ-01CDAB0 B002R13D is REMOVED

3:52

Monitor is DOWN: (First monitoring alert; I’m guessing the VM kept running from RAM for 20 min or so.)

Let’s say the first REMOVED disk was due to a hardware issue (heat, whatever).

Is the second disk becoming FAULTED within minutes of the first one a coincidence? FAULTED means a disk issue, unlike REMOVED, right? So shouldn’t a smartctl test reveal something?

And then why was the pool suspended even though it could operate with 2 faulted disks? (I think it’s better that it got suspended in this situation, but I don’t think it’s the expected behavior.)

I think it could be useful to send to iXsystems a debug from a system where no attempt at recovery has yet been done, to investigate the issue.

I’m guessing this still stands, even after Arwen’s answer, correct?

This cannot hurt… You appear to be a still pristine case of a bug that has plagued many people, so that would give material for review.

Of course, it could be an unfortunate case of two drives failing in quick succession, BUT two failures should not fault a raidz2 vdev, and there’s nothing suspicious in the SMART reports.

And then your priority is recovery.

I see, I’ll report the bug tonight, and should be able to wait for a first answer.

If I’m quickly asked to wait before applying the recovery procedure and to assist in any way, I’ll be happy to postpone and help. I can afford that, but not for weeks of course.

I did not realize that. Makes sense now.

That made me promise myself a lot of changes in the way I structure data and do backups in the future!!

Hopefully this occurrence of the bug will be useful to iXsystems as well.

This is why I think we have either a genuine bug or some factor besides the drives themselves (heat, for example). I once had a disk backplane with a loose power connector: 4 out of 16 drives went offline at once. I shut down the system, fixed the power connector, and the 4 drives resilvered when the zpool was mounted at boot time. This was FreeBSD + ZFS.