Below a list of all errors that I’m getting on the target side in the TrueNAS GUI after replicating zfs snapshots with zfs-autobackup of a dataset that is mounted on both source and target server.

I suspect this is because zfs-autobackup doesn’t “properly” mount the target dataset, because after a reboot (where TrueNAS does the mounting itself), there are no errors…

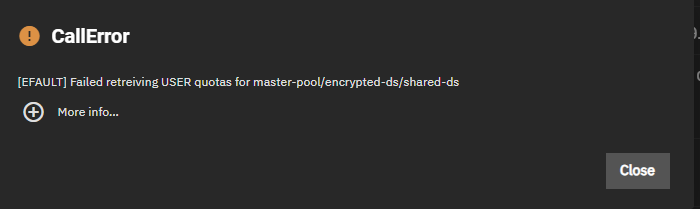

[EFAULT] Failed retreiving USER quotas for master-pool/encrypted-ds/test-ds

Error: concurrent.futures.process._RemoteTraceback:

"""

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_quota.py", line 76, in get_quota

with libzfs.ZFS() as zfs:

File "libzfs.pyx", line 529, in libzfs.ZFS.__exit__

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_quota.py", line 78, in get_quota

quotas = resource.userspace(quota_props)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "libzfs.pyx", line 3651, in libzfs.ZFSResource.userspace

libzfs.ZFSException: cannot get used/quota for master-pool/encrypted-ds/test-ds: unsupported version or feature

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/lib/python3.11/concurrent/futures/process.py", line 256, in _process_worker

r = call_item.fn(*call_item.args, **call_item.kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 112, in main_worker

res = MIDDLEWARE._run(*call_args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 46, in _run

return self._call(name, serviceobj, methodobj, args, job=job)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 34, in _call

with Client(f'ws+unix://{MIDDLEWARE_RUN_DIR}/middlewared-internal.sock', py_exceptions=True) as c:

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 40, in _call

return methodobj(*params)

^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_quota.py", line 80, in get_quota

raise CallError(f'Failed retreiving {quota_type} quotas for {ds}')

middlewared.service_exception.CallError: [EFAULT] Failed retreiving USER quotas for master-pool/encrypted-ds/test-ds

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 198, in call_method

result = await self.middleware.call_with_audit(message['method'], serviceobj, methodobj, params, self)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1466, in call_with_audit

result = await self._call(method, serviceobj, methodobj, params, app=app,

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1417, in _call

return await methodobj(*prepared_call.args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 187, in nf

return await func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/pool_/dataset_quota_and_perms.py", line 225, in get_quota

quota_list = await self.middleware.call(

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1564, in call

return await self._call(

^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1425, in _call

return await self._call_worker(name, *prepared_call.args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1431, in _call_worker

return await self.run_in_proc(main_worker, name, args, job)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1337, in run_in_proc

return await self.run_in_executor(self.__procpool, method, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1321, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

middlewared.service_exception.CallError: [EFAULT] Failed retreiving USER quotas for master-pool/encrypted-ds/test-ds

[EFAULT] Failed retreiving GROUP quotas for master-pool/encrypted-ds/test-ds

Error: concurrent.futures.process._RemoteTraceback:

"""

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_quota.py", line 76, in get_quota

with libzfs.ZFS() as zfs:

File "libzfs.pyx", line 529, in libzfs.ZFS.__exit__

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_quota.py", line 78, in get_quota

quotas = resource.userspace(quota_props)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "libzfs.pyx", line 3651, in libzfs.ZFSResource.userspace

libzfs.ZFSException: cannot get used/quota for master-pool/encrypted-ds/test-ds: dataset is busy

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/lib/python3.11/concurrent/futures/process.py", line 256, in _process_worker

r = call_item.fn(*call_item.args, **call_item.kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 112, in main_worker

res = MIDDLEWARE._run(*call_args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 46, in _run

return self._call(name, serviceobj, methodobj, args, job=job)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 34, in _call

with Client(f'ws+unix://{MIDDLEWARE_RUN_DIR}/middlewared-internal.sock', py_exceptions=True) as c:

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 40, in _call

return methodobj(*params)

^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_quota.py", line 80, in get_quota

raise CallError(f'Failed retreiving {quota_type} quotas for {ds}')

middlewared.service_exception.CallError: [EFAULT] Failed retreiving GROUP quotas for master-pool/encrypted-ds/test-ds

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 198, in call_method

result = await self.middleware.call_with_audit(message['method'], serviceobj, methodobj, params, self)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1466, in call_with_audit

result = await self._call(method, serviceobj, methodobj, params, app=app,

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1417, in _call

return await methodobj(*prepared_call.args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 187, in nf

return await func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/pool_/dataset_quota_and_perms.py", line 225, in get_quota

quota_list = await self.middleware.call(

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1564, in call

return await self._call(

^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1425, in _call

return await self._call_worker(name, *prepared_call.args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1431, in _call_worker

return await self.run_in_proc(main_worker, name, args, job)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1337, in run_in_proc

return await self.run_in_executor(self.__procpool, method, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1321, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

middlewared.service_exception.CallError: [EFAULT] Failed retreiving GROUP quotas for master-pool/encrypted-ds/test-ds

[ENOENT] Path /mnt/master-pool/encrypted-ds/test-ds not found

Error: Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 198, in call_method

result = await self.middleware.call_with_audit(message['method'], serviceobj, methodobj, params, self)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1466, in call_with_audit

result = await self._call(method, serviceobj, methodobj, params, app=app,

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1428, in _call

return await self.run_in_executor(prepared_call.executor, methodobj, *prepared_call.args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1321, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.11/concurrent/futures/thread.py", line 58, in run

result = self.fn(*self.args, **self.kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 191, in nf

return func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 53, in nf

res = f(*args, **kwargs)

^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/filesystem.py", line 422, in stat

raise CallError(f'Path {_path} not found', errno.ENOENT)

middlewared.service_exception.CallError: [ENOENT] Path /mnt/master-pool/encrypted-ds/test-ds not found

Is there perhaps a way to force TrueNAS to remount a specific (or all) datasets from the command line?