I’m curious. I read that it does what it claims, but what cable adapter will you use? Also, what cage for power delivery? Thanks.

You use an SFF-8643 to SFF-8639 cable, which comes with its own power connector for the drive.

You could also go with a pair of these: fancy, but expensive.

(They have lots of other form factors too)

Newegg has the same thing and (for me anyway) cheaper once shipping is included.

I use an x8-to-4-NVMe card in my build that runs Optane drives for L2ARC and SLOG without issues.

@Orlando_Furioso , what is the available budget?

Hope you don’t mind if I answer on his behalf.

The least possible expense for a functional setup. After all, this is out-of-pocket money, and I’m betting that the projects keep coming in. Otherwise I’ll have to make PC-parts stew to feed my family.

Well, it would be helpful, to say the least, if there were a certain amount or at least a somewhat specific range. As it stands, that’s nothing we can work with.

… he stated $2000, and that was not realistic for his aim. Hence my response.

Maybe when he’s done, he’ll post what the setup ended up being, along with the prices.

tl;dr: $1200-$2000

That’s actually pretty accurate. Starting from the system I’m currently plugging parts into, the roughly $1200-$2000 budget is what I have for drives in the near term. I was about to drop $900-$1400 on four brand-new 4TB NVMe M.2 drives and put them into the (relatively inexpensive) Hyper M.2 card I bought for x4x4x4x4 bifurcation. I was sort of resigned to being stuck at ~8-12 TB of storage that I could scrub footage from over the network, and then, later, building out a SATA pool of spinning drives for lukewarm storage.
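For reference, the ~8-12 TB range falls out of simple arithmetic on four 4TB drives: striped 2-way mirrors keep half the raw space, while RAIDZ1 gives up roughly one drive’s worth to parity. A rough sketch, deliberately ignoring ZFS overhead, RAIDZ padding, the free-space headroom you should keep, and TB vs TiB:

```python
# Rough usable capacity for a pool of identical drives.
# Simplification: ignores ZFS metadata/slop space, RAIDZ padding,
# the free-space headroom you should keep, and TB (10^12) vs TiB (2^40).

def usable_tb(drive_tb: float, n_drives: int, layout: str) -> float:
    if layout == "stripe":                 # no redundancy at all
        return drive_tb * n_drives
    if layout == "mirror2":                # striped 2-way mirrors keep half
        if n_drives % 2:
            raise ValueError("2-way mirrors need an even number of drives")
        return drive_tb * n_drives / 2
    if layout in ("raidz1", "raidz2", "raidz3"):
        parity = int(layout[-1])           # approx. drives' worth of space lost to parity
        if n_drives <= parity:
            raise ValueError("not enough drives for that parity level")
        return drive_tb * (n_drives - parity)
    raise ValueError(f"unknown layout: {layout}")

for layout in ("mirror2", "raidz1", "raidz2"):
    print(f"4 x 4TB as {layout}: ~{usable_tb(4.0, 4, layout):.0f} TB usable")
# 4 x 4TB as mirror2: ~8 TB usable
# 4 x 4TB as raidz1: ~12 TB usable
# 4 x 4TB as raidz2: ~8 TB usable
```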

This two-tier setup may still happen, but then @etorix whispered in my ear about PCIe lane splitting. So now I’m eyeing the higher capacities, and the possibility of adding more devices, that (presumably used) U.2 SSDs could give me.

I ordered the 8-port U.2 SSD x16 card from AliExpress. I haven’t decided between the cables and the IcyDock. Even though it’s twice the price, I’m leaning towards the IcyDock because it’ll be tidier.
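A quick PCIe 3.0 calculation shows the trade-off of an 8-port card in one x16 slot. It assumes the card uses a PCIe switch to give each of the 8 ports four lanes; I haven’t verified how this particular card is wired, and some cards split lanes differently. Eight x4 ports add up to 32 downstream lanes sharing 16 upstream ones, so all drives at full tilt would be oversubscribed 2:1. For a NAS fed by a 10GbE link (about 1.25 GB/s), that is unlikely to be the limit.

```python
# Back-of-envelope PCIe 3.0 math for an 8-port U.2 card on an x16 uplink.
# Assumption: a PCIe switch on the card fans 16 upstream lanes out to 8 x4 ports.
# Only line encoding is accounted for; packet/protocol overhead is ignored.

GT_PER_S = 8.0            # PCIe 3.0 transfer rate per lane (GT/s)
ENCODING = 128 / 130      # PCIe 3.0 uses 128b/130b line encoding

def lane_gb_per_s() -> float:
    """Usable bandwidth of one PCIe 3.0 lane in GB/s (10^9 bytes/s)."""
    return GT_PER_S * ENCODING / 8

def oversubscription(ports: int, lanes_per_port: int, uplink_lanes: int) -> float:
    """Ratio of downstream lanes to upstream lanes."""
    return ports * lanes_per_port / uplink_lanes

print(f"x16 uplink ≈ {16 * lane_gb_per_s():.2f} GB/s")          # ≈ 15.75
print(f"per x4 drive ≈ {4 * lane_gb_per_s():.2f} GB/s")         # ≈ 3.94
print(f"oversubscription = {oversubscription(8, 4, 16):.0f}:1")  # 2:1
```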

I’ve started plugging things in and ran MemTest. I’ve gotta say, it’s pretty neat having more RAM than your OS drive. I’ve got the 2x U.2 carrier card in an x8 slot with those test U.2 drives, and I’ll be installing TrueNAS tonight. I want to try pushing RED footage around across the network, even on only 1.92TB, just to be sure it all works before I spend much more.

I really appreciate all the feedback you guys have given me. It’s been hugely helpful. System is working and I’m pretty stoked.

Specs: X10SRi-F, E5-1650 v3, 256GB ECC RAM, Intel X520-DA2 Ethernet Converged Network Adapter

Here’s what we’ve been using as a good (maybe not gold, but usable) standard for logging/editing/color grading:

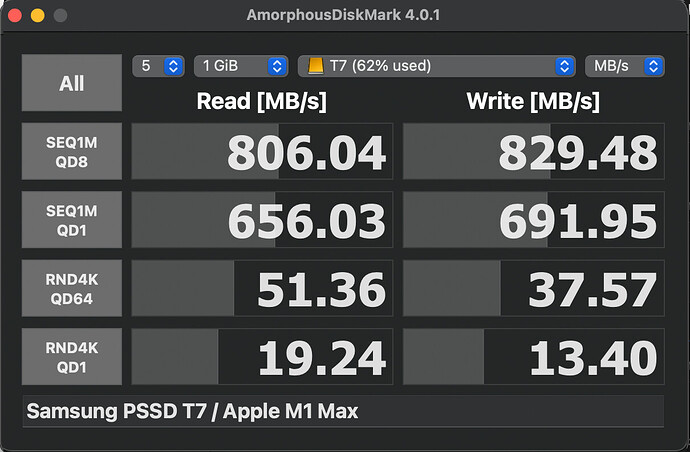

Little USB-C external SSDs plugged directly into my laptop, with no network involved.

I plugged the U.2 drives (Samsung PM983 1.92TB U.2 NVMe) into the U.2 to PCIe 3.0 adapter card (x8) and started pushing things around a little in TrueNAS, just to A) make sure I didn’t buy a dud and B) get my feet wet.

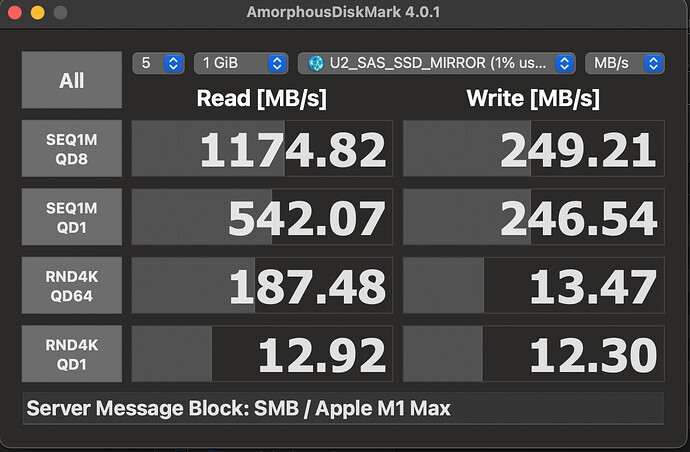

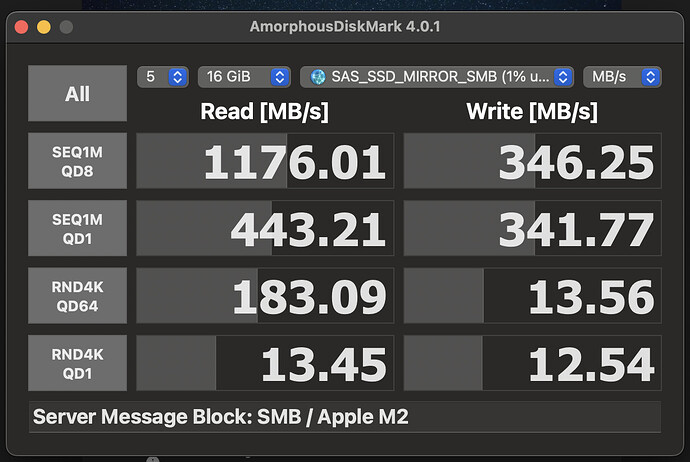

You guys will yell at me that I’m doing it wrong, but for comparison’s sake, this is what I’m currently getting from a 2-wide mirror across the network.

I wish the write speed were faster, but it seems like a good start.

Mostly because I could get more capacity out of them, I’m still curious to explore the (non-U.2) SAS SSD route, so, foolhardy again, I made a few more exploratory purchases: a Supermicro AOC-S3008L-L8E SAS 8-port 12Gb/s PCIe HBA and two Samsung 1.92TB SAS-3 SSDs. Those aren’t here yet; I’ll report back with numbers for them and then do some real-ish-world testing with footage.

I’m still curious about (and scared of) exploring SLOG/metadata drives. Do I understand correctly that those drives/pools ought to be way faster than whatever data pool they’re used with?

Skip SLOG and metadata unless you have a proven need.

You need to test with much larger files, or the read results may just be coming from ARC (memory).
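A minimal benchmark sketch in Python (not one of the tools used in this thread) that writes and then reads back one large file of random data. The important parameter is the size: to keep ARC from serving the reads, the file should be bigger than the server’s RAM (256GB here), whereas a 16GB test file can sit entirely in ARC. The client may also cache reads, which this sketch does not defeat, and every run writes the full size to the pool’s SSDs.

```python
import os
import time

CHUNK = 8 * 1024 * 1024  # 8 MiB per write/read call

def write_then_read(path: str, size_bytes: int) -> tuple:
    """Write `size_bytes` of data to `path`, then read it back.

    Returns (write_mb_s, read_mb_s) using MB = 10^6 bytes.
    Random data keeps dataset compression (e.g. LZ4) from inflating results.
    """
    block = os.urandom(CHUNK)
    start = time.perf_counter()
    with open(path, "wb") as f:
        written = 0
        while written < size_bytes:
            n = min(CHUNK, size_bytes - written)
            f.write(block[:n])
            written += n
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push buffered data out before timing stops
    write_s = time.perf_counter() - start

    start = time.perf_counter()
    read = 0
    with open(path, "rb", buffering=0) as f:  # unbuffered on the Python side
        while True:
            chunk = f.read(CHUNK)
            if not chunk:
                break
            read += len(chunk)
    read_s = time.perf_counter() - start
    if read != size_bytes:
        raise IOError(f"read back {read} bytes, expected {size_bytes}")

    mb = size_bytes / 1e6
    return mb / write_s, mb / read_s
```

For example, `write_then_read("/Volumes/footage/bench.bin", 300 * 10**9)` would write about 300GB to a share mounted at a hypothetical `/Volumes/footage`; delete the file afterwards.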

I’ll point you to the ZFS Primer to read up on the special devices like SLOG, L2ARC, etc. You can also browse the documentation for the version of TrueNAS you are running.

Yes, the whole point of these devices is to be faster than the underlying pool. And it’s not easy for a single drive to outperform a whole NVMe array…

SLOG is for sync writes and only for sync writes, which you should NOT have. For performance, you should be all asynchronous.

As for L2ARC, with 256 GB RAM you’re not likely to ever use it.

“U_2_SAS” is a weird misnomer. But it’s just a name.

These write speeds are disappointing, and there should be room for improvement with network settings and/or share parameters (record size, sync writes off…).

How are you mounting the share that you’re benchmarking?

You can try (temporarily) setting Sync to Disabled in the dataset settings, then rerunning the benchmark. If that makes a difference, then a SLOG may be worthwhile.
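The TrueNAS dataset settings are the usual place to flip this. For anyone who prefers a shell, here is a hypothetical Python helper around the standard `zfs get`, `zfs set`, and `zfs inherit` commands: it records the current `sync` value and where it comes from, disables it for a test, and builds the command that puts it back. The dataset name is made up. Don’t leave `sync=disabled` in place without thinking it through: after a crash or power loss, writes the client was told were safe can be lost.

```python
import subprocess
from typing import List

def get_sync_cmd(dataset: str) -> List[str]:
    # -H: no header, tab-separated; -o value,source: only those two columns
    return ["zfs", "get", "-H", "-o", "value,source", "sync", dataset]

def disable_sync_cmd(dataset: str) -> List[str]:
    return ["zfs", "set", "sync=disabled", dataset]

def restore_sync_cmd(dataset: str, get_output: str) -> List[str]:
    """Build the undo command from what `zfs get` reported before the test."""
    value, source = get_output.strip().split("\t", 1)
    if source == "local":
        return ["zfs", "set", f"sync={value}", dataset]
    # default / inherited: drop the local override rather than pinning a value
    return ["zfs", "inherit", "sync", dataset]

def run(cmd: List[str]) -> str:
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout

# Usage sketch (run as root on the TrueNAS box; "tank/footage" is hypothetical):
#   before = run(get_sync_cmd("tank/footage"))
#   run(disable_sync_cmd("tank/footage"))
#   ... rerun the benchmark from the Mac ...
#   run(restore_sync_cmd("tank/footage", before))
```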

I was hoping you might say that, and I’m delighted that several of you did. I started building this machine with the notion that an extravagant (to me, a noob) pile of ECC RAM would give me something faster than drives (though damn, this NVMe is quick). I didn’t understand all the complexities of ZFS, but I knew it could leverage memory.

I do have more benchmarks (including larger files) to share, but I wanted to say that I’m chiefly interested in the overall effect of the entire system, ARC included, measured literally across the network.

By “mounting”, I think you mean how the OS running the test connects to the share. For that, I’m just using the macOS Finder’s built-in SMB client to connect to the share over the network. Physically, my MacBook Air M2 connects over Thunderbolt 3 to an ATTO ThunderLink SFP+ dock, then fiber to a cheap switch, then DAC to the Intel NIC in my TrueNAS box. I haven’t gotten to link aggregation yet to try for a 10 + 10 GbE setup. I suspect other improvements are possible here.
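One caveat before chasing 10 + 10 GbE: link aggregation (LACP and similar) typically picks a physical link per flow by hashing packet header fields, so a single TCP connection, such as one SMB session from the Mac, stays on one link and tops out at 10GbE. Below is a toy sketch of that idea, using CRC32 over a layer-3/4 tuple as a stand-in for whatever hash the switch or NIC driver actually uses; the IPs and ports are hypothetical. Multiple clients, or features like SMB multichannel that open several connections, are where aggregation tends to help.

```python
import zlib

def pick_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
              n_links: int = 2) -> int:
    """Toy layer-3/4 hash: the same flow tuple always maps to the same link."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % n_links

# One SMB session = one TCP flow (hypothetical addresses; SMB uses TCP port 445).
smb_flow = ("10.0.0.20", "10.0.0.10", 51234, 445)
print("links used by one flow:", {pick_link(*smb_flow) for _ in range(1000)})

# Many flows (several clients or connections) can spread across both links.
many = {pick_link("10.0.0.20", "10.0.0.10", port, 445) for port in range(50000, 50064)}
print("links used by 64 flows:", many)
```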

I was excited about the prospect and tried it: setting Sync to Disabled consistently improved the write speed.

What is that, like a 50% improvement?

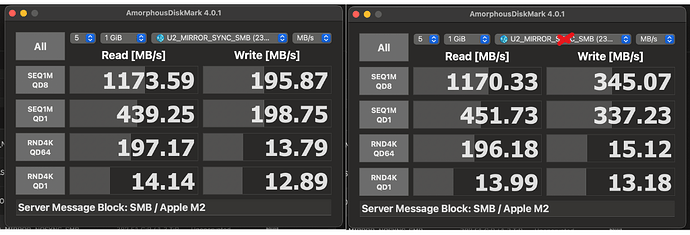

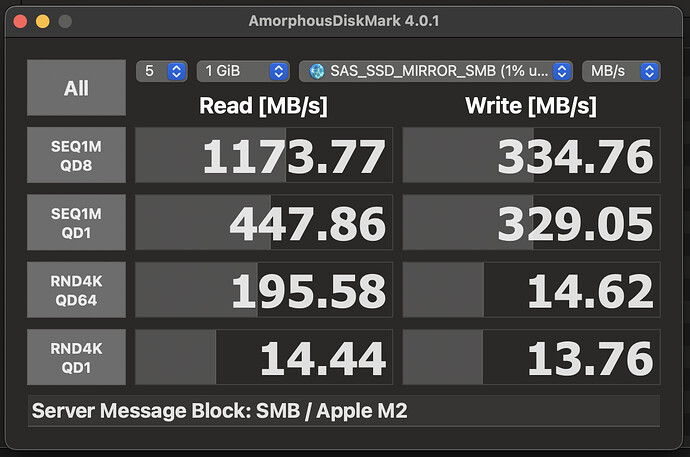

My SAS SSDs arrived, and then, later, the hardware for connecting them. After a mild panic, learning about the power disable pin (thanks, Art of Server!), and some fun with painter’s tape, the test drives showed up in preboot and in TrueNAS and seem to be working.

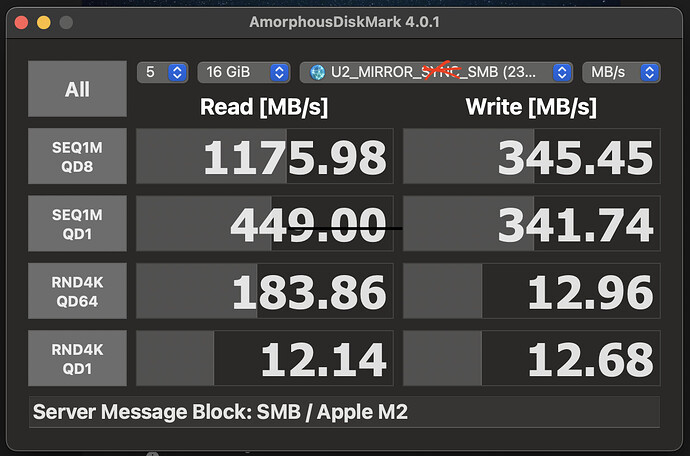

I’m assuming it’s maybe because the same Samsung NAND is in there, but the numbers really weren’t that different. The temperatures sure were, though, and I need to get some fans blowing across the SAS SSDs; they run very hot.
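One possible explanation for the similar numbers, besides the shared NAND: both pools may be hitting the network ceiling rather than the drives. A back-of-envelope sketch comparing interface line rates (after line encoding, before SMB/TCP overhead). These are link ceilings, not drive specs, and it assumes one SAS-3 lane per drive, as with a single-path connection.

```python
# Interface ceilings in GB/s (10^9 bytes/s), line encoding only; real drives,
# SMB and TCP overhead all land below these numbers.
CEILINGS_GB_S = {
    "10GbE link": 10 / 8,                                # 1.25
    "PCIe 3.0 x4 (U.2 NVMe)": 4 * 8 * (128 / 130) / 8,   # ≈ 3.94, 128b/130b
    "SAS-3 12G lane (SAS SSD)": 12 * (8 / 10) / 8,       # 1.2, 8b/10b
}

def bottleneck(path):
    """Return (component, GB/s) for the slowest hop along `path`."""
    return min(((c, CEILINGS_GB_S[c]) for c in path), key=lambda t: t[1])

for drive in ("PCIe 3.0 x4 (U.2 NVMe)", "SAS-3 12G lane (SAS SSD)"):
    name, gbs = bottleneck(["10GbE link", drive])
    print(f"{drive}: limited by {name} at ~{gbs:.2f} GB/s")
# PCIe 3.0 x4 (U.2 NVMe): limited by 10GbE link at ~1.25 GB/s
# SAS-3 12G lane (SAS SSD): limited by SAS-3 12G lane (SAS SSD) at ~1.20 GB/s
```

Both paths land at roughly 1.2 GB/s on paper. A 2-wide mirror can also spread reads over both drives, which raises the drive-side ceiling for reads and leaves the 10GbE link as the limit.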

I also bumped the test size up to 16GB files, and the numbers stayed about the same.

Very similar. I’m reluctant to go much higher and keep beating on the drives (and the heat coming off those SAS SSDs! Ouch!).

Next steps for me include actually editing, i.e. testing the real use case off these pools. I did get my LinkReal 8-port PCIe 3.0 x16 NVMe card and am keen to plug some NVMe drives into it, but the cables I ordered haven’t materialized yet. Might be time to order the real, big-boy drives. How do we feel about Micron 9300 NVMe drives? There seem to be a lot of them around, and the prices aren’t too brutal. I get the impression that now may not be the best time to be shopping for server SSDs.

The good news, I guess, is that video editing is a predominantly read-heavy workload.

Strange that you seem to have a limit of 3gbps writes.
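Assuming the 3 Gbps figure means gigabits per second (not gigabytes), here is the conversion to the MB/s that disk benchmarks usually report, and how it compares to the 10GbE line rate:

```python
def gbit_to_mb_per_s(gbit_per_s: float) -> float:
    """Gigabits/s to megabytes/s (decimal units): 1 Gb/s = 125 MB/s."""
    return gbit_per_s * 1000 / 8

observed_gbit = 3.0   # the write ceiling mentioned above, assumed to be gigabits/s
link_gbit = 10.0      # 10GbE line rate
print(f"{observed_gbit:g} Gb/s ≈ {gbit_to_mb_per_s(observed_gbit):.0f} MB/s, "
      f"{observed_gbit / link_gbit:.0%} of a 10GbE link")
# 3 Gb/s ≈ 375 MB/s, 30% of a 10GbE link
```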