Hello, I’m back.

I changed data VDEV to:

1 x RAIDZ1 | 4 wide | 1.82 TiB (Usable Capacity: 5.15 TiB)

(Not as a fix; I just wanted more space.)

I finally replaced the network card on the Windows 11 side, an “Asus XG-C100C V2”, with an “Intel X550-T2”.

I configured the “Intel X550-T2” as follows:

1. Interrupt moderation: Disabled ("low" is OK as well)

2. Jumbo packet: 9014

3. Receive buffers: 2048

4. Transmit buffers: 2048

Connection scheme is the same (direct wired connection):

Windows 11: Intel X550-T2 → RJ45 → Ubiquiti RJ45 to SFP+ → Proxmox: Intel X520-DA2

As before, TrueNAS directly uses a dedicated port on the Intel X520-DA2 (PCI passthrough).

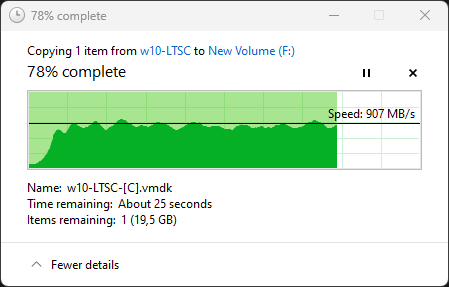

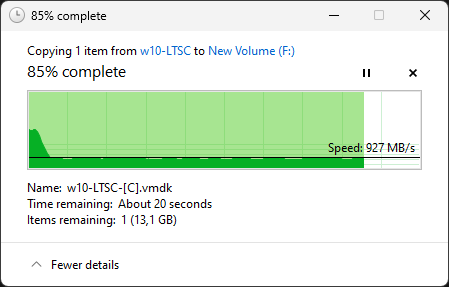

Here are the results:

SMB speed is as fast as before, unchanged at 1.12 GB/s.

iSCSI:

iSCSI Zvol:

Type: VOLUME

Sync: DISABLED

Compression Level: OFF

ZFS Deduplication: OFF

Volblocksize: 64K

iSCSI Zvol:

Type: VOLUME

Sync: DISABLED

Compression Level: OFF

ZFS Deduplication: OFF

Volblocksize: 128K

------------------------------------------------------------------------------

CrystalDiskMark 8.0.4 x64 (C) 2007-2021 hiyohiyo

------------------------------------------------------------------------------

* MB/s = 1,000,000 bytes/s [SATA/600 = 600,000,000 bytes/s]

* KB = 1000 bytes, KiB = 1024 bytes

[Read]

SEQ 1MiB (Q= 8, T= 1): 1217.902 MB/s [ 1161.5 IOPS] < 6878.02 us>

SEQ 1MiB (Q= 1, T= 1): 878.151 MB/s [ 837.5 IOPS] < 1193.70 us>

RND 4KiB (Q= 32, T= 1): 369.527 MB/s [ 90216.6 IOPS] < 353.52 us>

RND 4KiB (Q= 1, T= 1): 41.126 MB/s [ 10040.5 IOPS] < 99.49 us>

[Write]

SEQ 1MiB (Q= 8, T= 1): 945.361 MB/s [ 901.6 IOPS] < 8853.96 us>

SEQ 1MiB (Q= 1, T= 1): 972.868 MB/s [ 927.8 IOPS] < 1077.29 us>

RND 4KiB (Q= 32, T= 1): 119.451 MB/s [ 29162.8 IOPS] < 1096.45 us>

RND 4KiB (Q= 1, T= 1): 35.311 MB/s [ 8620.8 IOPS] < 115.88 us>

Profile: Default

Test: 2 GiB (x1) [F: 0% (0/200GiB)]

Mode: [Admin]

Time: Measure 5 sec / Interval 5 sec

OS: Windows 11 Professional [10.0 Build 22631] (x64)
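A quick way to sanity-check the figures above: CrystalDiskMark reports decimal MB/s, while the block sizes are binary (1 MiB, 4 KiB), so throughput should equal IOPS times block size. The sketch below recomputes the read results from the IOPS column; the function name is just illustrative.

```python
# Cross-check CrystalDiskMark figures: throughput = IOPS * block size.
MIB = 1024 * 1024   # block sizes are binary (1 MiB, 4 KiB)
KIB = 1024
MB = 1_000_000      # CrystalDiskMark reports decimal MB/s


def throughput_mb_s(iops: float, block_bytes: int) -> float:
    """Convert an IOPS figure to decimal MB/s for a given block size."""
    return iops * block_bytes / MB


# (label, IOPS, block size in bytes, reported MB/s) from the read results above
read_results = [
    ("SEQ 1MiB Q8T1", 1161.5, MIB, 1217.902),
    ("SEQ 1MiB Q1T1", 837.5, MIB, 878.151),
    ("RND 4KiB Q32T1", 90216.6, 4 * KIB, 369.527),
    ("RND 4KiB Q1T1", 10040.5, 4 * KIB, 41.126),
]

for label, iops, block, reported in read_results:
    computed = throughput_mb_s(iops, block)
    print(f"{label}: computed {computed:.1f} MB/s, reported {reported:.1f} MB/s")
```

The computed and reported values agree to within rounding of the IOPS column, which confirms the two columns describe the same measurement.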

This is much faster than before, although I think the hardware can reach 1.2-1.4 GB/s with 4x SATA SSDs in RAIDZ1 (based on an SMB multipath test over 2 x 10 Gbps links).

I will also test iSCSI speed on a Synology DS923+ with the same SATA SSDs.
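For context, here is a rough estimate of the most TCP payload a single 10 Gbps link can carry. It is a sketch under stated assumptions: IPv4 and TCP headers with no options, standard Ethernet framing overhead, and the Windows "Jumbo packet: 9014" setting taken to mean an MTU of 9000 (the extra 14 bytes being the Ethernet header). iSCSI and SMB protocol headers are ignored, so real numbers will be slightly lower.

```python
LINK_BPS = 10_000_000_000  # one 10 Gbps link

# Per-frame overhead on the wire, in bytes:
# preamble + SFD (8) + Ethernet header (14) + FCS (4) + inter-frame gap (12)
ETH_OVERHEAD = 8 + 14 + 4 + 12
IP_TCP_HEADERS = 20 + 20  # IPv4 + TCP, assuming no header options


def max_payload_mb_s(mtu: int, link_bps: int = LINK_BPS) -> float:
    """Upper bound on TCP payload throughput in decimal MB/s for a given MTU."""
    payload = mtu - IP_TCP_HEADERS   # useful bytes per frame
    on_wire = mtu + ETH_OVERHEAD     # bytes the frame occupies on the link
    return link_bps / 8 * payload / on_wire / 1_000_000


for mtu in (1500, 9000):
    print(f"MTU {mtu}: about {max_payload_mb_s(mtu):.0f} MB/s")
```

Under these assumptions the ceiling is about 1187 MB/s at MTU 1500 and about 1239 MB/s at MTU 9000, so the 1217.9 MB/s sequential read is already close to what one link can deliver. Going meaningfully beyond that would need more than one 10 Gbps path.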

So, I think the solution in my case is:

- Use server-grade NICs on both sides.

- Set the iSCSI Zvol Volblocksize to 64K.

- Set the iSCSI Extent Logical Block Size to 4K.
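One possible reason the volblocksize matters for small random I/O: a write smaller than the volblocksize may force ZFS to rewrite the whole block. The sketch below computes that worst-case ratio for the two volblocksizes tested here. It is a simplification that ignores caching, write aggregation, and RAIDZ parity, and the function name is only illustrative.

```python
KIB = 1024


def worst_case_amplification(volblocksize: int, io_size: int) -> float:
    """Bytes rewritten per byte requested when a small write touches one whole block."""
    # Writes at least as large as the block are assumed aligned, so no amplification.
    return max(volblocksize, io_size) / io_size


for vbs in (64 * KIB, 128 * KIB):
    factor = worst_case_amplification(vbs, 4 * KIB)
    print(f"volblocksize {vbs // KIB}K, 4 KiB write: up to {factor:.0f}x")
```

With 4 KiB writes this gives up to 16x for 64K and up to 32x for 128K, which is at least consistent with preferring the smaller value for mixed workloads.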

General advice:

- Configure NICs for low latency (disable Interrupt moderation or set it to low).

- Don’t forget to set a matching Jumbo packet size on both ends.

- Increase Receive and Transmit buffers (2048 should be OK).

- Use a direct wired connection, or keep both hosts on the same subnet, so traffic is not routed through the router. Otherwise, consider a very powerful router (I bet a UXG-Enterprise or EFG would do the trick).

- Sync=Disabled increases write speed, but if the system crashes or loses power, up to about the last 5 seconds of writes can be lost.

- Make sure that write caching is enabled on your disks (see this topic for details).