This resource was originally created by user: ngandrass on the TrueNAS Community Forums Archive. Please DM this account or comment in this thread to claim it.

Disk spindown has always been an issue for various TrueNAS / FreeNAS users. This script utilizes iostat to detect I/O operations (reads, writes) on each disk. If a disk was neither read nor written for a given period of time, it is considered idle and is spun down.
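The per-disk idle detection described above can be illustrated with a short Python sketch. It is not the script's implementation, which is a shell script built around iostat. It assumes a hypothetical input format in which each poll yields, for every disk, the number of reads plus writes seen during that interval. `update_idle_timers` is a hypothetical name.

```python
from typing import Dict, List, Set


def update_idle_timers(
    idle_seconds: Dict[str, int],
    io_ops: Dict[str, int],
    poll_interval: int,
    timeout: int,
    spun_down: Set[str],
) -> List[str]:
    """Advance every disk's idle timer by one poll interval (hypothetical helper).

    io_ops maps each monitored disk to the reads + writes seen in the last
    interval. Returns the disks that have just crossed the idle timeout.
    """
    to_spin_down = []
    for disk, ops in io_ops.items():
        if ops > 0:
            # Any read or write resets only this disk's timer.
            idle_seconds[disk] = 0
            spun_down.discard(disk)
            continue
        idle_seconds[disk] = idle_seconds.get(disk, 0) + poll_interval
        # Spin down once per idle period, not again on every later poll.
        if idle_seconds[disk] >= timeout and disk not in spun_down:
            spun_down.add(disk)
            to_spin_down.append(disk)
    return to_spin_down


if __name__ == "__main__":
    idle: Dict[str, int] = {}
    down: Set[str] = set()
    samples = [
        {"sdb": 0, "sdc": 3},  # sdc is busy, sdb is idle
        {"sdb": 0, "sdc": 0},
        {"sdb": 0, "sdc": 0},
    ]
    for sample in samples:
        print(update_idle_timers(idle, sample, poll_interval=5, timeout=10, spun_down=down))
    # prints: [] then ['sdb'] then ['sdc']
```

With a 10-second timeout and a 5-second poll interval, `sdb` is reported after two idle samples, while `sdc` starts its own timer only after its I/O stops, so each disk is spun down independently.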

Periodic reads of S.M.A.R.T. data performed by the smartctl service are excluded. This allows users to keep S.M.A.R.T. reporting enabled while still having disks spun down automatically. Moreover, the script is immune to the periodic disk temperature reads performed by newer versions of TrueNAS.

Key Features

- Periodic S.M.A.R.T. reads do not reset the disk idle timers

- Configurable idle timeout and poll interval

- Support for ATA and SCSI devices

- Works with both TrueNAS Core and TrueNAS SCALE

- Per-disk idle timer / Independent spindown

- Automatic detection or explicit listing of drives to monitor

- Ignoring specific drives (e.g. an SSD holding the system dataset)

- Executable via Tasks as Post-Init Script, configurable via TrueNAS GUI

- Allows script placement on encrypted pool

- Optional shutdown after configurable idle time
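Several of these features (explicit vs. automatic drive lists, ignored drives, optional shutdown) come down to simple selection logic. The following Python sketch is illustrative only: `select_drives` and `should_shutdown` are hypothetical helpers, and it assumes the optional shutdown fires once every monitored drive has been idle for the shutdown timeout, which may differ from the script's exact rule.

```python
from typing import Dict, Iterable, List, Optional


def select_drives(
    detected: Iterable[str],
    explicit: Optional[Iterable[str]] = None,
    ignored: Iterable[str] = (),
) -> List[str]:
    """Pick drives to monitor: an explicit list if given, else auto-detected ones."""
    candidates = list(explicit) if explicit is not None else list(detected)
    skip = set(ignored)
    # Ignored drives (e.g. an SSD holding the system dataset) are never monitored.
    return [d for d in candidates if d not in skip]


def should_shutdown(
    idle_seconds: Dict[str, int],
    drives: Iterable[str],
    shutdown_timeout: Optional[int],
) -> bool:
    """True if shutdown is enabled and every monitored drive has been idle long enough."""
    if shutdown_timeout is None:
        return False  # optional feature disabled
    drive_list = list(drives)
    return bool(drive_list) and all(
        idle_seconds.get(d, 0) >= shutdown_timeout for d in drive_list
    )


if __name__ == "__main__":
    drives = select_drives(["sda", "sdb", "sdc"], ignored=["sda"])
    print(drives)  # ['sdb', 'sdc']
    print(should_shutdown({"sdb": 3600, "sdc": 1200}, drives, shutdown_timeout=1800))  # False
```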

For more information, installation and usage instructions, and the most recent release, check out the repository on GitHub: GitHub - ngandrass/truenas-spindown-timer: Monitors drive I/O and forces HDD spindown after a given idle period. Resistant to S.M.A.R.T. reads.


Dear TrueNAS Community, dear @iX_Resources or ngandrass,

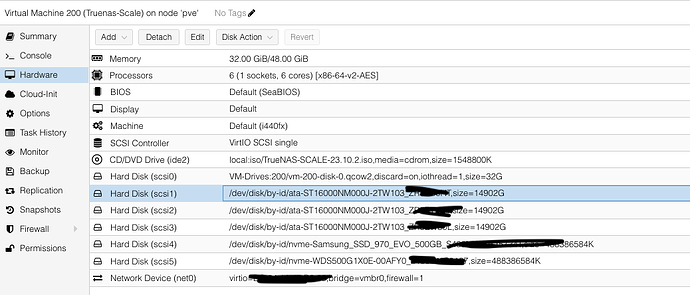

I’m running TrueNAS-SCALE-23.10.2 as a VM on Proxmox 8.1.4, where NVMe drives and HDDs are attached to the TrueNAS SCALE installation as SCSI hard disks by their respective device IDs.

Following the instructions, I get a lot of "SG_IO: bad/missing sense data" errors.

S.M.A.R.T. values are not available at the TrueNAS SCALE level.

```
root@truenas-scale-vm:/home/admin/spindown_timer# ./spindown_timer.sh -d -c -t 10 -p 5 -u zpool -i boot-pool
[2024-04-16 13:43:32] Performing a dry run...
[2024-04-16 13:43:32] Monitoring drives with a timeout of 10 seconds: sdb sdc sdd sde sdf
[2024-04-16 13:43:32] I/O check sample period: 5 sec
SG_IO: bad/missing sense data, sb[]: 70 00 05 00 00 00 00 0a 00 00 00 00 20 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
SG_IO: bad/missing sense data, sb[]: 70 00 05 00 00 00 00 0a 00 00 00 00 20 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
SG_IO: bad/missing sense data, sb[]: 70 00 05 00 00 00 00 0a 00 00 00 00 20 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
SG_IO: bad/missing sense data, sb[]: 70 00 05 00 00 00 00 0a 00 00 00 00 20 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
SG_IO: bad/missing sense data, sb[]: 70 00 05 00 00 00 00 0a 00 00 00 00 20 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
[2024-04-16 13:43:32] Drive power states: [sdb] => 0 [sdc] => 0 [sdd] => 0 [sde] => 0 [sdf] => 0
```

I followed the instructions for setting power states on the storage devices.

Does the script not work with SCSI devices passed to TrueNAS SCALE running as a VM on a hypervisor?

With regards

Unfortunately, passing drives from Proxmox in this way does not give TrueNAS direct access to them. The problems you are having are expected in that configuration; the recommended approach is PCI Express passthrough of a supported HBA such as this one:

LSI SAS 9211-8i PCI Express to 6Gb/s Serial Attached SCSI (SAS) Host Bus Adapter User Guide (broadcom.com)

Please see:

Resource - “Absolutely must virtualize TrueNAS!” … a guide to not completely losing your data. | TrueNAS Community
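The sense buffer (sb[]) in the error output from the question can be decoded to see what the virtual disk reported back. A minimal Python sketch, assuming the bytes are SCSI fixed-format sense data (response code 0x70) as defined in SPC; `decode_fixed_sense` is a hypothetical helper. For that buffer, sense key 0x5 (ILLEGAL REQUEST) with ASC/ASCQ 0x20/0x00 (INVALID COMMAND OPERATION CODE) suggests the emulated SCSI disk rejected the command it was sent, which fits the explanation that TrueNAS has no direct access to the drives. The sketch only decodes the bytes; it does not show which command was issued.

```python
from typing import Dict, Union

# Subset of SCSI sense keys (SPC); other values are shown as hex.
SENSE_KEYS = {
    0x0: "NO SENSE",
    0x1: "RECOVERED ERROR",
    0x2: "NOT READY",
    0x3: "MEDIUM ERROR",
    0x4: "HARDWARE ERROR",
    0x5: "ILLEGAL REQUEST",
    0x6: "UNIT ATTENTION",
    0x7: "DATA PROTECT",
    0xB: "ABORTED COMMAND",
}


def decode_fixed_sense(hex_bytes: str) -> Dict[str, Union[int, str]]:
    """Decode key fields of SCSI fixed-format sense data (hypothetical helper)."""
    sb = bytes.fromhex(hex_bytes)  # whitespace between byte pairs is ignored
    if len(sb) < 14:
        raise ValueError("fixed-format sense data needs at least 14 bytes")
    response_code = sb[0] & 0x7F
    if response_code not in (0x70, 0x71):
        raise ValueError(f"not fixed-format sense data: 0x{response_code:02x}")
    key = sb[2] & 0x0F  # sense key is the low nibble of byte 2
    return {
        "response_code": response_code,
        "sense_key": SENSE_KEYS.get(key, f"0x{key:x}"),
        "asc": sb[12],   # additional sense code
        "ascq": sb[13],  # additional sense code qualifier
    }


if __name__ == "__main__":
    sb = ("70 00 05 00 00 00 00 0a 00 00 00 00 20 00 00 00 "
          "00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00")
    info = decode_fixed_sense(sb)
    # ASC/ASCQ 0x20/0x00 is INVALID COMMAND OPERATION CODE in SPC.
    print(info["sense_key"], hex(info["asc"]), hex(info["ascq"]))
    # prints: ILLEGAL REQUEST 0x20 0x0
```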
