This resource was originally created by user: @NickF1227 on the TrueNAS Community Forums Archive.

There’s a burgeoning trend I’ve noticed over the past few years in this community, and it applies to both home users and business use cases: tuning for single-client performance is becoming more important to folks in general. Video production houses are number one on that list, with HomeLab nuts like myself coming in a close second.

Having multiple NVMe drives in a storage pool that you connect to over Ethernet comes with many performance considerations, variables, and nuances. This holds across the industry, transcending OS and sharing-protocol boundaries, though each combination has its own fiddly optimizations.

We can learn a thing or two from the successes and failures of LinusTechTips (amongst other YouTubers, shoutout to LevelOneTechs) over the past few years. This is kinda ironic, because the only breadcrumbs I can find about this topic are VLOG style videos about them solving their own IT problems.

Here’s a few:

Lots of good hardware and software information here.

This Server Deployment was HORRIBLE


Two years later, lessons learned and better hardware resulted in this video, which features TrueNAS SCALE:

We Finally Did it Properly - “Linux” Whonnock Upgrade


Enter my testing today;

I wanted to focus on the client side of this conversation and shift the narrative a bit to encompass the problem as a whole. When talking about NAS, we have a lot of variables in play in the external environment. Before attaching storage from an external system, we should be confident in the performance of the layers in between.

Besides, there is plenty of information to be had about server side network tuning already:

Resource - High Speed Networking Tuning to maximize your 10G, 25G, 40G networks

Both FreeBSD and Linux come by default highly optimized for classic 1Gbps ethernet. This is by far the most commonly deployed networking for both clients and servers, and a lot of research has been done to tune performance especially for local…

Some official iXsystems information can be found here:

Interconnect Maximum Effective Data Rates

Tables of maximum effective data rates, in a single data flow direction, for various data interconnect protocols.

CORE Hardware Guide

Describes the hardware specifications and system component recommendations for custom TrueNAS CORE deployment.

That being said, I set up the following test platforms:

System 1:

AMD Ryzen 7900X3D

64 GB DDR5 6000

Mellanox ConnectX-4 100 Gb (Windows Reports PCI-E Gen3, 16x, in PCIEX16_2)

Win 11

System 2:

AMD Ryzen 3700X

32 GB DDR4 3200

Intel XL710BM2 40Gb (Windows Reports PCI-E Gen3, 4x)

Win 11

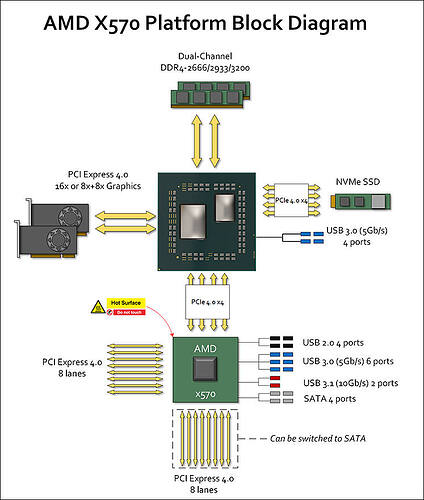

Let’s consider for a moment the hardware configuration. In particular, PCI-E bus topology MATTERS here.

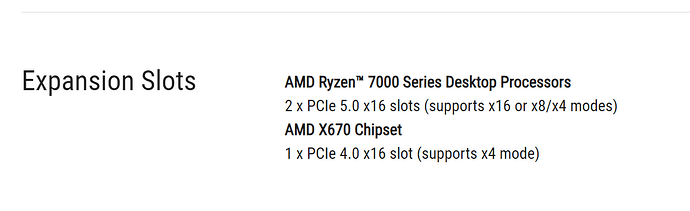

In the example of system 1, the motherboard has this information:

ROG STRIX X670E-E GAMING WIFI | Motherboards | ROG United States

ASUS ROG Strix X670E-E Gaming WiFi features 18+2 power stages, DDR5 support, four M.2 slots with heatsinks, PCIe® 5.0, USB 3.2 Gen 2x2 front-panel connector, and onboard WiFi 6E. It also features AI Cooling II, AI Networking, Two-Way AI Noise Cancelation. ROG Strix X670E-E Gaming WiFi caters to…

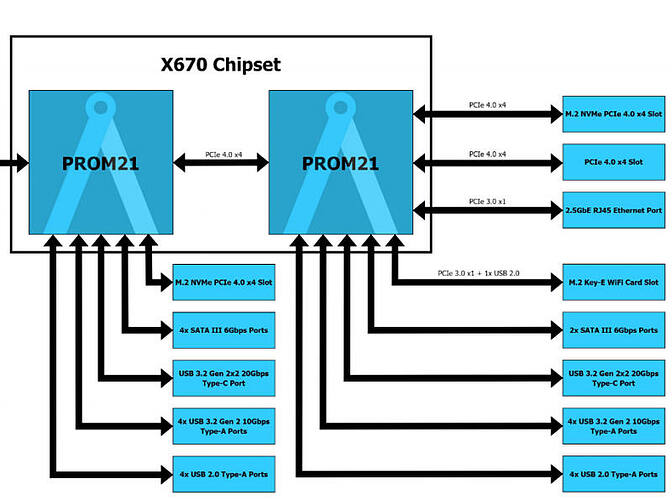

But it’s not quite that simple. Ryzen 7000 has a maximum of 24 usable PCI-E 5.0 lanes in total, which means the motherboard has to shuffle the deck quite a bit for all of this connectivity to work.

Asus does not share a good block diagram of this board. But based on the X670 block diagram we can infer that both x16 slots do go directly to the CPU. So it seems then, that I should theoretically have full PCI-E Gen3 X16 speed for the card in that system.

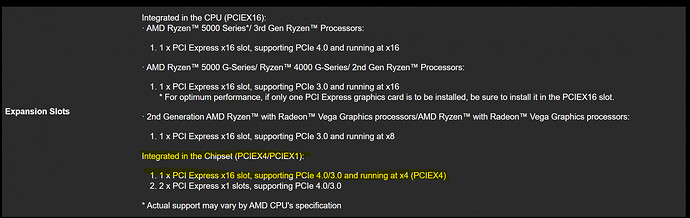

System 2 is even worse off. Although Windows reports PCI-E Gen3, the x4 link does go through the chipset. So while we have roughly 32 Gb/s of potential bandwidth here, it is shared with other devices, and we are going to see even less than that.
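To put rough numbers on the two slots, here is a minimal Python sketch of the per-direction link bandwidth. It assumes the standard per-lane transfer rates and line encodings for each PCIe generation and ignores packet (TLP) overhead, so real throughput will be somewhat lower. The function and table names are my own, purely for illustration.

```python
# Per-lane raw transfer rate (GT/s) and line-encoding efficiency per PCIe generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def pcie_bandwidth_gbps(generation: int, lanes: int) -> float:
    """One-direction link bandwidth in Gb/s after line encoding, before TLP overhead."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    return rate_gt_s * efficiency * lanes


if __name__ == "__main__":
    slots = [("System 1 (Gen3 x16)", 3, 16), ("System 2 (Gen3 x4)", 3, 4)]
    for label, generation, lanes in slots:
        print(f"{label}: {pcie_bandwidth_gbps(generation, lanes):.1f} Gb/s")
```

By this estimate the Gen3 x4 link tops out at about 31.5 Gb/s before any sharing with other chipset devices, so the 40Gb card in System 2 cannot reach line rate no matter how the network stack is tuned.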

X570 AORUS ELITE (rev. 1.0) Specification | Motherboard - GIGABYTE Global

Lasting Quality from GIGABYTE.GIGABYTE Ultra Durable™ motherboards bring together a unique blend of features and technologies that offer users the absolute …

Networking

Layer 1:

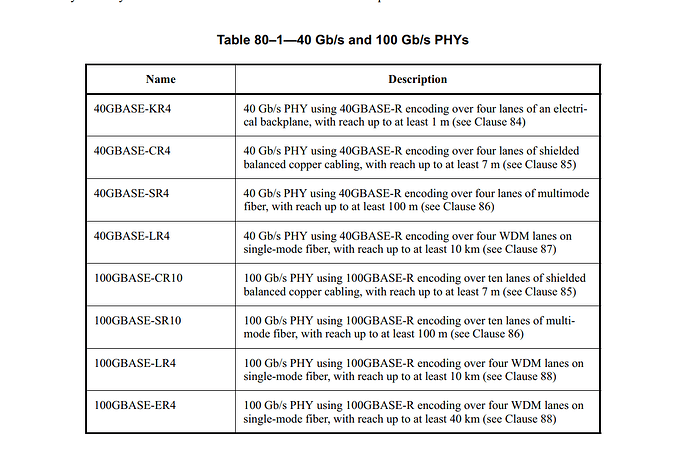

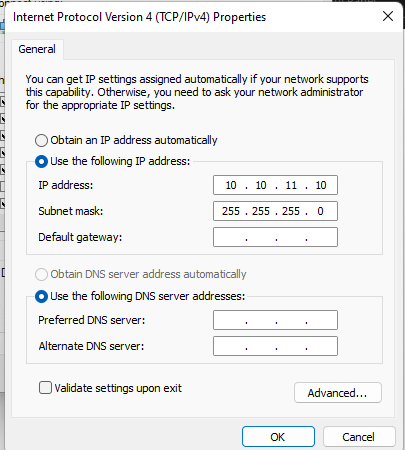

We have here a simple PtP connection between two Windows hosts. I re-ran my testing with a few different optics and cables to ensure consistency. Apart from some minor run-to-run variance, very little difference was observed between them:

- 40Gb SR4, 3M MPO OM4 patch cable https://www.fs.com/products/181287.html

- 40Gb SR-BD, 3M LC OM4 patch cable https://www.fs.com/products/48722.html

- 40Gb Active DAC Cable https://www.fs.com/products/30899.html

Under the hood, a 40 Gigabit connection is basically four 10 Gb/s lanes running in parallel in a single cable.

https://jira.slac.stanford.edu/secure/attachment/23373/8023ba-2010.pdf
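The lane arithmetic can be checked with a few lines of Python. This is a sketch that assumes the 40GBASE-R figures from 802.3ba (four lanes, 10.3125 GBd per lane, 64b/66b line coding); the constant and function names are mine.

```python
LANES = 4
LANE_SIGNALING_GBAUD = 10.3125   # per-lane signaling rate
ENCODING_EFFICIENCY = 64 / 66    # 64b/66b line code


def lane_data_rate_gbps() -> float:
    """Data rate carried by one lane after removing line-coding overhead."""
    return LANE_SIGNALING_GBAUD * ENCODING_EFFICIENCY


def link_data_rate_gbps() -> float:
    """Aggregate data rate across all lanes of the link."""
    return LANES * lane_data_rate_gbps()


if __name__ == "__main__":
    print(f"per lane: {lane_data_rate_gbps():.2f} Gb/s")
    print(f"link:     {link_data_rate_gbps():.2f} Gb/s")
```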

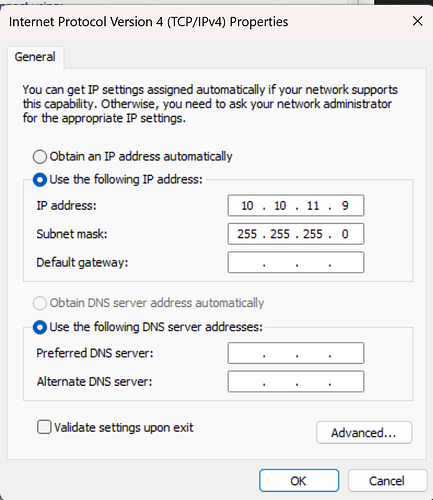

Layer 3:

Basic configuration:

Initial iPerf, single threaded:

Code:

    C:\Users\nickf.FUSCO\Downloads\iperf-3.1.3-win64\iperf-3.1.3-win64>iperf3 -c 10.10.11.9
    Connecting to host 10.10.11.9, port 5201
    [ 4] local 10.10.11.10 port 42726 connected to 10.10.11.9 port 5201
    [ ID] Interval Transfer Bandwidth
    [ 4] 0.00-1.00 sec 816 MBytes 6.85 Gbits/sec
    [ 4] 1.00-2.00 sec 886 MBytes 7.44 Gbits/sec
    [ 4] 2.00-3.00 sec 865 MBytes 7.25 Gbits/sec
    [ 4] 3.00-4.00 sec 867 MBytes 7.27 Gbits/sec
    [ 4] 4.00-5.00 sec 876 MBytes 7.35 Gbits/sec
    [ 4] 5.00-6.00 sec 900 MBytes 7.55 Gbits/sec
    [ 4] 6.00-7.00 sec 888 MBytes 7.45 Gbits/sec
    [ 4] 7.00-8.00 sec 916 MBytes 7.68 Gbits/sec
    [ 4] 8.00-9.00 sec 873 MBytes 7.32 Gbits/sec
    [ 4] 9.00-10.00 sec 882 MBytes 7.40 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval Transfer Bandwidth
    [ 4] 0.00-10.00 sec 8.56 GBytes 7.36 Gbits/sec sender
    [ 4] 0.00-10.00 sec 8.56 GBytes 7.36 Gbits/sec receiver

The single-threaded nature of iPerf testing results in what may sound like lackluster performance. Really, 40 and 100 Gigabit were designed to scale out to a large number of clients, whereas 10 and 25 Gigabit have simpler PHY designs that may be easier to tune for single-client performance.

But when running 4 threads of iPerf, I didn’t see much of an improvement:

Code:

    C:\Users\nickf.FUSCO\Downloads\iperf-3.1.3-win64\iperf-3.1.3-win64>iperf3 -c 10.10.11.10 -P 4
    Connecting to host 10.10.11.10, port 5201
    [ 4] local 10.10.11.9 port 52520 connected to 10.10.11.10 port 5201
    [ 6] local 10.10.11.9 port 52521 connected to 10.10.11.10 port 5201
    [ 8] local 10.10.11.9 port 52522 connected to 10.10.11.10 port 5201
    [ 10] local 10.10.11.9 port 52523 connected to 10.10.11.10 port 5201
    [ ID] Interval Transfer Bandwidth
    [ 4] 0.00-1.00 sec 318 MBytes 2.67 Gbits/sec
    [ 6] 0.00-1.00 sec 315 MBytes 2.65 Gbits/sec
    [ 8] 0.00-1.00 sec 313 MBytes 2.63 Gbits/sec
    [ 10] 0.00-1.00 sec 307 MBytes 2.58 Gbits/sec
    [SUM] 0.00-1.00 sec 1.22 GBytes 10.5 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 1.00-2.00 sec 354 MBytes 2.97 Gbits/sec
    [ 6] 1.00-2.00 sec 351 MBytes 2.95 Gbits/sec
    [ 8] 1.00-2.00 sec 348 MBytes 2.92 Gbits/sec
    [ 10] 1.00-2.00 sec 346 MBytes 2.90 Gbits/sec
    [SUM] 1.00-2.00 sec 1.37 GBytes 11.7 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 2.00-3.00 sec 334 MBytes 2.80 Gbits/sec
    [ 6] 2.00-3.00 sec 332 MBytes 2.78 Gbits/sec
    [ 8] 2.00-3.00 sec 329 MBytes 2.76 Gbits/sec
    [ 10] 2.00-3.00 sec 327 MBytes 2.75 Gbits/sec
    [SUM] 2.00-3.00 sec 1.29 GBytes 11.1 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 3.00-4.00 sec 339 MBytes 2.84 Gbits/sec
    [ 6] 3.00-4.00 sec 336 MBytes 2.82 Gbits/sec
    [ 8] 3.00-4.00 sec 334 MBytes 2.80 Gbits/sec
    [ 10] 3.00-4.00 sec 332 MBytes 2.78 Gbits/sec
    [SUM] 3.00-4.00 sec 1.31 GBytes 11.2 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 4.00-5.00 sec 360 MBytes 3.02 Gbits/sec
    [ 6] 4.00-5.00 sec 358 MBytes 3.00 Gbits/sec
    [ 8] 4.00-5.00 sec 355 MBytes 2.98 Gbits/sec
    [ 10] 4.00-5.00 sec 353 MBytes 2.96 Gbits/sec
    [SUM] 4.00-5.00 sec 1.39 GBytes 12.0 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 5.00-6.00 sec 316 MBytes 2.65 Gbits/sec
    [ 6] 5.00-6.00 sec 313 MBytes 2.63 Gbits/sec
    [ 8] 5.00-6.00 sec 311 MBytes 2.61 Gbits/sec
    [ 10] 5.00-6.00 sec 310 MBytes 2.60 Gbits/sec
    [SUM] 5.00-6.00 sec 1.22 GBytes 10.5 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 6.00-7.00 sec 311 MBytes 2.61 Gbits/sec
    [ 6] 6.00-7.00 sec 308 MBytes 2.59 Gbits/sec
    [ 8] 6.00-7.00 sec 306 MBytes 2.56 Gbits/sec
    [ 10] 6.00-7.00 sec 305 MBytes 2.56 Gbits/sec
    [SUM] 6.00-7.00 sec 1.20 GBytes 10.3 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 7.00-8.00 sec 326 MBytes 2.73 Gbits/sec
    [ 6] 7.00-8.00 sec 324 MBytes 2.72 Gbits/sec
    [ 8] 7.00-8.00 sec 322 MBytes 2.70 Gbits/sec
    [ 10] 7.00-8.00 sec 321 MBytes 2.69 Gbits/sec
    [SUM] 7.00-8.00 sec 1.26 GBytes 10.9 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 8.00-9.00 sec 324 MBytes 2.72 Gbits/sec
    [ 6] 8.00-9.00 sec 323 MBytes 2.71 Gbits/sec
    [ 8] 8.00-9.00 sec 320 MBytes 2.69 Gbits/sec
    [ 10] 8.00-9.00 sec 319 MBytes 2.67 Gbits/sec
    [SUM] 8.00-9.00 sec 1.26 GBytes 10.8 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 9.00-10.00 sec 328 MBytes 2.75 Gbits/sec
    [ 6] 9.00-10.00 sec 326 MBytes 2.73 Gbits/sec
    [ 8] 9.00-10.00 sec 324 MBytes 2.71 Gbits/sec
    [ 10] 9.00-10.00 sec 322 MBytes 2.70 Gbits/sec
    [SUM] 9.00-10.00 sec 1.27 GBytes 10.9 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval Transfer Bandwidth
    [ 4] 0.00-10.00 sec 3.23 GBytes 2.78 Gbits/sec sender
    [ 4] 0.00-10.00 sec 3.23 GBytes 2.78 Gbits/sec receiver
    [ 6] 0.00-10.00 sec 3.21 GBytes 2.76 Gbits/sec sender
    [ 6] 0.00-10.00 sec 3.21 GBytes 2.76 Gbits/sec receiver
    [ 8] 0.00-10.00 sec 3.19 GBytes 2.74 Gbits/sec sender
    [ 8] 0.00-10.00 sec 3.19 GBytes 2.74 Gbits/sec receiver
    [ 10] 0.00-10.00 sec 3.17 GBytes 2.72 Gbits/sec sender
    [ 10] 0.00-10.00 sec 3.17 GBytes 2.72 Gbits/sec receiver
    [SUM] 0.00-10.00 sec 12.8 GBytes 11.0 Gbits/sec sender
    [SUM] 0.00-10.00 sec 12.8 GBytes 11.0 Gbits/sec receiver
    iperf Done.
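When comparing many runs like these, it can help to pull the summary lines out of the text rather than eyeball them. The following Python sketch assumes the text layout printed by iperf 3.1.3 as pasted above, and the function names are my own. iperf3 also offers a JSON output mode (`-J`), which would be a sturdier thing to parse if you are scripting your own runs.

```python
import re

# Matches summary lines such as:
# [SUM] 0.00-10.00 sec 12.8 GBytes 11.0 Gbits/sec sender
SUMMARY_RE = re.compile(
    r"\[\s*(?P<id>SUM|\d+)\]\s+"
    r"(?P<start>\d+\.\d+)-(?P<end>\d+\.\d+)\s+sec\s+"
    r"(?P<transfer>[\d.]+)\s+(?P<tunit>[KMG])Bytes\s+"
    r"(?P<rate>[\d.]+)\s+(?P<runit>[KMG])bits/sec\s+"
    r"(?P<role>sender|receiver)"
)

UNIT_TO_GBITS = {"K": 1e-6, "M": 1e-3, "G": 1.0}


def parse_summaries(text):
    """Return (stream_id, role, gbits_per_sec) for every sender/receiver summary line."""
    results = []
    for match in SUMMARY_RE.finditer(text):
        gbps = float(match.group("rate")) * UNIT_TO_GBITS[match.group("runit")]
        results.append((match.group("id"), match.group("role"), gbps))
    return results


def link_utilization(gbps, link_gbps=40.0):
    """Fraction of the nominal link rate that a measured throughput represents."""
    return gbps / link_gbps


if __name__ == "__main__":
    sample = (
        "[ 10] 0.00-10.00 sec 3.17 GBytes 2.72 Gbits/sec receiver\n"
        "[SUM] 0.00-10.00 sec 12.8 GBytes 11.0 Gbits/sec sender\n"
        "[SUM] 0.00-10.00 sec 12.8 GBytes 11.0 Gbits/sec receiver\n"
    )
    for stream_id, role, gbps in parse_summaries(sample):
        print(f"{stream_id:>3} {role:<8} {gbps:5.2f} Gb/s ({link_utilization(gbps):.1%} of 40 Gb/s)")
```

For the four-stream run above, the 11.0 Gbits/sec total works out to a little over a quarter of the nominal 40 Gb/s link rate.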

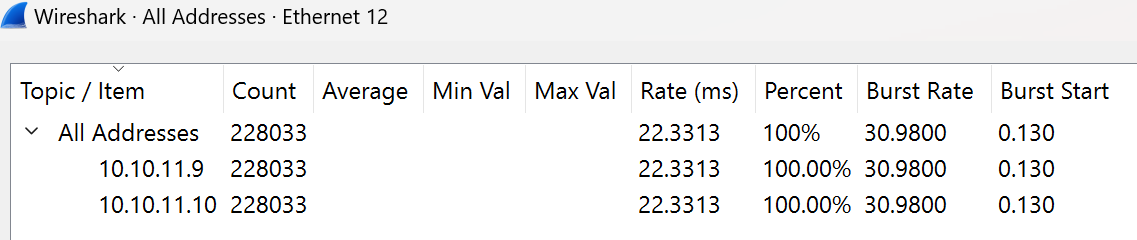

Taking a Peek at the Ether:

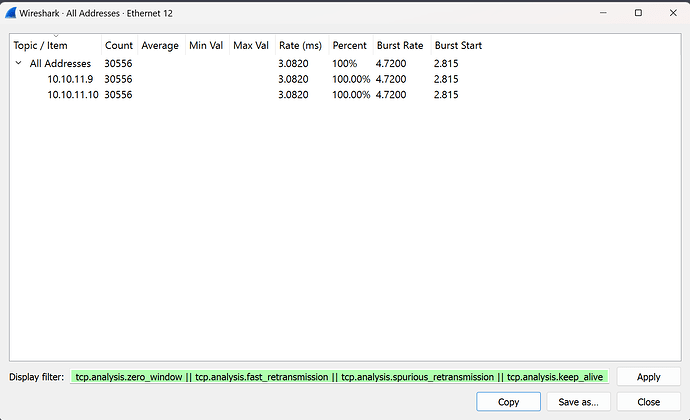

Using Wireshark to capture data on the interface during an iPerf run, we have about 220,000 packets.

Filtering for some indicators of poor TCP performance:

Code:

tcp.analysis.out_of_order || tcp.analysis.duplicate_ack || tcp.analysis.ack_lost_segment || tcp.analysis.retransmission || tcp.analysis.lost_segment || tcp.analysis.zero_window || tcp.analysis.fast_retransmission || tcp.analysis.spurious_retransmission || tcp.analysis.keep_alive

About 13% of all traffic is being “wasted”, so some additional tweaking may yield more performance. It may also be that the inherent platform bottleneck on “System 2” is having a cascading negative effect on the TCP stack.
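The display filter above is just an OR of individual `tcp.analysis.*` flags. If you want to see which flag dominates, it can be handy to generate both the combined filter and one filter per flag, then paste each into Wireshark in turn. A small Python sketch (the helper names are hypothetical, the flag names are the ones used in the filter above):

```python
TCP_TROUBLE_FLAGS = [
    "out_of_order",
    "duplicate_ack",
    "ack_lost_segment",
    "retransmission",
    "lost_segment",
    "zero_window",
    "fast_retransmission",
    "spurious_retransmission",
    "keep_alive",
]


def build_display_filter(flags):
    """Join the flags into a single Wireshark display filter that matches any of them."""
    return " || ".join(f"tcp.analysis.{flag}" for flag in flags)


def per_flag_filters(flags):
    """One display filter per flag, for counting each symptom separately."""
    return [f"tcp.analysis.{flag}" for flag in flags]


if __name__ == "__main__":
    print(build_display_filter(TCP_TROUBLE_FLAGS))
    for single in per_flag_filters(TCP_TROUBLE_FLAGS):
        print(single)
```

Splitting the count this way helps separate symptoms that look like real loss (retransmissions, lost segments) from ones that may be benign, such as keep-alives.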

Tuning:

I’ve found several resources here to help me move along this process:

Tuning Throughput Performance for Intel® Ethernet Adapters

Provides suggestions for improving performance of Intel® Ethernet adapters and troubleshooting performance issues.

Performance Tuning Network Adapters

Learn how to tune the performance network adapters for computers that are running Windows Server 2016 and later versions.

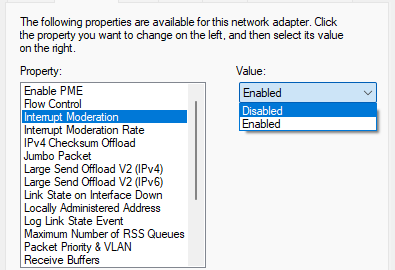

In my testing, in this environment, I found the best mix of performance between these two systems was with the following settings. The same settings were applied to both systems.

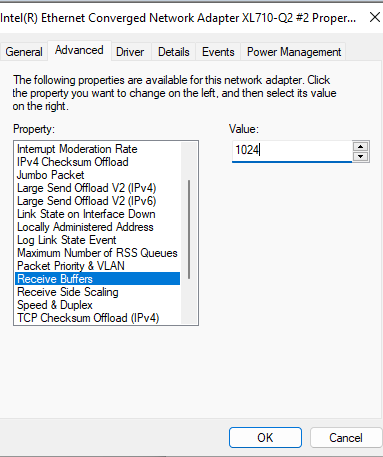

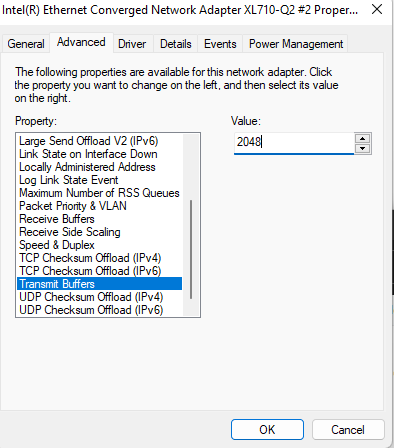

- Toying around with buffers, it seems that the best speeds I was able to achieve were with 2048 Send and 1024 Receive. Increasing the buffer sizes beyond these values decreased performance for me, but YMMV.

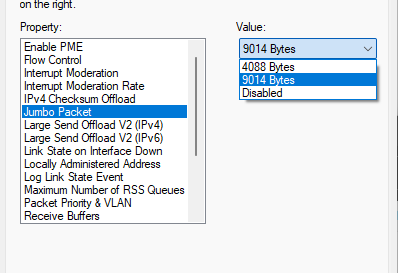

- Setting Jumbo Frames to 9014 did seem to help, but in doing so I statistically increased the number of issues in the TCP stack.

- Disabling Interrupt Moderation made the biggest performance difference.
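For a rough feel of why frame size and interrupt handling interact, the sketch below estimates how much of each frame is TCP payload and how many frames per second a given throughput implies. It assumes plain IPv4 and TCP headers without options, standard Ethernet framing overhead, and that the driver's 9014 setting corresponds to a 9000-byte MTU plus the 14-byte Ethernet header. All names here are my own.

```python
ETH_HEADER = 14       # destination MAC + source MAC + EtherType
ETH_FCS = 4           # frame check sequence
PREAMBLE_SFD = 8      # preamble + start-of-frame delimiter
INTERFRAME_GAP = 12   # minimum idle time between frames, in byte times
IPV4_HEADER = 20      # assumes no IP options
TCP_HEADER = 20       # assumes no TCP options


def wire_bytes(mtu: int) -> int:
    """Bytes of link time consumed by one full-size frame."""
    return mtu + ETH_HEADER + ETH_FCS + PREAMBLE_SFD + INTERFRAME_GAP


def tcp_payload(mtu: int) -> int:
    """TCP payload bytes carried by one full-size frame."""
    return mtu - IPV4_HEADER - TCP_HEADER


def payload_efficiency(mtu: int) -> float:
    """Share of link time spent on TCP payload."""
    return tcp_payload(mtu) / wire_bytes(mtu)


def frames_per_second(goodput_gbps: float, mtu: int) -> float:
    """Full-size frames per second needed to sustain the given TCP goodput."""
    return goodput_gbps * 1e9 / 8 / tcp_payload(mtu)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(
            f"MTU {mtu}: {payload_efficiency(mtu):.2%} payload, "
            f"{frames_per_second(11.8, mtu):,.0f} frames/s at 11.8 Gb/s"
        )
```

The efficiency gain from jumbo frames is only a few percent. The larger effect is the roughly sixfold drop in frames per second, which matters a lot once interrupt moderation is off and the CPU may be interrupted for far more of those frames.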

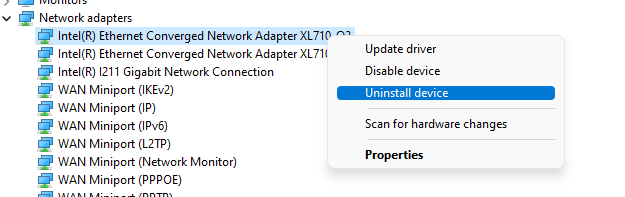

I found that in my testing, some settings did not properly revert to defaults when I expected them to. The only way I was able to reset the NICs to their defaults properly was to uninstall and reinstall them in Device Manager. With the tuned settings applied, single-threaded iPerf looks like this:

Code:

    C:\Users\nickf.FUSCO\Downloads\iperf-3.1.3-win64\iperf-3.1.3-win64>iperf3 -c 10.10.11.10
    Connecting to host 10.10.11.10, port 5201
    [ 4] local 10.10.11.9 port 34248 connected to 10.10.11.10 port 5201
    [ ID] Interval Transfer Bandwidth
    [ 4] 0.00-1.00 sec 1.54 GBytes 13.2 Gbits/sec
    [ 4] 1.00-2.00 sec 1.49 GBytes 12.8 Gbits/sec
    [ 4] 2.00-3.00 sec 1.30 GBytes 11.2 Gbits/sec
    [ 4] 3.00-4.00 sec 1.27 GBytes 10.9 Gbits/sec
    [ 4] 4.00-5.00 sec 1.33 GBytes 11.5 Gbits/sec
    [ 4] 5.00-6.00 sec 1.30 GBytes 11.2 Gbits/sec
    [ 4] 6.00-7.00 sec 1.27 GBytes 10.9 Gbits/sec
    [ 4] 7.00-8.00 sec 1.26 GBytes 10.8 Gbits/sec
    [ 4] 8.00-9.00 sec 1.57 GBytes 13.5 Gbits/sec
    [ 4] 9.00-10.00 sec 1.36 GBytes 11.7 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval Transfer Bandwidth
    [ 4] 0.00-10.00 sec 13.7 GBytes 11.8 Gbits/sec sender
    [ 4] 0.00-10.00 sec 13.7 GBytes 11.8 Gbits/sec receiver
    iperf Done.
    C:\Users\nickf.FUSCO\Downloads\iperf-3.1.3-win64\iperf-3.1.3-win64>

Now when I scale out to multiple threads:

Code:

    C:\Users\nickf.FUSCO\Downloads\iperf-3.1.3-win64\iperf-3.1.3-win64>iperf3 -c 10.10.11.10 -P 4
    Connecting to host 10.10.11.10, port 5201
    [ 4] local 10.10.11.9 port 52119 connected to 10.10.11.10 port 5201
    [ 6] local 10.10.11.9 port 52120 connected to 10.10.11.10 port 5201
    [ 8] local 10.10.11.9 port 52121 connected to 10.10.11.10 port 5201
    [ 10] local 10.10.11.9 port 52122 connected to 10.10.11.10 port 5201
    [ ID] Interval Transfer Bandwidth
    [ 4] 0.00-1.00 sec 494 MBytes 4.15 Gbits/sec
    [ 6] 0.00-1.00 sec 495 MBytes 4.15 Gbits/sec
    [ 8] 0.00-1.00 sec 494 MBytes 4.14 Gbits/sec
    [ 10] 0.00-1.00 sec 476 MBytes 3.99 Gbits/sec
    [SUM] 0.00-1.00 sec 1.91 GBytes 16.4 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 1.00-2.00 sec 537 MBytes 4.50 Gbits/sec
    [ 6] 1.00-2.00 sec 540 MBytes 4.53 Gbits/sec
    [ 8] 1.00-2.00 sec 530 MBytes 4.44 Gbits/sec
    [ 10] 1.00-2.00 sec 505 MBytes 4.24 Gbits/sec
    [SUM] 1.00-2.00 sec 2.06 GBytes 17.7 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 2.00-3.00 sec 442 MBytes 3.71 Gbits/sec
    [ 6] 2.00-3.00 sec 455 MBytes 3.82 Gbits/sec
    [ 8] 2.00-3.00 sec 456 MBytes 3.82 Gbits/sec
    [ 10] 2.00-3.00 sec 447 MBytes 3.75 Gbits/sec
    [SUM] 2.00-3.00 sec 1.76 GBytes 15.1 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 3.00-4.00 sec 482 MBytes 4.04 Gbits/sec
    [ 6] 3.00-4.00 sec 480 MBytes 4.02 Gbits/sec
    [ 8] 3.00-4.00 sec 474 MBytes 3.98 Gbits/sec
    [ 10] 3.00-4.00 sec 465 MBytes 3.90 Gbits/sec
    [SUM] 3.00-4.00 sec 1.86 GBytes 16.0 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 4.00-5.00 sec 477 MBytes 4.00 Gbits/sec
    [ 6] 4.00-5.00 sec 477 MBytes 4.00 Gbits/sec
    [ 8] 4.00-5.00 sec 471 MBytes 3.95 Gbits/sec
    [ 10] 4.00-5.00 sec 452 MBytes 3.79 Gbits/sec
    [SUM] 4.00-5.00 sec 1.83 GBytes 15.8 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 5.00-6.00 sec 473 MBytes 3.97 Gbits/sec
    [ 6] 5.00-6.00 sec 469 MBytes 3.94 Gbits/sec
    [ 8] 5.00-6.00 sec 463 MBytes 3.89 Gbits/sec
    [ 10] 5.00-6.00 sec 444 MBytes 3.73 Gbits/sec
    [SUM] 5.00-6.00 sec 1.81 GBytes 15.5 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 6.00-7.00 sec 463 MBytes 3.88 Gbits/sec
    [ 6] 6.00-7.00 sec 491 MBytes 4.12 Gbits/sec
    [ 8] 6.00-7.00 sec 484 MBytes 4.06 Gbits/sec
    [ 10] 6.00-7.00 sec 465 MBytes 3.90 Gbits/sec
    [SUM] 6.00-7.00 sec 1.86 GBytes 16.0 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 7.00-8.00 sec 419 MBytes 3.52 Gbits/sec
    [ 6] 7.00-8.00 sec 488 MBytes 4.09 Gbits/sec
    [ 8] 7.00-8.00 sec 498 MBytes 4.18 Gbits/sec
    [ 10] 7.00-8.00 sec 462 MBytes 3.87 Gbits/sec
    [SUM] 7.00-8.00 sec 1.82 GBytes 15.7 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 8.00-9.00 sec 453 MBytes 3.80 Gbits/sec
    [ 6] 8.00-9.00 sec 478 MBytes 4.01 Gbits/sec
    [ 8] 8.00-9.00 sec 479 MBytes 4.02 Gbits/sec
    [ 10] 8.00-9.00 sec 480 MBytes 4.02 Gbits/sec
    [SUM] 8.00-9.00 sec 1.84 GBytes 15.8 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 4] 9.00-10.00 sec 430 MBytes 3.61 Gbits/sec
    [ 6] 9.00-10.00 sec 450 MBytes 3.78 Gbits/sec
    [ 8] 9.00-10.00 sec 474 MBytes 3.98 Gbits/sec
    [ 10] 9.00-10.00 sec 446 MBytes 3.74 Gbits/sec
    [SUM] 9.00-10.00 sec 1.76 GBytes 15.1 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval Transfer Bandwidth
    [ 4] 0.00-10.00 sec 4.56 GBytes 3.92 Gbits/sec sender
    [ 4] 0.00-10.00 sec 4.56 GBytes 3.92 Gbits/sec receiver
    [ 6] 0.00-10.00 sec 4.71 GBytes 4.05 Gbits/sec sender
    [ 6] 0.00-10.00 sec 4.71 GBytes 4.05 Gbits/sec receiver
    [ 8] 0.00-10.00 sec 4.71 GBytes 4.05 Gbits/sec sender
    [ 8] 0.00-10.00 sec 4.71 GBytes 4.05 Gbits/sec receiver
    [ 10] 0.00-10.00 sec 4.53 GBytes 3.89 Gbits/sec sender
    [ 10] 0.00-10.00 sec 4.53 GBytes 3.89 Gbits/sec receiver
    [SUM] 0.00-10.00 sec 18.5 GBytes 15.9 Gbits/sec sender
    [SUM] 0.00-10.00 sec 18.5 GBytes 15.9 Gbits/sec receiver
    iperf Done.
    C:\Users\nickf.FUSCO\Downloads\iperf-3.1.3-win64\iperf-3.1.3-win64>

With these settings alone, I saw a 58% single-stream performance improvement and a 74% multi-stream performance improvement, and yet we are still nowhere near the maximum performance possible of the network card itself.
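For anyone repeating this on their own hardware, the improvement is simple to compute from the iPerf summary lines. The Python sketch below uses the totals from the four runs pasted in this post. Those particular runs work out to roughly 60% (single stream) and 45% (four streams), so the percentages quoted above presumably come from other runs, such as the ones in the screenshots. The helper name is my own.

```python
def percent_improvement(before_gbps: float, after_gbps: float) -> float:
    """Relative throughput gain of the tuned run over the baseline, in percent."""
    return (after_gbps - before_gbps) / before_gbps * 100.0


# (baseline, tuned) totals in Gbits/sec, taken from the pasted iPerf summaries.
RUNS = {
    "single stream": (7.36, 11.8),
    "four streams": (11.0, 15.9),
}

if __name__ == "__main__":
    for name, (before, after) in RUNS.items():
        gain = percent_improvement(before, after)
        print(f"{name}: {before} -> {after} Gb/s ({gain:.1f}% faster, {after / 40.0:.1%} of 40 Gb/s)")
```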

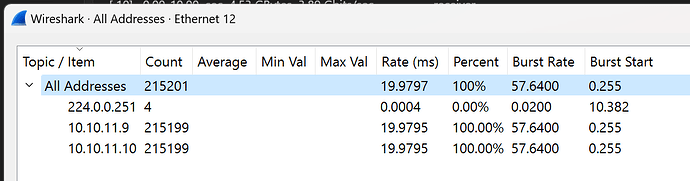

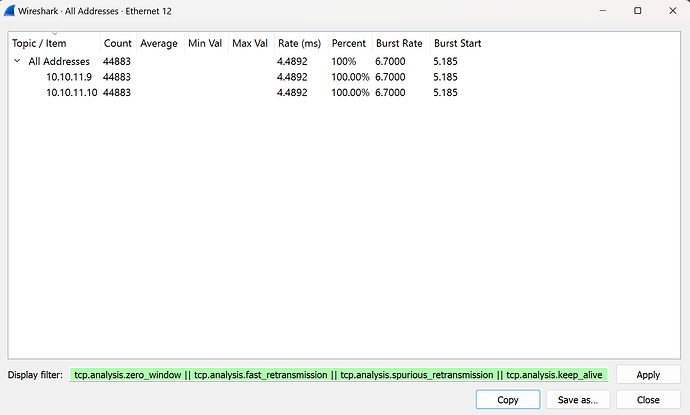

Re-running Wireshark after applying my settings:

There are 215,201 packets

And 44,883 packets still indicate potentially poor performance, which at about 21% is actually a higher share than the original!!

It seems I have hit the wall of this test platform, and would have to get a beefier test environment to scale further.
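The share of flagged packets is easy to sanity-check. This Python sketch uses the counts from the second capture and, for the first capture, only the approximate figures given earlier (13% of about 220,000 packets), so the "before" packet count is an estimate rather than a measured value. The function name is hypothetical.

```python
def flagged_share(flagged_packets: int, total_packets: int) -> float:
    """Fraction of captured packets that matched the TCP trouble filter."""
    return flagged_packets / total_packets


if __name__ == "__main__":
    # Second capture: exact counts reported after tuning.
    after = flagged_share(44_883, 215_201)
    # First capture: only a percentage and a rough total were reported.
    before_share = 0.13
    before_estimate = round(before_share * 220_000)
    print(f"before tuning: ~{before_estimate:,} of ~220,000 packets ({before_share:.0%})")
    print(f"after tuning:  44,883 of 215,201 packets ({after:.1%})")
```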

- Setting auto-tune for TCP to any value other than “Normal” resulted in performance degradation.

    Set-NetTCPSetting -AutoTuningLevelLocal Normal

- Disabling Flow Control and QoS resulted in performance degradation.

- Changing RSS settings may have some performance to unlock too, but in my testing so far all of the variations I have tried resulted in performance degradation.