Three decades ago I had a massive 3GB disk drive (yes GB) fail with all my personal data to date on it.

I tried all sorts of things to recover it myself (because at the time I couldn’t afford professional data recovery), but I couldn’t get anything back. It was hard to admit it was gone and let go, but once I did and moved forward the stress was gone.

So, @Berboo, it is difficult in the circumstances, but do try to stand back and think about whether it might be better to let go and move forward rather than spend days or weeks trying to recover it.

But whatever you decide, we will continue to try to help you.

Berboo

November 25, 2024, 5:03pm

42

It’s true that the moment I wrote the first topic I was still overwhelmed and in shock. As I said, some of the data is very precious to me.

I know that there is a risk that I will not recover any of the data and I’m working on accepting that. But I will put some effort into trying to get something out of those drives, and it seems that there may be one last thing to try.

So I will make that last attempt (from a clean install of TrueNAS) and if that fails, I think I’ll move on. Anyway, I love your wisdom and empathy, thanks for that.

Are you referring to the read-only “TXG” import?

Berboo

November 25, 2024, 5:33pm

44

Yes. I’m referring to this message from @HoneyBadger

HoneyBadger:

Important to note that while I do know a little bit about ZFS, I’m not the panacea or ultimate authority here.

@Berboo I would suggest using Klennet first from a Windows PC, as the “scanning” functionality is free. But you will need additional separate drives to recover your files to.

If you are wanting to try this from within TrueNAS, the very first thing you would want to do is export the pool (without destroying data) if it does get mounted at boot-time. You would need to be comfortable with the command line, connect over SSH, and run the sudo zdb -eul command against each of the physical pool devices - paste the results here as text file attachments.

We would start with the uberblocks - the “entry point” of the ZFS tree - and see if there is one that is dated prior to the unfortunate rm -rf command.

captain

November 25, 2024, 5:49pm

45

Do not muck with the TrueNAS boot disk either! You could swap out the boot drive for a brand new one, install a new system on that, and see if you could recover. HOWEVER, from your OP it looks like all the filesystems were mounted RO, so what’s the problem? Nothing shows as having been rm’ed. ¯_(ツ)_/¯

Those were files and directories that were spared from the recursive rm command, notably those located in the OS directories. His actual files, which are mounted under /mnt/TankPrincipal, were not spared from being deleted.

Then let’s begin the attempt.

Start with getting me the contents of sudo zdb -eul against each of the ZFS pool member partitions such as /dev/sda2 - you can find them with lsblk -o NAME,LABEL and attach them to the post as text files.

We’ll go looking for the earliest possible transaction group and import read-only from there.
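One way to script that step, as a rough sketch: the hypothetical helper `pool_members` below filters `lsblk` output for partitions whose label matches the pool name, so that each one can be passed to `zdb -eul`. It assumes the raw, headerless form `lsblk -rno NAME,LABEL` and a pool label without spaces; neither the helper nor the output file names come from this thread.

```shell
# pool_members LABEL
# Reads `lsblk -rno NAME,LABEL` style lines on stdin ("name label") and
# prints the /dev path of every partition carrying the given label.
# Hypothetical helper, not part of ZFS or TrueNAS.
pool_members() {
    awk -v label="$1" '$2 == label { print "/dev/" $1 }'
}

# Example use (commented out; meant to be run on the NAS itself):
# lsblk -rno NAME,LABEL | pool_members TankPrincipal | while read -r dev; do
#     sudo zdb -eul "$dev" > "$(basename "$dev").txt"
# done
```

Filtering on the label rather than typing device names by hand avoids pointing `zdb` at the boot drive or a swap partition by mistake.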

Berboo

November 25, 2024, 7:38pm

49

root@truenas[~]# lsblk -o NAME,LABEL

NAME LABEL

sda

├─sda1 Borealis:swap0

└─sda2 TankPrincipal

sdb

├─sdb1

├─sdb2 EFI

└─sdb3 boot-pool

sdc

├─sdc1

└─sdc2 TankPrincipal

sdd

├─sdd1 Borealis:swap0

└─sdd2 TankPrincipal

root@truenas[~]#

So the command for each disk needs to be:

zdb -eul /dev/sda2

sdb being the boot drive.

Is that right?

So the pool is not mounted. I’m ready to go.

Edit: I ran those commands. The output files are attached to this post.

@HoneyBadger Thanks again for your help

sdd2.txt (13.9 KB), sdc2.txt (13.9 KB), sda2.txt (13.9 KB)
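Each of those dumps lists the uberblocks stored in the device labels, with a `txg = ` line followed by a `timestamp = ` line. As a rough sketch (assuming that layout, which may differ between OpenZFS versions), the hypothetical `list_uberblocks` helper below prints every transaction group with its date, oldest first, which makes it easier to spot one from before the deletion.

```shell
# list_uberblocks FILE...
# Scans saved `zdb -eul` output and prints "txg<TAB>date" for every
# uberblock, sorted by transaction group and de-duplicated.
# Hypothetical helper; assumes "txg = N" and
# "timestamp = SECONDS UTC = DATE" lines as printed by zdb.
list_uberblocks() {
    awk '
        # Uberblock fields use " = "; the label config uses "txg: N",
        # which is deliberately ignored here.
        $1 == "txg" && $2 == "=" { txg = $3 }
        $1 == "timestamp" && $2 == "=" && txg != "" {
            line = $0
            # Drop everything up to the second "=", keeping the date.
            sub(/^[^=]*=[^=]*= */, "", line)
            print txg "\t" line
            txg = ""
        }
    ' "$@" | sort -n -u
}
```

Something like `list_uberblocks sda2.txt sdc2.txt sdd2.txt | head` would then show the oldest candidates first.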

Looks like the earliest uberblock that can be found for entry is at transaction 10443235, Mon Nov 18 09:10:36 2024:

sudo zpool import -fFXT 10443235 -R /mnt -o readonly=on -N TankPrincipal

After that, go ls’ing in /mnt/TankPrincipal and see if you see anything.
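For readers unfamiliar with the bundled `-fFXT` flags, here is the same command spelled out flag by flag. This is only an annotated sketch: the hypothetical `rewind_import_cmd` function prints the command instead of running it, and the flag descriptions paraphrase the OpenZFS `zpool import` documentation as I understand it.

```shell
# rewind_import_cmd TXG POOL
# Prints (does not run) a read-only rewind import command.
# Hypothetical helper for illustration only.
rewind_import_cmd() {
    txg=$1
    pool=$2
    # -f  import even if the pool appears to be in use elsewhere
    # -F  recovery mode: discard the last few transactions if needed
    # -X  extreme rewind, used together with -F
    # -T  roll back to the given transaction group
    # -R  alternate root, so mountpoints land under /mnt
    # -o readonly=on  never write to the pool
    # -N  import without mounting any dataset
    echo "zpool import -f -F -X -T $txg -R /mnt -o readonly=on -N $pool"
}
```

Calling `rewind_import_cmd 10443235 TankPrincipal` prints the long form of the command given above, which can be reviewed before anything is run with `sudo`.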

Berboo

November 25, 2024, 10:31pm

51

Thanks for the command.

I entered it:

root@truenas[~]# sudo zpool import -fFXT 10443235 -R /mnt -o readonly=on -N TankPrincipal

How long is it supposed to take?

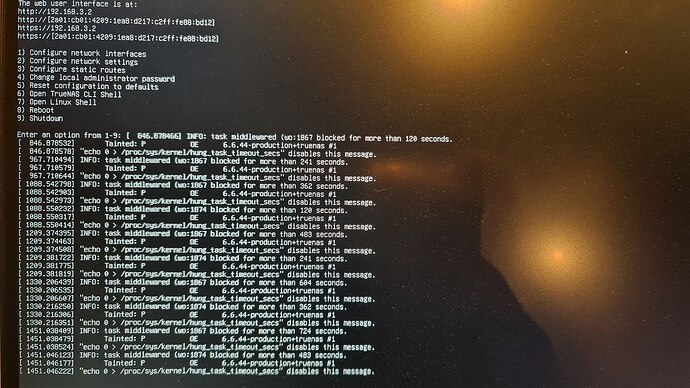

Also, this is what is displayed when I plug a screen into the server:

Is this normal?

Edit: if PuTTY disconnects, it will kill the job, right?

Yes. Rewinding to a specific transaction group can take a very long time. There are ways to speed it up, but they also involve disabling various additional safety checks.

Berboo

November 25, 2024, 10:38pm

53

Ok thanks a lot.

It’s almost midnight over here and I’m going to wake up early.

If I leave my computer on, with PuTTY still open, it won’t kill the task, right?

It shouldn’t kill the task or time out - the import will attempt to continue in the background.
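For anyone who would rather not depend on the SSH session staying open during a long-running command, a common precaution is to start it detached from the terminal. The sketch below uses the standard `nohup` utility; `run_detached` and the log path are hypothetical names, not something used in this thread.

```shell
# run_detached LOGFILE COMMAND [ARGS...]
# Starts COMMAND immune to hangups, sends its output to LOGFILE and
# prints the PID of the background job. Hypothetical helper.
run_detached() {
    log=$1
    shift
    # stdin comes from /dev/null so the job never waits on the terminal
    nohup "$@" > "$log" 2>&1 < /dev/null &
    echo "$!"
}
```

From a root shell, `run_detached /root/import.log zpool import -fFXT 10443235 -R /mnt -o readonly=on -N TankPrincipal` would then leave any messages in `/root/import.log`, whether or not PuTTY stays connected.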

Berboo

November 26, 2024, 6:21pm

55

So,

Here I am after the command. I don’t hear the disks anymore.

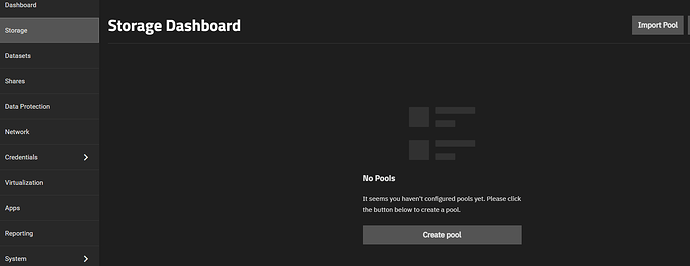

I can’t see the pool in the storage section

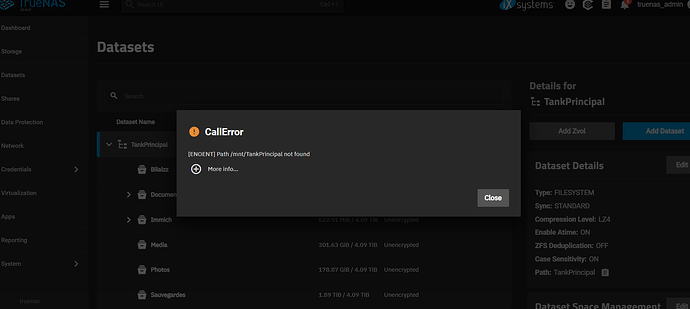

When I click on datasets this error shows up:

I attached the text files to this post for more details: Error Traceback (most recent call l.txt (1.8 KB), Error Traceback (most recent call l_2.txt (1.8 KB)

It seems that the pool isn’t mounted. Maybe there is a log file that can show what happened?

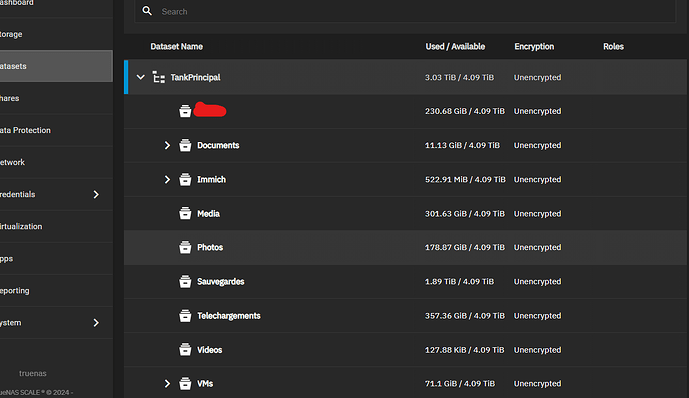

When I close the window, I can see the datasets and their sizes:

The data seems to be there… but is not accessible.

root@truenas[~]# jobs

root@truenas[~]# ls /mnt/TankPrincipal/Photos

ls: cannot access '/mnt/TankPrincipal/Photos': No such file or directory

root@truenas[~]# cd /mnt

root@truenas[/mnt]# ls

root@truenas[/mnt]#

I don’t know if this is good or bad…

bacon

November 26, 2024, 6:27pm

56

You used the -N flag on the zpool import command, which means that none of the datasets actually get mounted. What you’re seeing is perfectly normal and not indicative of any data loss.

You can export & import again (without -N this time). But it’s probably better to wait for @HoneyBadger - I don’t want to interfere.

Berboo

November 26, 2024, 6:31pm

57

OMG that would be so nice if it’s true!

Yeah, I won’t do anything before he asks me to!

Actually, big N is “no mounts” and little n is “no-op” - case matters for this.

@Berboo can you try a simple sudo zfs list and see if your TankPrincipal datasets are visible with a whole lot more data in the USED column?
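To see at a glance which datasets hold data but were left unmounted by `-N`, the `mounted` property can be listed alongside `used`. The sketch below is only an illustration: `unmounted_datasets` is a hypothetical filter that expects the tab-separated output of `zfs list -H -o name,used,mounted`.

```shell
# unmounted_datasets
# Reads `zfs list -H -o name,used,mounted` lines on stdin (tab-separated)
# and prints name and used size for datasets whose mounted value is "no".
# Volumes report "-" in that column and are skipped. Hypothetical helper.
unmounted_datasets() {
    awk -F '\t' '$3 == "no" { print $1 "\t" $2 }'
}

# Example use (commented out; meant to be run on the NAS itself):
# sudo zfs list -H -o name,used,mounted -r TankPrincipal | unmounted_datasets
```

A dataset that shows a large USED value here is a sign that the data is present in the pool and simply not mounted yet.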

Berboo

November 26, 2024, 6:41pm

59

OK, here it is:

root@truenas[~]# sudo zfs list

NAME USED AVAIL REFER MOUNTPOINT

TankPrincipal 3.03T 4.09T 8.82G /mnt/TankPrincipal

TankPrincipal/.system 1.67G 4.09T 1.15G legacy

TankPrincipal/.system/configs-66311c036e824820af44b2dbf4c55f10 85.1M 4.09T 85.1M legacy

TankPrincipal/.system/cores 117K 1024M 117K legacy

TankPrincipal/.system/netdata-66311c036e824820af44b2dbf4c55f10 416M 4.09T 416M legacy

TankPrincipal/.system/nfs 165K 4.09T 165K legacy

TankPrincipal/.system/rrd-66311c036e824820af44b2dbf4c55f10 23.8M 4.09T 23.8M legacy

TankPrincipal/.system/samba4 3.05M 4.09T 666K legacy

TankPrincipal/.system/services 128K 4.09T 128K legacy

TankPrincipal/.system/syslog-66311c036e824820af44b2dbf4c55f10 8.30M 4.09T 8.30M legacy

TankPrincipal/.system/webui 117K 4.09T 117K legacy

TankPrincipal/MyName 231G 4.09T 231G /mnt/TankPrincipal/MyName

TankPrincipal/Documents 11.1G 4.09T 5.57G /mnt/TankPrincipal/Documents

TankPrincipal/Documents/Documents_Famille 128K 4.09T 128K /mnt/TankPrincipal/Documents/Documents_Famille

TankPrincipal/Documents/Documents_Perso 128K 4.09T 128K /mnt/TankPrincipal/Documents/Documents_Perso

TankPrincipal/Documents/Documents_Pro 5.57G 4.09T 5.57G /mnt/TankPrincipal/Documents/Documents_Pro

TankPrincipal/Immich 523M 4.09T 224K /mnt/TankPrincipal/Immich

TankPrincipal/Immich/Backups 128K 4.09T 128K /mnt/TankPrincipal/Immich/Backups

TankPrincipal/Immich/Library 128K 4.09T 128K /mnt/TankPrincipal/Immich/Library

TankPrincipal/Immich/PostgreSQL 522M 4.09T 522M /mnt/TankPrincipal/Immich/PostgreSQL

TankPrincipal/Immich/Profile 128K 4.09T 128K /mnt/TankPrincipal/Immich/Profile

TankPrincipal/Immich/Thumbs 128K 4.09T 128K /mnt/TankPrincipal/Immich/Thumbs

TankPrincipal/Immich/Uploads 128K 4.09T 128K /mnt/TankPrincipal/Immich/Uploads

TankPrincipal/Media 302G 4.09T 302G /mnt/TankPrincipal/Media

TankPrincipal/Photos 179G 4.09T 179G /mnt/TankPrincipal/Photos

TankPrincipal/Sauvegardes 1.89T 4.09T 1.89T /mnt/TankPrincipal/Sauvegardes

TankPrincipal/Telechargements 357G 4.09T 357G /mnt/TankPrincipal/Telechargements

TankPrincipal/VMs 71.1G 4.09T 128K /mnt/TankPrincipal/VMs

TankPrincipal/VMs/Lubuntu-un15g8 20.3G 4.10T 7.57G -

TankPrincipal/VMs/UbuntuNN-ekzpmn 50.8G 4.14T 74.6K -

TankPrincipal/Videos 128K 4.09T 128K /mnt/TankPrincipal/Videos

TankPrincipal/ix-apps 4.87G 4.09T 160K /mnt/.ix-apps

TankPrincipal/ix-apps/app_configs 2.06M 4.09T 2.06M /mnt/.ix-apps/app_configs

TankPrincipal/ix-apps/app_mounts 7.27M 4.09T 128K /mnt/.ix-apps/app_mounts

TankPrincipal/ix-apps/app_mounts/qbittorrent 6.39M 4.09T 139K /mnt/.ix-apps/app_mounts/qbittorrent

TankPrincipal/ix-apps/app_mounts/qbittorrent/config 6.13M 4.09T 5.70M /mnt/.ix-apps/app_mounts/qbittorrent/config

TankPrincipal/ix-apps/app_mounts/qbittorrent/downloads 128K 4.09T 128K /mnt/.ix-apps/app_mounts/qbittorrent/downloads

TankPrincipal/ix-apps/app_mounts/tailscale 304K 4.09T 128K /mnt/.ix-apps/app_mounts/tailscale

TankPrincipal/ix-apps/app_mounts/tailscale/state 176K 4.09T 176K /mnt/.ix-apps/app_mounts/tailscale/state

TankPrincipal/ix-apps/app_mounts/transmission 469K 4.09T 128K /mnt/.ix-apps/app_mounts/transmission

TankPrincipal/ix-apps/app_mounts/transmission/config 128K 4.09T 128K /mnt/.ix-apps/app_mounts/transmission/config

TankPrincipal/ix-apps/app_mounts/transmission/downloads_complete 128K 4.09T 128K /mnt/.ix-apps/app_mounts/transmission/downloads_complete

TankPrincipal/ix-apps/docker 4.79G 4.09T 4.79G /mnt/.ix-apps/docker

TankPrincipal/ix-apps/truenas_catalog 74.5M 4.09T 74.5M /mnt/.ix-apps/truenas_catalog

boot-pool 2.43G 50.9G 96K none

boot-pool/.system 19.7M 50.9G 112K legacy

boot-pool/.system/configs-ae32c386e13840b2bf9c0083275e7941 172K 50.9G 172K legacy

boot-pool/.system/cores 96K 1024M 96K legacy

boot-pool/.system/netdata-ae32c386e13840b2bf9c0083275e7941 19.0M 50.9G 19.0M legacy

boot-pool/.system/nfs 112K 50.9G 112K legacy

boot-pool/.system/samba4 252K 50.9G 156K legacy

boot-pool/ROOT 2.40G 50.9G 96K none

boot-pool/ROOT/24.10.0.2 2.40G 50.9G 165M legacy

boot-pool/ROOT/24.10.0.2/audit 196K 50.9G 196K /audit

boot-pool/ROOT/24.10.0.2/conf 6.83M 50.9G 6.83M /conf

boot-pool/ROOT/24.10.0.2/data 284K 50.9G 284K /data

boot-pool/ROOT/24.10.0.2/etc 7.33M 50.9G 6.37M /etc

boot-pool/ROOT/24.10.0.2/home 128K 50.9G 128K /home

boot-pool/ROOT/24.10.0.2/mnt 96K 50.9G 96K /mnt

boot-pool/ROOT/24.10.0.2/opt 96K 50.9G 96K /opt

boot-pool/ROOT/24.10.0.2/root 152K 50.9G 152K /root

boot-pool/ROOT/24.10.0.2/usr 2.05G 50.9G 2.05G /usr

boot-pool/ROOT/24.10.0.2/var 35.6M 50.9G 31.5M /var

boot-pool/ROOT/24.10.0.2/var/ca-certificates 96K 50.9G 96K /var/local/ca-certificates

boot-pool/ROOT/24.10.0.2/var/log 3.21M 50.9G 1.24M /var/log

boot-pool/ROOT/24.10.0.2/var/log/journal 1.97M 50.9G 1.97M /var/log/journal

boot-pool/grub 8.20M 50.9G 8.20M legacy

root@truenas[~]#

bacon

November 26, 2024, 6:44pm

60

I know. You used big N in your command