Yes but why shouldn’t one fill a ZFS pool with zvols over 50%?

Because zvols act like block devices, ZFS can’t tell which blocks inside them are truly free, so once the pool gets past roughly 50% ZFS runs out of easy places to write new data.
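As a rough way to keep an eye on that rule of thumb, here’s a minimal Python sketch that reads the output of `zpool list -H -p -o name,size,alloc` (`-H` drops the header and tab-separates columns, `-p` prints exact byte counts) and flags pools above 50% allocated. The 50% figure is the rule of thumb from this thread, not a hard ZFS limit, and `pools_over_threshold` is a made-up helper name. It only looks at the physically allocated space that `zpool list` reports.

```python
# Hypothetical helper: flag pools whose allocation exceeds a threshold.
# Input is assumed to be the text printed by:
#   zpool list -H -p -o name,size,alloc
# (-H: no header, tab-separated columns; -p: exact byte counts)

ZVOL_FILL_THRESHOLD = 0.50  # rule of thumb for zvol-heavy pools, not a hard limit


def pools_over_threshold(zpool_list_output: str,
                         threshold: float = ZVOL_FILL_THRESHOLD) -> list[tuple[str, float]]:
    """Return (pool name, fill fraction) for every pool above `threshold`."""
    flagged = []
    for line in zpool_list_output.strip().splitlines():
        name, size, alloc = line.split("\t")
        fill = int(alloc) / int(size)
        if fill > threshold:
            flagged.append((name, round(fill, 3)))
    return flagged


if __name__ == "__main__":
    # Made-up sample output for two pools.
    sample = "tank\t1000\t620\nboot\t1000\t100\n"
    print(pools_over_threshold(sample))  # [('tank', 0.62)]
```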

Thanks a lot @Johnny_Fartpants, but especially @etorix. I read both linked articles, very interesting and informative.

With all that new (to me) information I’d now also say that using SMB instead of NTFS or even ReFS via iSCSI is probably a way better idea - particularly because @Tieman’s system uses HDDs only (even though the pool consists of mirrors only).

Have you contacted 45Drives for support? It’s possible something is misconfigured on the hardware.

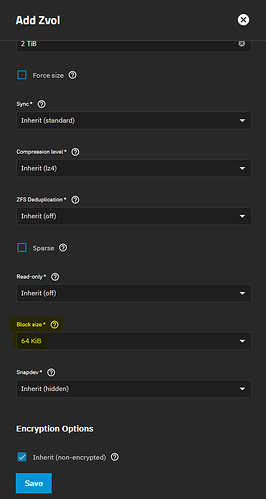

If you’re able to create a new zvol and attempt to target that as a new repository, try making one with the zvol block size (not the dataset) set to 64K to match the ReFS cluster size.
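For anyone doing this from a shell rather than the UI shown in the screenshots, here’s a sketch of the equivalent `zfs create` call. Python is only used to build and print the command; `tank/veeam-refs` and the 20T size are placeholders, and `zvol_create_cmd` is a hypothetical helper. The alignment check encodes the advice given here (zvol block size a whole multiple of the client filesystem’s cluster size) rather than anything ZFS itself enforces. volblocksize can only be chosen when a zvol is created, which is presumably why a new zvol is suggested.

```python
import shlex


def zvol_create_cmd(zvol_path: str, size: str,
                    volblocksize_k: int, fs_cluster_k: int) -> list[str]:
    """Build (but do not run) a `zfs create` argv for a zvol sized to the client filesystem."""
    # Keep the client cluster a whole fraction of the zvol block so that each
    # aligned cluster lands inside a single ZFS block.
    if volblocksize_k % fs_cluster_k != 0:
        raise ValueError(
            f"{volblocksize_k}K volblocksize is not a multiple of the {fs_cluster_k}K cluster"
        )
    # -V creates a volume (zvol); volblocksize is fixed at creation time.
    return ["zfs", "create", "-V", size, "-o", f"volblocksize={volblocksize_k}K", zvol_path]


if __name__ == "__main__":
    # Placeholder path and size; 64K matches the ReFS cluster size discussed above.
    cmd = zvol_create_cmd("tank/veeam-refs", "20T", volblocksize_k=64, fs_cluster_k=64)
    print(shlex.join(cmd))
```

The Veeam recommendation that comes up later in the thread (64 KB NTFS clusters on 128 KB storage blocks) also passes this check, since 128 is a multiple of 64.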

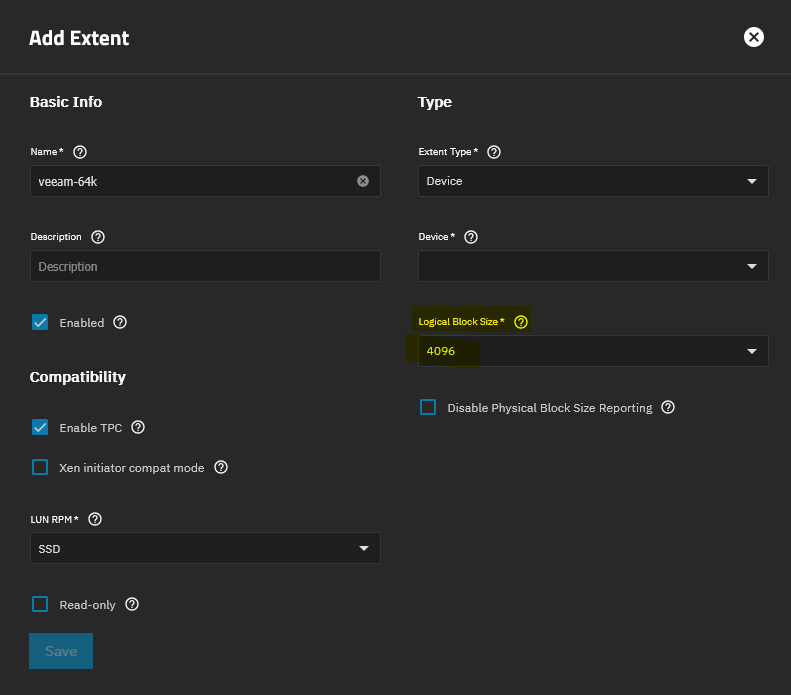

Your iSCSI extent might also benefit from being exported at 4Kn (4096 bytes) if Veeam will handle that.

Was 4 months or so ago. Problem was some patch from Windows Update.

Sorry for getting even more off topic, but this is what I have a hard time understanding. You set the zvol to 64K, and iSCSI presents a zvol just like a local disk, so shouldn’t it be the OS’s job to decide what block size it uses? Like 64K for ReFS or 4K for NTFS. How does that work together with the logical block size? Is it just the size of a single “sector”, so setting it to 4096 is “basically” what ashift=12 does when creating a ZFS pool, making the disks use 4K sectors?

So with the 4k logical block size setting, it would use a single block for 4k NTFS and 16 blocks for 64k ReFS?

And if so, why do ext4 Linux installations and TrueNAS extents still use 512 instead of 4096? Is that just a legacy leftover?

I have enabled compression and set it to LZ4. Thank you all for pointing out that this would be helpful; I was not aware.

Thank you @etorix for those links, that was incredibly helpful and informative.

I reached out to Veeam support again and they confirmed that their best practice recommendation is to use a 64 KB block size for NTFS but to have the storage volume set to a 128 KB block size, so at least that is already correct.

I originally used ReFS and it worked AMAZINGLY for about a week and a half, and then it failed and showed up on the Veeam server as “RAW”. I did a little research and found that Veeam did not list 45Drives as a vendor that supported ReFS. I also contacted 45Drives directly and they confirmed that they could not certify that ReFS would work with their hardware. Very frustrating, but that’s on us for not realizing this before we made the purchase.

Hahahaha sigh unfortunately my company declined to pay for setup/config support so I’m on my own here.

I don’t think that this “counts” for ReFS in a ZFS zvol.

I’d probably look for ZFS there or something like that😅

Sounds like a very weird and fatal error, but I wouldn’t be sure that it was directly because of ReFS.

KB2792: ReFS Known Issues, Considerations, and Limitations (sorry, I can’t post the link but wanted to provide my source) [I got you -Mod]

Do not use ReFS with iSCSI or FC SANs unless explicitly supported by the hardware vendor.

This is why I’m hesitant to try again with ReFS.

Understandable. So are you going to give SMB a try?

Yes, I’m just waiting for a response from Veeam support on the best order of operations for making that kind of change to the repository. Would love to get this in place for this weekend’s synthetic full backups so I can compare to last weekend.

I don’t get that.

ReFS is, AFAIK, a Windows Server-only filesystem. So the question is mostly whether 45Drives supports Windows Server, isn’t it?

So my guess is that 45Drives supports Windows Server, and running ReFS only adds the requirement of using an HBA.

But that does not help you much, since you want to use TrueNAS and not Windows Server as backup destination.

And for TrueNAS, your best bet would probably be to use a dataset with an SMB share.

There might be an SMB CPU bottleneck, so maybe installing NFS support on your Windows Server would result in faster speeds.

Windows only. It works with non-server versions of Windows as well.

There is a big difference between ReFS working on the hardware and 45Drives supporting it. I think they especially won’t support ReFS via iSCSI on a zvol, because that basically has nothing to do with the hardware directly.

What do you mean by that?

I would very much not do that - I know Windows does support NFS but I haven’t heard anything positive about that feature so far.

I’m fairly sure my Commvault colleague gets around this by using multiple DNS aliases so that multiple SMB threads can be spawned (one per connection).

Veeam includes the NFS support, you don’t have to fuss with the Windows stuff. I use NFS for my Veeam backups to a TrueNAS at work.

Didn’t know it could do that directly on Windows without a backup proxy, nice.

I believe that ReFS requires you to set sync=always on the iSCSI ZVOL in order to prevent some rather fragile metadata flushes that ReFS does. However, this can (likely will) tank performance if you don’t have a fast SLOG device.
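If you do want to try that, the property itself is set with `zfs set sync=always <zvol>`. Here’s a small Python sketch that only builds and prints that command, checking the value against the three values the ZFS `sync` property accepts. `tank/veeam-refs` is a placeholder zvol name and `set_sync_cmd` is a hypothetical helper; whether ReFS actually needs `sync=always` is the belief stated above, not something this code establishes.

```python
import shlex

# The ZFS `sync` property accepts exactly these three values.
SYNC_VALUES = ("standard", "always", "disabled")


def set_sync_cmd(zvol_path: str, value: str = "always") -> list[str]:
    """Build (but do not run) the argv for `zfs set sync=<value> <zvol_path>`."""
    if value not in SYNC_VALUES:
        raise ValueError(f"sync must be one of {SYNC_VALUES}, got {value!r}")
    return ["zfs", "set", f"sync={value}", zvol_path]


if __name__ == "__main__":
    # Placeholder zvol name. Without a fast SLOG, expect sync=always to hurt write speed.
    print(shlex.join(set_sync_cmd("tank/veeam-refs")))
    # To go back to the default behaviour later, use value="standard".
```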

Sorry to hear that. I see you’re looking into SMB as an option now - we do have some options in TrueNAS Enterprise to optimize for Veeam Fast Clone, but those won’t be available on a 45Drives unit unfortunately.

It’s layers.

volblocksize is the unit ZFS allocates in, so in this case 64K.

iSCSI then presents it as a disk, with the logical sector size as shown - typically 512 for legacy, 4096 for modern OSes that can handle 4Kn drives

And then the initiator decides what record size to use for its filesystem.

So it’s a 64K NTFS/ReFS record → broken into 16x 4K logical sectors sent over iSCSI → reassembled into a single 64K ZFS record at the other end.

The idea here is to avoid breaking it up into pieces that are too small. With mirrors in play and the legacy logical block size, it’s defaulting to volblocksize=16K and 512-byte iSCSI extents, so it goes:

64K client filesystem → 128x 512b sectors over iSCSI → 4x 16K ZFS records

Generally speaking, the fewer “split and reassemble” cycles, the better.
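To make the split/reassemble counts above concrete, here’s a small Python sketch that reproduces both walkthroughs. It assumes client clusters are aligned to the zvol blocks and that all sizes are powers of two; `split_counts` is a hypothetical helper, not part of any tool.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division without floats."""
    return -(-a // b)


def split_counts(client_cluster: int, logical_sector: int, volblocksize: int) -> tuple[int, int]:
    """For one aligned client-cluster write, return
    (logical sectors sent over iSCSI, ZFS blocks touched on the zvol).
    All sizes are in bytes."""
    sectors = ceil_div(client_cluster, logical_sector)
    # Evaluates to 1 when the cluster fits inside a single zvol block.
    zfs_blocks = ceil_div(client_cluster, volblocksize)
    return sectors, zfs_blocks


if __name__ == "__main__":
    K = 1024
    # Tuned: 64K ReFS/NTFS cluster, 4Kn extent, volblocksize=64K
    print(split_counts(64 * K, 4096, 64 * K))  # (16, 1)
    # Defaults described above: 64K cluster, 512b extent, volblocksize=16K
    print(split_counts(64 * K, 512, 16 * K))   # (128, 4)
```

The same arithmetic covers the earlier question: a 4K NTFS cluster on a 4096-byte extent is a single sector, and a 64K ReFS cluster is 16. Going the other way, a 4K cluster on a 64K zvol only fills part of one ZFS block, so ZFS generally has to read the rest of that block before it can write the new copy.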

So I do have that available to me. I’m curious whether SMB with Fast Clone is the preferred path, or doing this via iSCSI+ReFS. I think the recommended best practice I found on your website said iSCSI+ReFS, but the article seemed dated and I wasn’t sure if the recommendation had changed.

I believe you have to have a “backup proxy,” as in you need that piece to do backups at all. However, it doesn’t need to be a separate server if that’s what you mean. Mine is all in one beefy former ESXi, now Windows, server.