WELL!!! Thank you to EVERYONE!

Most of all @Constantin for the original post. This subject has been a thorn in my side for a while. I always understood that for my workload, combined with my configuration, the L2ARC was USELESS (to me), and RAM is GOD (so I invested in GOD).

BUT! I have 84 8TB drives of spinning SAS rust that I need to index, and hopefully optimize. A bonus would be avoiding a bunch of index bursts on the spinning disks to save power.

Most of the data WILL be large video files.

I am in the planning and procurement stage. I invested in semi-recent e-waste 13th-gen Dell servers and loaded up the RAM. I also invested (thankfully at the right time) in some 1TB Intel 905P U.2s, a chunk of Dell BOSS cards, and some Black Friday Gen3 consumer NVMe and H20 Optane at stupid great prices.

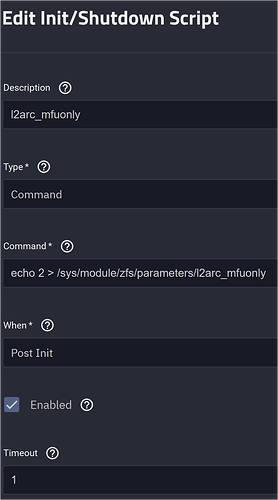

I know what everyone is doing (cluster), and I know what everything is doing (BOSS/other cards with H20s for VMs/DBs and maybe a SINGLE SLOG), but I always had a NAG…

I have 84x 8TB SAS drives (at a hell of a deal) + 4x 24-bay JBODs (STUFFED, at an even better deal), and I need to index them. Or rather, manage the metadata.
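For a sense of scale, here is a rough back-of-envelope sketch in Python of how much space the first level of block pointers might take for a pool like this. It assumes a 1 MiB recordsize, uses the raw capacity of the 84 drives rather than usable capacity, and counts only one 128-byte block pointer per record. It ignores dnodes, higher indirect levels, extra metadata copies, spacemaps, and compression, so treat it as an order-of-magnitude guess and not a sizing rule. The function name is made up for illustration.

```python
BLOCK_POINTER_BYTES = 128  # size of a single ZFS block pointer


def l1_pointer_bytes(data_bytes, recordsize=1024 * 1024):
    """Estimate bytes of level-1 block pointers for data_bytes of file data.

    Assumption: every record is a full recordsize block and needs exactly
    one block pointer. Everything else that counts as metadata is ignored.
    """
    records = -(-data_bytes // recordsize)  # ceiling division
    return records * BLOCK_POINTER_BYTES


# Raw capacity of 84 drives at 8 TB (decimal) each; usable space will be lower.
raw_bytes = 84 * 8 * 10**12
estimate = l1_pointer_bytes(raw_bytes)
print(f"{estimate / 10**9:.1f} GB of level-1 block pointers")
```

Under these assumptions the result lands in the tens of gigabytes, which suggests that for large video files the metadata itself is small next to the pool. Smaller recordsizes or lots of small files would change that picture quickly.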

So I would like to re-thank @Constantin for being clear, when EVERYONE ELSE was clear as MUD. Not just here BTW. I have scoured the REDDIT RATS, Level1 Forums, and the abyss, including the TrueNAS Docs.

Ambiguity is the cruel mistress of confusion.

A secondary thank-you to the others, like @HoneyBadger.

I know my workload, and your examples matched what I thought should translate. The rest of the NET is confusing and contradicts reason.

The T3 TrueNAS YouTube channel REALLY needs to get on this topic.

Enough about L2ARC, BUY/CONFIGURE RAM.

Tier Your Storage.

Focus on indexing and storing the crumbs.

A metadata SVDEV is the obvious performance win.

I do have a ZFS/TRUENAS request though.

MOST of the confusion (as I see it, even in this post) comes from combining the indexing of the pool (the metadata) and small files on the same device.
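To make that combination concrete, here is a minimal Python sketch of how I understand the placement decision on a pool with a special vdev. Metadata goes to the special vdev, and data blocks also go there when they are at or below the dataset's `special_small_blocks` threshold (0 means no data blocks are redirected). The `Block` class and `allocation_class` function are hypothetical names for illustration, not ZFS code, and the sketch leaves out things like dedup vdevs and what happens when the special vdev fills up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    is_metadata: bool
    size_bytes: int


def allocation_class(block, special_small_blocks=0, has_special_vdev=True):
    """Return "special" or "normal" for a block (simplified model).

    special_small_blocks mirrors the dataset property of the same name:
    0 disables small-block redirection, otherwise data blocks at or below
    this size share the special vdev with the metadata.
    """
    if not has_special_vdev:
        return "normal"
    if block.is_metadata:
        return "special"
    if special_small_blocks > 0 and block.size_bytes <= special_small_blocks:
        return "special"
    return "normal"


# A 1 MiB video record stays on the spinning disks, while a 32 KiB data
# block lands next to the metadata once the threshold is set to 64 KiB.
print(allocation_class(Block(False, 1024 * 1024), special_small_blocks=64 * 1024))
print(allocation_class(Block(False, 32 * 1024), special_small_blocks=64 * 1024))
print(allocation_class(Block(True, 16 * 1024)))
```

In this model both the metadata and the small data blocks end up with the same answer, "special", which is exactly the sharing that makes sizing the device confusing.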

MAYBE we should be looking at separating the indexing and the small files, the way you already tackled the SLOG.

What a wonderful and streamlined world it would be if, rather than manually predicting file sizes and saving them to the correct pool/vdev for their speed, the algorithm(s) responsible for small-block placement could be assigned, all on their own, to their own pool. Ya, I know I stepped out of the pool (box). Maybe we can figure out a way to accomplish the same thing without stepping out of the pool/box?

Anyways… SERIOUSLY prudent post and I am happy I got to learn from it.

Cheers.