Well… neither the HBA nor the expander should be fully stressed, as you are only pulling a bit more than 2 Gbps per drive when they are rated for 6 Gbps. That being said, you should be putting them under a more intensive workload than they would normally see: in other words, you cannot stress them more with your current hardware, so it’s a valid test.
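To put a rough number on that headroom, here is a minimal Python sketch. It uses only the two figures quoted above (a bit more than 2 Gbps observed per drive, 6 Gbps rated); the function name `link_utilization` is made up for illustration, and encoding and protocol overhead are ignored.

```python
def link_utilization(observed_gbps: float, rated_gbps: float) -> float:
    """Return the fraction of the rated link speed that is actually in use."""
    if rated_gbps <= 0:
        raise ValueError("rated_gbps must be positive")
    return observed_gbps / rated_gbps


if __name__ == "__main__":
    # Figures from the post: ~2 Gbps observed per drive on a 6 Gbps link.
    fraction = link_utilization(2.0, 6.0)
    print(f"Each drive uses about {fraction:.0%} of its rated link speed")
```

Roughly a third of the rated link speed per drive is consistent with the links themselves not being the limit during this test.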

Hi Nick,

I’m using the SMB protocol for shares, and I was using large files of 40 GB or more.

And thank you for the help!

Here is the iperf3:

Everything looks good, I’m not sure I can explain from the TrueNAS side why this is happening. Are you copying from TrueNAS to a local drive on Windows? I think client-side tuning is our answer.

Take a look at this:

Highlight of that guy’s story:

Now, using the same test criteria, I only get ~1300 MB/s instead of > 4200 MB/s and the average latency is also 3 times higher than the test 2 years ago. :nauseated_face:

And see if any client-side tuning helps.

Also, server-side tuning is here:

Resource - High Speed Networking Tuning to maximize your 10G, 25G, 40G networks | TrueNAS Community

Particularly turning on dctcp, which works best in simple networks like yours. The reason it’s not the default is that cubic is better for higher latencies and is a safer option.

Linux defaults to cubic.

To load dctcp, set a post-boot task of

modprobe tcp_dctcp

and also run that command from the shell prompt to load it right away. You will then be able to set a sysctl tunable

net.ipv4.tcp_congestion_control=cubic

or

net.ipv4.tcp_congestion_control=dctcp

to enable your preferred congestion control module.
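As a quick sanity check after the `modprobe` step, a small Python sketch like the one below can confirm that dctcp shows up as available and report which algorithm is active. It only reads the standard Linux procfs entries behind the sysctls named above; the helper names `parse_algorithms` and `is_available` are invented for this example, and it changes nothing on the system.

```python
from pathlib import Path

# Standard Linux procfs entries behind the net.ipv4.tcp_* sysctls.
AVAILABLE = Path("/proc/sys/net/ipv4/tcp_available_congestion_control")
CURRENT = Path("/proc/sys/net/ipv4/tcp_congestion_control")


def parse_algorithms(text):
    """Split the space-separated algorithm list exposed by the kernel."""
    return text.split()


def is_available(algorithm, available_text):
    """Return True if the algorithm appears in the kernel's available list."""
    return algorithm in parse_algorithms(available_text)


if __name__ == "__main__":
    if AVAILABLE.exists() and CURRENT.exists():
        available = AVAILABLE.read_text()
        print("active:   ", CURRENT.read_text().strip())
        print("available:", ", ".join(parse_algorithms(available)))
        if not is_available("dctcp", available):
            print("dctcp is not loaded yet; run 'modprobe tcp_dctcp' first")
    else:
        print("procfs entries not found; this check only works on Linux")
```

If dctcp is missing from the available list, setting the sysctl tunable to dctcp is likely to be rejected, so checking first saves some head scratching.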

Thank you! With all the things you guys made me test, it indeed seems like the JBOD and TrueNAS itself are doing fine.

I’ll look into the client side of things tomorrow.

Keep us in the loop!

Although I’m wondering why it’s no issue when I’m reading it from memory (on the 2nd copy). The client side shouldn’t affect that.

Run these tests in “tmux”; then you can reattach to the terminal if you close it (or it breaks).

This is demonstrating your problem. The pool is only pulling 100 MB/s.

Later on, it’s doing 250 MB/s.

So, either the pool speed is fluctuating or the client connection speed is :-/

OMG -

High-Speed Windows Client Performance Tuning

Interrupt Moderation Disabled made the biggest performance difference.

Disabling this on my ingest machine made a huge difference. I have no clue why, but I no longer get 0.5-1 second load times between folders between Windows & my NAS. That might not sound too annoying or a huge bump, but it just feels amazing.

Interrupt moderation controls the rate of interrupts to the CPU during TX and RX. Too many interrupts (one per packet) increase CPU usage, hurting throughput, while too few interrupts (raised only after a set time or number of packets) increase latency.

I assume you’re spending CPU to get faster transfers, which is okay.

Same reason Jumbo packets used to be used. CPUs weren’t fast enough to deal with line rate with small packets.
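To get a feel for the interrupt load being discussed, here is a rough Python estimate of how many full-size frames per second a saturated link carries, which is the worst-case interrupt rate if every packet raised its own interrupt. The 10 Gbps link speed is an assumption for illustration (the thread does not state the NIC speed here), and `packets_per_second` is a made-up helper name.

```python
# Per-frame Ethernet overhead on the wire, in bytes:
# preamble + start-of-frame delimiter (8), MAC header (14),
# frame check sequence (4), inter-frame gap (12).
ETHERNET_OVERHEAD_BYTES = 38


def packets_per_second(link_gbps: float, mtu_bytes: int) -> float:
    """Full-size frames per second on a saturated link of the given speed."""
    wire_bits = (mtu_bytes + ETHERNET_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / wire_bits


if __name__ == "__main__":
    link_gbps = 10.0  # assumed link speed, not taken from the thread
    for mtu in (1500, 9000):
        rate = packets_per_second(link_gbps, mtu)
        print(f"MTU {mtu}: about {rate:,.0f} packets/s at line rate")
```

At the standard 1500-byte MTU this comes out to several hundred thousand packets per second, which is why NICs coalesce interrupts by default and why jumbo frames (about six times fewer packets for the same data) used to matter so much.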

Interesting; now I need to think of a way to benchmark CPU performance while simultaneously loading the NIC with Interrupt Moderation enabled vs disabled to see if there is any noticeable hit…

Hi all,

I did some more testing. The local tweaking didn’t fix much, and I also max out the connection easily when I’m reading from memory instead of from disk. I created another share on the PCIe SSD on the TrueNAS, and if it reads from memory it reaches speeds of over 1 GB/s, but the second it has to read directly from the pool I get the same crappy performance as on my clients.
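The cached-versus-pool difference described here can be measured without the network in the way by reading the same large file twice in a row on the server and comparing the rates. The Python sketch below is one way to do that; the script name `read_twice.py`, the 8 MiB chunk size, and the `read_throughput` helper are arbitrary choices for this example. It assumes the file was not already cached before the first pass.

```python
import sys
import time

CHUNK = 8 * 1024 * 1024  # read in 8 MiB chunks (arbitrary choice)


def read_throughput(path, chunk_size=CHUNK):
    """Read the whole file sequentially and return the rate in MB/s."""
    total = 0
    start = time.perf_counter()
    # buffering=0 avoids Python's own buffering; OS and ZFS caches still apply.
    with open(path, "rb", buffering=0) as f:
        while True:
            data = f.read(chunk_size)
            if not data:
                break
            total += len(data)
    elapsed = time.perf_counter() - start
    return total / 1e6 / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    if len(sys.argv) == 2:
        # The first pass should hit the disks, the second the cache.
        for label in ("first read", "second read"):
            print(f"{label}: {read_throughput(sys.argv[1]):.0f} MB/s")
    else:
        print("usage: python read_twice.py <path-to-large-file>")
```

If the first pass is slow here as well, the bottleneck sits on the server between the pool and memory rather than in SMB or on the client.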

I’m really baffled about the cause because, as you can see above, when I run the soltest it maxes out all the HDDs (verified through the monitoring in TrueNAS).

I’ll let it run overnight through SSH this time to get a full report.

I ran the soltest for a day and then my server refused duty. It seems the soltest uses more and more memory until it has used up everything; the apps then started to degrade, which was starting to have too much impact on my network.

What I could see is that all disks ran in parallel at max speed and slowly started to degrade towards 150 MB/s each due to the memory being in full use.

Does anybody have an idea what it could be? If the soltest can max out the HDDs running in parallel, does that mean it’s not the HBA controller or the JBOD?

You were not expecting your server to be available while testing its stability, were you?

I know it was a stretch, but it’s a production server. Anyway, it doesn’t change the fact that I saw the results of the tests in the reports and they look normal there. What I also notice is that if I do a parallel copy it just maxes out my network connection.

Weird stuff.

Nobody has an idea of a possible cause?

I would expect an issue on the production server to halt production.

With confirmation that the drives and everything attached to them run fine for an extended period, we can speculate that your original testing method was somehow wrong: how were you testing exactly?

Sounds like the HBA may be overheating. You have this server? Did you replace the fans or something?

SYS-110D-8C-FRAN8TP | 1U | SuperServer | Products | Supermicro

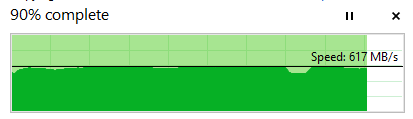

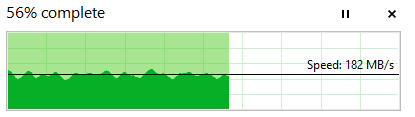

Yes, I have that server. Nothing in this server has been replaced and nothing seems to be overheating, because from the get-go, even after a reboot, the performance is like this (copy of a single 30 GB file):

The rack itself has massive fans and is placed in a very big cool cellar.

Out of pure frustration I reinstalled my entire server, with the exact same result.

I’m completely out of ideas.

The test is simply copying one big file from the TrueNAS to my local PC. See previous post. Once the file is loaded in the TrueNAS memory and I copy it again, it runs super fast: