That’s pretty high bandwidth from a single client! Is that 2 x 100GbE links or 1?

Can you make a general post with your hardware specs… it would be very interesting.

I had to run 25.04-RC1 for a few days before the official release while tracking down a bug between Synology and TrueNAS (it turned out to be on the Synology side). Now, whenever I try to upgrade to the final 25.04.0 release, boot always stalls at starting middlewared. I rebooted back into RC1 and tried upgrading manually with the .update file, and the same error occurs. I’ve thrown everything I know at this problem: middlewared never loads and the network never comes up, so diagnosing is fun, especially since this server is out of the country and I have to use iDRAC. I’ve never had an RC-to-release upgrade fail like this. I’ve spent hours trying to bring this server up to the release and have given up; I’ll wait to see whether 25.04.1 brings some relief.

Even “midclt call update.manual” failed with the same middlewared failure.

That sounds worth pursuing, so please raise a topic for it in TrueNAS General.

Have you tried saving the config file and doing an update via ISO instead?

Report a bug even with minimal info. I suspect a manual re-install might be needed, but at least we’ll know this can happen, and the ticket might get you more expert advice on how to fix it. Just post the NAS ticket number in this thread.

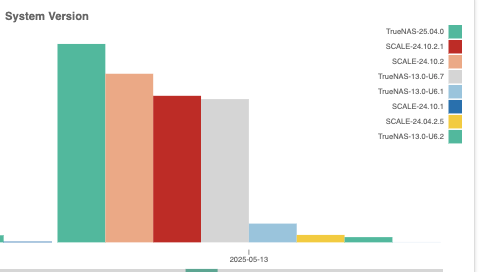

FYI… 25.04.0 has become the most popular version of TrueNAS. That’s unexpected for a .0 version. Quality (particularly the instances function) will improve with the .1 update later this month.

Exporting a pool that had a dataset mounted in Incus seems to break Incus.

I’m 99% sure I removed the disk from the instance before exporting the pool. The instance was “off” at the time which may be relevant.

When I exported the pool in the GUI, it hung at 80% for about 10 minutes before finally reporting that the export had succeeded. I had selected the option to remove all TrueNAS configs related to the pool. dmesg showed a lot of errors about tasks taking too long.

After exporting, Incus appears unconfigured when I go back to it. When I select the pool (the same one as before, not the exported one), I get an error saying it failed validation.

The validation fails because the configuration still contains the disk, even though I removed it in the GUI.

My guess is that TrueNAS stores the Incus configs somewhere in its own configuration, and that removing the disk in the GUI wasn’t immediately committed there. So when I select the Incus pool, TrueNAS tries to load the configs it has stored, they fail validation because of the broken mount, and Incus doesn’t start.

Could someone tell me where TrueNAS stores the pre-validation Incus configs (freenas db? Which table?) so that I can manually remove the non-existent disk and carry on?

I will file a report once I determine the fix.

Go ahead and file a bug if you can replicate it. There’s no need to wait until you’ve resolved it (that may be impossible without software changes).

The last time I used an RC version, I had to reboot back into the boot environment of the previous stable release I came from, switch back to the regular release train, and then update to the next release.

I don’t currently have any VMs set up that I can use to check whether this is still true, but you might want to investigate this route.

I wouldn’t think of it as a bug, as RCs are on a different train and the update roadmap does not show a path for updating from an RC to a released version.

It’s probably best to think of it as a bug, perhaps in the documentation rather than in the software. If anyone has the same issue, I’d be happy to see a ticket raised and shared here.

I have booted back into the previous 24.10.2 boot environment twice now. Both times it boots 24.10.2 cleanly but then fails the upgrade to 25.04 the same way, with the console showing “middleware is not running. Press Enter to open shell”. I can see why that route should work, but in this case it keeps failing, leaving me stuck in RC land.

This should be fixed for 25.04.1 release.

Do you know where TrueNAS stores the Incus container configs, before they’re validated for Incus to start?

I’d really like to remove the broken mount that’s preventing Incus from starting. I believe deleting the ‘disk’ didn’t immediately remove it and that was what caused the export to hang. I can’t test my theory until I get Incus working in the GUI again.

Incus stores its backup configuration in YAML files inside unmounted datasets within the ix-virt dataset. This is an upstream issue: Incus treats missing mounts as a fatal error during recovery operations, rather than letting the recovery succeed and raising an error when the instance starts.
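To make that failure mode concrete, here is a minimal, read-only sketch that lists disk devices whose host source path no longer exists, which is the kind of entry that trips the validation described above. It assumes the backup.yaml has already been parsed into a dict (for example with PyYAML’s `yaml.safe_load`), and that the instance sits under a `container` key with `devices`/`expanded_devices` maps; that key layout and the helper name `missing_disk_sources` are assumptions for illustration, not a documented TrueNAS or Incus interface.

```python
import os
from typing import Callable, List, Tuple


def missing_disk_sources(
    backup: dict, exists: Callable[[str], bool] = os.path.exists
) -> List[Tuple[str, str]]:
    """List (device name, host path) for disk devices whose host path is missing.

    `backup` is assumed to be an already-parsed Incus backup.yaml. The
    "container" / "devices" / "expanded_devices" layout is an assumption.
    """
    instance = backup.get("container") or {}
    missing: List[Tuple[str, str]] = []
    for key in ("devices", "expanded_devices"):
        for name, dev in (instance.get(key) or {}).items():
            if dev.get("type") != "disk" or "pool" in dev:
                continue  # skip non-disk devices and pool-backed volumes
            source = dev.get("source", "")
            if not source.startswith("/"):
                continue  # only host bind-mount paths are checked
            if not exists(source) and (name, source) not in missing:
                missing.append((name, source))  # report each device only once
    return missing


# Hypothetical example mirroring the "Missing source path" error in this thread.
example = {"container": {"devices": {
    "root": {"type": "disk", "path": "/", "pool": "default"},
    "disk0": {"type": "disk", "source": "/mnt/dozer/SHARE", "path": "/share"},
}}}
print(missing_disk_sources(example, exists=lambda p: False))
# prints [('disk0', '/mnt/dozer/SHARE')]
```

The `exists` parameter is injectable so the check can be tried without touching the filesystem; it only reports problems and deliberately does not edit any Incus configuration.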

Thanks, I’ll check it when I get everything back together.

Generally incus stores backups of its own configuration within the ZFS datasets its storage drivers use. This means extreme care needs to be taken when making any storage-related changes outside of incusd otherwise you will end up with a broken configuration. Some ways we’ve seen users break this (non-exhaustive):

- removing datasets from shell that had references in incus

- zfs send/recv from one pool to another via shell (changes the pool name out from under incus)

- zfs renames on datasets / zvols consumed by incus
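All three failure modes share one trait: a ZFS operation done outside incusd changes or removes a name that Incus has recorded. As a rough pre-flight idea (not an existing TrueNAS or Incus feature), a script could compare the dataset you’re about to destroy, rename, or send against the datasets Incus is known to reference. How that reference list would be collected is left as an assumption, and the helper `datasets_at_risk` and the dataset names below are hypothetical.

```python
from typing import List


def _same_or_below(child: str, parent: str) -> bool:
    """True if ZFS dataset `child` is `parent` itself or a descendant of it."""
    return child == parent or child.startswith(parent + "/")


def datasets_at_risk(target: str, incus_datasets: List[str]) -> List[str]:
    """Return the Incus-referenced datasets that a destroy, rename, or
    send/recv of `target` could affect.

    Conservative check: a referenced dataset is flagged if it is `target`,
    sits below `target`, or contains `target`.
    """
    return [
        d for d in incus_datasets
        if _same_or_below(d, target) or _same_or_below(target, d)
    ]


# Hypothetical dataset names for a pool called "dozer".
refs = ["dozer/.ix-virt", "dozer/SHARE"]
print(datasets_at_risk("dozer", refs))         # ['dozer/.ix-virt', 'dozer/SHARE']
print(datasets_at_risk("dozer/SHARE2", refs))  # []
```

Matching on the `/` separator avoids false positives between sibling names such as `dozer/SHARE` and `dozer/SHARE2`.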

Do you mean “changes the path out from under Incus”? Isn’t this resolved by reimporting the new pool with the old name?

On another subject, is Incus (as incorporated into TrueNAS) running its own ZFS commands behind the scenes, such as taking its own snapshots not defined in the TrueNAS tasks?

Right, or more precisely, path references that are based on the pool name. Incus, for instance, will error out like this:

{'type': 'error', 'status': '', 'status_code': 0, 'operation': '', 'error_code': 500, 'error': 'Failed creating instance "MYINSTANCE" record in project "default": Failed creating instance record: Failed initializing instance: Failed add validation for device "disk0": Missing source path "/mnt/dozer/SHARE" for disk "disk0"', 'metadata': None}

for containers. On the other hand, if you have custom volumes in a VM’s config, the configuration backup will contain references to the Incus storage pool where those volumes are expected to be.

Making this more fault-tolerant is non-trivial. It’s something that will need addressing, but it’s probably not reasonable to expect in 25.04. This is one of the reasons why we hide ix-virt from regular UI users: Incus expects to own that ZFS path, and there are sharp edges.

This may sound obvious, but can’t TrueNAS do something to tell Incus that the path to the containers is $POOLPATH? The “variable” would be assigned dynamically at every reboot/import.

It would override what “upstream” does, of course.

This way, if you replicate to another pool, $POOLPATH simply changes from /mnt/dozer/ to /mnt/grazer.
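The suggestion above can be sketched as storing pool-relative paths and expanding them against whatever mountpoint the pool has at import time. This is purely illustrative: neither TrueNAS nor Incus is claimed to work this way, and `to_portable`/`resolve` are hypothetical helpers built on Python’s standard `string.Template`.

```python
from string import Template


def to_portable(path: str, pool_mount: str) -> str:
    """Rewrite an absolute path under `pool_mount` as a $POOLPATH-relative one."""
    root = pool_mount.rstrip("/")
    if path == root or path.startswith(root + "/"):
        return "$POOLPATH" + path[len(root):]
    return path  # paths outside the pool are stored unchanged


def resolve(stored: str, pool_mount: str) -> str:
    """Expand $POOLPATH against the pool's current mountpoint."""
    return Template(stored).safe_substitute(POOLPATH=pool_mount.rstrip("/"))


stored = to_portable("/mnt/dozer/SHARE", "/mnt/dozer/")
print(stored)                          # $POOLPATH/SHARE
print(resolve(stored, "/mnt/grazer"))  # /mnt/grazer/SHARE
```

`safe_substitute` leaves any other `$` placeholders untouched instead of raising; a real implementation would also need an escaping rule for paths that legitimately contain `$`, and Incus itself would still have to accept the expanded paths.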