I am also experiencing the same thing. This is a freshly installed Dragonfish 24 release with no apps running and basically everything on defaults, other than an encrypted pool and forced client/server SMB encryption. I am not sure it's the same issue, but in my case I have very LOW CPU usage (32 cores reserved), 1TB RAM, and 12 x 18TB Exos in RAIDZ2. I am migrating 80TB of data from NTFS to ZFS.

After a whole day of transferring data, I suddenly start to notice a significant slowdown in transfer speed. Heavy I/O operations, such as sorting and traversing small 4K files, become extremely slow, around 3 files per second. The web UI completely locks up and I cannot log in until all file transfers are stopped, and in the CLI typing has a huge delay: letters appear all together, half a second to a couple of seconds later.

Strangely, "asyncio_loop" also starts to consume about 1.6GB of swap, even though I have 1TB of memory with 65GB free, 900GB ARC, and only 15GB used by services. When I stop the file transfer, the "asyncio_loop" swap usage goes down and the web UI becomes responsive again. Transfer speed improves, but the issue persists and comes back once the file transfer resumes. The only workaround is to reboot TrueNAS, and then the issue goes away for another 20+ hours.
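To check which process is actually sitting in swap while the slowdown is happening, the per-process `VmSwap` counters in `/proc` can be read directly. This is a minimal sketch, assuming a shell on the TrueNAS host; note that it prints the process name from `/proc/<pid>/status`, which may be the parent process (possibly middlewared) rather than the `asyncio_loop` thread name shown in top/htop.

```shell
# List the processes currently holding the most swap, largest first.
# VmSwap in /proc/<pid>/status is reported in kB; kernel threads have
# no VmSwap line and are therefore skipped.
top_swap=$(
  for status in /proc/[0-9]*/status; do
    # A process may exit between the glob and the read; ignore those errors.
    awk '/^Name:/ {name = $2} /^VmSwap:/ && $2 > 0 {print $2, name}' "$status" 2>/dev/null
  done | sort -rn | head -n 10
)
echo "swap_kB process"
echo "$top_swap"
```

Running it once during a normal transfer and again once the UI starts lagging would show whether the swap growth is concentrated in one process.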

Just an update.

With the latest Dragonfish, I did a fresh reinstall from scratch. I've been testing it over a couple of days and haven't noticed the slowdown since.

So I'm thinking maybe this issue had more to do with the previous version.

I can go to the TrueNAS UI, browse around, and it's responsive, so no issue for me at the moment.

I will update here if that changes, but from what I can see at the moment, it seems OK.

And keep in mind I have 20 active Docker containers running and am only using 16GB of DDR4 RAM. Hope that is enough!

I think the issue is within Dragonfish itself, which is what everyone has been posting. With less RAM, the issue appears sooner. Currently the workaround appears to be limiting Dragonfish's ARC to 50% of RAM, mimicking Cobia's default behavior.
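A minimal sketch of what that 50% cap works out to on a given machine, assuming OpenZFS's `zfs_arc_max` module parameter (in bytes) is the knob being used; the actual write is commented out because it needs root and, by itself, does not persist across reboots.

```shell
# Compute a zfs_arc_max value equal to 50% of physical RAM.
# MemTotal in /proc/meminfo is in kB.
mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
arc_max_bytes=$(( mem_kb * 1024 / 2 ))
echo "Proposed zfs_arc_max: ${arc_max_bytes} bytes"

# Applying it requires root and the zfs module loaded. To keep it after a
# reboot, it would have to be re-applied, e.g. as a TrueNAS post-init command.
# echo "$arc_max_bytes" > /sys/module/zfs/parameters/zfs_arc_max
```

Lowering the cap at runtime does not necessarily shrink an already-full ARC immediately; it limits how large it can grow from then on.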

I didn’t realize there was so much additional traffic on this thread.

Some things I have noticed:

- Accessing a lot of files definitely triggers this issue; in my case, rsync or file sharing.

- The asyncio wait process might have something to do with it? I see it at the top of `top` for CPU activity when things are slow.

- I have 512GB RAM with less than 40GB used by processes and a 64-core processor that averages 5% utilization, and I still run into these issues. It does not seem to be a memory/CPU limitation.

- Increasing `fs.inotify.max_user_watches` seemed to fix some issues I was running into.

- Increasing `fs.inotify.max_user_instances` from 128 seemed to fix some other issues I was running into.

- Increasing `fs.inotify.max_queued_events` didn't make a noticeable difference, but I've increased it anyway…

- I'm still running into some sluggishness, but I'm no longer getting the weird errors I was getting before. I believe some of the above values, and maybe others, need to be increased to deal with the high number of [something] that rsync/apps/middleware can use up. I'm just not sure which… I've wanted to compare the values for Cobia vs. Dragonfish, but to be honest I'm too lazy. I did not change anything from the defaults on Cobia and did not run into these problems.
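For the Cobia vs. Dragonfish comparison mentioned above, the three inotify limits can be printed straight from `/proc/sys`, so running the same snippet on both versions would show whether the defaults differ. A read-only sketch; it changes nothing and works as any user.

```shell
# Print the current values of the three inotify limits discussed above.
limits=$(
  for key in max_user_watches max_user_instances max_queued_events; do
    printf 'fs.inotify.%s = %s\n' "$key" "$(cat "/proc/sys/fs/inotify/$key")"
  done
)
echo "$limits"
```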

How did you do that?

Where in the TrueNAS UI do you set this?

System > Advanced > Sysctl

Add the variable and the value. The three values above are the ones I currently have increased, but I think there's another value NOT mentioned above that 'resolved' the issues: I was able to do anything and the UI was always as fast as it should be, but after a reboot it's not.

May do some searching again and try some others, but I’m 99% sure the issue is a sysctl value set too low.

EDIT: Whoops, I see you were responding to SnowReborn. Still getting used to the new forum. I’ll leave this up anyway.

I just increased `net.core.somaxconn` from 4096 to 16384 and, at the moment, the web UI is snappy again. I decided to try this because of these errors in the logs:

May 6 13:58:21 truenas middlewared[3182236]: raise ClientException('Failed connection handshake')

May 6 13:58:21 truenas middlewared[3182236]: middlewared.client.client.ClientException: Failed connection handshake

At the same time as the above change, I also disabled swap since I have more than plenty of RAM. Not sure which of the two helped, but I’m leaning towards the sysctl variable.
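For anyone who wants to check the current `net.core.somaxconn` value, or try the change from a shell instead of the UI, a minimal sketch follows. The runtime `sysctl -w` line is commented out because it needs root and does not survive a reboot; the System > Advanced > Sysctl entry is the persistent route. Whether somaxconn is actually behind the "Failed connection handshake" errors is the poster's hypothesis, and this snippet does not confirm it.

```shell
# Read the current cap on the listen() backlog for sockets.
somaxconn=$(cat /proc/sys/net/core/somaxconn)
echo "net.core.somaxconn = $somaxconn"

# Runtime change used in this post (root required, not persistent):
# sysctl -w net.core.somaxconn=16384
```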

Increasing fs.inotify.max_user_instances seemed to help when I was unable to start systemd-nspawn jails/containers. I have 38 ‘apps’ that run. I believe they exhausted the default limits?

Increasing fs.inotify.max_user_watches seemed to help with slowdown while I was running rsync on a huge number of files.

Time will tell if any of this is an actual fix, but so far it seems to help.

I thought I wasn't using swap because TrueNAS reported loads of free memory; however, that isn't the case.

My issue was that the system was 'thrashing', moving pages back and forth between memory and swap, which caused extremely high I/O times.
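One way to confirm that kind of thrashing is to watch the kernel's cumulative swap counters (`pswpin`/`pswpout` in `/proc/vmstat`, counted in pages since boot) over a short interval. A rough sketch; the 5-second interval is an arbitrary choice, and a sustained non-zero rate while the UI reports free memory would be consistent with the behavior described above.

```shell
# Sample the swap-in/swap-out page counters twice and report the average rate.
interval=5

swap_counter() {
  # Print the named /proc/vmstat counter, or 0 if the kernel lacks it.
  awk -v key="$1" '$1 == key {v = $2} END {print (v == "" ? 0 : v)}' /proc/vmstat
}

in_before=$(swap_counter pswpin)
out_before=$(swap_counter pswpout)
sleep "$interval"
in_after=$(swap_counter pswpin)
out_after=$(swap_counter pswpout)

swapin_rate=$(( (in_after - in_before) / interval ))
swapout_rate=$(( (out_after - out_before) / interval ))
echo "pages/s swapped in: $swapin_rate, swapped out: $swapout_rate"
```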

The simple fix is to disable swap: `swapoff -a`

Then add this to TrueNAS as a post-init script. I never had even a hint of a slowdown after that.
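Since the free-memory figure can hide swap use, it may be worth checking how much swap is allocated and actually in use before adding `swapoff -a`, and again after a reboot to confirm the post-init command took effect. A minimal sketch reading `/proc/meminfo`; `swapoff -a` itself needs root and can fail if there isn't enough free RAM to take back the swapped-out pages.

```shell
# Report total and in-use swap; /proc/meminfo values are in kB.
swap_total_kb=$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)
swap_free_kb=$(awk '/^SwapFree:/ {print $2}' /proc/meminfo)
swap_used_kb=$(( swap_total_kb - swap_free_kb ))
echo "swap total: $(( swap_total_kb / 1024 )) MiB, in use: $(( swap_used_kb / 1024 )) MiB"

# After `swapoff -a` has run (as root, e.g. as a post-init command),
# SwapTotal should read 0.
```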

Thanks for the info.

Yeah, I noticed a while back that, despite having 512GB of RAM, only 30-40GB allocated by services, and always 30-40GB "free", swap was being used. Over a long enough time, the entire 10GB of swap would be in use. It made me scratch my head, but I didn't expect it to be an issue.

My use case has a ton of open network connections, a lot of containers running, and a large number of files being accessed simultaneously. Increasing the sysctl variables really seemed to have an impact, which makes me wonder whether some of the defaults are perfectly fine for a desktop or single-service server but not for a situation where a lot of files are being accessed by a lot of services and users. The `max_user_instances` default of 128 was definitely an issue for the number of containers. The `max_user_watches` default seemed to be an issue when a lot of files are accessed (rsync, remote peers). `net.core.somaxconn` seemed to help with the number of connections…

Is it possible these values are only ‘too low’ because swap/memory issues are causing delays in releasing the above resources and is allowing them to become exhausted?

So far, with the above values increased and swap disabled, TrueNAS has been SNAPPY for the past day or two no matter what I throw at it. As a test, tonight I will revert the modified sysctl values, reboot, and disable swap. If the UI remains responsive for a few days, I'll feel confident that swap is the only issue.

Please let us know how that goes. My theory is that having swap enabled is triggering these bad behaviors. We don't use swap on our enterprise side, and I even personally disable it as well (because swap is evil in general). We are wondering if that is the variable triggering this for most people.

I just reverted all my sysctl changes and rebooted with `swapoff -a` in the post-init commands. It seems fine so far, but issues usually take some time to crop up, maybe once the ARC is filled. Will update tomorrow.

Great, thank you for being willing to help test!

Ran into the first issue:

The 'Apps' page keeps showing 'No applications installed' even after waiting a minute or so for it to finish loading, although, glancing at the processes in htop, some or all of the 38 containers seem to load. I'm also unable to start my systemd-nspawn/jailmaker container:

May 07 21:31:13 truenas systemd-nspawn[94773]: systemd 252.22-1~deb12u1 running in system mode (+PAM +AUDIT +SELINUX +APPARMOR +IMA +SMACK +SECCOMP +GCRYPT -GNUTLS +OPENSSL +ACL +BLKID +CURL +ELFUTILS +FIDO2>

May 07 21:31:13 truenas systemd-nspawn[94773]: Detected virtualization systemd-nspawn.

May 07 21:31:13 truenas systemd-nspawn[94773]: Detected architecture x86-64.

May 07 21:31:13 truenas systemd-nspawn[94773]:

May 07 21:31:13 truenas systemd-nspawn[94773]: Welcome to Debian GNU/Linux 12 (bookworm)!

May 07 21:31:13 truenas systemd-nspawn[94773]:

May 07 21:31:13 truenas systemd-nspawn[94773]: Failed to create control group inotify object: Too many open files

May 07 21:31:13 truenas systemd-nspawn[94773]: Failed to allocate manager object: Too many open files

May 07 21:31:13 truenas systemd-nspawn[94773]: [!!!] Failed to allocate manager object.

May 07 21:31:13 truenas systemd-nspawn[94773]: Exiting PID 1…

May 07 21:31:13 truenas systemd[1]: jlmkr-my.service: Main process exited, code=exited, status=255/EXCEPTION

May 07 21:31:13 truenas systemd[1]: jlmkr-my.service: Failed with result 'exit-code'.
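To see which processes are holding the inotify instances behind a "Too many open files" error like the one above, one option is to count the file descriptors whose symlink target is `anon_inode:inotify`. A rough sketch, assuming GNU find (for `-lname`) and root access so that other users' processes are visible; `fs.inotify.max_user_instances` is enforced per user, so this per-process tally only approximates what counts against the limit.

```shell
# Count inotify instances per PID, busiest first.
inotify_usage=$(find /proc/[0-9]*/fd -lname 'anon_inode:inotify' 2>/dev/null \
  | cut -d/ -f3 | sort | uniq -c | sort -rn)
total=$(printf '%s\n' "$inotify_usage" | awk '{s += $1} END {print s + 0}')
echo "inotify instances visible: $total"

# Show the top 10 holders with their process names.
printf '%s\n' "$inotify_usage" | head -n 10 | while read -r count pid; do
  [ -n "$pid" ] || continue
  printf '%6s  %-8s %s\n' "$count" "$pid" "$(cat "/proc/$pid/comm" 2>/dev/null)"
done
```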

I set the following and was then able to start the container:

sysctl -w fs.inotify.max_user_instances=1024

However, the 'Apps' page was still showing 'No applications installed', and the dashboard wouldn't load. I'm not sure which k3s service is the right one to restart, and I didn't want to unset/set the application pool, so I just rebooted.

Critical: Failed to start kubernetes cluster for Applications: (-1, 'dump interrupted')

Hm. Strangely, at least some of the apps are running. After a second reboot the apps came up properly, and it seems fine. I don't think the above change has anything to do with the UI slowdown.

Why disable swap outright? Why not set the “swappiness” to the lowest possible level, so as to have a safety net to prevent an OOM or system halt issue? (In other words, swap never gets used, except for situations where the system would have run out of physical memory.)

Especially if people are using Apps, VMs, and lots of cached data in the ARC.
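For anyone who wants to try the swappiness route instead, a minimal sketch for checking the current value follows. The runtime change is commented out (root required, not persistent; the System > Advanced > Sysctl page would be the persistent route). The value 0 is the lowest the kernel accepts, but it does not strictly forbid swapping, and its exact behavior has changed across kernel versions, so treating it as the "safety net only" setting described above is an assumption.

```shell
# Read the current vm.swappiness value.
swappiness=$(cat /proc/sys/vm/swappiness)
echo "vm.swappiness = $swappiness"

# Runtime change to the lowest accepted value (root required, not persistent):
# sysctl -w vm.swappiness=0
```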

Disabling swap has helped with my UI responsiveness. I also noticed that my boot drive was getting hit a lot harder than I expected, and that was caused by swap.

What is writing to my boot drive? - TrueNAS General - TrueNAS Community Forums

Dragonfish-24.04.0

I think this is potentially a better approach. I guess turning off swap altogether is a legitimate option if you have enough RAM that the ARC gets evicted before an OOM occurs, but changing swappiness, assuming it works, is probably better suited for most people.

So far, so good. I don’t think disabling swap is a ‘solution’ since TrueNAS should perform with or without it, but it seems to be a good mitigation for the time being.

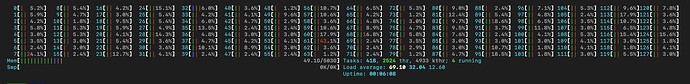

- 49GB services
- 428GB ARC
- 26GB free
- 0GB swap

Changes:

- `swapoff -a`
- `fs.inotify.max_user_instances=1024`

Good to hear! We are having the internal debate about swap right now. It's created and used on the system for some historical reasons that don't have as much relevance for TrueNAS today. We'll have a fix in 24.04.1 in a few weeks either way, but in the meantime, `swapoff -a` is the workaround for anybody who runs into these issues.

For what it's worth, I'm personally in the "swap is evil" camp. I mean, on a laptop? Sure, there is some use case. But on anything that looks like a server, it tends to cause more problems than it solves, and it introduces somewhat undefined new behaviors in the process, which are a total pain to troubleshoot.
