And the stats from the finish:

I migrated from DSM some time ago, and it’s not the same thing. DSM is really more stable (I mean the OS, not file-system integrity) and doesn’t need you to tune parameters. Here, a simple parameter change sped up a (really long) task by 4x, but the parameter is not in the GUI: you have to use a shell and the command line. And even with the new expansion feature (adding a drive), ZFS is not as good as SHR for extending your volume.

There’s also the need to understand how ZFS works to create a good disk topology: I didn’t realise the importance of a SLOG at the beginning (ZFS ZIL and SLOG | TrueNAS Documentation Hub).

But ZFS is so much better for data integrity that it’s worth the move!

Yes, some other NAS products are more mature, and may already have their tuning applied. But the converse may also be true: as a mature product, it may not get much attention for optimizations, because extensive testing would be needed for quality control.

In the case of ZFS RAID-Zx expansion, we are learning what makes the process faster. There is some talk about including the raidz_expand_max_copy_bytes tuning in the base OpenZFS release. That is one nice thing about open source software: as better approaches are found, things can be changed, or changed faster.
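For anyone who wants to try the raidz_expand_max_copy_bytes tuning from a script rather than by hand, here is a minimal Python sketch. It assumes Linux (TrueNAS SCALE / Community Edition), where OpenZFS exposes its module parameters as files under /sys/module/zfs/parameters/; writing requires root and the change does not survive a reboot. The helper names `read_tunable` and `write_tunable` are my own, not a TrueNAS or OpenZFS API.

```python
from pathlib import Path

# Assumption: Linux OpenZFS publishes module parameters in this directory.
PARAM_DIR = Path("/sys/module/zfs/parameters")
TUNABLE = "raidz_expand_max_copy_bytes"


def read_tunable(name: str = TUNABLE, param_dir: Path = PARAM_DIR) -> int:
    """Return the current integer value of a ZFS module parameter."""
    return int((param_dir / name).read_text().strip())


def write_tunable(value: int, name: str = TUNABLE, param_dir: Path = PARAM_DIR) -> int:
    """Write a new value (needs root) and return what the parameter reads back as."""
    if value <= 0:
        raise ValueError("value must be a positive number of bytes")
    (param_dir / name).write_text(f"{value}\n")
    return read_tunable(name, param_dir)
```

Usage, as root: `print(read_tunable())` to see the current value, then `write_tunable(new_value)` with whatever value you are testing; it only makes sense to compare expansion speed before and after on your own hardware, since no value here is a recommendation.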

As for pool topology and new users, HexOS is in some ways designed for that type of turnkey usage, whereas TrueNAS CORE and SCALE are designed for enterprise users. Experienced NAS and/or ZFS users will have an idea of what they want, like 1 or 2 disks’ worth of redundancy, and the costs and risks of those choices.

In general, the average SOHO user does not need:

- Hot Spare

- dRAID

- L2ARC

- SLOG

- RAID-Z3

- Special Allocation devices

- Mirrored boot device

To be clear, I am not saying ALL SOHO / free users won’t want or need any of those. It is just that over the years I have seen many new users want to use them without any specific need. Or worse, with a poor understanding of what they do (SLOG is not a write cache!). Like trying to extend 8 GB of RAM with a 1 TB L2ARC. Really?

I’ve been using Synology since 2011/2012 (I started with a DS1511+), and IIRC in DSM 5 you did need to tune a parameter with a CLI command to significantly improve the resilvering/expansion priority. Granted, by now this setting is available in simplified form in the UI (again IIRC, I haven’t used this in a long time), but that took some years.

I will grant you, though, that Synology managed to hide the complexities under the hood a bit more successfully than TrueNAS, so for the casual (non-techie) user it might be the easier NAS OS to handle. But now we have HexOS, which attempts to do the same with TrueNAS as the foundation, and I hope they will succeed, though they have a long way to go.

I also agree with you that SHR (Synology Hybrid RAID) is a great feature, which lets you use mixed-size HDDs more effectively in a pool, and that’s something home and SOHO users can really appreciate. I never cared about any of the Synology apps myself, but I like the flexibility and safety that the combination of SHR and BTRFS provides. (From what I’ve heard, Synology has added some proprietary code to BTRFS to mitigate some of its weaknesses, e.g. the RAID write hole.)

So with differences like this (not to mention that going from raidzN to raidzN+1 isn’t possible (yet?) in OpenZFS), the difference in target market segments becomes obvious. Yet even though I’m not a customer iXsystems will sell anything to in the foreseeable future (though I did take a long look at the TrueNAS Mini R), I appreciate that they have opened up their software to the community for free. Synology, by contrast, seems to get more eager every year to lock down their hardware and push users to buy their rebranded products, such as memory, NICs and HDDs. So kudos to iXsystems for not falling to the dark side!

I hope that - over time - OpenZFS will get some of those QoL features like parity upgrade (e.g. from raidz1 to raidz2) and also a built-in option to “rebalance” the parity blocks after a raidz expansion (though I hear that a simple recursive copy script will do the trick).
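Here is a minimal Python sketch of what such a "recursive copy" rebalance can look like: each regular file is copied to a temporary name in the same directory, the copy is verified byte for byte, and then it is atomically renamed over the original, so the data is rewritten with the new data-to-parity ratio. Assumptions and caveats: it copies with plain read()/write() rather than a kernel copy offload, on the assumption that offloaded copies may be block-cloned on newer OpenZFS and therefore not physically rewritten; blocks still referenced by snapshots are not freed or relaid; file ownership is not preserved (only mode, timestamps and, on Linux, xattrs via `shutil.copystat`); hardlinked files are skipped; and you need free space for the largest file. `rebalance_tree` is a hypothetical helper, not an existing tool.

```python
import filecmp
import os
import shutil
from pathlib import Path

CHUNK = 1 << 20  # 1 MiB read/write buffer


def _rewrite_copy(src: Path, dst: Path) -> None:
    """Copy with plain read()/write() so every block is written anew."""
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        while chunk := fin.read(CHUNK):
            fout.write(chunk)
        fout.flush()
        os.fsync(fout.fileno())
    shutil.copystat(src, dst)  # mode, timestamps (and xattrs on Linux), not owner


def rebalance_tree(root: str) -> int:
    """Rewrite every regular file under root in place; return how many were rewritten."""
    rewritten = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            if path.is_symlink() or not path.is_file():
                continue
            if path.stat().st_nlink > 1:
                continue  # replacing a hardlinked file would split the links
            tmp = path.with_name(path.name + ".rebalance.tmp")
            _rewrite_copy(path, tmp)
            if not filecmp.cmp(path, tmp, shallow=False):  # byte-for-byte check
                tmp.unlink()
                raise RuntimeError(f"verification failed for {path}")
            os.replace(tmp, path)  # atomic rename over the original
            rewritten += 1
    return rewritten
```

Because the rewrite only has to happen once the layout is final, a run like this fits naturally after the last of several single-disk expansions rather than after each one.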

Don’t take it the wrong way: in the end I did say that it is worth the move from DSM!

I just wanted to share my migration experience.

And I am so grateful that it is free and open source!

But there’s one point on which I don’t agree with you: that SOHO users don’t need a SLOG (when using HDDs for the data, not SSDs of course). On my first install I was very disappointed by the write speed, and I wasn’t willing to use async I/O, as integrity is important to me. A SLOG is not a cache and it does not increase the vdev’s overall throughput, but for some uses, like mine, it brings the same benefit as a cache: I can copy files nearly 8 times faster. Let’s call it “a mechanism to ensure RAM cache integrity”. So for a SOHO user it really is a great bonus for a small price.
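One way to check whether a SLOG would help a given workload is to compare sync and async write times on the pool. Below is a minimal Python sketch, assuming you point it at a directory on the dataset in question: with `sync=True` every write is followed by `os.fsync()`, which on ZFS makes the write wait until the ZIL is on stable storage (the SLOG, if one exists), while with `sync=False` the write only has to reach RAM before returning. The dataset’s own `sync` property (e.g. `sync=disabled` or `sync=always`) changes this behaviour, and `time_writes` is a hypothetical helper, not a benchmark tool.

```python
import os
import tempfile
import time


def time_writes(directory: str, count: int = 200, size: int = 4096, sync: bool = True) -> float:
    """Write `count` blocks of `size` bytes into a temp file; return elapsed seconds.

    sync=True calls os.fsync() after every write, forcing each one to stable
    storage before the next; sync=False lets the data sit in RAM until ZFS
    commits the next transaction group.
    """
    payload = os.urandom(size)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        for _ in range(count):
            os.write(fd, payload)
            if sync:
                os.fsync(fd)
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
        os.unlink(path)  # leave no test files behind
    return elapsed
```

Running it once with `sync=True` and once with `sync=False` against an HDD pool, and again after adding a SLOG, gives a rough idea of how much of the sync penalty a SLOG removes on your hardware.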

Same here regarding Synology: I’m using the DS1812+ I migrated from as a backup server for my TrueNAS.

HexOS: not free, and maybe partially open source (the front end). I’m too old for this sh**. If I had enough money, I would buy iXsystems hardware/support.

As for “raidzn to raidzn+1”, I don’t know if it is technically possible to implement, but if it is, let’s hope that iXsystems is interested. (I understand that iXsystems sponsored part of the expansion work: raidz expansion feature by don-brady · Pull Request #15022 · openzfs/zfs · GitHub.)

Yes! Really thank you iXsystems!

To be clear, I do like TrueNAS Community Edition (the new name is really too long, we must find something shorter, FreeNAS maybe?), and for me it’s the best solution. But since you wrote “TrueNAS noob” in your signature, I just wanted to share my experience moving from DSM to TrueNAS.


Yes, my DS1819+ will also be the backup server, once my migration to TrueNAS is finished.

I briefly looked into HexOS and it’s definitely not for me either. They don’t even offer 2-disk redundancy in their UI at the moment. But as a contender to DSM, HexOS might have a chance in the future, perhaps more so if a company packaged HexOS with its own hardware and offered an integrated solution to non-techie users. Or perhaps HexOS is trying to position itself against Unraid? We shall see…

I wouldn’t blame them if they weren’t interested, but now that single RAIDZ VDEV expansion is a thing, perhaps some in their customer base with smaller systems would want larger VDEVs instead of adding another VDEV. And I could see the desire to increase the RAID level coming with increasing VDEV size (though likely from raidz2 to raidz3). Just some random guesses and musings on my part…

And I very much appreciate you sharing your experience, because as a newbie it helps to have expectations calibrated. I did the same a couple of years ago on the Synology reddit board, when I wrote a purchasing guide for Synology NAS models. And now that I’m on the receiving end of knowledge sharing, I’m very grateful for all experiences and insights shared, which will make the transition so much smoother for me.

One of these things is not like the other.

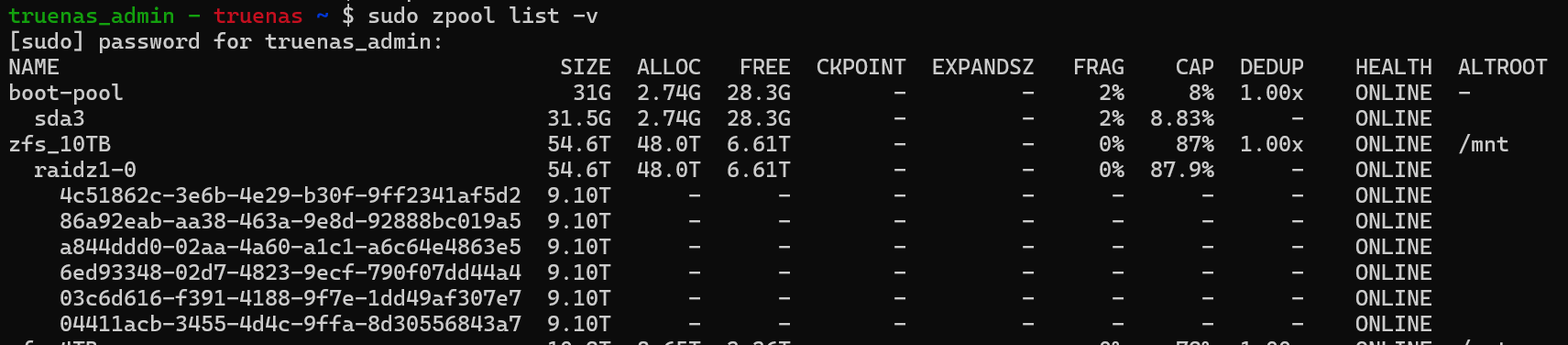

zpool list -v shows 54.6TB

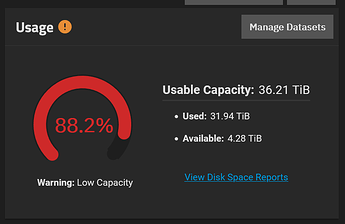

Dashboard shows usable capacity is 36.21TB
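Part of any gap between these two numbers is expected: `zpool list` reports the raw size of a RAIDZ vdev with parity included, whereas the dashboard reports usable space after parity. The Python sketch below gives a rough cross-check under stated assumptions: a single RAIDZ vdev, no allocation padding, metadata or slop space. As I understand it, after a RAIDZ expansion OpenZFS keeps estimating usable space with the pre-expansion data-to-parity ratio, so the second call shows how much lower that estimate can be. The pool figures in the example are hypothetical, not the ones shown above.

```python
def usable_estimate(raw_tb: float, width: int, parity: int) -> float:
    """Rough usable space of one RAIDZ vdev: raw * (width - parity) / width.

    raw_tb: raw size as shown by `zpool list` (parity included).
    width:  number of disks in the RAIDZ vdev.
    parity: 1, 2 or 3 for RAIDZ1/2/3.
    Ignores allocation padding, metadata and the pool's reserved slop space,
    so the real figure comes out somewhat lower.
    """
    if parity not in (1, 2, 3) or width <= parity:
        raise ValueError("parity must be 1-3 and width must exceed parity")
    return raw_tb * (width - parity) / width


# Hypothetical RAIDZ1 vdev grown from 4 to 6 disks, 60 TB raw after expansion:
print(usable_estimate(60, 6, 1))  # 50.0 (new 5:1 ratio)
print(usable_estimate(60, 4, 1))  # 45.0 (pre-expansion 3:1 ratio)
```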

I’ve done a few single VDEV expansions on this pool now:

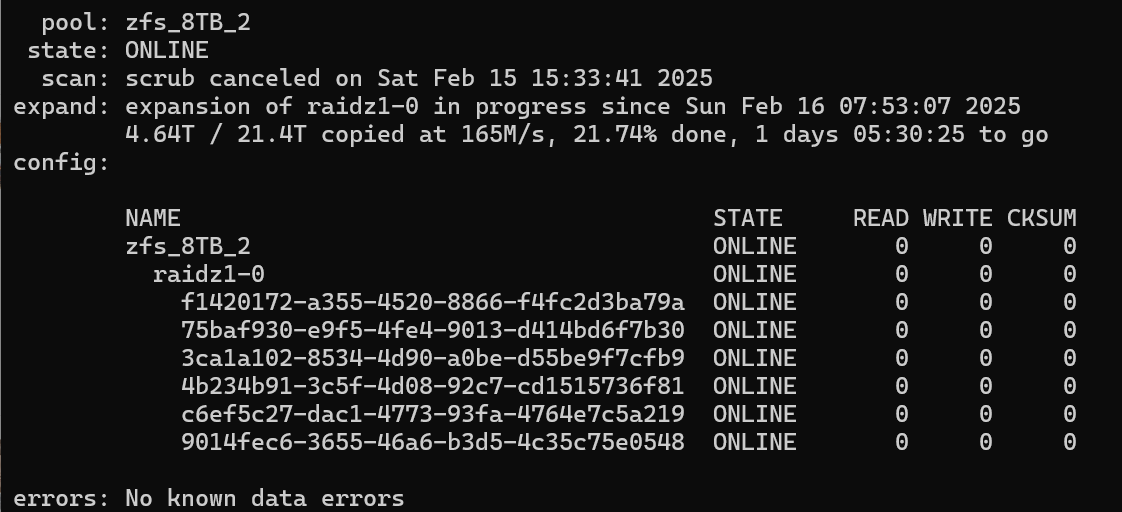

I’m 58% through running a rebalance on the pool:

and I’m seeing ALLOC/FREE and CAP change slowly, but no change to this:

BTW: once I finish ZFS expansion-a-palooza 2025, I’ll get all my pools back under 80%.

Yeah, that’s one of the “is it a bug? Is it a feature?” questions that arose last August.

My question is… Do you have to (or need to) rebalance after every single drive added? Or could I, say, add three more drives (one at a time) and then rebalance once after all of them have been added?

You can do it once at the end.

I’ve seen some discussion (especially over at OpenZFS) about the different answers you can get from ZFS regarding free space calculations, but this seems like a pretty straightforward discrepancy between the widget/dashboard display and the most basic of zpool commands.

Am I missing something @Captain_Morgan?

I noticed it’s referenced here: Jira

Thanks @Stux I hadn’t seen that thread - will watch it for any updates

My first 2 expansions in BETA 1: the first expansion ran very fast (zfs_8TB, ~350 MB/s), but the second expansion (zfs_8TB_2) is running very slowly, and raidz_expand_max_copy_bytes has no effect.

No differences from the other drives/pools that I have expanded; it’s an HGST SAS drive.

Were these two expansions happening at the same time?

It’s not something we have tested or recommended…

Nope, I started the zfs_8TB_2 (slow) one after the other had completed.

I just noticed that ever since I paused the scrub on zfs_8TB (the fast expansion) things are running a bit faster on zfs_8TB_2:

It’s a good observation… perhaps we should automate the scrub pause.

Please make a “suggestion” with any data on speed changes.

Actually, stopping any in-progress Scrub and preventing new Scrubs from starting should be standard for RAID-Zx expansions. After all, a Scrub is performed immediately after the expansion completes, because the re-columned data did not have its checksums verified.

Stopping an in-progress Scrub on a pool about to be RAID-Zx expanded should probably be a prompt in the GUI, something like:

There is a Scrub in progress, starting RAID-Zx expansion would stop it.

Okay?

And of course, it would be only for a pool being expanded. Unrelated pools can start and continue their Scrubs without interference.
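The proposed flow could be sketched roughly like this in Python. It is a hypothetical illustration, not how TrueNAS implements or plans to implement it: it checks `zpool status <pool>` for the text "scrub in progress" (an assumption about the output format, and fragile), asks for confirmation with the prompt above, stops the scrub on that pool only with `zpool scrub -s` (`-p` would pause it instead), and then starts the expansion with `zpool attach <pool> <raidz-vdev> <new-disk>`. Preventing new Scrubs from starting during the expansion is not covered. The command runner is injectable so the logic can be tested without touching a real pool; `scrub_in_progress` and `expand_raidz` are made-up names.

```python
import subprocess
from typing import Callable, List

Runner = Callable[[List[str]], str]
PROMPT = "There is a Scrub in progress, starting RAID-Zx expansion would stop it. Okay?"


def run(cmd: List[str]) -> str:
    """Run a command and return its stdout; raises CalledProcessError on failure."""
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


def scrub_in_progress(pool: str, runner: Runner = run) -> bool:
    """True if `zpool status <pool>` reports a running scrub (text match, assumption)."""
    return "scrub in progress" in runner(["zpool", "status", pool])


def expand_raidz(pool: str, raidz_vdev: str, new_disk: str,
                 confirm: Callable[[str], bool], runner: Runner = run) -> bool:
    """Stop a scrub on this pool only (after confirmation), then start the expansion.

    Returns False if the user declines; other pools are never touched.
    """
    if scrub_in_progress(pool, runner):
        if not confirm(PROMPT):
            return False
        runner(["zpool", "scrub", "-s", pool])  # stop; the expansion is scrubbed afterwards anyway
    runner(["zpool", "attach", pool, raidz_vdev, new_disk])
    return True
```

For example, `expand_raidz("tank", "raidz1-0", "/dev/sdx", confirm=lambda msg: input(msg + " [y/N] ").lower() == "y")`, where the pool, vdev and device names are placeholders.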

Edit: Looks like a Feature Request to stop Scrubs during the expansion is already part of this request: