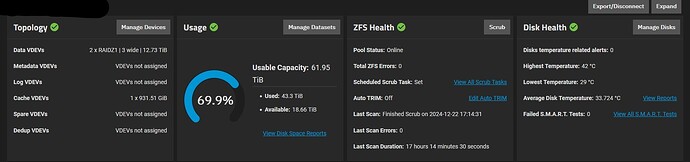

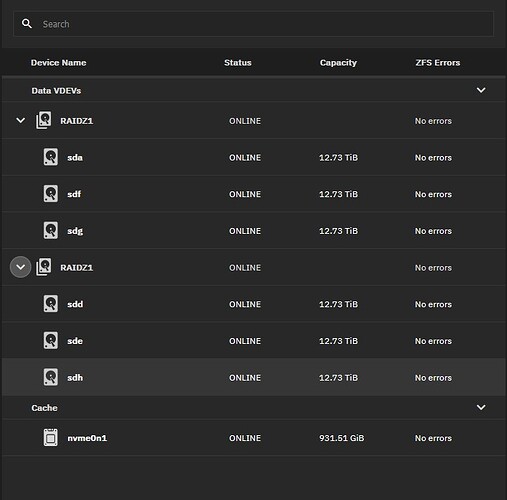

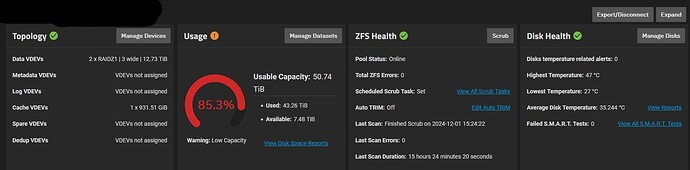

Hi, I have a question I have been unable to solve. I have two servers running identical drives set up in identical vdevs, but one server shows less usable space than the other. The servers are the same model with slightly different processors and RAM, but the main hardware is identical. I want to get larger drives for my main server and then use the drives I replace to expand the storage on the backup machine. The backup machine is the one showing less usable space, so I would like to solve this before I replace my main server's drives, as I want a healthy backup before I start that process. Any suggestions would be greatly appreciated.

Merry Christmas! Have you, by any chance, set a quota on the top-level dataset that exists on the backup system but not on the primary, or that differs between the two? It's an easy thing to check and the first thing that springs to mind.


I checked and found no quotas set. Everything I have looked at is identical.

What is the output of the following commands on each server?

```
zpool status <poolname>
zpool list -v <poolname>
zfs list -o space <poolname>
```

Please post the output as preformatted text, using the button that looks like </> on the composer's menu bar.

You can censor any pool names or other sensitive information before posting it in here.

Main

```
admin@TrueNasMain[~]$ sudo zpool status #######
  pool: #######
 state: ONLINE
  scan: scrub repaired 0B in 17:14:30 with 0 errors on Sun Dec 22 17:14:31 2024
config:

        NAME                                      STATE     READ WRITE CKSUM
        #######                                   ONLINE       0     0     0
          raidz1-0                                ONLINE       0     0     0
            491c484e-bc74-4b9d-b7af-702c7017e973  ONLINE       0     0     0
            9af2fc70-abed-4cf9-86ad-4b7d73f48ead  ONLINE       0     0     0
            91421d2c-fb7b-45b6-9412-af7cd849ba96  ONLINE       0     0     0
          raidz1-1                                ONLINE       0     0     0
            11aaa26a-8a57-4edd-a987-bc2fb7378a6b  ONLINE       0     0     0
            47eaa808-2f3b-4394-978d-142a0151a176  ONLINE       0     0     0
            64c9b76b-a4d9-4c9d-a4d8-a4d09c760f85  ONLINE       0     0     0
        cache
          nvme0n1p1                               ONLINE       0     0     0

errors: No known data errors

admin@TrueNasMain[~]$ sudo zpool list -v #######
NAME                                       SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ  FRAG     CAP  DEDUP  HEALTH  ALTROOT
#######                                   76.4T  48.2T  28.2T        -         -    2%     63%  1.00x  ONLINE  /mnt
  raidz1-0                                38.2T  24.2T  14.0T        -         -    2%   63.3%      -  ONLINE
    491c484e-bc74-4b9d-b7af-702c7017e973  12.7T      -      -        -         -     -       -      -  ONLINE
    9af2fc70-abed-4cf9-86ad-4b7d73f48ead  12.7T      -      -        -         -     -       -      -  ONLINE
    91421d2c-fb7b-45b6-9412-af7cd849ba96  12.7T      -      -        -         -     -       -      -  ONLINE
  raidz1-1                                38.2T  24.0T  14.2T        -         -    2%   62.9%      -  ONLINE
    11aaa26a-8a57-4edd-a987-bc2fb7378a6b  12.7T      -      -        -         -     -       -      -  ONLINE
    47eaa808-2f3b-4394-978d-142a0151a176  12.7T      -      -        -         -     -       -      -  ONLINE
    64c9b76b-a4d9-4c9d-a4d8-a4d09c760f85  12.7T      -      -        -         -     -       -      -  ONLINE
cache                                         -      -      -        -         -     -       -      -       -
  nvme0n1p1                                932G   931G   114M        -         -    0%  100.0%      -  ONLINE

admin@TrueNasMain[~]$ sudo zfs list -o space #######
NAME     AVAIL   USED  USEDSNAP  USEDDS  USEDREFRESERV  USEDCHILD
#######  18.6T  43.3T        0B    149K             0B      43.3T
```

Backup

```
admin@TrueNasBackup[~]$ sudo zpool status ######
  pool: ######
 state: ONLINE
  scan: scrub repaired 0B in 15:24:20 with 0 errors on Sun Dec 1 15:24:22 2024
config:

        NAME                                      STATE     READ WRITE CKSUM
        ######                                    ONLINE       0     0     0
          raidz1-0                                ONLINE       0     0     0
            3d8f5b7a-1fde-449f-8258-fdf25795d0f9  ONLINE       0     0     0
            6f408671-d8b6-4b68-a912-35bce60a2023  ONLINE       0     0     0
            e5211473-8d07-45fa-8fb6-32a25deb3182  ONLINE       0     0     0
          raidz1-1                                ONLINE       0     0     0
            2eaff45f-3c18-41bd-8ba0-a67864809f07  ONLINE       0     0     0
            23d09873-ef14-40a9-afde-3d36d6a01432  ONLINE       0     0     0
            3e1bcd29-07ee-4eb6-9da8-dd22d67bedd1  ONLINE       0     0     0
        cache
          9ad84128-2084-422e-8689-34c17517db39    ONLINE       0     0     0

errors: No known data errors

admin@TrueNasBackup[~]$ sudo zpool list -v ######
NAME                                       SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ  FRAG     CAP  DEDUP  HEALTH  ALTROOT
######                                    76.4T  65.0T  11.4T        -         -    0%     85%  1.00x  ONLINE  /mnt
  raidz1-0                                38.2T  32.7T  5.51T        -         -    0%   85.6%      -  ONLINE
    3d8f5b7a-1fde-449f-8258-fdf25795d0f9  12.7T      -      -        -         -     -       -      -  ONLINE
    6f408671-d8b6-4b68-a912-35bce60a2023  12.7T      -      -        -         -     -       -      -  ONLINE
    e5211473-8d07-45fa-8fb6-32a25deb3182  12.7T      -      -        -         -     -       -      -  ONLINE
  raidz1-1                                38.2T  32.3T  5.90T        -         -    0%   84.5%      -  ONLINE
    2eaff45f-3c18-41bd-8ba0-a67864809f07  12.7T      -      -        -         -     -       -      -  ONLINE
    23d09873-ef14-40a9-afde-3d36d6a01432  12.7T      -      -        -         -     -       -      -  ONLINE
    3e1bcd29-07ee-4eb6-9da8-dd22d67bedd1  12.7T      -      -        -         -     -       -      -  ONLINE
cache                                         -      -      -        -         -     -       -      -       -
  9ad84128-2084-422e-8689-34c17517db39     932G   931G   111M        -         -    0%  100.0%      -  ONLINE

admin@TrueNasBackup[~]$ sudo zfs list -o space ######
NAME    AVAIL   USED  USEDSNAP  USEDDS  USEDREFRESERV  USEDCHILD
######  7.47T  43.3T        0B    128K             0B      43.3T
```

Perhaps snapshots?

I beg of you, please: use preformatted text for any and all code posted on the forums.

It is hard to read otherwise.

Sorry, still figuring this out.

There’s definitely an 11-TiB discrepancy between the two pools.
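The gap can be checked with some quick arithmetic on the figures posted above. This is a rough sketch that assumes each vdev is a 3-wide RAIDZ1, so roughly two of every three allocated sectors hold data; RAIDZ padding and metadata make the real ratio a little worse. ZFS prints binary units, so "T" here means TiB.

```python
# Figures copied from the `zpool list -v` and `zfs list -o space` output above (TiB).
main = {"avail": 18.6, "alloc": 48.2}
backup = {"avail": 7.47, "alloc": 65.0}

# Assumption: every vdev is a 3-wide RAIDZ1, so roughly 2 of every 3 allocated
# sectors hold data. RAIDZ padding and metadata make the true ratio a bit lower.
DATA_FRACTION = 2 / 3

avail_gap = main["avail"] - backup["avail"]        # usable space, as ZFS reports it
raw_alloc_gap = backup["alloc"] - main["alloc"]    # raw space, parity included
data_alloc_gap = raw_alloc_gap * DATA_FRACTION     # raw gap with parity removed

print(f"AVAIL gap:                 {avail_gap:.2f} TiB")
print(f"Raw ALLOC gap:             {raw_alloc_gap:.2f} TiB")
print(f"ALLOC gap, parity removed: {data_alloc_gap:.2f} TiB")
```

Both routes come out at roughly 11.1 to 11.2 TiB. Since the backup's ALLOC is higher for the same 43.3T of USED, the backup pool is physically storing more blocks, rather than losing capacity to something like a quota or reservation.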

Identical vdevs, indeed. No apparent deduplication used.

- With which version of SCALE (or CORE?) did you originally create these pools?

- Were the two vdevs (RAIDZ1 + RAIDZ1) originally created as they exist now, as 3-wide RAIDZ1 vdevs?

- Is one pool a backup target of the other?

Some more information that can help out, from both servers:

```
zpool list -o bcloneused,bclonesaved,bcloneratio <poolname>
zfs list -r -t filesystem -o usedds,usedsnap,usedrefreserv <poolname>
```

I wonder if one pool's vDevs were made with 512-byte sectors and the other with 4096-byte sectors.

Please supply the output of the following for each pool:

```
zpool get ashift
```

In theory, the individual vDevs in the same pool can have different ashift values, but I don't have the command to extract that information handy.

Not sure why it would make such a dramatic difference, unless the pool was full of small files. Perhaps in that case the overhead of writing just 2 columns of a 3-column RAID-Z1 causes such waste.
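To put a number on the small-file theory, here is a sketch of how much raw space one block takes on a RAIDZ vdev. It is modeled on the rounding in OpenZFS's `vdev_raidz_asize()` (data sectors, plus parity for each stripe row, rounded up to a multiple of parity + 1); the function name `raidz_asize` and the block sizes in the loop are illustrative only, and compression, gang blocks and metadata are ignored.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division for positive integers."""
    return -(-a // b)


def raidz_asize(psize: int, ashift: int, width: int, nparity: int) -> int:
    """Raw bytes one block of `psize` bytes occupies on a RAIDZ vdev.

    Modeled on OpenZFS vdev_raidz_asize(): count data sectors, add `nparity`
    parity sectors per stripe row, then round the total up to a multiple of
    nparity + 1 (RAIDZ padding).
    """
    sector = 1 << ashift                       # ashift=12 -> 4096-byte sectors
    data = ceil_div(psize, sector)             # data sectors needed
    data_cols = width - nparity                # data sectors per stripe row
    parity = nparity * ceil_div(data, data_cols)
    total = ceil_div(data + parity, nparity + 1) * (nparity + 1)
    return total * sector


# 3-wide RAIDZ1 with 4K sectors, the layout of both pools in this thread.
for kib in (4, 8, 12, 128):
    size = kib * 1024
    alloc = raidz_asize(size, ashift=12, width=3, nparity=1)
    print(f"{kib:>4} KiB block -> {alloc // 1024:>4} KiB raw ({size / alloc:.0%} efficient)")

# The same 4 KiB block with 512-byte sectors (ashift=9), for comparison.
print(raidz_asize(4 * 1024, ashift=9, width=3, nparity=1) // 1024, "KiB raw at ashift=9")
```

Under these assumptions, 4 to 12 KiB blocks land at 50% efficiency instead of the ideal 2/3 for a 3-wide RAIDZ1, while 128 KiB blocks reach it, and a 4 KiB block at `ashift=9` needs only 6 KiB. As the `zpool get ashift` output later in the thread shows, though, both pools use `ashift=12`.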

Of course, I am reaching into fantasy land at this point. But the ashift information is easy to get, and getting it causes no harm.

Sorry for the delay, but here is the info that was requested.

Both were created from the same boot media, SCALE 22.12.3.1, and both are currently on SCALE 24.10.0. The RAIDZ vdevs were both set up identically and as they are now. I use Rsync Tasks to push to the second machine, which will eventually be housed in a separate building on my property once it's built.

```
admin@TrueNasMain[~]$ sudo zpool list -o bcloneused,bclonesaved,bcloneratio ######
BCLONE_USED  BCLONE_SAVED  BCLONE_RATIO
      7.97T         11.2T         2.40x

admin@TrueNasMain[~]$ sudo zfs list -r -t filesystem -o usedds,usedsnap,usedrefreserv RageNas
USEDDS  USEDSNAP  USEDREFRESERV
  149K        0B             0B
 1.47G        0B             0B
 55.7M        0B             0B
  128K        0B             0B
  128K        0B             0B
  139K        0B             0B
  677M        0B             0B
  139K        0B             0B
 68.0M        0B             0B
  522K      341K             0B
  128K        0B             0B
 32.2M        0B             0B
  128K        0B             0B
 30.3T        0B             0B
 22.0M        0B             0B
 13.0T        0B             0B
  160K        0B             0B
 2.15M        0B             0B
  128K        0B             0B
 1.16G        0B             0B
  159M        0B             0B

admin@TrueNasMain[~]$ sudo zpool get ashift
NAME       PROPERTY  VALUE  SOURCE
#######    ashift    12     local
boot-pool  ashift    12     local
```

```
admin@TrueNasBackup[~]$ sudo zpool list -o bcloneused,bclonesaved,bcloneratio ######
BCLONE_USED  BCLONE_SAVED  BCLONE_RATIO
          0             0         1.00x

admin@TrueNasBackup[~]$ sudo zfs list -r -t filesystem -o usedds,usedsnap,usedrefreserv Backup
USEDDS  USEDSNAP  USEDREFRESERV
  128K        0B             0B
 1.47G        0B             0B
 20.4M        0B             0B
  128K        0B             0B
  128K        0B             0B
  139K        0B             0B
  555M        0B             0B
  139K        0B             0B
 21.0M        0B             0B
  405K      778K             0B
  128K        0B             0B
 6.01M        0B             0B
  128K        0B             0B
 43.3T        0B             0B

admin@TrueNasBackup[~]$ sudo zpool get ashift
NAME       PROPERTY  VALUE  SOURCE
######     ashift    12     local
boot-pool  ashift    12     local
```

So the only difference I see is the bclone numbers: main is 2.40x and the backup is 1.00x. Would this be my difference, and how do I change it?

This explains it!

Since Rsync is “file-based”, it does not carry over the savings that you enjoyed from “block-based” ZFS block-cloning.
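For reference, the BCLONE_RATIO column can be reproduced from the other two. This sketch assumes OpenZFS computes it as (used + saved) × 100 / used in integer math, which matches the 2.40x shown; the inputs are the rounded TiB figures from the `zpool list` output rather than exact byte counts, and `bclone_ratio_percent` / `fmt_ratio` are illustrative names.

```python
def bclone_ratio_percent(used: float, saved: float) -> int:
    """BCLONE_RATIO as an integer percentage.

    Assumption: mirrors OpenZFS's integer math, (used + saved) * 100 / used,
    truncated. A pool with no cloned blocks reports 1.00x.
    """
    if used == 0:
        return 100
    return int((used + saved) * 100 / used)


def fmt_ratio(percent: int) -> str:
    # 240 -> "2.40x", 100 -> "1.00x"
    return f"{percent // 100}.{percent % 100:02d}x"


main_pct = bclone_ratio_percent(used=7.97, saved=11.2)   # main server (TiB)
backup_pct = bclone_ratio_percent(used=0, saved=0)       # backup server: no clones
print("main:  ", fmt_ratio(main_pct))
print("backup:", fmt_ratio(backup_pct))
```

The main pool's 11.2T of BCLONE_SAVED is almost exactly the ~11.1T difference in AVAIL between the two pools: that is the space Rsync spends on the backup writing each cloned file out as an independent copy.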

To Rsync, a "file is a file". ZFS replication doesn't care about files, only about "blocks". If several pointers use the same block of data (because of several "copied" files), then ZFS replication only needs to transfer that single block, rather than the same file multiple times.
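The difference between file-level and block-level copying can be shown with a toy model. This is not how ZFS stores data internally; `ToyPool`, `rsync_copy`, `replicate_blocks`, the 4-byte block size and the file names are all hypothetical. A block clone adds new references to existing blocks, an Rsync-style copy reads each file and writes all of its bytes again, and a block-level transfer sends each stored block once along with the file-to-block mapping.

```python
BLOCK_SIZE = 4  # hypothetical, tiny so the example stays readable


class ToyPool:
    """Toy pool: blocks are stored once; a file is a list of block ids."""

    def __init__(self) -> None:
        self.blocks: dict[int, bytes] = {}
        self.files: dict[str, list[int]] = {}
        self.next_id = 0

    def write(self, name: str, data: bytes) -> None:
        # Ordinary write (what Rsync does on the receiving side): every block is new.
        ids = []
        for i in range(0, len(data), BLOCK_SIZE):
            self.blocks[self.next_id] = data[i:i + BLOCK_SIZE]
            ids.append(self.next_id)
            self.next_id += 1
        self.files[name] = ids

    def clone(self, src: str, dst: str) -> None:
        # Block clone: the new file just references the existing blocks.
        self.files[dst] = list(self.files[src])

    def read(self, name: str) -> bytes:
        return b"".join(self.blocks[b] for b in self.files[name])

    def stored_bytes(self) -> int:
        return sum(len(b) for b in self.blocks.values())


def rsync_copy(src: ToyPool, dst: ToyPool) -> None:
    # File-level transfer: read every file and write it out in full.
    for name in src.files:
        dst.write(name, src.read(name))


def replicate_blocks(src: ToyPool, dst: ToyPool) -> None:
    # Block-level transfer into an empty pool: each stored block once, plus the map.
    dst.blocks = dict(src.blocks)
    dst.files = {name: list(ids) for name, ids in src.files.items()}
    dst.next_id = src.next_id


src = ToyPool()
src.write("video.mkv", b"0123456789abcdef")   # 16 bytes -> 4 blocks
src.clone("video.mkv", "copy1.mkv")
src.clone("video.mkv", "copy2.mkv")

via_rsync = ToyPool()
rsync_copy(src, via_rsync)

via_replication = ToyPool()
replicate_blocks(src, via_replication)

print(src.stored_bytes(), via_rsync.stored_bytes(), via_replication.stored_bytes())  # 16 48 16
```

The source holds 16 bytes for three files, the Rsync-style copy needs 48 bytes for the same files, and the block-level copy stays at 16. At toy scale, that is the same effect as the backup pool missing out on the main pool's BCLONE_SAVED.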

As long as you keep using file-based tools (e.g., Rsync) to transfer between ZFS servers, you will always lose out on any savings from block-cloning or deduplication.

OK, I have never been able to get replication working. I will have to see if I can fix it or find a different workaround.

Don't forget to mark the solution.