Hello folks

supermicro x11ssl-f

Intel(R) Xeon(R) CPU E3-1280 v6

32GB ECC

1xNVME for apps pool

32gb SSD boot drive

tank pool with 4x 4TB HDD RAIDZ2

datos pool 1x8TB HDD

truenas scale 25.04.1

I was very happy with my rock-solid server. I left Google Drive and Google Photos, trusted my fancy Nextcloud drive, and installed a media server, Vaultwarden, etc. It worked perfectly for a year or so, but the other day one of the HDDs reported some problems. I bought another drive, installed it, and resilvered. Two days later I rebooted the system because I wanted to add a GPU for my media server.

The nightmare started after the reboot: my pool tank had disappeared, all 4 drives were exported, and I can’t mount them again. ChatGPT had very good intentions, and there is apparently a lot of knowledge behind it, but it didn’t help me solve the problem.

I still can’t believe that a RAIDZ2 can break so easily; it should have 2 redundant disks…

admin@truenas[~]$ sudo zpool import

tank ONLINE

raidz2-0 ONLINE

4f584c22-2192-11ef-8ffc-bc2411747b27 ONLINE

4f7421bf-2192-11ef-8ffc-bc2411747b27 ONLINE

4f4aa40e-2192-11ef-8ffc-bc2411747b27 ONLINE

58a99133-4ca3-4ae2-afc3-f88ad96f3d68 ONLINE

admin@truenas[~]$ sudo zpool import -d /dev/disk/by-id/

tank UNAVAIL insufficient replicas

raidz2-0 UNAVAIL insufficient replicas

4f584c22-2192-11ef-8ffc-bc2411747b27 UNAVAIL

4f7421bf-2192-11ef-8ffc-bc2411747b27 UNAVAIL

4f4aa40e-2192-11ef-8ffc-bc2411747b27 UNAVAIL

wwn-0x5000c500faaa9cc9-part1 ONLINE

Please provide the output of this command, as formatted text (</> button):

lsblk -bo NAME,MODEL,ROTA,PTTYPE,TYPE,START,SIZE,PARTTYPENAME,PARTUUID

I assume that there is no SAS HBA or “PCIe SATA card”.

Have you checked cables (power and data)?

If you are going to import then you need to specify where it will get mounted. Usually you don’t need to tell ZFS where to look, but if you do then it needs to be by partuuid not by disk id. I suggest that you try the following and see what it says:

sudo zpool import -R /mnt tank

Also please run @etorix’s command to give us details of what disks and partitions Linux can see.

thanks etorix

admin@truenas[~]$ lsblk -bo NAME,MODEL,ROTA,PTTYPE,TYPE,START,SIZE,PARTTYPENAME,PARTUUID

NAME MODEL ROTA PTTYPE TYPE START SIZE PARTTYPENAME PARTUUID

loop1 0 loop 383078400

sda ST4000VN00 1 gpt disk 4000787030016

├─sda1 1 gpt part 128 2147483648 FreeBSD swap 4f0242d0-2192-11ef-8ffc-bc2411747b27

└─sda2 1 gpt part 4194432 3998639460352 FreeBSD ZFS 4f584c22-2192-11ef-8ffc-bc2411747b27

sdb MTFDDAK256 0 gpt disk 256060514304

├─sdb1 0 gpt part 4096 1048576 BIOS boot 7bce92bb-c57e-48a7-90a2-18316f48df4d

├─sdb2 0 gpt part 6144 536870912 EFI System 076662d1-13a3-4d32-9cd3-0b39f25bd7eb

├─sdb3 0 gpt part 34609152 238340611584 Solaris /usr & Apple ZFS 93d10795-bf3b-4fef-8966-8baa5df3fc65

└─sdb4 0 gpt part 1054720 17179869184 Linux swap 21f56f6c-2a4c-4fe0-85f2-1ddbcbdd0696

sdc ST4000VN00 1 gpt disk 4000787030016

├─sdc1 1 gpt part 128 2147483648 FreeBSD swap 4f2144fc-2192-11ef-8ffc-bc2411747b27

└─sdc2 1 gpt part 4194432 3998639460352 FreeBSD ZFS 4f7421bf-2192-11ef-8ffc-bc2411747b27

sdd ST4000VN00 1 gpt disk 4000787030016

├─sdd1 1 gpt part 128 2147483648 FreeBSD swap 4f0c5012-2192-11ef-8ffc-bc2411747b27

└─sdd2 1 gpt part 4194432 3998639460352 FreeBSD ZFS 4f4aa40e-2192-11ef-8ffc-bc2411747b27

sde ST4000NT00 1 gpt disk 4000787030016

└─sde1 1 gpt part 2048 3998639460352 Solaris /usr & Apple ZFS 58a99133-4ca3-4ae2-afc3-f88ad96f3d68

nvme0n1 Lexar SSD 0 gpt disk 512110190592

├─nvme0n1p1

│ 0 gpt part 128 2147483648 FreeBSD swap 30b828ac-2234-11ef-8ffc-bc2411747b27

└─nvme0n1p2

0 gpt part 4194432 509962620928 FreeBSD ZFS 30bb9738-2234-11ef-8ffc-bc2411747b27

thanks Protopia

admin@truenas[~]$ sudo zpool import -R /mnt tank

cannot import 'tank': one or more devices is currently unavailable

BTW, Could reinserting the old HDD that had caused problems before the resilver help?

Yes, re-inserting the failing HDD can help, unless that drive is completely dead, causes the system to hang or freeze, or degrades it in some other way.

When replacing a drive, it is generally recommended to keep all drives connected, provided there are enough ports and doing so introduces no additional faults.

Arwen

July 1, 2025, 11:33am

Please supply the output of the following commands for the 4 x 4TB disks:

zdb -l /dev/sda2 | grep txg: | grep -v create_txg:

zdb -l /dev/sdc2 | grep txg: | grep -v create_txg:

zdb -l /dev/sdd2 | grep txg: | grep -v create_txg:

zdb -l /dev/sde1 | grep txg: | grep -v create_txg:

They should all have the same ZFS transaction number. Let us see what the drives say they have.

Next, the disks should all have 4 labels:

zdb -l /dev/sda2 | grep labels

zdb -l /dev/sdc2 | grep labels

zdb -l /dev/sdd2 | grep labels

zdb -l /dev/sde1 | grep labels
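A minimal sketch of the txg check above, assuming `zdb -l` prints a top-level `txg:` line next to nested `create_txg:` lines, as in the label dumps in this thread. The helper name `label_txg` is hypothetical. It reads label text on standard input and prints the first top-level txg it finds:

```shell
#!/bin/sh
# label_txg (hypothetical helper): print the first top-level "txg:" value
# found in `zdb -l` output read from stdin. The first field must be exactly
# "txg:", so nested "create_txg:" lines are ignored.
label_txg() {
    awk '$1 == "txg:" { print $2; exit }'
}

# Demo on a fragment copied from a label dump in this thread.
label_txg <<'EOF'
    version: 5000
    name: 'tank'
    state: 0
    txg: 6606762
    create_txg: 4
EOF
# prints 6606762

# On a live system (needs root; use the device names lsblk currently shows):
#   for dev in /dev/sda2 /dev/sdc2 /dev/sdd2 /dev/sde1; do
#       printf '%s %s\n' "$dev" "$(sudo zdb -l "$dev" | label_txg)"
#   done
```

An empty result for a device means no label was read from it, which is a different failure from a label with an older txg.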

I don’t get anything:

admin@truenas[/]$ sudo zdb -l /dev/sda2 | grep txg: | grep -v create_txg:

admin@truenas[/]$ sudo zdb -l /dev/sdc2 | grep txg: | grep -v create_txg:

admin@truenas[/]$ sudo zdb -l /dev/sdd2 | grep txg: | grep -v create_txg:

admin@truenas[/]$ sudo zdb -l /dev/sde1 | grep txg: | grep -v create_txg:

admin@truenas[/]$

Arwen

July 1, 2025, 11:42am

Weird.

Can you get us the output of 1 disk, the supposed working disk?

sudo zdb -l /dev/sde1

I reinstalled the resilvered drive and the output changes:

admin@truenas[/]$ sudo zdb -l /dev/sde1 | grep txg: | grep -v create_txg:

txg: 6606762

admin@truenas[/]$ sudo zdb -l /dev/sde1

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'tank'

state: 0

txg: 6606762

pool_guid: 13400593935322567235

errata: 0

hostid: 590394936

hostname: 'truenas'

top_guid: 15550163770109457767

guid: 4243709192505670205

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 15550163770109457767

nparity: 2

metaslab_array: 65

metaslab_shift: 34

ashift: 12

asize: 15994538950656

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 9392911267891898184

path: '/dev/disk/by-partuuid/4f584c22-2192-11ef-8ffc-bc2411747b27'

DTL: 73904

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 5149461304993027350

path: '/dev/disk/by-partuuid/4f7421bf-2192-11ef-8ffc-bc2411747b27'

DTL: 73903

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 15599814676261111453

path: '/dev/disk/by-partuuid/4f4aa40e-2192-11ef-8ffc-bc2411747b27'

DTL: 73902

create_txg: 4

removed: 1

children[3]:

type: 'disk'

id: 3

guid: 4243709192505670205

path: '/dev/disk/by-partuuid/58a99133-4ca3-4ae2-afc3-f88ad96f3d68'

whole_disk: 0

DTL: 771

create_txg: 4

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

labels = 0 1 2 3

Arwen

July 1, 2025, 11:50am

I’m not sure why the other 3 are not showing any data. But without enough disks that have a valid ZFS header / label (2 in a 4-disk RAID-Z2), the pool can’t be imported.

Perhaps someone else will be able to take this further.
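The arithmetic behind "2 in a 4 disk RAID-Z2" can be sketched as follows. This is only an illustration with hypothetical helper names: it assumes a single raidz vdev whose child count and `nparity` are taken from the label dump above (4 children, `nparity: 2`), and that at this point in the thread only one disk returned a label.

```shell
#!/bin/sh
# raidz_min_members CHILDREN NPARITY (hypothetical helper):
# smallest number of readable members a raidz vdev needs.
raidz_min_members() {
    echo $(( $1 - $2 ))
}

# raidz_has_quorum PRESENT CHILDREN NPARITY (hypothetical helper):
# succeeds when enough members are readable.
raidz_has_quorum() {
    [ "$1" -ge "$(raidz_min_members "$2" "$3")" ]
}

raidz_min_members 4 2          # prints 2

# Only one of the four disks showed a label at this point in the thread.
if raidz_has_quorum 1 4 2; then
    echo "enough members to import"
else
    echo "not enough members to import"
fi
# prints: not enough members to import
```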

@Jorge_Fernandez

Please run the following commands to check that device names didn’t change with a reboot etc.:

lsblk -bo NAME,LABEL,MAJ:MIN,TRAN,ROTA,ZONED,VENDOR,MODEL,SERIAL,PARTUUID,START,SIZE,PARTTYPENAME

sudo zpool import

Assuming that the disk names didn’t change please run the following and post the results (apologies but they will be lengthy):

sudo zdb -l /dev/sda2

sudo zdb -l /dev/sdc2

sudo zdb -l /dev/sdd2

sudo zdb -l /dev/sde1

Then try the following in sequence and STOP immediately if any of them give no output and return straight to the command prompt:

sudo zpool import -R /mnt tank

sudo zpool import -R /mnt -d /dev/disk/by-partuuid

sudo zpool import -R /mnt -d /dev/disk/by-partuuid tank

sudo zpool import -R /mnt -f tank

sudo zpool import -R /mnt -f -d /dev/disk/by-partuuid

sudo zpool import -R /mnt -f -d /dev/disk/by-partuuid tank

If any of these gave no output (so you stopped), then run the following commands:

sudo zpool list -vL tank

sudo zfs list -r tank

(Apologies for the multitude of commands but I prefer to ask for copious debug output all at once because it saves on the back and forth and can end up being quicker.)
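The device-name check requested above can also be scripted. The sketch below is an assumption-laden illustration, not part of the original advice: `map_partuuids` is a hypothetical helper that takes a two-column table of `NAME PARTUUID` pairs (the shape produced by `lsblk -rno NAME,PARTUUID`) and reports which device currently carries each PARTUUID that the pool labels refer to.

```shell
#!/bin/sh
# map_partuuids TABLE UUID... (hypothetical helper)
# TABLE is text with one "NAME PARTUUID" pair per line. For each UUID,
# print the matching device name, or MISSING when no partition has it.
map_partuuids() {
    table=$1
    shift
    for uuid in "$@"; do
        dev=$(printf '%s\n' "$table" |
            awk -v u="$uuid" '$2 == u { print $1; exit }')
        printf '%s %s\n' "$uuid" "${dev:-MISSING}"
    done
}

# Demo with two PARTUUIDs taken from the zpool import listing in this thread
# and a hand-written sample table.
sample='sdb1 58a99133-4ca3-4ae2-afc3-f88ad96f3d68
sde2 4f584c22-2192-11ef-8ffc-bc2411747b27'

map_partuuids "$sample" \
    4f584c22-2192-11ef-8ffc-bc2411747b27 \
    4f7421bf-2192-11ef-8ffc-bc2411747b27
# prints:
#   4f584c22-2192-11ef-8ffc-bc2411747b27 sde2
#   4f7421bf-2192-11ef-8ffc-bc2411747b27 MISSING

# On a live system the table would come from lsblk:
#   map_partuuids "$(lsblk -rno NAME,PARTUUID)" <partuuid> ...
```

Keying on PARTUUID rather than on names like sda or sdb matters because kernel device names can be reassigned between boots.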

Should I do this with the resilvered HDD or the defective one?

No - definitely do NOT put the old drive back in. Let’s try to stick to a fixed set of hardware and not try to chop and change.

RAIDZ2 should be able to survive this - we just need to work out how to import the pool. If you swap the drive back, then you will have one drive which is known failing and which has a very old TXG.

What’s on the datos pool?

It is just data I don’t need to secure with additional parity, like media…

I have double-checked the connections and changed one of them…

After inserting the resilvered one, I noticed during the boot process that it gives me the option to boot with version 25.04.1 or with 24.04.2.1 and 24.04.2.2. I guess this is not important, but if nothing works maybe I could try…

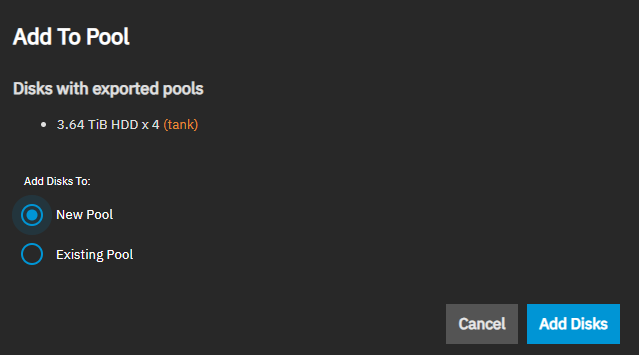

BTW, the UI gives me the option of adding the 4 disks to a new pool:

Now, to your commands:

admin@truenas[~]$ lsblk -bo NAME,LABEL,MAJ:MIN,TRAN,ROTA,ZONED,VENDOR,MODEL,SERIAL,PARTUUID,START,SIZE,PARTTYPENAME

NAME LABEL MAJ:MIN TRAN ROTA ZONED VENDOR MODEL SERIAL PARTUUID START SIZE PARTTYPENAME

loop1 7:1 0 none 383078400

sda 8:0 sata 0 none ATA MTFDDAK2 14080C02 256060514304

├─sda1 8:1 0 none 7bce92bb-c57e-48a7-90a2-18316f48df4d 4096 1048576 BIOS boot

├─sda2 EFI 8:2 0 none 076662d1-13a3-4d32-9cd3-0b39f25bd7eb 6144 536870912 EFI System

├─sda3 boot-pool 8:3 0 none 93d10795-bf3b-4fef-8966-8baa5df3fc65 34609152 238340611584 Solaris /usr & Apple ZFS

└─sda4 8:4 0 none 21f56f6c-2a4c-4fe0-85f2-1ddbcbdd0696 1054720 17179869184 Linux swap

sdb 8:16 sata 1 none ATA ST4000NT WX11JZB9 4000787030016

└─sdb1 tank 8:17 1 none 58a99133-4ca3-4ae2-afc3-f88ad96f3d68 2048 3998639460352 Solaris /usr & Apple ZFS

sdc 8:32 sata 1 none ATA ST4000VN ZW601MD7 4000787030016

├─sdc1 8:33 1 none 4f2144fc-2192-11ef-8ffc-bc2411747b27 128 2147483648 FreeBSD swap

└─sdc2 tank 8:34 1 none 4f7421bf-2192-11ef-8ffc-bc2411747b27 4194432 3998639460352 FreeBSD ZFS

sdd 8:48 sata 1 none ATA ST4000VN ZW628JGE 4000787030016

├─sdd1 8:49 1 none 4f0c5012-2192-11ef-8ffc-bc2411747b27 128 2147483648 FreeBSD swap

└─sdd2 tank 8:50 1 none 4f4aa40e-2192-11ef-8ffc-bc2411747b27 4194432 3998639460352 FreeBSD ZFS

sde 8:64 sata 1 none ATA ST4000VN WW60CTGN 4000787030016

├─sde1 8:65 1 none 4f0242d0-2192-11ef-8ffc-bc2411747b27 128 2147483648 FreeBSD swap

└─sde2 tank 8:66 1 none 4f584c22-2192-11ef-8ffc-bc2411747b27 4194432 3998639460352 FreeBSD ZFS

sdf 8:80 sata 1 none ATA ST8000VN ZPV0G93G 8001563222016

└─sdf1 datos 8:81 1 none 8cfc82bf-1706-4c57-a307-c796ed8216c7 4096 8001560773120 Solaris /usr & Apple ZFS

nvme0n1 259:0 nvme 0 none Lexar SS MJN315Q0 512110190592

├─nvme0n1p1

│ 259:1 nvme 0 none 30b828ac-2234-11ef-8ffc-bc2411747b27 128 2147483648 FreeBSD swap

└─nvme0n1p2

apps 259:2 nvme 0 none 30bb9738-2234-11ef-8ffc-bc2411747b27 4194432 509962620928 FreeBSD ZFS

admin@truenas[~]$ sudo zpool import

[sudo] password for admin:

pool: tank

id: 13400593935322567235

state: ONLINE

status: Some supported features are not enabled on the pool.

(Note that they may be intentionally disabled if the

'compatibility' property is set.)

action: The pool can be imported using its name or numeric identifier, though

some features will not be available without an explicit 'zpool upgrade'.

config:

tank ONLINE

raidz2-0 ONLINE

4f584c22-2192-11ef-8ffc-bc2411747b27 ONLINE

4f7421bf-2192-11ef-8ffc-bc2411747b27 ONLINE

4f4aa40e-2192-11ef-8ffc-bc2411747b27 ONLINE

58a99133-4ca3-4ae2-afc3-f88ad96f3d68 ONLINE

admin@truenas[~]$ sudo zdb -l /dev/sdb1

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'tank'

state: 0

txg: 6606762

pool_guid: 13400593935322567235

errata: 0

hostid: 590394936

hostname: 'truenas'

top_guid: 15550163770109457767

guid: 4243709192505670205

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 15550163770109457767

nparity: 2

metaslab_array: 65

metaslab_shift: 34

ashift: 12

asize: 15994538950656

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 9392911267891898184

path: '/dev/disk/by-partuuid/4f584c22-2192-11ef-8ffc-bc2411747b27'

DTL: 73904

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 5149461304993027350

path: '/dev/disk/by-partuuid/4f7421bf-2192-11ef-8ffc-bc2411747b27'

DTL: 73903

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 15599814676261111453

path: '/dev/disk/by-partuuid/4f4aa40e-2192-11ef-8ffc-bc2411747b27'

DTL: 73902

create_txg: 4

removed: 1

children[3]:

type: 'disk'

id: 3

guid: 4243709192505670205

path: '/dev/disk/by-partuuid/58a99133-4ca3-4ae2-afc3-f88ad96f3d68'

whole_disk: 0

DTL: 771

create_txg: 4

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

labels = 0 1 2 3

admin@truenas[~]$ sudo zdb -l /dev/sdc2

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'tank'

state: 0

txg: 6606762

pool_guid: 13400593935322567235

errata: 0

hostid: 590394936

hostname: 'truenas'

top_guid: 15550163770109457767

guid: 5149461304993027350

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 15550163770109457767

nparity: 2

metaslab_array: 65

metaslab_shift: 34

ashift: 12

asize: 15994538950656

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 9392911267891898184

path: '/dev/disk/by-partuuid/4f584c22-2192-11ef-8ffc-bc2411747b27'

DTL: 73904

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 5149461304993027350

path: '/dev/disk/by-partuuid/4f7421bf-2192-11ef-8ffc-bc2411747b27'

DTL: 73903

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 15599814676261111453

path: '/dev/disk/by-partuuid/4f4aa40e-2192-11ef-8ffc-bc2411747b27'

DTL: 73902

create_txg: 4

removed: 1

children[3]:

type: 'disk'

id: 3

guid: 4243709192505670205

path: '/dev/disk/by-partuuid/58a99133-4ca3-4ae2-afc3-f88ad96f3d68'

whole_disk: 0

DTL: 771

create_txg: 4

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

labels = 0 1 2 3

admin@truenas[~]$ sudo zdb -l /dev/sdd2

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'tank'

state: 0

txg: 6590607

pool_guid: 13400593935322567235

errata: 0

hostid: 590394936

hostname: 'truenas'

top_guid: 15550163770109457767

guid: 15599814676261111453

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 15550163770109457767

nparity: 2

metaslab_array: 65

metaslab_shift: 34

ashift: 12

asize: 15994538950656

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 9392911267891898184

path: '/dev/disk/by-partuuid/4f584c22-2192-11ef-8ffc-bc2411747b27'

DTL: 73904

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 5149461304993027350

path: '/dev/disk/by-partuuid/4f7421bf-2192-11ef-8ffc-bc2411747b27'

DTL: 73903

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 15599814676261111453

path: '/dev/disk/by-partuuid/4f4aa40e-2192-11ef-8ffc-bc2411747b27'

DTL: 73902

create_txg: 4

children[3]:

type: 'disk'

id: 3

guid: 4243709192505670205

path: '/dev/disk/by-partuuid/58a99133-4ca3-4ae2-afc3-f88ad96f3d68'

whole_disk: 0

DTL: 771

create_txg: 4

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

labels = 0 1 2 3

admin@truenas[~]$ sudo zdb -l /dev/sde2

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'tank'

state: 0

txg: 6606762

pool_guid: 13400593935322567235

errata: 0

hostid: 590394936

hostname: 'truenas'

top_guid: 15550163770109457767

guid: 9392911267891898184

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 15550163770109457767

nparity: 2

metaslab_array: 65

metaslab_shift: 34

ashift: 12

asize: 15994538950656

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 9392911267891898184

path: '/dev/disk/by-partuuid/4f584c22-2192-11ef-8ffc-bc2411747b27'

DTL: 73904

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 5149461304993027350

path: '/dev/disk/by-partuuid/4f7421bf-2192-11ef-8ffc-bc2411747b27'

DTL: 73903

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 15599814676261111453

path: '/dev/disk/by-partuuid/4f4aa40e-2192-11ef-8ffc-bc2411747b27'

DTL: 73902

create_txg: 4

removed: 1

children[3]:

type: 'disk'

id: 3

guid: 4243709192505670205

path: '/dev/disk/by-partuuid/58a99133-4ca3-4ae2-afc3-f88ad96f3d68'

whole_disk: 0

DTL: 771

create_txg: 4

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

labels = 0 1 2 3

admin@truenas[~]$ sudo zpool import -R /mnt tank

cannot import 'tank': one or more devices is currently unavailable

admin@truenas[~]$ sudo zpool import -R /mnt -d /dev/disk/by-partuuid

pool: tank

id: 13400593935322567235

state: ONLINE

status: Some supported features are not enabled on the pool.

(Note that they may be intentionally disabled if the

'compatibility' property is set.)

action: The pool can be imported using its name or numeric identifier, though

some features will not be available without an explicit 'zpool upgrade'.

config:

tank ONLINE

raidz2-0 ONLINE

4f584c22-2192-11ef-8ffc-bc2411747b27 ONLINE

4f7421bf-2192-11ef-8ffc-bc2411747b27 ONLINE

4f4aa40e-2192-11ef-8ffc-bc2411747b27 ONLINE

58a99133-4ca3-4ae2-afc3-f88ad96f3d68 ONLINE

admin@truenas[~]$ sudo zpool import -R /mnt -d /dev/disk/by-partuuid tank

cannot import 'tank': one or more devices is currently unavailable

admin@truenas[~]$ sudo zpool import -R /mnt -f tank

cannot import 'tank': one or more devices is currently unavailable

admin@truenas[~]$ sudo zpool import -R /mnt -f -d /dev/disk/by-partuuid

pool: tank

id: 13400593935322567235

state: ONLINE

status: Some supported features are not enabled on the pool.

(Note that they may be intentionally disabled if the

'compatibility' property is set.)

action: The pool can be imported using its name or numeric identifier, though

some features will not be available without an explicit 'zpool upgrade'.

config:

tank ONLINE

raidz2-0 ONLINE

4f584c22-2192-11ef-8ffc-bc2411747b27 ONLINE

4f7421bf-2192-11ef-8ffc-bc2411747b27 ONLINE

4f4aa40e-2192-11ef-8ffc-bc2411747b27 ONLINE

58a99133-4ca3-4ae2-afc3-f88ad96f3d68 ONLINE

admin@truenas[~]$ sudo zpool import -R /mnt -f -d /dev/disk/by-partuuid tank

cannot import 'tank': one or more devices is currently unavailable

thank you…

Hi Arwen

admin@truenas[~]$ sudo zdb -l /dev/sdb1 | grep txg: | grep -v create_txg:

txg: 6606762

admin@truenas[~]$ sudo zdb -l /dev/sdc2 | grep txg: | grep -v create_txg:

txg: 6606762

admin@truenas[~]$ sudo zdb -l /dev/sdd2 | grep txg: | grep -v create_txg:

txg: 6590607

admin@truenas[~]$ sudo zdb -l /dev/sde2 | grep txg: | grep -v create_txg:

txg: 6606762

admin@truenas[~]$ sudo zdb -l /dev/sda3 | grep labels

labels = 0 1 2 3

admin@truenas[~]$ sudo zdb -l /dev/sdb1 | grep labels

labels = 0 1 2 3

admin@truenas[~]$ sudo zdb -l /dev/sdc2 | grep labels

labels = 0 1 2 3

admin@truenas[~]$ sudo zdb -l /dev/sdd2 | grep labels

labels = 0 1 2 3

admin@truenas[~]$ sudo zdb -l /dev/sde2 | grep labels

labels = 0 1 2 3

Ok - I think the reason it won’t import is that 1 of the drives (currently /dev/sdd - a Seagate ST4000VN drive with serial number ZW628JGE on the label) has a lower TXG than the other 3.
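The comparison behind that conclusion can be reproduced with a small script. This is a sketch only: `txg_laggards` is a hypothetical helper, and the input is the device/txg pairs from the grep output earlier in the thread, typed in by hand as `device txg` lines.

```shell
#!/bin/sh
# txg_laggards (hypothetical helper): read "device txg" pairs on stdin and
# print every device whose txg is below the highest one seen, followed by
# how many transactions it is behind.
txg_laggards() {
    awk '
        { dev[NR] = $1; txg[NR] = $2 + 0; if (txg[NR] > max) max = txg[NR] }
        END {
            for (i = 1; i <= NR; i++)
                if (txg[i] < max) print dev[i], max - txg[i]
        }
    '
}

# Values as reported by zdb in this thread.
txg_laggards <<'EOF'
/dev/sdb1 6606762
/dev/sdc2 6606762
/dev/sdd2 6590607
/dev/sde2 6606762
EOF
# prints: /dev/sdd2 16155
```

No output would mean all members agree on the txg.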

You have two choices I think:

1. Shut down, remove the Seagate ST4000VN drive with serial number ZW628JGE, reboot, and try to import the degraded pool using sudo zpool import -R /mnt -f tank. If that works, you will have one drive marked unavailable. You need to clean the removed drive (someone will need to advise how to do it) before reinstalling it and using it to resilver the degraded RAIDZ2 back to full redundancy.

2. Try to mount the pool read-only with a roll-back to TXG 6590607 and then copy the data elsewhere before cleaning the disks and recreating the pool. However, this is about 16,000 transactions back, so the uberblocks may have wrapped around, which means that some freed-up blocks may have been overwritten. You will lose the data written in those 16,000 transactions, and some files might be lost too.

Obviously the first of these seems to be most sensible - but get a 2nd opinion on this.
