Problem/Justification

When replacing a drive in a vdev, a new drive, even one from the same manufacturer, may be just a hair too small to fit the vdev.

Examples of users impacted by the recent change in SCALE 24.04.1, which removed the default 2 GiB swap partition that acted as this buffer:

Impact

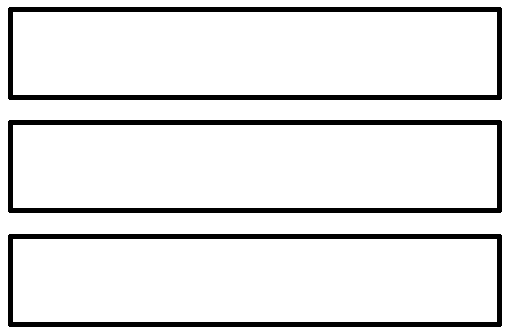

With 2 GiB of buffer space by default, a slightly-too-small replacement drive could still be added, with a smaller buffer or no buffer at all on that drive.

FreeNAS, TrueNAS CORE, and TrueNAS SCALE before 24.04.1 did this, and it’s really an amazing quality-of-life feature for users. Please bring it back.

2 GiB was chosen because CORE created buffer partitions of exactly 2 GiB, SCALE before 24.04.1 created buffer partitions of 2 GiB + 512 bytes, and users report size variations of between 100 MiB and 1.1 GiB among drives of the “same capacity class”. 2 GiB is a safe buffer that continues a good tradition.
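
The arithmetic behind that choice can be checked quickly. This is a minimal Python sketch using only the figures quoted above; it treats 1.1 GiB as 1.1 × 1024³ bytes and shows how much of a 2 GiB buffer would remain after absorbing the smallest and largest reported size difference.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3

BUFFER = 2 * GIB              # proposed default buffer
CORE_BUFFER = 2 * GIB         # CORE: buffer partition of exactly 2 GiB
SCALE_BUFFER = 2 * GIB + 512  # SCALE before 24.04.1: 2 GiB + 512 bytes

# Size differences reported between drives of the "same capacity class"
reported_variation = {"smallest": 100 * MIB, "largest": int(1.1 * GIB)}

for label, delta in reported_variation.items():
    headroom = BUFFER - delta  # buffer left over after absorbing the difference
    print(f"{label} variation: {delta} bytes, headroom {headroom / GIB:.2f} GiB")

# Both legacy layouts reserved at least as much space as the proposed buffer.
print(CORE_BUFFER >= BUFFER, SCALE_BUFFER >= BUFFER)
```

Even the largest reported difference leaves roughly 0.9 GiB of the buffer unused.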

Edit:

After discussion, a good way to implement this may be to make the partitions on drives added to a new vdev 2 GiB smaller than the maximum, without a dedicated “buffer partition”.

When replacing a drive in, or adding a drive to, an existing vdev, use the same “2 GiB smaller than max” logic. If that would be smaller than the smallest member in the vdev, try to make the partition as large as that member, so that the replacement can succeed.
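
A minimal Python sketch of that sizing rule, under stated assumptions: `data_partition_size` and `DiskTooSmall` are hypothetical names, not TrueNAS middleware APIs; partition-table overhead and alignment are ignored; and “smallest member” is taken to mean the size of the smallest existing data partition in the vdev.

```python
from typing import Optional

GIB = 1024 ** 3
BUFFER = 2 * GIB  # proposed default buffer space: 2 GiB


class DiskTooSmall(Exception):
    """The new disk cannot hold a partition as large as the smallest member."""


def data_partition_size(disk_size: int, smallest_member: Optional[int] = None) -> int:
    """Hypothetical helper: size in bytes of the ZFS data partition for a new disk.

    disk_size       -- usable bytes on the new disk (partition-table overhead and
                       alignment are ignored in this sketch)
    smallest_member -- size of the smallest existing member partition in the vdev,
                       or None when the disk goes into a brand-new vdev
    """
    preferred = disk_size - BUFFER  # "2 GiB smaller than max"
    if smallest_member is None:
        if preferred <= 0:
            raise DiskTooSmall("disk is not larger than the 2 GiB buffer")
        return preferred
    if preferred >= smallest_member:
        return preferred
    if disk_size >= smallest_member:
        # Give up just enough buffer to match the smallest existing member.
        return smallest_member
    # Genuinely too small: even using the whole disk would not be enough.
    raise DiskTooSmall(f"need {smallest_member} bytes, disk has {disk_size}")


if __name__ == "__main__":
    old = 4_000_000_000_000  # example disk size in bytes (illustrative only)
    member = data_partition_size(old)  # member of a vdev built with the buffer
    # A replacement 1 GiB smaller still fits and keeps 1 GiB of buffer space.
    replacement = data_partition_size(old - GIB, member)
    print(replacement == member, (old - GIB) - replacement)
```

The same function covers the other scenarios in this post: a bigger replacement simply gets the full 2 GiB buffer, a drive matching a no-buffer member exactly gets no buffer, and a drive that is genuinely too small raises an error instead of being partitioned.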

No additional UI element is needed. Replacement would still show an error when the drive is genuinely too small, while the slight variations in drive size between revisions or manufacturers are absorbed by the 2 GiB buffer.

Gradually increasing the size of a vdev by replacing members with larger ones also still works as before.

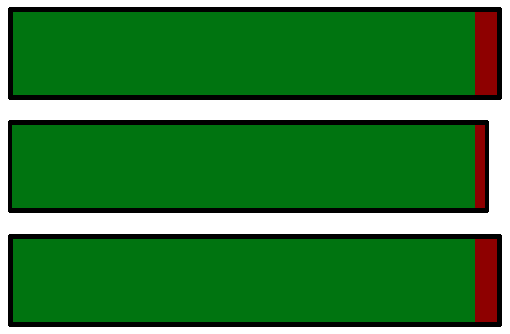

Graphics illustrating this mechanism: [Accepted] Create 2 GiB buffer space when adding a disk - #16 by winnielinnie

User Story

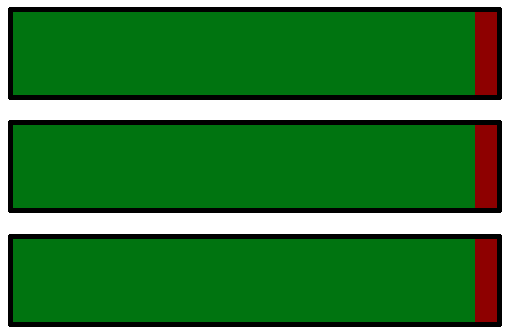

User has an existing vdev with 2 GiB buffer partitions (“swap” partitions) and wants to add or replace a drive: this method works. The new drive doesn’t have a buffer partition, but it has buffer space, which is the same thing as far as ZFS is concerned.

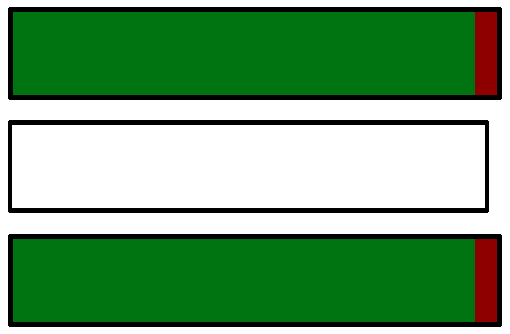

User has an existing vdev without any buffer space (created in SCALE 24.04.1 or later, after this default was removed) and wants to add or replace a drive: no change to current functionality.

- A drive that’s exactly the same size will work, and won’t have buffer space.

- A drive that’s just a hair larger will work, and will have buffer space.

- A drive that’s a hair too small is still too small. “The UX damage created by removal of default buffer space can’t just be un-created.”

User creates a new vdev: all drives have buffer space, making future replacement or addition easy. Some thought should be given to how the UI determines the partition size if the drives are all the same “capacity class” but of slightly varying size. However, the UI already handles that case today, and the same logic could continue to be used, just with a 2 GiB buffer in place.
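
The post does not say how the UI currently sizes partitions for drives of slightly varying size, so this Python sketch only assumes the per-disk rule from the Edit (“2 GiB smaller than max”). `new_vdev_layout` is a hypothetical name. Because a mirror or RAIDZ vdev can use only as much of each member as its smallest member offers, every disk ends up with at least 2 GiB beyond what the vdev actually uses.

```python
from typing import List, Sequence, Tuple

GIB = 1024 ** 3
BUFFER = 2 * GIB  # proposed default buffer space


def new_vdev_layout(disk_sizes: Sequence[int]) -> Tuple[List[int], int]:
    """Hypothetical: data-partition sizes for the disks of a new vdev.

    Each disk gets a partition 2 GiB smaller than the disk itself. A mirror or
    RAIDZ vdev can only use as much of each member as its smallest member
    offers, so the second return value is that effective per-member size.
    """
    if not disk_sizes:
        raise ValueError("a vdev needs at least one disk")
    partitions = [size - BUFFER for size in disk_sizes]
    if min(partitions) <= 0:
        raise ValueError("every disk must be larger than the 2 GiB buffer")
    return partitions, min(partitions)


if __name__ == "__main__":
    # Example sizes for three drives of the same "capacity class" (illustrative).
    disks = [4_000_000_000_000, 4_000_500_000_000, 3_999_800_000_000]
    partitions, effective = new_vdev_layout(disks)
    # Spare space on each disk beyond what the vdev uses (always >= 2 GiB).
    print([size - effective for size in disks])
```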

User has an existing vdev and wants to add or replace a drive with a bigger one: this works, and the new drive is partitioned with 2 GiB of buffer space. Gradual replacement and expansion of a vdev with larger drives continues to work.

All stories I can think of for any vdev ever created in FreeNAS or TrueNAS are either improved or, where this is not possible (existing vdev without buffer and slightly smaller replacement drive), not worsened compared to the status quo.