I upgraded from TrueNAS CORE 13.0-U6.2 to TrueNAS SCALE 24.04.2.3 using the WebUI.

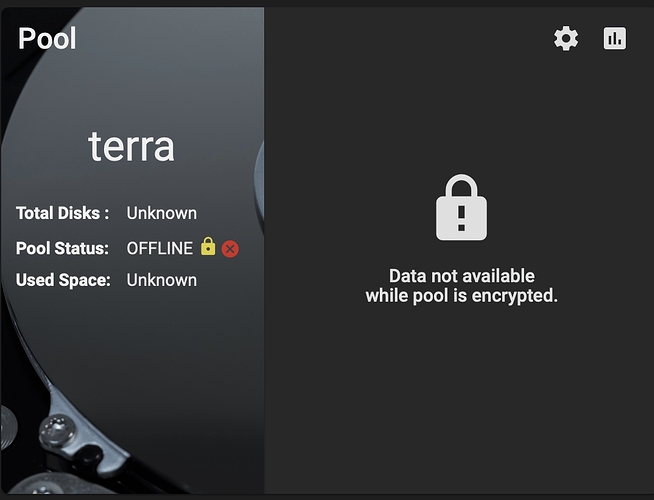

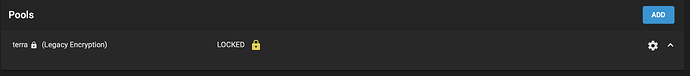

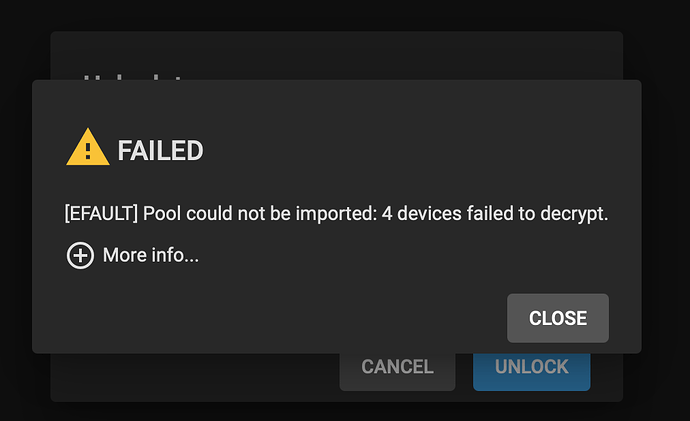

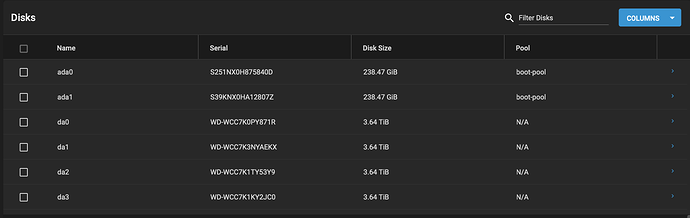

After the upgrade, my main pool “terra” is offline and all 4 of its disks show as unassigned. The UI reports that the pool contains offline Data VDEVs.

The disk health, however, looks fine.

I ran some CLI commands that might help find the issue:

```
root@freenas[~]# zpool status
  pool: freenas-boot
 state: ONLINE
status: Some supported and requested features are not enabled on the pool.
        The pool can still be used, but some features are unavailable.
action: Enable all features using 'zpool upgrade'. Once this is done,
        the pool may no longer be accessible by software that does not support
        the features. See zpool-features(7) for details.
  scan: scrub repaired 0B in 00:00:16 with 0 errors on Thu Oct 17 03:45:16 2024
config:

        NAME                                                     STATE     READ WRITE CKSUM
        freenas-boot                                             ONLINE       0     0     0
          mirror-0                                               ONLINE       0     0     0
            ata-Samsung_SSD_850_PRO_256GB_S39KNX0HA12807Z-part2  ONLINE       0     0     0
            ata-Samsung_SSD_850_PRO_256GB_S251NX0H875840D-part2  ONLINE       0     0     0

errors: No known data errors
root@freenas[~]#
```

```
root@freenas[~]# zpool import
no pools available to import
root@freenas[~]#
```

```
root@freenas[~]# lsblk
NAME   MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
sda      8:0    0   3.6T  0 disk
├─sda1   8:1    0     2G  0 part
└─sda2   8:2    0   3.6T  0 part
sdb      8:16   0   3.6T  0 disk
├─sdb1   8:17   0     2G  0 part
└─sdb2   8:18   0   3.6T  0 part
sdc      8:32   0   3.6T  0 disk
├─sdc1   8:33   0     2G  0 part
└─sdc2   8:34   0   3.6T  0 part
sdd      8:48   0 238.5G  0 disk
├─sdd1   8:49   0   512K  0 part
└─sdd2   8:50   0 238.5G  0 part
sde      8:64   0 238.5G  0 disk
├─sde1   8:65   0   512K  0 part
└─sde2   8:66   0 238.5G  0 part
sdf      8:80   0   3.6T  0 disk
├─sdf1   8:81   0     2G  0 part
└─sdf2   8:82   0   3.6T  0 part
```

```
root@freenas[~]# lsblk -f
NAME   FSTYPE     FSVER LABEL        UUID                FSAVAIL FSUSE% MOUNTPOINTS
sda
├─sda1
└─sda2
sdb
├─sdb1
└─sdb2
sdc
├─sdc1
└─sdc2
sdd
├─sdd1
└─sdd2 zfs_member 5000  freenas-boot 6818064012132738886
sde
├─sde1
└─sde2 zfs_member 5000  freenas-boot 6818064012132738886
sdf
├─sdf1
└─sdf2
```

```
root@freenas[~]# midclt call pool.query | jq
[
  {
    "id": 1,
    "name": "terra",
    "guid": "7680425423279888722",
    "path": "/mnt/terra",
    "status": "OFFLINE",
    "scan": null,
    "topology": null,
    "healthy": false,
    "warning": false,
    "status_code": null,
    "status_detail": null,
    "size": null,
    "allocated": null,
    "free": null,
    "freeing": null,
    "fragmentation": null,
    "size_str": null,
    "allocated_str": null,
    "free_str": null,
    "freeing_str": null,
    "autotrim": {
      "parsed": "off",
      "rawvalue": "off",
      "source": "DEFAULT",
      "value": "off"
    }
  }
]
```

Any help is highly appreciated.

Many thanks!