Hello everyone,

I’ve recently added a new GPU to my truenas server (AMD Mi50 32Gb) to replace my good old Nvidia P2000

The problem i’m having is that i cannot add it to the Ollama App : i get this error message (using both the ROCM image and the default image)

Traceback (most recent call last):

File “/usr/lib/python3/dist-packages/middlewared/job.py”, line 515, in run

await self.future

File “/usr/lib/python3/dist-packages/middlewared/job.py”, line 562, in __run_body

rv = await self.middleware.run_in_thread(self.method, *args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File “/usr/lib/python3/dist-packages/middlewared/main.py”, line 627, in run_in_thread

return await self.run_in_executor(io_thread_pool_executor, method, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File “/usr/lib/python3/dist-packages/middlewared/main.py”, line 624, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File “/usr/lib/python3.11/concurrent/futures/thread.py”, line 58, in run

result = self.fn(*self.args, **self.kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File “/usr/lib/python3/dist-packages/middlewared/service/crud_service.py”, line 294, in nf

rv = func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File “/usr/lib/python3/dist-packages/middlewared/api/base/decorator.py”, line 101, in wrapped

result = func(*args)

^^^^^^^^^^^

File “/usr/lib/python3/dist-packages/middlewared/plugins/apps/crud.py”, line 229, in do_update

app = self.update_internal(job, app, data, trigger_compose=app[‘state’] != ‘STOPPED’)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File “/usr/lib/python3/dist-packages/middlewared/plugins/apps/crud.py”, line 267, in update_internal

compose_action(app_name, app[‘version’], ‘up’, force_recreate=True, remove_orphans=True)

File “/usr/lib/python3/dist-packages/middlewared/plugins/apps/compose_utils.py”, line 61, in compose_action

raise CallError(err_msg)

middlewared.service_exception.CallError: [EFAULT] Failed ‘up’ action for ‘ollama’ app. Please check /var/log/app_lifecycle.log for more details

When checking the afformentionned log file, I have this line

[2025/09/20 02:04:05] (ERROR) app_lifecycle.compose_action():56 - Failed ‘up’ action for ‘ollama’ app: Network ix-ollama_default Creating\n Network ix-ollama_default Created\n Container ix-ollama-ollama-1 Creating\n Container ix-ollama-ollama-1 Created\n Container ix-ollama-ollama-1 Starting\nError response from daemon: error gathering device information while adding custom device “/dev/dri”: no such file or directory\n

Indeed, trying to do a “cd /dev/dri” doesn’t lead anywhere and there isn’t a dri folder in the dev folder

Looking quickly around i’ve found a post talking about Iommu beeing enable for the GPU beeing the cause of this no “/dev/dri” error.

https://forums.unraid.net/topic/148092-steps-to-get-plex-hardware-transcoding-to-work-with-amd-igpu-vega-on-amd-mini-pc/

I had some trouble passing my previous GPU (Nvidia P2000) to the apps, but got it working following this post : https://forums.truenas.com/t/docker-apps-and-uuid-issue-with-nvidia-gpu-after-upgrade-to-24-10-or-25-04/22547 . Could it be something similar ?

Before going any further, I would really like some feedback before breacking anything

Thanks in advance !

Some info on the server

Cpu : AMD Threadripper W2290X

Ram : a mish mash of kits running on stock DDR4 speed ( 2 x 2 x 32gb + 4x8 gb) for a total of 157.3 Gib of usable Ram

MoBo : Asus X399-e Gaming

1 pci x16 : AMD Mi50 32gb

2 pci x16 : empty

3 pci x16 : LSI 9300-16i 12Gbps SAS-3

4 pci x16 : Mellanox ConnectX-2 10Gbe

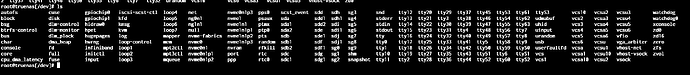

admin@truenas ~ % lspci

00:00.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Root Complex

00:00.2 IOMMU: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) I/O Memory Management Unit

00:01.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-1fh) PCIe Dummy Host Bridge

00:01.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe GPP Bridge

00:01.2 PCI bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe GPP Bridge

00:01.3 PCI bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe GPP Bridge

00:02.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-1fh) PCIe Dummy Host Bridge

00:03.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-1fh) PCIe Dummy Host Bridge

00:03.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe GPP Bridge

00:04.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-1fh) PCIe Dummy Host Bridge

00:07.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-1fh) PCIe Dummy Host Bridge

00:07.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Internal PCIe GPP Bridge 0 to Bus B

00:08.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-1fh) PCIe Dummy Host Bridge

00:08.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Internal PCIe GPP Bridge 0 to Bus B

00:14.0 SMBus: Advanced Micro Devices, Inc. [AMD] FCH SMBus Controller (rev 59)

00:14.3 ISA bridge: Advanced Micro Devices, Inc. [AMD] FCH LPC Bridge (rev 51)

00:18.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 0

00:18.1 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 1

00:18.2 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 2

00:18.3 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 3

00:18.4 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 4

00:18.5 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 5

00:18.6 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 6

00:18.7 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 7

00:19.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 0

00:19.1 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 1

00:19.2 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 2

00:19.3 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 3

00:19.4 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 4

00:19.5 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 5

00:19.6 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 6

00:19.7 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 7

01:00.0 USB controller: Advanced Micro Devices, Inc. [AMD] X399 Series Chipset USB 3.1 xHCI Controller (rev 02)

01:00.1 SATA controller: Advanced Micro Devices, Inc. [AMD] X399 Series Chipset SATA Controller (rev 02)

01:00.2 PCI bridge: Advanced Micro Devices, Inc. [AMD] X399 Series Chipset PCIe Bridge (rev 02)

02:02.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 300 Series Chipset PCIe Port (rev 02)

02:03.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 300 Series Chipset PCIe Port (rev 02)

02:04.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 300 Series Chipset PCIe Port (rev 02)

02:09.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 300 Series Chipset PCIe Port (rev 02)

03:00.0 Ethernet controller: Intel Corporation I211 Gigabit Network Connection (rev 03)

06:00.0 USB controller: ASMedia Technology Inc. ASM2142/ASM3142 USB 3.1 Host Controller

07:00.0 Non-Volatile memory controller: SK hynix BC501 NVMe Solid State Drive

08:00.0 Ethernet controller: Mellanox Technologies MT26448 [ConnectX EN 10GigE, PCIe 2.0 5GT/s] (rev b0)

09:00.0 PCI bridge: PLX Technology, Inc. PEX 8724 24-Lane, 6-Port PCI Express Gen 3 (8 GT/s) Switch, 19 x 19mm FCBGA (rev ca)

0a:00.0 PCI bridge: PLX Technology, Inc. PEX 8724 24-Lane, 6-Port PCI Express Gen 3 (8 GT/s) Switch, 19 x 19mm FCBGA (rev ca)

0a:08.0 PCI bridge: PLX Technology, Inc. PEX 8724 24-Lane, 6-Port PCI Express Gen 3 (8 GT/s) Switch, 19 x 19mm FCBGA (rev ca)

0a:09.0 PCI bridge: PLX Technology, Inc. PEX 8724 24-Lane, 6-Port PCI Express Gen 3 (8 GT/s) Switch, 19 x 19mm FCBGA (rev ca)

0b:00.0 Serial Attached SCSI controller: Broadcom / LSI SAS3008 PCI-Express Fusion-MPT SAS-3 (rev 02)

0d:00.0 Serial Attached SCSI controller: Broadcom / LSI SAS3008 PCI-Express Fusion-MPT SAS-3 (rev 02)

0e:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Zeppelin/Raven/Raven2 PCIe Dummy Function

0e:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Platform Security Processor (PSP) 3.0 Device

0e:00.3 USB controller: Advanced Micro Devices, Inc. [AMD] Zeppelin USB 3.0 xHCI Compliant Host Controller

0f:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Zeppelin/Renoir PCIe Dummy Function

0f:00.2 SATA controller: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] (rev 51)

0f:00.3 Audio device: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) HD Audio Controller

40:00.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Root Complex

40:00.2 IOMMU: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) I/O Memory Management Unit

40:01.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-1fh) PCIe Dummy Host Bridge

40:01.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe GPP Bridge

40:02.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-1fh) PCIe Dummy Host Bridge

40:03.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-1fh) PCIe Dummy Host Bridge

40:03.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe GPP Bridge

40:04.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-1fh) PCIe Dummy Host Bridge

40:07.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-1fh) PCIe Dummy Host Bridge

40:07.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Internal PCIe GPP Bridge 0 to Bus B

40:08.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-1fh) PCIe Dummy Host Bridge

40:08.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Internal PCIe GPP Bridge 0 to Bus B

41:00.0 Non-Volatile memory controller: SK hynix PC401 NVMe Solid State Drive 256GB

42:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD/ATI] Device 14a0 (rev 01)

43:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD/ATI] Device 14a1

44:00.0 Display controller: Advanced Micro Devices, Inc. [AMD/ATI] Vega 20 [Radeon Pro VII/Radeon Instinct MI50 32GB] (rev 01)

45:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Zeppelin/Raven/Raven2 PCIe Dummy Function

45:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Platform Security Processor (PSP) 3.0 Device

45:00.3 USB controller: Advanced Micro Devices, Inc. [AMD] Zeppelin USB 3.0 xHCI Compliant Host Controller

46:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Zeppelin/Renoir PCIe Dummy Function

46:00.2 SATA controller: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] (rev 51)

Truenas fangtooth 25.04.2.4 up to date

running application : jellyfin (no GPU passtrough) // Nextcloud (no GPU passtrough) // ollama // onlyoffice-document-server // open-webui (no GPU passtrough)