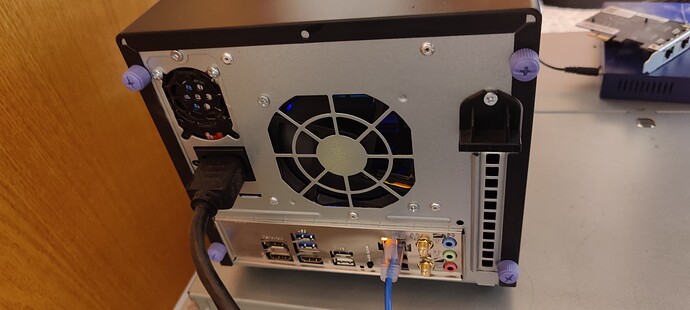

This is a SilverStone CS351 case. It houses a Micro-ATX board. I love this case because it is very compact, yet it has just enough room for everything. Take a look at the manufacturer's website.

- 5x 3.5" HDD bays with status LEDs

- 2 more internal 3.5" HDD slots

- A rail system where you can mount 8x 2.5" disks/SSDs

- HDD cooling is good, because the HDD cage has a fan directly on it

By default the power supply sits right above the motherboard, so you are limited to low-profile coolers. You may install an SFX power supply (I did) to make more room for the cooler.

Ultimately I decided to do a case mod: I fitted my SFX PSU under the HDD cage, mounted a 140mm fan on the back, and installed a Noctua tower cooler.

Here is the power supply (bottom-left in the photo) mounted into the internal HDD frame. It gets constant airflow from the side panel, and it is an efficient Seasonic 300W unit with a fan-stop function as well, so it stays cool. Please do this only if you know what you’re doing, as this is high voltage and it can kill. You can see that I kept the insulator on it (the transparent plastic sheet), but obviously the original case of the PSU is removed. For me personally it is no big deal, as I am comfortable doing such mods.

The case is now dense enough, and I am super happy that I could re-use my Noctua tower for this build.

In case you’re interested, these are the components:

- Silverstone CS351 case

- Seasonic SSP-300SFB PSU (SFX, comes with ATX adapter frame)

- ASUS Prime B550M-A CSM motherboard, M-ATX form factor

- AMD Ryzen Pro 5750G processor

- 2x 32GB Kingston Server Premier DDR4 3200MHz ECC RAM (unbuffered)

- 2x Toshiba MG10 series 20TB disks for data (mirror)

- 1x Samsung 250GB SSD as the boot drive

- 2x Samsung 980 Pro 1TB NVMe SSDs as fast storage (mirror)

- ASM1166 SATA controller M.2 card, 6 ports on a PCI-E 3.0 x2 link

- Noctua NH-U9S CPU cooler

- Akasa 14cm fan on the rear (I just found it in my basement)

- Noctua 8cm fan on the HDD cage (the original SilverStone fan is loud, and I mean LOUD → better to replace it)

- EZDIY-FAB Quad M.2 PCI-E X16 Expansion Card; the mobo supports bifurcation, so the NVMe SSDs are connected to the main PCI-E X16 slot, which operates in X4/X4/X4/X4 mode

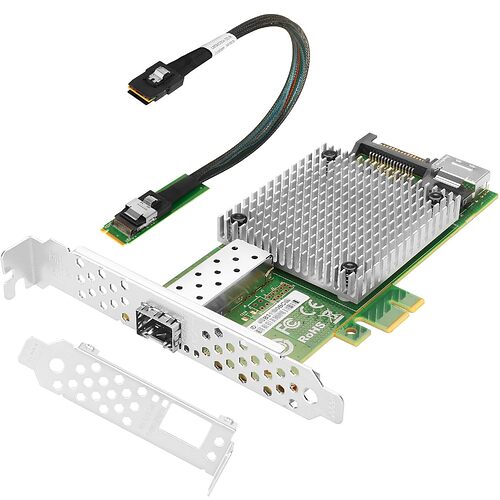

- Intel X520 10G SFP+ NIC with M.2 adapter connected to an M.2 slot using an X4 link

The NVMe SSDs for the VMs and fast data are connected to the PCI-E X16 slot, because I need the M.2 sockets on the motherboard for the SATA controller and the 10G NIC (those are fully fine in X4).

The ASM1166 SATA controller is power efficient and does the job. I was thinking about an HBA card, but everybody said those prevent the system from going into lower power states, so I decided on this one:
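If you want to sanity-check the link widths yourself, here is a rough back-of-the-envelope sketch. It only uses the standard per-lane PCIe rates (Gen2: 5 GT/s with 8b/10b encoding, Gen3: 8 GT/s with 128b/130b encoding). That the X520 negotiates a Gen2 link is my assumption here, not something I measured, and protocol overhead is ignored, so treat the numbers as upper bounds.

```python
# Rough PCIe link budget. Values are raw line rate after encoding,
# before protocol overhead, so real throughput is somewhat lower.
GBIT_PER_LANE = {
    2: 5.0 * 8 / 10,     # Gen2: 5 GT/s, 8b/10b encoding
    3: 8.0 * 128 / 130,  # Gen3: 8 GT/s, 128b/130b encoding
}


def link_gbit(gen: int, lanes: int) -> float:
    """Return the approximate link bandwidth in Gbit/s."""
    return GBIT_PER_LANE[gen] * lanes


# Assumption: the 10G NIC runs as a Gen2 device on the x4 M.2 link.
nic_link = link_gbit(gen=2, lanes=4)
# The SATA controller card uses a Gen3 x2 link (from the parts list).
sata_link = link_gbit(gen=3, lanes=2)

print(f"NIC link:  {nic_link:.2f} Gbit/s (needs 10 for one SFP+ port)")
print(f"SATA link: {sata_link:.2f} Gbit/s, about {sata_link * 1000 / 8:.0f} MB/s shared by all ports")
```

So a single 10G port fits into an x4 link with room to spare, and the x2 link of the SATA controller is shared by all six ports, which is plenty for a couple of hard disks.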

To improve the airflow of the HDD cage I added an empty fan frame between the Noctua and the cage, and it did help a lot:

In its current shape and form, this server sips ~36W from the wall.

If I remove the 10G NIC and use the onboard 1G connection the power consumption drops down to ~33W. Basically this is the absolute minimum I could achieve with 2 hard disks, 2 NVME ssds, and 1 SATA ssd, and the fans connected.
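To put those wattages into perspective, here is a small sketch that converts them into yearly energy use. It assumes the server idles at the measured figure around the clock, which is a simplification, since load and disk activity will push it higher at times.

```python
HOURS_PER_YEAR = 24 * 365


def annual_kwh(watts: float) -> float:
    """Convert a constant power draw in watts into kWh per year."""
    return watts * HOURS_PER_YEAR / 1000


with_nic = annual_kwh(36)     # measured with the 10G NIC installed
without_nic = annual_kwh(33)  # measured with the onboard 1G port only

print(f"With 10G NIC:    {with_nic:.2f} kWh/year")
print(f"Without 10G NIC: {without_nic:.2f} kWh/year")
print(f"Cost of 10G:     {with_nic - without_nic:.2f} kWh/year")
```

Multiply the result by your own electricity price to get the yearly cost.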

This is what it looked like on the “testbed”. I benchmarked and stress-tested everything before I built the components into the case.