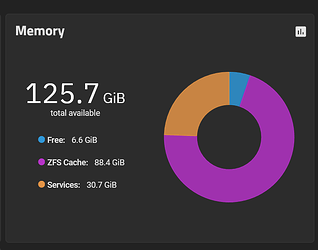

Here is my memory pie chart, isn’t it pretty?

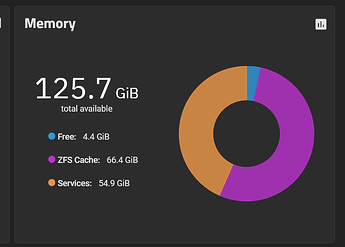

Now let’s go crazy and fill roughly 60 GiB of my memory as quickly as I possibly can (I have no swap):

`head -c 60000m /dev/zero | tail`

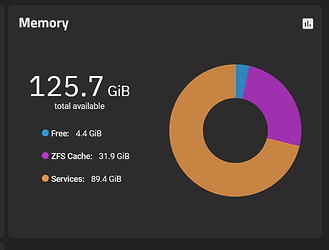

Going… going… and…

No problems: the ARC shrinks dynamically. As long as your free memory plus the ARC is enough to cover what the VM needs, you should be able to ignore that VM startup warning.
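
If you'd rather check the "free + ARC" number than eyeball a pie chart, here is a rough sketch. It assumes Linux with OpenZFS, where ARC stats live in `/proc/spl/kstat/zfs/arcstats`, and it assumes the ARC is not already counted in `MemAvailable`. The function names are made up for this example, and treating everything above `c_min` as reclaimable is an optimistic estimate, not a guarantee.

```shell
#!/bin/sh
# Rough estimate of memory a new VM could claim: MemAvailable + shrinkable ARC.
# Assumptions: Linux + OpenZFS; ARC is not already included in MemAvailable.

# Print ARC bytes above its minimum (size - c_min, never negative).
# $1 = path to an arcstats file (lines are "name type data").
arc_reclaimable_bytes() {
    awk '$1 == "size"  { size = $3 }
         $1 == "c_min" { cmin = $3 }
         END { r = size - cmin; if (r < 0) r = 0; printf "%.0f\n", r }' "$1"
}

# Print MemAvailable in bytes. $1 = path to a meminfo file (values are in KiB).
mem_available_bytes() {
    awk '$1 == "MemAvailable:" { printf "%.0f\n", $2 * 1024 }' "$1"
}

# Print the combined headroom in GiB. $1 = arcstats path, $2 = meminfo path.
headroom_gib() {
    arc=$(arc_reclaimable_bytes "$1")
    avail=$(mem_available_bytes "$2")
    awk -v a="$arc" -v m="$avail" 'BEGIN { printf "%.1f\n", (a + m) / 1073741824 }'
}

# Only report when the ZFS kstat file is actually present on this machine.
if [ -r /proc/spl/kstat/zfs/arcstats ]; then
    echo "Approx. headroom: $(headroom_gib /proc/spl/kstat/zfs/arcstats /proc/meminfo) GiB"
fi
```

If the number it prints is comfortably above the memory you assigned to the VM, you are in the same situation as the test above.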