Actually, a single drive could be described as a “one-way mirror”, and one can move up to “two-way”, or down from “two-way” to “one-way”, in exactly the same manner that one can change between two-way and three-way. It’s all the same class of vdev.

Hi @NVS, again, thank you for the time you spent clearing the fog; unfortunately, it seems we are in autumn. But we should not forget that the software we are talking about is a derivative of commercial software.

My expectation of a fast-reading configuration (what I call RAID10: 2 vdevs with 2 disks per vdev) is not realistic with TrueNAS SCALE at the moment. Maybe I’m wrong about that. You saved me time!

PS: My previous post was not personal; it only described my general impression. It seems you share it.

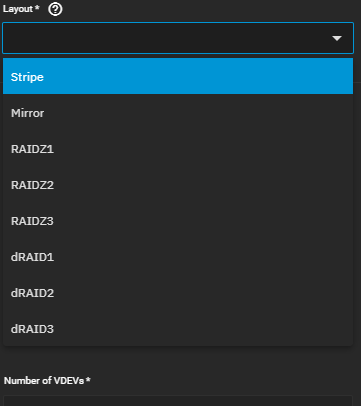

There are a lot of posts here, and newcomers are not going to read all of them. Using the correct terminology avoids misunderstandings. And as you point out yourself, there is no RAID10 in the dropdown:

I apologise. Yes - you did explain that. My mistake.

No, I did not misunderstand, I understood that you had tested across these configurations. What I am saying is that I think future tests should concentrate on just the first set, because there doesn’t seem to be an issue with unmirrored stripes.

Unfortunately some people here seem to be suffering from several false impressions:

- Undertaking meaningful performance measurements is complicated. Systems are complex: you need to understand how they work, decide what you want to measure, and design tests specifically around that, so that you are testing and measuring the right things and the measurements are not being skewed by other parts of the system. When I was an IT manager, and at one point ran performance measurement as a specific project, I had entire teams of people designing, building, running and analysing performance tests using specialised software (“Loadrunner”). Things are a bit more standardised these days, but it is still a specialisation; I am not current with the tools in this space for any technology, and was never that knowledgeable about the Linux space anyway, hence my general advice rather than specifics on which tools to use.

- Diagnosing a straightforward system-breaking bug is relatively easy: you do X, the system breaks, you get a stack trace or crash dump, so it is relatively easy to narrow it down to the specific bit of code. Working out what causes transient bugs is harder: you do X, sometimes it works and sometimes it breaks, so you have to work out by experimentation what triggers it in order to narrow it down, but when it breaks you still get a stack trace etc. Diagnosing performance bugs is far, far harder, because the system works every time and recreating the issue takes a lot of work. You either need to run a trace that creates a huge mass of trace data (which requires special setup to collect and special tools to analyse in order to find the needle in the haystack), or try to narrow it down through guesswork and repeated experimentation.

- The advice being given here effectively comes from a group of semi-experienced and talented, but part-time and amateur, volunteers, and expectations need to be set accordingly.

Nevertheless, as a team, and with some advice, @nvs has made some great measurements that first showed that SCALE had network/SMB/disk issues when writing, and then, in stages, narrowed this down to mirror disk issues with both reading and writing. Whether this is yet narrow enough to convince a tech support team, or even a chain of tech support teams (TrueNAS → ZFS / Debian / Linux kernel), that there is a general issue rather than a single issue with a single system is not clear IMO.

At this stage @nvs has (I think) four options:

1. Drop the whole thing and live with the performance he gets.

2. Stop further testing, simplify and summarise the results he has achieved so that the case for a problem is made convincingly in the simplest possible way, and create tickets with the TrueNAS, Debian and ZFS teams to see if anyone picks it up and runs with it.

3. Continue trying to narrow it down further, in the hope that this will enable an irrefutable ticket to be raised with the correct support team, leading definitively to a fix in a much shorter time.

4. Do both 2. and 3.

I found an old thread via Google.

It confirms @NVS’s observations and shows performance measurements for different ZFS topologies.

Not nice, and it seems nothing has changed since. I guess we have to live with it, as @protopia mentioned.

For 4 disks, a RAIDZ2 pool seems the better configuration compared to a mirror pool of 2 vdevs with 2 drives each.

Hi @nvs @B52

Since we are struggling with similar problems, I tried to recreate your tests on my system. I always test with fio, but I’m trying dd now. Compression is off.

12x mirrors, rolled back to CORE 13.3:

root@workhorse[~]# dd if=/dev/zero of=/mnt/work/work_mirrored/999_DIV/999_speedtests/tmp.dat bs=1024k count=195k

199680+0 records in

199680+0 records out

209379655680 bytes transferred in 299.775622 secs (698454578 bytes/sec)

root@workhorse[~]# dd if=/mnt/work/work_mirrored/999_DIV/999_speedtests/tmp.dat of=/dev/null bs=1024k count=195k

^C128946+0 records in

128946+0 records out

135209680896 bytes transferred in 213.769294 secs (632502817 bytes/sec)
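dd on CORE reports its rate in bytes per second, while fio reports MiB/s, so the two are easy to misread side by side. A minimal Python sketch (the helper name is made up for illustration) that converts the dd summaries above:

```python
MIB = 2**20


def dd_rate_mib_s(bytes_transferred: int, seconds: float) -> float:
    """Convert dd's 'N bytes transferred in S secs' summary into MiB/s."""
    return bytes_transferred / seconds / MIB


# Numbers copied from the two dd runs above (the read run was interrupted with ^C).
write_rate = dd_rate_mib_s(209379655680, 299.775622)
read_rate = dd_rate_mib_s(135209680896, 213.769294)
print(f"write ~ {write_rate:.0f} MiB/s, read ~ {read_rate:.0f} MiB/s")
```

On these figures that works out to roughly 666 MiB/s written and 603 MiB/s read, which is the number to compare with fio's MiB/s output.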

Looking at zpool iostat, it seems dd reads from and writes to the disks at the same time during the read test. I would consider using something else for testing, as dd seems to do something strange here.

---------------------------------------------- ----- ----- ----- ----- ----- -----

capacity operations bandwidth

pool alloc free read write read write

---------------------------------------------- ----- ----- ----- ----- ----- -----

work 96.0T 13.1T 482 1.29K 482M 1.29G

mirror-0 8.00T 1.09T 42 109 42.6M 110M

gptid/61a22c20-bb52-4b2c-937c-4eea928bd3ca - - 23 52 23.8M 52.5M

gptid/551c7d52-e2a0-4c48-bac3-5daf1f472d59 - - 18 57 18.8M 57.4M

mirror-1 8.00T 1.09T 41 106 41.6M 107M

gptid/488203e6-6627-4021-984e-d484979a135f - - 18 53 18.8M 53.5M

gptid/820c30c9-ff6a-46de-920d-ed83105cdf85 - - 22 53 22.8M 53.5M

mirror-2 8.00T 1.09T 40 107 40.6M 108M

gptid/ad7f3645-835d-4844-900b-7031b53cb85c - - 21 55 21.8M 55.5M

gptid/760079a9-650e-4dfe-9f05-5d3d7de7d288 - - 18 52 18.8M 52.5M

mirror-3 8.00T 1.09T 40 110 40.6M 111M

gptid/2fee2704-5dfa-49a8-bcf2-d14ba85aff55 - - 18 59 18.8M 59.4M

gptid/e8369ee5-ee70-43e4-b549-b64983cfb250 - - 21 51 21.8M 51.5M

mirror-4 8.00T 1.09T 41 105 41.6M 106M

gptid/4e323c4f-b971-4a2b-8225-0a3dcba80f0c - - 17 55 17.8M 55.5M

da2p1 - - 23 50 23.8M 50.5M

mirror-5 8.00T 1.09T 39 113 39.6M 114M

gptid/0219d478-e88c-4bc0-972d-e6de46663e0d - - 17 62 17.8M 62.4M

gptid/10e1b6d8-3106-4ffd-b509-b7f45578c7d5 - - 21 51 21.8M 51.5M

mirror-6 8.00T 1.09T 36 111 36.6M 112M

gptid/417c988e-f3d0-4bd5-b3d9-b8c34d21695c - - 18 55 18.8M 55.5M

gptid/73c4688e-f81b-4a11-8571-78c3167e6547 - - 17 56 17.8M 56.4M

mirror-7 8.00T 1.09T 38 108 38.6M 108M

gptid/032fb767-6159-43cd-a40a-990b51123f54 - - 17 55 17.8M 54.5M

gptid/382f72ea-1003-4334-92ce-639375cf3878 - - 20 53 20.8M 53.5M

mirror-8 8.00T 1.09T 40 115 40.6M 116M

gptid/b46529bc-2d47-449a-b13c-dc7c7f8aa672 - - 18 62 18.8M 62.4M

gptid/533680d1-f50f-45ff-9ac3-bc2875ddfbe0 - - 21 53 21.8M 53.5M

mirror-9 8.00T 1.09T 41 107 41.6M 106M

gptid/53081341-52be-4dd5-a398-e30f146920da - - 19 55 19.8M 54.5M

gptid/efc591c5-29f8-417b-846c-a6f58a1d4e50 - - 21 52 21.8M 51.5M

mirror-10 8.01T 1.09T 41 108 41.6M 109M

gptid/8695c764-5680-415d-9869-11a2d44d5f6b - - 18 55 18.8M 55.5M

gptid/b20b35e1-21ee-494f-b8bb-88fa7a750198 - - 22 53 22.8M 53.5M

mirror-11 8.00T 1.09T 36 108 36.6M 109M

gptid/137e249a-ae35-4c63-bcaf-2f69d1469ac1 - - 15 56 15.8M 56.4M

gptid/d562b513-4069-464d-bec2-64989cd0e43e - - 20 52 20.8M 52.5M

cache - - - - - -

nvd2p1 425G 1.45T 32 49 32.7M 42.6M

---------------------------------------------- ----- ----- ----- ----- ----- -----
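To put a number on the “reading and writing at the same time” observation, the per-vdev bandwidth columns can be totalled. A rough Python sketch, assuming the seven-column row layout shown above and 1024-based K/M/G suffixes (both are assumptions, and the function names are hypothetical); only two rows are pasted in for brevity:

```python
# ASSUMPTION: zpool iostat's K/M/G/T suffixes are 1024-based.
SCALE = {"K": 2**10, "M": 2**20, "G": 2**30, "T": 2**40}


def to_bytes(field: str) -> float:
    """Convert a zpool iostat figure such as '42.6M' or '1.29G' to bytes."""
    if field[-1] in SCALE:
        return float(field[:-1]) * SCALE[field[-1]]
    return float(field)


def mirror_bandwidth(lines: list) -> dict:
    """Map each top-level 'mirror-N' row to (read_bytes, write_bytes) per second."""
    result = {}
    for line in lines:
        cols = line.split()
        # Row layout: name alloc free read_ops write_ops read_bw write_bw
        if len(cols) == 7 and cols[0].startswith("mirror-"):
            result[cols[0]] = (to_bytes(cols[5]), to_bytes(cols[6]))
    return result


sample = [
    "mirror-0 8.00T 1.09T 42 109 42.6M 110M",
    "mirror-1 8.00T 1.09T 41 106 41.6M 107M",
]
bw = mirror_bandwidth(sample)
total_read = sum(r for r, _ in bw.values())
total_write = sum(w for _, w in bw.values())
print(f"read {total_read / 2**20:.1f} MiB/s, write {total_write / 2**20:.1f} MiB/s")
```

One caveat worth ruling out first: if this table was the first sample printed by zpool iostat with an interval, OpenZFS reports that first sample over the whole time since the pool was imported rather than the current second; the -y flag omits it.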

IMO fio is better for judging real-world workloads. But with your command I also get weird results:

root@workhorse[.../work_mirrored/999_DIV/999_speedtests]# fio --name TESTSeqWrite --eta-newline=5s --filename=fio-tempfile-WSeq.dat --rw=write --size=500m --io_size=195g --blocksize=1024k --fsync=10000 --iodepth=32 --direct=1 --numjobs=1 --runtime=60 --group_reporting

TESTSeqWrite: (g=0): rw=write, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=psync, iodepth=32

fio-3.36

Starting 1 process

TESTSeqWrite: Laying out IO file (1 file / 500MiB)

note: both iodepth >= 1 and synchronous I/O engine are selected, queue depth will be capped at 1

Jobs: 1 (f=1): [W(1)][12.7%][w=4135MiB/s][w=4134 IOPS][eta 00m:48s]

Jobs: 1 (f=1): [W(1)][21.8%][w=3348MiB/s][w=3347 IOPS][eta 00m:43s]

Jobs: 1 (f=1): [W(1)][29.8%][w=3147MiB/s][w=3147 IOPS][eta 00m:40s]

Jobs: 1 (f=1): [W(1)][37.9%][w=2925MiB/s][w=2925 IOPS][eta 00m:36s]

Jobs: 1 (f=1): [W(1)][46.6%][w=4232MiB/s][w=4232 IOPS][eta 00m:31s]

Jobs: 1 (f=1): [W(1)][57.1%][w=3942MiB/s][w=3941 IOPS][eta 00m:24s]

Jobs: 1 (f=1): [W(1)][65.5%][w=2841MiB/s][w=2841 IOPS][eta 00m:20s]

Jobs: 1 (f=1): [W(1)][74.1%][w=4030MiB/s][w=4030 IOPS][eta 00m:15s]

Jobs: 1 (f=1): [W(1)][82.8%][w=4026MiB/s][w=4025 IOPS][eta 00m:10s]

Jobs: 1 (f=1): [W(1)][93.0%][w=4198MiB/s][w=4198 IOPS][eta 00m:04s]

Jobs: 1 (f=1): [W(1)][100.0%][w=3403MiB/s][w=3403 IOPS][eta 00m:00s]

TESTSeqWrite: (groupid=0, jobs=1): err= 0: pid=42597: Fri Oct 4 06:52:18 2024

write: IOPS=3515, BW=3515MiB/s (3686MB/s)(195GiB/56800msec); 0 zone resets

clat (usec): min=105, max=31685, avg=268.37, stdev=154.69

lat (usec): min=119, max=31699, avg=281.73, stdev=157.26

clat percentiles (usec):

| 1.00th=[ 202], 5.00th=[ 206], 10.00th=[ 208], 20.00th=[ 210],

| 30.00th=[ 212], 40.00th=[ 215], 50.00th=[ 219], 60.00th=[ 293],

| 70.00th=[ 302], 80.00th=[ 310], 90.00th=[ 367], 95.00th=[ 375],

| 99.00th=[ 506], 99.50th=[ 537], 99.90th=[ 1270], 99.95th=[ 2638],

| 99.99th=[ 7373]

bw ( MiB/s): min= 2023, max= 4422, per=100.00%, avg=3519.69, stdev=628.80, samples=111

iops : min= 2023, max= 4422, avg=3519.14, stdev=628.79, samples=111

lat (usec) : 250=56.73%, 500=42.20%, 750=0.81%, 1000=0.07%

lat (msec) : 2=0.13%, 4=0.04%, 10=0.02%, 20=0.01%, 50=0.01%

fsync/fdatasync/sync_file_range:

sync (nsec): min=1676, max=39949, avg=7779.92, stdev=7421.44

sync percentiles (nsec):

| 1.00th=[ 1672], 5.00th=[ 1672], 10.00th=[ 1816], 20.00th=[ 2024],

| 30.00th=[ 2096], 40.00th=[ 2096], 50.00th=[ 2160], 60.00th=[10944],

| 70.00th=[12352], 80.00th=[12736], 90.00th=[13504], 95.00th=[14144],

| 99.00th=[40192], 99.50th=[40192], 99.90th=[40192], 99.95th=[40192],

| 99.99th=[40192]

cpu : usr=6.06%, sys=93.82%, ctx=1401, majf=0, minf=0

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,199680,0,38 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):

WRITE: bw=3515MiB/s (3686MB/s), 3515MiB/s-3515MiB/s (3686MB/s-3686MB/s), io=195GiB (209GB), run=56800-56800msec
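Some arithmetic on the command above may explain part of the “weird” result: with --size=500m and --io_size=195g, fio keeps rewriting the same 500 MiB file. Plausibly (not verified here) many of those overwrites are absorbed in RAM before ZFS flushes them to disk, and fio’s own note shows that the psync engine capped the queue depth at 1 despite --iodepth=32. A quick Python check of the overwrite count (helper name hypothetical):

```python
GIB, MIB = 2**30, 2**20


def rewrite_passes(io_size: int, file_size: int) -> float:
    """How often fio cycles over the same file when --io_size exceeds --size."""
    return io_size / file_size


passes = rewrite_passes(195 * GIB, 500 * MIB)
total_writes = (195 * GIB) // MIB  # number of 1 MiB writes (--blocksize=1024k)
print(f"~{passes:.0f} passes over the same file, {total_writes} writes in total")
```

That is about 399 passes, consistent with the 199680 one-MiB writes fio reports as issued.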

I use the following. Note that I temporarily turn off ARC data caching (primarycache=metadata) while writing, and then turn it on again, so that the read comes directly from disk:

root@workhorse[.../work_mirrored/999_DIV/999_speedtests]# zfs set primarycache=metadata work

root@workhorse[.../work_mirrored/999_DIV/999_speedtests]# fio --name TESTSeqWrite --eta-newline=5s --filename=fio-tempfile-WSeqARC-OFF.dat --rw=write --bs=1M --size=50G --numjobs=1 --time_based --runtime=60 --group_reporting

TESTSeqWrite: (g=0): rw=write, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=psync, iodepth=1

fio-3.36

Starting 1 process

TESTSeqWrite: Laying out IO file (1 file / 51200MiB)

Jobs: 1 (f=1): [W(1)][11.7%][w=950MiB/s][w=950 IOPS][eta 00m:53s]

Jobs: 1 (f=1): [W(1)][20.0%][w=908MiB/s][w=907 IOPS][eta 00m:48s]

Jobs: 1 (f=1): [W(1)][28.3%][w=902MiB/s][w=901 IOPS][eta 00m:43s]

Jobs: 1 (f=1): [W(1)][36.7%][w=914MiB/s][w=914 IOPS][eta 00m:38s]

Jobs: 1 (f=1): [W(1)][45.0%][w=965MiB/s][w=964 IOPS][eta 00m:33s]

Jobs: 1 (f=1): [W(1)][53.3%][w=954MiB/s][w=954 IOPS][eta 00m:28s]

Jobs: 1 (f=1): [W(1)][62.7%][w=689MiB/s][w=688 IOPS][eta 00m:22s]

Jobs: 1 (f=1): [W(1)][70.0%][w=906MiB/s][w=905 IOPS][eta 00m:18s]

Jobs: 1 (f=1): [W(1)][78.3%][w=930MiB/s][w=929 IOPS][eta 00m:13s]

Jobs: 1 (f=1): [W(1)][88.3%][w=979MiB/s][w=978 IOPS][eta 00m:07s]

Jobs: 1 (f=1): [W(1)][96.7%][w=699MiB/s][w=699 IOPS][eta 00m:02s]

Jobs: 1 (f=1): [W(1)][100.0%][w=989MiB/s][w=989 IOPS][eta 00m:00s]

TESTSeqWrite: (groupid=0, jobs=1): err= 0: pid=42691: Fri Oct 4 07:00:50 2024

write: IOPS=942, BW=943MiB/s (989MB/s)(55.3GiB/60001msec); 0 zone resets

clat (usec): min=91, max=4895, avg=1041.56, stdev=337.13

lat (usec): min=99, max=4952, avg=1057.20, stdev=346.52

clat percentiles (usec):

| 1.00th=[ 215], 5.00th=[ 334], 10.00th=[ 783], 20.00th=[ 955],

| 30.00th=[ 971], 40.00th=[ 996], 50.00th=[ 1037], 60.00th=[ 1090],

| 70.00th=[ 1123], 80.00th=[ 1139], 90.00th=[ 1221], 95.00th=[ 1369],

| 99.00th=[ 2442], 99.50th=[ 2638], 99.90th=[ 3163], 99.95th=[ 3326],

| 99.99th=[ 3687]

bw ( KiB/s): min=597333, max=3812118, per=100.00%, avg=966791.63, stdev=336507.47, samples=117

iops : min= 583, max= 3722, avg=943.66, stdev=328.62, samples=117

lat (usec) : 100=0.23%, 250=4.58%, 500=1.40%, 750=3.09%, 1000=31.75%

lat (msec) : 2=56.47%, 4=2.47%, 10=0.01%

cpu : usr=1.93%, sys=29.98%, ctx=53444, majf=0, minf=0

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,56578,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

WRITE: bw=943MiB/s (989MB/s), 943MiB/s-943MiB/s (989MB/s-989MB/s), io=55.3GiB (59.3GB), run=60001-60001msec

root@workhorse[.../work_mirrored/999_DIV/999_speedtests]# zfs set primarycache=all work

root@workhorse[.../work_mirrored/999_DIV/999_speedtests]# fio --name TESTSeqWrite --eta-newline=5s --filename=fio-tempfile-WSeqARC-OFF.dat --rw=read --bs=1M --size=50G --numjobs=1 --time_based --runtime=60 --group_reporting

TESTSeqWrite: (g=0): rw=read, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=psync, iodepth=1

fio-3.36

Starting 1 process

Jobs: 1 (f=1): [R(1)][11.7%][r=1089MiB/s][r=1088 IOPS][eta 00m:53s]

Jobs: 1 (f=1): [R(1)][20.0%][r=1194MiB/s][r=1194 IOPS][eta 00m:48s]

Jobs: 1 (f=1): [R(1)][28.3%][r=1038MiB/s][r=1038 IOPS][eta 00m:43s]

Jobs: 1 (f=1): [R(1)][36.7%][r=1571MiB/s][r=1570 IOPS][eta 00m:38s]

Jobs: 1 (f=1): [R(1)][45.0%][r=1318MiB/s][r=1318 IOPS][eta 00m:33s]

Jobs: 1 (f=1): [R(1)][53.3%][r=1709MiB/s][r=1709 IOPS][eta 00m:28s]

Jobs: 1 (f=1): [R(1)][62.7%][r=1292MiB/s][r=1292 IOPS][eta 00m:22s]

Jobs: 1 (f=1): [R(1)][70.5%][r=2462MiB/s][r=2461 IOPS][eta 00m:18s]

Jobs: 1 (f=1): [R(1)][80.0%][r=2678MiB/s][r=2677 IOPS][eta 00m:12s]

Jobs: 1 (f=1): [R(1)][88.3%][r=2552MiB/s][r=2551 IOPS][eta 00m:07s]

Jobs: 1 (f=1): [R(1)][96.7%][r=1879MiB/s][r=1878 IOPS][eta 00m:02s]

Jobs: 1 (f=1): [R(1)][100.0%][r=2676MiB/s][r=2676 IOPS][eta 00m:00s]

TESTSeqWrite: (groupid=0, jobs=1): err= 0: pid=42736: Fri Oct 4 07:04:17 2024

read: IOPS=1684, BW=1684MiB/s (1766MB/s)(98.7GiB/60001msec)

clat (usec): min=207, max=59062, avg=590.24, stdev=606.70

lat (usec): min=208, max=59063, avg=591.05, stdev=606.71

clat percentiles (usec):

| 1.00th=[ 255], 5.00th=[ 330], 10.00th=[ 343], 20.00th=[ 355],

| 30.00th=[ 375], 40.00th=[ 392], 50.00th=[ 461], 60.00th=[ 502],

| 70.00th=[ 644], 80.00th=[ 799], 90.00th=[ 930], 95.00th=[ 1057],

| 99.00th=[ 2180], 99.50th=[ 2835], 99.90th=[ 6390], 99.95th=[10421],

| 99.99th=[21365]

bw ( MiB/s): min= 714, max= 2807, per=99.91%, avg=1682.50, stdev=603.52, samples=117

iops : min= 714, max= 2807, avg=1682.03, stdev=603.53, samples=117

lat (usec) : 250=0.82%, 500=58.66%, 750=16.46%, 1000=17.97%

lat (msec) : 2=4.58%, 4=1.26%, 10=0.20%, 20=0.04%, 50=0.01%

lat (msec) : 100=0.01%

cpu : usr=1.05%, sys=94.61%, ctx=3650, majf=0, minf=259

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=101045,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=1684MiB/s (1766MB/s), 1684MiB/s-1684MiB/s (1766MB/s-1766MB/s), io=98.7GiB (106GB), run=60001-60001msec

root@workhorse[.../work_mirrored/999_DIV/999_speedtests]#

This matches what I see in real-world workload tests over SMB very well.

Not great speeds for 24 disks, but OK. Let me know if I should also retest my other server with RAIDZ2 pools, which did not improve significantly after downgrading to 13.3 and still reads slowly.
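Breaking the pool-level fio results above down per disk gives a feel for “not great speeds for 24 disks”. A small Python sketch that simply divides evenly, which is an idealisation (real I/O is not spread perfectly evenly across disks):

```python
def per_unit(pool_mib_s: float, units: int) -> float:
    """Spread a pool-level throughput figure evenly across disks or vdevs."""
    return pool_mib_s / units


# 12 two-way mirrors = 24 disks. Reads may be served by either side of
# each mirror; every write has to land on both disks of a vdev.
read_per_disk = per_unit(1684, 24)
write_per_vdev = per_unit(943, 12)
print(f"read  ~ {read_per_disk:.0f} MiB/s per disk")
print(f"write ~ {write_per_vdev:.0f} MiB/s per mirror vdev")
```

That is roughly 70 MiB/s per disk for reads and about 79 MiB/s per mirror vdev for writes.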

Would be interesting to see what you get with my testing approach.

Hi @simonj ,

Thanks for testing as well; it seems we may be troubleshooting the same thing.

I repeated your commands on my high-spec system, using TrueNAS 13.3 configured as “raid10” (aka 2 vdevs with 2 drives per vdev), with dataset compression turned off:

# WRITE TEST:

zfs set primarycache=metadata nas_data1

fio --name TESTSeqWrite --eta-newline=5s --filename=fio-tempfile-WSeqARC-OFF.dat --rw=write --bs=1M --size=50G --numjobs=1 --time_based --runtime=60 --group_reporting

WRITE: bw=552MiB/s (579MB/s), 552MiB/s-552MiB/s (579MB/s-579MB/s), io=32.4GiB (34.8GB), run=60002-60002msec

# READ TEST:

zfs set primarycache=all nas_data1

fio --name TESTSeqWrite --eta-newline=5s --filename=fio-tempfile-WSeqARC-OFF.dat --rw=read --bs=1M --size=50G --numjobs=1 --time_based --runtime=60 --group_reporting

READ: bw=6491MiB/s (6806MB/s), 6491MiB/s-6491MiB/s (6806MB/s-6806MB/s), io=380GiB (408GB), run=60001-60001msec

The write speed test looks great and matches the expected “theoretical” write speed for that configuration.

I’m not sure what to conclude from the read speed test; I guess the number is that high due to caching? (I have 4x 20 TB CMR HDDs, so they cannot reach that number without some sort of caching happening.)
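A quick sanity check shows why these numbers cannot come from the disks. Assuming (not measured here) a best-case sequential rate of about 280 MB/s for a 20 TB CMR drive, four drives top out near 1120 MB/s. A Python sketch (function name hypothetical):

```python
def disk_ceiling_check(observed_mb_s: float, disks: int, per_disk_mb_s: float):
    """Compare a measured rate with what the disks alone could deliver."""
    ceiling = disks * per_disk_mb_s
    return observed_mb_s <= ceiling, observed_mb_s / ceiling


# ASSUMPTION: ~280 MB/s sequential per 20 TB CMR HDD (outer tracks, best case).
# Observed: READ bw=6491MiB/s (6806MB/s) from the run above.
plausible, ratio = disk_ceiling_check(6806, disks=4, per_disk_mb_s=280)
print(plausible, f"{ratio:.1f}x the best-case disk ceiling")
```

The measured 6806 MB/s is about six times that ceiling, so most of it is almost certainly being served from cache.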

Hm. That does look like reading from ARC. Are you sure it’s reading the exact file you created before with ARC off? You can also just turn ARC off for both tests to make sure it’s not caching.

Otherwise, it’s always good to look at zpool iostat -v 1 to see what the disks are actually doing.

I copy-pasted your exact commands, and that is the output I get. I’m not sure how to check that it’s reading the exact same file. Here is the output of zpool iostat -v 1 while it’s reading with your command:

zpool iostat -v 1

capacity operations bandwidth

pool alloc free read write read write

-------------------------------------- ----- ----- ----- ----- ----- -----

boot-pool 1.25G 215G 5 3 80.9K 24.5K

ada2p2 1.25G 215G 5 3 80.9K 24.5K

-------------------------------------- ----- ----- ----- ----- ----- -----

nas_data1 50.1G 36.3T 0 183 678 111M

mirror-0 25.8G 18.2T 0 91 337 57.3M

gptid/561f15f1-826f-11ef-a9e2-04d9f5f3d8ce - - 0 47 16 7 28.6M

gptid/560ccc0a-826f-11ef-a9e2-04d9f5f3d8ce - - 0 44 17 0 28.6M

mirror-1 24.3G 18.2T 0 91 340 54.2M

gptid/5617c9a6-826f-11ef-a9e2-04d9f5f3d8ce - - 0 45 16 7 27.1M

gptid/56260e35-826f-11ef-a9e2-04d9f5f3d8ce - - 0 46 17

This time I got an even higher read speed, BTW:

zfs set primarycache=all nas_data1

fio --name TESTSeqWrite --eta-newline=5s --filename=fio-tempfile-WSeqARC-OFF.dat --rw=read --bs=1M --size=50G --numjobs=1 --time_based --runtime=60 --group_reporting

TESTSeqWrite: (g=0): rw=read, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=psync, iodepth=1

READ: bw=9292MiB/s (9743MB/s), 9292MiB/s-9292MiB/s (9743MB/s-9743MB/s), io=544GiB (585GB), run=60001-60001msec

After running zfs set primarycache=metadata nas_data1 and then the read test command, I get even higher speeds:

zfs set primarycache=metadata nas_data1

fio --name TESTSeqWrite --eta-newline=5s --filename=fio-tempfile-WSeqARC-OFF.dat --rw=read --bs=1M --size=50G --numjobs=1 --time_based --runtime=60 --group_reporting

READ: bw=15.2GiB/s (16.4GB/s), 15.2GiB/s-15.2GiB/s (16.4GB/s-16.4GB/s), io=914GiB (981GB), run=60001-60001msec

So it looks to me like something is not disabling caching correctly?

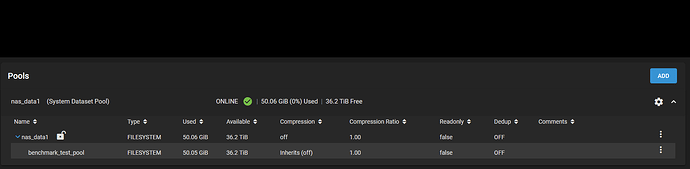

My pool/dataset setup looks like this:

@nvs Probably you are reading the same file you wrote earlier with cache on. You would need to put another filename in the fio command or delete the file to make sure you write a new file, which is not cached.

The ARC setting (all/metadata/none) matters at the time the file is written. If you write with ARC on and turn it off later, the file is still in the cache and is read from there.

Hi @NVS, I rebooted the server after my test to make sure the ARC was empty. With ARC, my 10 Gb network is fully saturated after the first or second read. Without it, I saw close to 400 MB/s but expected between 700 and 800 MB/s. It seems reads only use half of the 4 disks, just like writes. (I’m talking about the 4-disk mirror on SCALE.)
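The explanation above can be illustrated with a deliberately simplified Python toy model. It is not ZFS’s ARC implementation; it only encodes the behaviour described in the post: the primarycache-style policy decides what gets added to the cache, while data cached earlier keeps producing hits. Class and method names are made up:

```python
class ToyCache:
    """Toy model of the explanation above, NOT of ZFS's real ARC code:
    the policy (like primarycache) only decides what gets added to the
    cache; data that is already cached keeps producing cache hits."""

    def __init__(self) -> None:
        self.policy = "all"   # "all", "metadata" or "none"
        self.cached = set()   # names of files whose data is cached

    def write(self, name: str) -> None:
        if self.policy == "all":
            self.cached.add(name)

    def read(self, name: str) -> str:
        if name in self.cached:
            return "cache"
        if self.policy == "all":
            self.cached.add(name)
        return "disk"


arc = ToyCache()
arc.write("old.dat")        # written earlier while caching was on
arc.policy = "metadata"     # caching of file data switched off afterwards
print(arc.read("old.dat"))  # still served from cache
arc.write("new.dat")        # fresh file written with caching off
print(arc.read("new.dat"))  # has to come from disk
```

This is why writing a new file after switching to primarycache=metadata matters before running the read test.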

@simonj Unfortunately I am not familiar with how the “zfs set primarycache” command works. I would need a bit more guidance on how to use it correctly. I see there are three options: all/metadata/none.

First, it would be good to know whether I applied this command to the correct dataset at all (see the screenshot in my previous post). I ran the zfs commands (see my previous post) on the parent dataset called “nas_data1”. However, I am writing the test file into the nas_data1/benchmark_test_pool child dataset. So maybe I did that wrong, and the zfs commands should actually target nas_data1/benchmark_test_pool instead? Could you confirm that?

If so, could you tell me which command to use to set the nas_data1 parent dataset back to the default?

Next, I would like to repeat your fio read/write commands. The file will go into nas_data1/benchmark_test_pool. Could you tell me exactly which commands/steps I should take for testing?

I.e. something like this:

- Delete any existing fio file in nas_data1/benchmark_test_pool

- zfs set primarycache=none nas_data1/benchmark_test_pool

- run fio write command

- Delete any existing fio file in nas_data1/benchmark_test_pool

- run fio read command

The above commands may be wrong; I just wanted to give an impression of the kind of instructions that would be useful, so I can make sure I do the next test correctly. Thanks.

P.S. Please let me know if you want me to run any other tests on this “high-spec” machine, as I have to switch back to my “low-spec” machine again soon.

BTW, I did some more reading in the official TrueNAS docs about expected read/write performance for a mirror.

On this page, about 70% down the page: ZFS Primer | TrueNAS Documentation Hub

[…]

These resources can also help determine the RAID configuration best suited to the specific storage requirements:

First post in the first link mentions in the table:

# Spares Parity Lost Read Write Raid level

1 0 0.00 0.00% 96 96 Striping

2 0 48.00 50.00% 96 1 Mirroring

Where: The '#' symbol is the number of disks in the pool, lost means lost capacity (due to parity or spares), read and write are the multiplier of a single disk speed, assuming that the disk is the bottleneck.

^ This states that the expected read speed for a mirror should scale directly with the number of disks (as we also expected).
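The rule of thumb from the quoted table can be written down as a tiny model. A Python sketch for a pool of striped mirror vdevs, assuming ideal scaling: reads scale with the total number of disks (as the table says), and writes with the number of vdevs (the usual assumption, since every disk in a mirror stores the same data). The 250 MB/s per-disk figure is purely illustrative:

```python
def mirror_pool_theory(vdevs: int, width: int, disk_mb_s: float):
    """Idealised sequential throughput of a pool of striped mirror vdevs.

    Reads: any disk in a mirror can serve a block, so all vdevs*width disks
    count. Writes: every disk in a mirror stores the same data, so only
    the number of vdevs counts.
    """
    read = vdevs * width * disk_mb_s
    write = vdevs * disk_mb_s
    return read, write


# "RAID10" as used in this thread: 2 vdevs x 2-way mirror.
# ASSUMPTION: 250 MB/s per disk, purely for illustration.
print(mirror_pool_theory(vdevs=2, width=2, disk_mb_s=250))
```

For the 2 x 2 layout discussed in this thread, the model predicts reads of 4x and writes of 2x a single disk.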

The second link mentions:

Does it make RAID-Z the winner? Let’s check reads:

- Mirroring n disks will give you n times a single disk’s IOPS and bandwidth read performance. And on top, each disk gets to position its head independently of the others, which will help random read performance for both IOPS and bandwidth.

- RAID-Z would still give you a single disk’s performance for IOPS, but n-1 times aggregate bandwidth of a single disk. This time, though, the reads need to be correlated, because ZFS can only read groups of blocks across the disks that are supposed to hang out together. Good for sequential reads, bad for random reads.

Assuming a workload that cares about both reads and writes, and assuming that when the going gets tough, IOPS is more important than bandwidth, I’d opt for mirroring whenever I can.

^Equally states that mirroring n disks will give n times a single disk’s IOPS and bandwidth read performance.
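The second quote’s comparison can be expressed the same way. A Python sketch of those rules of thumb for n disks in a single vdev; the raidz branch uses n - 1 as in the quote (single parity), and the per-disk IOPS and MB/s figures are illustrative assumptions:

```python
def read_theory(layout: str, n: int, disk_iops: float, disk_mb_s: float):
    """Rule-of-thumb read performance for n disks, as quoted above.

    mirror: n x IOPS and n x bandwidth
    raidz:  1 x IOPS and (n - 1) x bandwidth (single parity, as in the quote)
    """
    if layout == "mirror":
        return n * disk_iops, n * disk_mb_s
    if layout == "raidz":
        return disk_iops, (n - 1) * disk_mb_s
    raise ValueError(f"unknown layout: {layout}")


# ASSUMPTION: 200 IOPS and 250 MB/s per disk, for illustration only.
for layout in ("mirror", "raidz"):
    iops, mb_s = read_theory(layout, 4, 200, 250)
    print(f"{layout:6}: {iops} IOPS, {mb_s} MB/s")
```

With 4 disks, the mirror comes out ahead on both read IOPS (800 vs 200) and read bandwidth (1000 vs 750 MB/s) under these assumptions.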

So both references (experts) that TrueNAS itself cites in the official documentation state that the read speed for a mirror should scale with the number of disks, which we have pretty much shown is not the case. Shouldn’t this be reason enough for iX to take a look at this? (Otherwise the current documentation could be considered misleading.)

I booted the same mirror with 2 disks removed (a degraded mirror, effectively a stripe; maybe there is some overhead caused by the missing disks) and got 320 MB/s, versus 380 to 400 MB/s when not degraded.

So, for reads, a healthy 4-disk mirror pool is far from the n-disk performance described in the official manual.
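The degraded-pool result is a useful cross-check. If the healthy pool really read from all 4 disks, removing one disk from each mirror should roughly halve read throughput. A Python sketch of that expectation, using the figures from the post (function name hypothetical):

```python
def expected_if_reads_scale(healthy_mb_s: float, disks_before: int, disks_after: int) -> float:
    """Predicted read rate after losing disks, IF reads scaled with disk count."""
    return healthy_mb_s * disks_after / disks_before


# Figures from the post above: 380-400 MB/s healthy (4 disks), 320 MB/s degraded (2 disks).
for healthy in (380, 400):
    predicted = expected_if_reads_scale(healthy, 4, 2)
    print(f"healthy {healthy} MB/s -> predicted {predicted:.0f}, measured 320 "
          f"({320 / healthy:.0%} of healthy)")
```

The prediction is 190-200 MB/s, but 320 MB/s was measured (80-84% of healthy). That is consistent with, though not proof of, the healthy pool reading mostly from one disk per mirror.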

Hi @nvs

It should work like this:

zfs set primarycache=metadata nas_data1 #The pool.

your fio write test command with a new filename

(optional: zfs set primarycache=all nas_data1 )

your fio read test command reading from the file you created above.

Observe what the disks do in the meantime with zpool iostat -v 1 in a second terminal.

If the whole ARC thing doesn’t work, just write and read a file that is at least double the size of your RAM, and observe the speeds towards the end of the test with zpool iostat, so you see your actual read-from-disk performance.
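For the “at least double your RAM” approach, the file size can be derived from installed memory. A minimal Python sketch (helper names are hypothetical; it assumes os.sysconf exposes SC_PAGE_SIZE and SC_PHYS_PAGES, which is the case on Linux):

```python
import math
import os


def min_test_size_gib(ram_bytes: int, factor: int = 2) -> int:
    """Smallest whole-GiB file size that is `factor` times the installed RAM."""
    return math.ceil(factor * ram_bytes / 2**30)


def fio_size_flag(ram_bytes: int) -> str:
    """Format the size as a fio --size option (fio reads G as GiB by default)."""
    return f"--size={min_test_size_gib(ram_bytes)}G"


if __name__ == "__main__":
    # Physical RAM as reported by sysconf (works on Linux; assumed elsewhere).
    ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    print(fio_size_flag(ram))
```

For the 128 GB machine mentioned in this thread that gives --size=256G. Note that with --runtime=60 in the command, fio stops after 60 seconds whether or not the whole file has been written, so for this test the runtime limit would need to be raised or removed.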

Otherwise:

IMO there is definitely a bug in TrueNAS SCALE. It might only affect older systems. You seem to have a similar CPU generation to mine.

For me, the 24 disks as mirrors did scale. Not to the full theoretical max of 24 x 240 MB/s ≈ 5.8 GB/s (that’s unrealistic), but to something around 1.5-2 GB/s, which seems in line with what can be expected. But only after downgrading from SCALE to CORE 13.3.
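Using the post’s own figure of 24 disks at 240 MB/s each, the measured range can be expressed as a fraction of the idealised total. A small Python sketch (function name hypothetical):

```python
def efficiency(measured: float, disks: int, per_disk: float) -> float:
    """Measured pool throughput as a fraction of disks x single-disk speed."""
    return measured / (disks * per_disk)


theory_mb_s = 24 * 240  # idealised total from the post, in MB/s
for measured in (1500, 2000):
    print(f"{measured} MB/s = {efficiency(measured, 24, 240):.0%} of {theory_mb_s} MB/s")
```

That puts 1.5-2 GB/s at roughly 26-35% of 5760 MB/s.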

Hi @simonj, my server has an Intel Xeon E3-1270 v6 CPU; not the newest, but a few generations younger and more powerful. So for me, I guess the CPU is not the limiting factor.

Hi, I have done exactly that before; see the results in my previous post.

Can you be concrete about how exactly you want me to “just write and read a file that is at least double my RAM”? Do you just want me to increase the fio file size to 256 GB (RAM is 128 GB on this machine)?

My testing results (with dd) in some posts further up have shown that the read/write speed for different pool configurations were pretty much identical for TrueNAS SCALE vs TrueNAS CORE 13.3 for me. Note that the recent tests I did were on what I called a “high-spec” machine having a Ryzen 9 5950X CPU with 128 GB RAM.

What generally became clear with the dd testing was that when mirrors are involved, the read performance does not come anywhere near the theoretical numbers (even though the sources referenced in the official TrueNAS documentation say that mirroring n disks will give n times a single disk’s IOPS and bandwidth read performance). This leads me to conclude that the read performance I see is unexpectedly low, across both CORE and SCALE. @B52 has done testing as well, which seems to confirm this.

The 1.5-2 GB/s read speed you measure also seems too far from the theoretical values to me (you reach only roughly a third of the theoretical value referenced in the documentation). So this looks equally underperforming to me.

Regarding the difference between SCALE and CORE: one thing my testing did show is that I clearly got a lower SMB write speed on SCALE than on CORE. You can read the results in more detail in the posts from a few days ago if you like.

I think there are several things at play here:

At least in my case with very strong but older hardware:

1: On TrueNAS SCALE (24.04/24.10) I had a mysterious read speed cap of around 300 MB/s on everything: stripe, mirror and RAIDZ. Downgrading to CORE 13.3 solved it, at least for the mirror. On my other server with the same hardware (also on 13.3 now), reads on RAIDZ pools are still much slower than expected (around 400 MB/s). This clearly seems to be a bug (at least on older hardware), but it may not be limited to TrueNAS; it could also be ZFS on Linux in general.

2: In @nvs’s case, I’m not sure whether the results are due to inconsistent testing. I don’t think dd delivers meaningful results; when I tested with dd, it was reading and writing at the same time.

If you want to test again with FIO and no cache do exactly this:

#cd into a directory on your nas. Eg. cd /mnt/nas_data1/dataset/speedtest

zfs set primarycache=metadata nas_data1

fio --name TESTSeqWriteRead --eta-newline=5s --filename=fio-tempfile-WSeqARC-OFF_NEW.dat --rw=write --bs=1M --size=50G --numjobs=1 --time_based --runtime=60

fio --name TESTSeqWriteRead --eta-newline=5s --filename=fio-tempfile-WSeqARC-OFF_NEW.dat --rw=read --bs=1M --size=50G --numjobs=1 --time_based --runtime=60

rm fio-tempfile-WSeqARC-OFF_NEW.dat

# repeat if needed

#Watch iostat in another shell window

zpool iostat -v nas_data1 1

# Turn cache on again after testing:

zfs set primarycache=all nas_data1

3: In reality, the idea that stripes/mirrors/RAIDZ deliver exactly the single-disk performance multiplied by the number of disks is not true. In my case, my pool of 24 disks in 12 mirrored pairs delivers around 80 MB/s per disk at most. So @B52, getting 400 MB/s from 4 disks as two mirrors reflects what I’m seeing on my system. The main benefit of mirrors over RAIDZ is better IOPS.

If you look at this somewhat older article, you will see very similar speeds to what we are measuring here:

https://calomel.org/zfs_raid_speed_capacity.html
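The uncached test sequence from simonj’s post can also be captured as data, which makes it harder to accidentally reuse a previously cached file. A Python sketch that only builds and prints the commands (it does not run them); the fio flags are copied from the post, while the function names and the random filename scheme are illustrative assumptions:

```python
import shlex
import uuid
from typing import List, Optional


def fio_cmd(rw: str, filename: str) -> List[str]:
    """The fio invocation from the post above; only --rw and --filename vary."""
    return ["fio", "--name", "TESTSeqWriteRead", "--eta-newline=5s",
            f"--filename={filename}", f"--rw={rw}", "--bs=1M", "--size=50G",
            "--numjobs=1", "--time_based", "--runtime=60"]


def uncached_test_plan(pool: str, filename: Optional[str] = None) -> List[List[str]]:
    """Ordered steps: limit ARC to metadata, write a NEW file, read it back,
    clean up, then restore the default primarycache=all."""
    # A filename that has never been used cannot have data blocks in ARC yet.
    filename = filename or f"fio-uncached-{uuid.uuid4().hex[:8]}.dat"
    return [
        ["zfs", "set", "primarycache=metadata", pool],
        fio_cmd("write", filename),
        fio_cmd("read", filename),
        ["rm", filename],
        ["zfs", "set", "primarycache=all", pool],
    ]


for step in uncached_test_plan("nas_data1"):
    print(shlex.join(step))
```

Run the printed commands from inside a directory on the pool (e.g. cd /mnt/nas_data1/dataset/speedtest, as in the post), with zpool iostat -v nas_data1 1 open in a second shell.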

Agreed: if this is the behaviour you have seen, then it seems to be some kind of bug, possibly version-dependent or related to your specific hardware config. However, it is likely a different issue from what I see, because I don’t see this difference between the two TrueNAS versions as you do (at least not with the extensive dd testing I did).

Feel free to read back through the thread regarding the testing that was done. It was consistent testing with dd, and the results were reliably reproducible. I don’t think using dd with a 195 GB file is wrong, but I am happy to be corrected. However, at the moment the fio read command you sent has clearly produced cached measurements, so it certainly cannot be trusted. I can give the commands you sent one more try tomorrow; I hope they will result in a meaningful read speed test.

We are not expecting the exact theoretical performance, of course. But again, the official TrueNAS documentation points to only 2 references on this topic, and both state that “mirroring n disks will give n times a single disk’s IOPS and bandwidth read performance”. I have found this in other sources online too. This creates the expectation that one would at least approach, let’s say, 80% of such values, but in reality it seems significantly lower, more like 30-50%. If there were valid proof that 30-50% of the theoretically expected speed is “normal”, that would be great, but the (official) resources I have found online suggest otherwise so far. (Also, by the way, the link you sent shows 100 MB/s write speed for a single drive, which is not comparable with my test setup here, which achieves significantly higher sequential write speeds.)

This really is a key question: What is really the expected read/write performance for a mirror config in ZFS? (and I don’t mean with that calling anyone’s measured results expected)