Hi,

I have the following hardware:

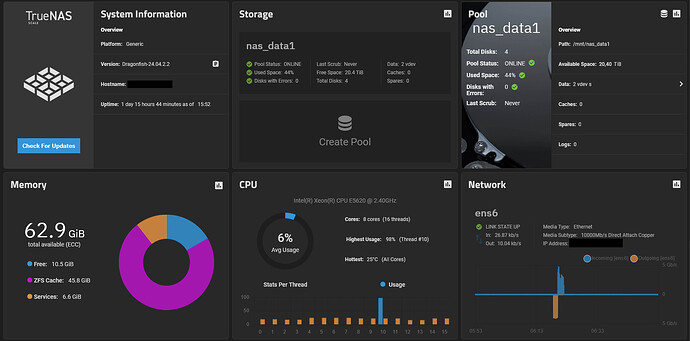

- CPU: Intel Xeon CPU E5620 @ 2.40 GHz

- RAM: 64 GiB ECC

- Boot drive: 256 GB Samsung SSD (SATA)

- Data drives: 4x 20TB Seagate EXOS X20 (SATA, CMR)

- Network: 10 Gbit/s

- It's a rather old machine, probably from around 2013 (IIRC it doesn't support AVX instructions, for example)

To give some background:

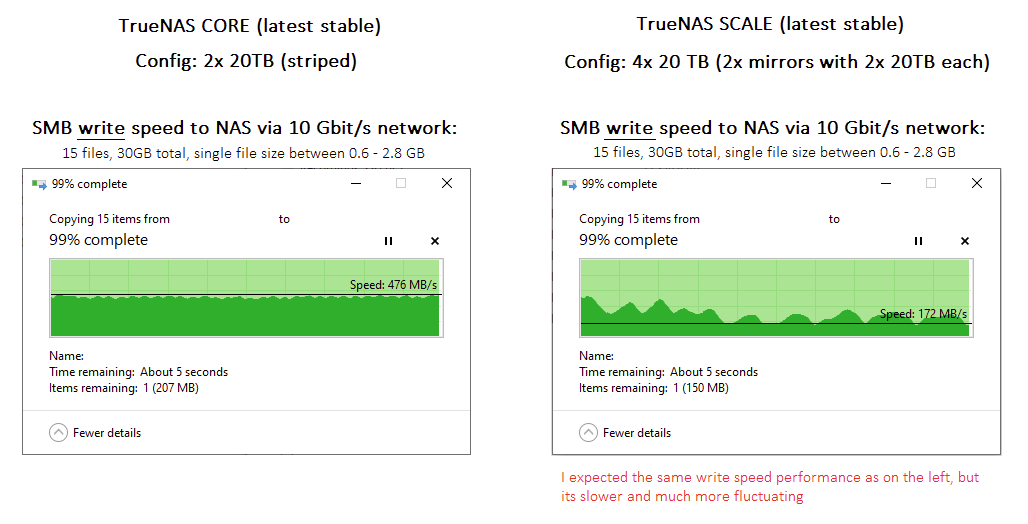

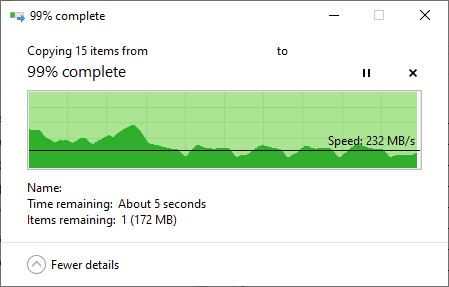

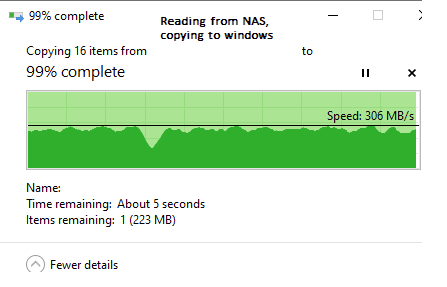

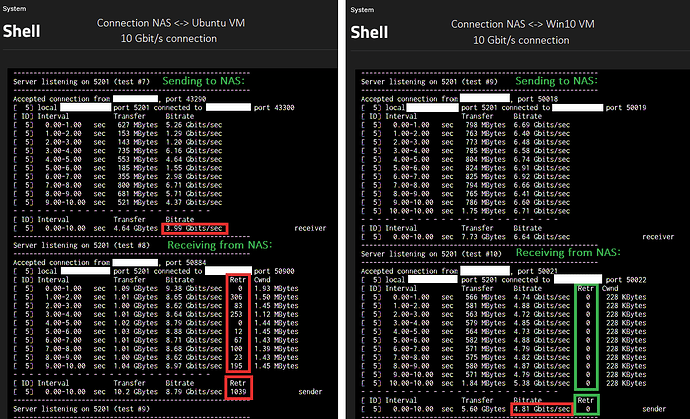

- Until last week, this server ran the latest TrueNAS CORE with 2x 20TB drives in a stripe configuration. I had an SMB share configured and got very decent sustained read and write performance to the NAS from a Windows 10 machine over the 10 Gbit/s connection. Sustained write performance was around 450-500 MB/s (see the screenshot below).

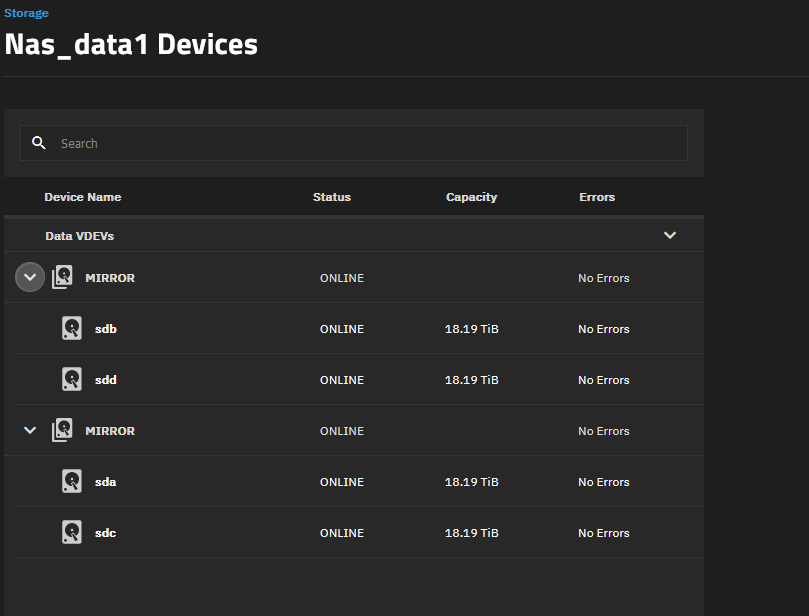

- This week I upgraded the server to the latest TrueNAS SCALE (fresh ISO install) and added two more of the same 20TB drives, bringing the total to 4x 20TB data drives. My idea was basically to mirror my original 2x 20TB stripe, which, if I understand correctly, should give me the same write performance as before and double the read performance. From what I read online, the correct way to do this is to create two VDEVs with 2x 20TB drives each. TrueNAS then combines both VDEVs in the pool automatically, giving me the ~40TB of usable space I wanted, with the data mirrored on two drives. I configured an SMB share again, but noticed that read/write performance is now worse than before, which is not what I expected.

- The screenshot below shows the difference in write performance:

Some additional notes/screenshots:

- The pool is encrypted (both before and now).

- In TrueNAS CORE I used the following SMB auxiliary parameters (to force SMB 3.1.1, encryption and signing):

```
server min protocol = SMB3_11
server smb encrypt = required
server signing = required
client min protocol = SMB3_11
client smb encrypt = required
client signing = required
```

- In TrueNAS SCALE I applied the same SMB parameters via the CLI with the following commands (because the auxiliary parameters field seems to have been disabled in the web UI):

```
cli
service smb update smb_options="server min protocol = SMB3_11\nserver smb encrypt = required\nserver signing = required\nclient min protocol = SMB3_11\nclient smb encrypt = required\nclient signing = required"
```
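Because the SCALE command packs all six options into one string, a dropped or mistyped option is easy to miss. A minimal Python sketch to check that the CORE auxiliary parameters and the SCALE `smb_options` string set the same options. It assumes the options in the CLI string are separated by the literal two-character sequence `\n` (or by real newlines, if the CLI expands them), and `parse_smb_options` is a hypothetical helper, not a TrueNAS or Samba API. Parameter names are normalized the way smb.conf treats them (case and whitespace are not significant).

```python
import re

# Auxiliary parameters as entered in TrueNAS CORE (one "key = value" per line).
CORE_AUX = """\
server min protocol = SMB3_11
server smb encrypt = required
server signing = required
client min protocol = SMB3_11
client smb encrypt = required
client signing = required
"""

# smb_options string as passed to `service smb update` in the SCALE CLI.
# Assumption: options are separated by the literal two characters "\n".
SCALE_OPTIONS = (
    "server min protocol = SMB3_11\\nserver smb encrypt = required\\n"
    "server signing = required\\nclient min protocol = SMB3_11\\n"
    "client smb encrypt = required\\nclient signing = required"
)


def parse_smb_options(text: str) -> dict[str, str]:
    """Parse smb.conf-style 'key = value' lines into a normalized dict."""
    options = {}
    # Split on a literal backslash-n as well as on real newlines.
    for line in re.split(r"\\n|\n", text):
        line = line.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and smb.conf comments
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a 'key = value' line: {line!r}")
        # smb.conf ignores case and whitespace in parameter names.
        options["".join(key.lower().split())] = value.strip()
    return options


if __name__ == "__main__":
    core = parse_smb_options(CORE_AUX)
    scale = parse_smb_options(SCALE_OPTIONS)
    print(core == scale)  # True for the two strings above
```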

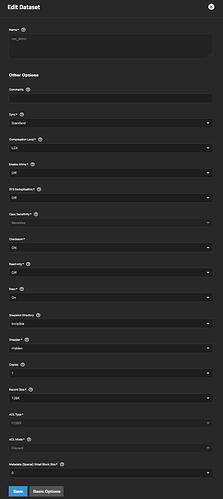

- TrueNAS SCALE screenshots:

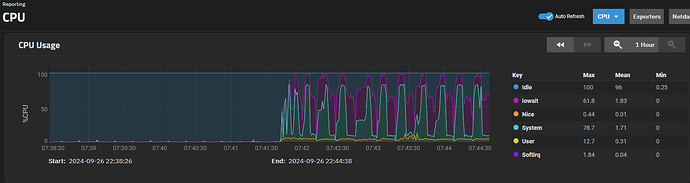

^ This shows the CPU usage while writing the same files as above (just twice as many).
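To compare the two pool layouts with an identical, repeatable workload instead of different batches of files, a minimal Python sketch that writes a fixed amount of incompressible data to a path and reports the throughput in MB/s. The function name is hypothetical; it measures one sequential stream from one client, so the result includes SMB, encryption and network overhead along with the pool itself.

```python
import os
import time


def measure_write_throughput(path: str, total_bytes: int,
                             block_size: int = 1 << 20) -> float:
    """Write total_bytes sequentially to path; return MB/s (10**6 bytes/s)."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    # Random data so that ZFS compression cannot inflate the result.
    block = os.urandom(block_size)
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        while written < total_bytes:
            chunk = block[: min(block_size, total_bytes - written)]
            f.write(chunk)
            written += len(chunk)
        f.flush()
        os.fsync(f.fileno())  # ask the OS to flush everything before stopping the clock
    elapsed = time.perf_counter() - start
    return written / elapsed / 1e6
```

For example, run `measure_write_throughput(r"\\truenas\share\bench.bin", 20 * 1024**3)` from the Windows machine before and after a configuration change; `truenas` and `share` are placeholders for the actual server and share names.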

If I understand correctly, with this configuration the write speed should be the same as with my previous configuration, and the read speed should even be ~2x faster.
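That expectation can be written down as a back-of-the-envelope estimate. A minimal Python sketch assuming idealized linear scaling: writes are spread across the top-level vdevs, every drive in a two-way mirror has to write each block, and reads can be served by either side of a mirror. The 250 MB/s per-drive figure is a hypothetical placeholder, not a measured or datasheet value, and the model ignores ZFS, encryption and SMB overhead, so the numbers are upper bounds rather than predictions.

```python
from dataclasses import dataclass


@dataclass
class Layout:
    name: str
    vdevs: int            # top-level vdevs in the pool
    drives_per_vdev: int  # 1 = plain disk (stripe member), 2 = two-way mirror


def estimate(layout: Layout, drive_tb: float, drive_mb_s: float,
             link_mb_s: float = 1250.0) -> tuple[float, float, float]:
    """Idealized (usable TB, sequential write MB/s, sequential read MB/s)."""
    # Each vdev stores one drive's worth of data (a mirror holds copies).
    usable_tb = layout.vdevs * drive_tb
    # Writes are spread across vdevs; every drive in a mirror writes each block.
    write = min(layout.vdevs * drive_mb_s, link_mb_s)
    # Reads can be served by any drive in a mirror, so all drives may contribute.
    read = min(layout.vdevs * layout.drives_per_vdev * drive_mb_s, link_mb_s)
    return usable_tb, write, read


if __name__ == "__main__":
    DRIVE_MB_S = 250  # hypothetical per-drive sequential rate, not a measurement
    for layout in (Layout("2x single-disk stripe", 2, 1),
                   Layout("2x two-way mirror", 2, 2)):
        usable, write, read = estimate(layout, drive_tb=20, drive_mb_s=DRIVE_MB_S)
        print(f"{layout.name}: {usable:.0f} TB usable, "
              f"~{write:.0f} MB/s write, ~{read:.0f} MB/s read")
    # 2x single-disk stripe: 40 TB usable, ~500 MB/s write, ~500 MB/s read
    # 2x two-way mirror: 40 TB usable, ~500 MB/s write, ~1000 MB/s read
```

Under these assumptions both layouts give 40 TB usable and the same sequential write ceiling, and only the read ceiling doubles. The 10 Gbit/s link (1250 MB/s before protocol overhead, the default `link_mb_s`) is not the limiting factor at these numbers.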

Any ideas what is causing the worse read/write performance? Is my setup correct for what I want to achieve, and is it the best configuration for this?

Thanks in advance for any feedback.