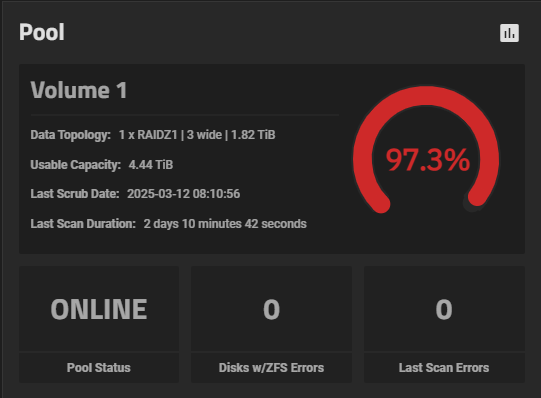

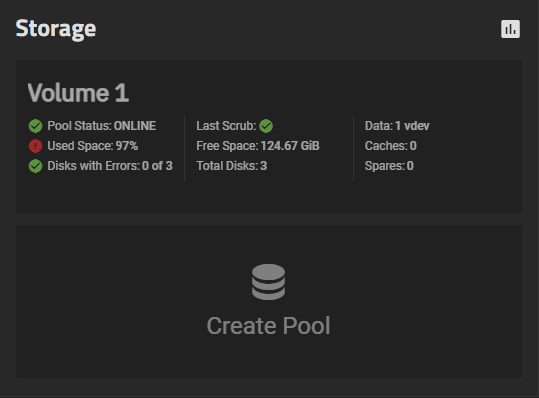

For a while now the disk space monitoring tool has been reporting the wrong numbers. I ran into something similar earlier, and it turned out to be bclone (block cloning), which the mv command used automatically. Because of it, the dashboard shows only 100 GB of storage left, even though I have more than 400 GB. I have really been struggling to get this fixed, because I want to avoid SMB errors and filling up the disk without realizing it.

Also, I have 3.64 TB of disk space in total.

Forgot to mention:

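| SIZE | ALLOC | FREE | CKPOINT | EXPANDSZ | FRAG | CAP | DEDUP | HEALTH | ALTROOT | BCLONE_USED | BCLONE_SAVED | BCLONE_RATIO |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 5.45T | 4.90T | 570G | - | - | 26% | 89% | 1.00x | ONLINE | /mnt | 331G | 939G | 3.83x |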
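As a rough sanity check on the pool line above, the CAP and BCLONE_RATIO columns can be recomputed from the other columns. This is only a minimal Python sketch: the helper name `to_gib` is made up for illustration, and the ratio formula, (used + saved) / used, is an assumption about how the ratio is derived rather than something stated in this thread.

```python
# Hypothetical helper: convert zpool-style sizes such as "5.45T" or "570G" to GiB.
UNITS = {"K": 1 / 1024**2, "M": 1 / 1024, "G": 1.0, "T": 1024.0}

def to_gib(text: str) -> float:
    return float(text[:-1]) * UNITS[text[-1]]

# Values copied from the pool line above.
size, alloc = to_gib("5.45T"), to_gib("4.90T")
bclone_used, bclone_saved = to_gib("331G"), to_gib("939G")

# Capacity: share of the pool that is allocated.
cap_percent = alloc / size * 100

# Assumed formula: space referenced through clones divided by space actually stored.
bclone_ratio = (bclone_used + bclone_saved) / bclone_used

print(f"CAP ~ {cap_percent:.1f}%")
print(f"BCLONE_RATIO ~ {bclone_ratio:.2f}x")
```

Both results land close to the reported 89% and 3.83x; the small differences would be explained by rounding in the displayed values.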

BCLONE, or block cloning, is a fairly new feature that is used when copying data. It actually saves space. Technology-wise, it is similar to hard links.

Any time you copy a file while block cloning is enabled and available, the data blocks are not actually copied. ZFS just creates a new file entry in the new location that points at the same blocks. Any change made through either entry is written to new blocks, so the changed parts become unique and are no longer part of the block clone series.

So, block cloning is GOOD and works like copy-on-write hard links.
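The behaviour described above can be sketched with a toy reference-counting model. This is not how ZFS is implemented; `ToyPool` and all of its methods are hypothetical names, and "blocks" here are just integers. It only illustrates that a clone shares blocks until one side is modified.

```python
from collections import Counter

class ToyPool:
    """Toy model only: files are lists of block IDs, and the pool counts references."""

    def __init__(self):
        self.files = {}        # file name -> list of block IDs
        self.refs = Counter()  # block ID -> number of files referencing it
        self.next_id = 0

    def _new_block(self):
        self.next_id += 1
        self.refs[self.next_id] = 1
        return self.next_id

    def write_file(self, name, n_blocks):
        self.files[name] = [self._new_block() for _ in range(n_blocks)]

    def clone(self, src, dst):
        # A clone copies block pointers, not data: every block gains one reference.
        self.files[dst] = list(self.files[src])
        for block in self.files[dst]:
            self.refs[block] += 1

    def overwrite_block(self, name, index):
        # Copy on write: the changed block becomes unique to this file.
        old = self.files[name][index]
        self.refs[old] -= 1
        if self.refs[old] == 0:
            del self.refs[old]
        self.files[name][index] = self._new_block()

    def blocks_stored(self):
        return len(self.refs)

pool = ToyPool()
pool.write_file("movie.mkv", 10)
pool.clone("movie.mkv", "copy.mkv")
print(pool.blocks_stored())  # blocks stored after cloning
pool.overwrite_block("copy.mkv", 0)
print(pool.blocks_stored())  # blocks stored after changing one block of the clone
```

In this model the clone costs nothing until it is edited, and an edit only costs the blocks that actually changed.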

Now, what might be eating up your space are ZFS snapshots. Any change to original files that are captured in a snapshot takes up additional space, because the snapshot keeps the old data. Snapshots are static; they never change until you destroy them.

Please post the output of zfs list -t all -r "Volume 1" in forum CODE tags, and we can see if snapshots are a problem for you.

Could you maybe say what you mean by forum code tags?

Here’s the output of that command, and it seems like I’ve got a few. But how are they taking up 939 GB??

NAME USED AVAIL REFER MOUNTPOINT

Volume 1 4.32T 115G 788G /mnt/Volume 1

Volume 1/.system 2.12G 115G 1.47G legacy

Volume 1/.system/configs-ae32c386e13840b2bf9c0083275e7941 11.9M 115G 11.9M legacy

Volume 1/.system/cores 27.2M 997M 27.2M legacy

Volume 1/.system/netdata-ae32c386e13840b2bf9c0083275e7941 623M 115G 623M legacy

Volume 1/.system/nfs 170K 115G 170K legacy

Volume 1/.system/samba4 884K 115G 437K legacy

Volume 1/.system/samba4@update--2025-01-16-12-05--SCALE-24.10.0.2 186K - 320K -

Volume 1/.system/samba4@update--2025-02-28-08-22--SCALE-24.10.1 261K - 394K -

Volume 1/Discord_Bots-k5bgdh 67.7G 166G 14.1G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-01-17_00-00 0B - 8.80G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-01-18_00-00 0B - 8.80G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-01-20_00-00 0B - 8.80G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-01-21_00-00 0B - 8.80G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-01-22_00-00 9.74M - 8.93G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-01-23_00-00 5.66M - 8.94G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-01-24_00-00 6.54M - 9.43G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-01-25_00-00 0B - 9.44G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-01-27_00-00 0B - 9.44G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-01-28_00-00 0B - 9.44G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-01-29_00-00 0B - 9.44G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-01-30_00-00 0B - 9.44G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-03-16_00-00 0B - 14.1G -

Volume 1/Discord_Bots-k5bgdh@auto-2025-03-23_00-00 0B - 14.1G -

Volume 1/alpine-dov8wc 50.8G 163G 3.29G -

Volume 1/ix-apps 3.43T 115G 176K /mnt/.ix-apps

Volume 1/ix-apps/app_configs 28.8M 115G 28.8M /mnt/.ix-apps/app_configs

Volume 1/ix-apps/app_mounts 3.39T 115G 202K /mnt/.ix-apps/app_mounts

Volume 1/ix-apps/app_mounts/homarr 6.54M 115G 128K /mnt/.ix-apps/app_mounts/homarr

Volume 1/ix-apps/app_mounts/homarr@2.0.15 0B - 128K -

Volume 1/ix-apps/app_mounts/homarr@2.0.17 0B - 128K -

Volume 1/ix-apps/app_mounts/homarr/data 6.42M 115G 2.28M /mnt/.ix-apps/app_mounts/homarr/data

Volume 1/ix-apps/app_mounts/homarr/data@2.0.15 2.07M - 2.11M -

Volume 1/ix-apps/app_mounts/homarr/data@2.0.17 2.07M - 2.14M -

Volume 1/ix-apps/app_mounts/minecraft 256K 115G 128K /mnt/.ix-apps/app_mounts/minecraft

Volume 1/ix-apps/app_mounts/minecraft/data 128K 115G 128K /mnt/.ix-apps/app_mounts/minecraft/data

Volume 1/ix-apps/app_mounts/navidrome 890M 115G 128K /mnt/.ix-apps/app_mounts/navidrome

Volume 1/ix-apps/app_mounts/navidrome@1.1.9 0B - 128K -

Volume 1/ix-apps/app_mounts/navidrome@1.1.10 0B - 128K -

Volume 1/ix-apps/app_mounts/navidrome@1.1.11 0B - 128K -

Volume 1/ix-apps/app_mounts/navidrome@1.1.12 0B - 128K -

Volume 1/ix-apps/app_mounts/navidrome@1.1.14 0B - 128K -

Volume 1/ix-apps/app_mounts/navidrome/data 890M 115G 203M /mnt/.ix-apps/app_mounts/navidrome/data

Volume 1/ix-apps/app_mounts/navidrome/data@1.1.9 170M - 171M -

Volume 1/ix-apps/app_mounts/navidrome/data@1.1.10 151M - 178M -

Volume 1/ix-apps/app_mounts/navidrome/data@1.1.11 153M - 179M -

Volume 1/ix-apps/app_mounts/navidrome/data@1.1.12 184M - 195M -

Volume 1/ix-apps/app_mounts/navidrome/data@1.1.14 7.21M - 202M -

Volume 1/ix-apps/app_mounts/nextcloud 3.28T 115G 128K /mnt/.ix-apps/app_mounts/nextcloud

Volume 1/ix-apps/app_mounts/nextcloud@1.5.15 0B - 149K -

Volume 1/ix-apps/app_mounts/nextcloud@1.5.18 0B - 149K -

Volume 1/ix-apps/app_mounts/nextcloud@1.6.3 0B - 149K -

Volume 1/ix-apps/app_mounts/nextcloud@1.6.7 0B - 149K -

Volume 1/ix-apps/app_mounts/nextcloud@1.6.9 0B - 149K -

Volume 1/ix-apps/app_mounts/nextcloud@1.6.12 0B - 149K -

Volume 1/ix-apps/app_mounts/nextcloud/data 3.27T 115G 1.70G /mnt/.ix-apps/app_mounts/nextcloud/data

Volume 1/ix-apps/app_mounts/nextcloud/data@1.5.15 3.78M - 406G -

Volume 1/ix-apps/app_mounts/nextcloud/data@1.5.18 11.7M - 407G -

Volume 1/ix-apps/app_mounts/nextcloud/data@1.6.3 58.3M - 1.83T -

Volume 1/ix-apps/app_mounts/nextcloud/data@1.6.7 33.2M - 1.85T -

Volume 1/ix-apps/app_mounts/nextcloud/data@1.6.9 74.1G - 2.30T -

Volume 1/ix-apps/app_mounts/nextcloud/data@1.6.12 946G - 3.15T -

Volume 1/ix-apps/app_mounts/nextcloud/html 1.93G 115G 532M /mnt/.ix-apps/app_mounts/nextcloud/html

Volume 1/ix-apps/app_mounts/nextcloud/html@1.5.15 218K - 454M -

Volume 1/ix-apps/app_mounts/nextcloud/html@1.5.18 218K - 454M -

Volume 1/ix-apps/app_mounts/nextcloud/html@1.6.3 454M - 455M -

Volume 1/ix-apps/app_mounts/nextcloud/html@1.6.7 282K - 532M -

Volume 1/ix-apps/app_mounts/nextcloud/html@1.6.9 282K - 532M -

Volume 1/ix-apps/app_mounts/nextcloud/html@1.6.12 0B - 532M -

Volume 1/ix-apps/app_mounts/nextcloud/postgres_data 1.18G 115G 139K /mnt/.ix-apps/app_mounts/nextcloud/postgres_data

Volume 1/ix-apps/app_mounts/nextcloud/postgres_data@1.5.15 73.1M - 109M -

Volume 1/ix-apps/app_mounts/nextcloud/postgres_data@1.5.18 92.4M - 137M -

Volume 1/ix-apps/app_mounts/nextcloud/postgres_data@1.6.3 198M - 300M -

Volume 1/ix-apps/app_mounts/nextcloud/postgres_data@1.6.7 195M - 319M -

Volume 1/ix-apps/app_mounts/nextcloud/postgres_data@1.6.9 222M - 368M -

Volume 1/ix-apps/app_mounts/nextcloud/postgres_data@1.6.12 245M - 390M -

Volume 1/ix-apps/app_mounts/nextcloudmain 57.9G 115G 139K /mnt/.ix-apps/app_mounts/nextcloudmain

Volume 1/ix-apps/app_mounts/nextcloudmain/data 57.3G 115G 57.3G /mnt/.ix-apps/app_mounts/nextcloudmain/data

Volume 1/ix-apps/app_mounts/nextcloudmain/html 547M 115G 547M /mnt/.ix-apps/app_mounts/nextcloudmain/html

Volume 1/ix-apps/app_mounts/nextcloudmain/postgres_data 49.5M 115G 49.5M /mnt/.ix-apps/app_mounts/nextcloudmain/postgres_data

Volume 1/ix-apps/app_mounts/nginx-proxy-manager 84.0M 115G 128K /mnt/.ix-apps/app_mounts/nginx-proxy-manager

Volume 1/ix-apps/app_mounts/nginx-proxy-manager@1.0.27 0B - 128K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager@1.1.1 0B - 128K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager@1.1.2 0B - 128K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager@1.1.4 0B - 128K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager@1.1.6 0B - 128K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager@1.1.7 0B - 128K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager@1.1.9 0B - 128K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/certs 858K 115G 155K /mnt/.ix-apps/app_mounts/nginx-proxy-manager/certs

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/certs@1.0.27 95.9K - 155K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/certs@1.1.1 95.9K - 155K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/certs@1.1.2 95.9K - 155K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/certs@1.1.4 95.9K - 155K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/certs@1.1.6 107K - 155K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/certs@1.1.7 95.9K - 155K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/certs@1.1.9 95.9K - 155K -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/data 83.0M 115G 37.8M /mnt/.ix-apps/app_mounts/nginx-proxy-manager/data

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/data@1.0.27 314K - 1.14M -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/data@1.1.1 357K - 6.34M -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/data@1.1.2 1.93M - 7.92M -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/data@1.1.4 1.38M - 6.07M -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/data@1.1.6 9.42M - 14.3M -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/data@1.1.7 15.0M - 27.6M -

Volume 1/ix-apps/app_mounts/nginx-proxy-manager/data@1.1.9 4.64M - 25.5M -

Volume 1/ix-apps/app_mounts/nr2 266K 115G 128K /mnt/.ix-apps/app_mounts/nr2

Volume 1/ix-apps/app_mounts/nr2/mariadb_data 139K 115G 139K /mnt/.ix-apps/app_mounts/nr2/mariadb_data

Volume 1/ix-apps/app_mounts/plex 1.80G 115G 139K /mnt/.ix-apps/app_mounts/plex

Volume 1/ix-apps/app_mounts/plex@1.1.17 0B - 139K -

Volume 1/ix-apps/app_mounts/plex@1.1.18 0B - 139K -

Volume 1/ix-apps/app_mounts/plex/config 1.80G 115G 1.06G /mnt/.ix-apps/app_mounts/plex/config

Volume 1/ix-apps/app_mounts/plex/config@1.1.17 439M - 683M -

Volume 1/ix-apps/app_mounts/plex/config@1.1.18 311M - 903M -

Volume 1/ix-apps/app_mounts/plex/data 128K 115G 128K /mnt/.ix-apps/app_mounts/plex/data

Volume 1/ix-apps/app_mounts/plex/data@1.1.17 0B - 128K -

Volume 1/ix-apps/app_mounts/plex/data@1.1.18 0B - 128K -

Volume 1/ix-apps/app_mounts/portainer 5.37M 115G 128K /mnt/.ix-apps/app_mounts/portainer

Volume 1/ix-apps/app_mounts/portainer@1.3.21 0B - 128K -

Volume 1/ix-apps/app_mounts/portainer/data 5.24M 115G 794K /mnt/.ix-apps/app_mounts/portainer/data

Volume 1/ix-apps/app_mounts/portainer/data@1.2.19 181K - 384K -

Volume 1/ix-apps/app_mounts/portainer/data@1.2.20 224K - 426K -

Volume 1/ix-apps/app_mounts/portainer/data@1.3.3 458K - 554K -

Volume 1/ix-apps/app_mounts/portainer/data@1.3.6 522K - 618K -

Volume 1/ix-apps/app_mounts/portainer/data@1.3.12 288K - 629K -

Volume 1/ix-apps/app_mounts/portainer/data@1.3.13 298K - 623K -

Volume 1/ix-apps/app_mounts/portainer/data@1.3.15 314K - 794K -

Volume 1/ix-apps/app_mounts/portainer/data@1.3.16 330K - 810K -

Volume 1/ix-apps/app_mounts/portainer/data@1.3.17 336K - 810K -

Volume 1/ix-apps/app_mounts/portainer/data@1.3.20 288K - 810K -

Volume 1/ix-apps/app_mounts/portainer/data@1.3.21 240K - 805K -

Volume 1/ix-apps/app_mounts/prometheus 256K 115G 128K /mnt/.ix-apps/app_mounts/prometheus

Volume 1/ix-apps/app_mounts/prometheus/config 128K 115G 128K /mnt/.ix-apps/app_mounts/prometheus/config

Volume 1/ix-apps/app_mounts/qbittorrent 56.3G 115G 139K /mnt/.ix-apps/app_mounts/qbittorrent

Volume 1/ix-apps/app_mounts/qbittorrent/config 13.1M 115G 13.1M /mnt/.ix-apps/app_mounts/qbittorrent/config

Volume 1/ix-apps/app_mounts/qbittorrent/downloads 56.3G 115G 56.3G /mnt/.ix-apps/app_mounts/qbittorrent/downloads

Volume 1/ix-apps/app_mounts/radarr 14.8M 115G 128K /mnt/.ix-apps/app_mounts/radarr

Volume 1/ix-apps/app_mounts/radarr/config 14.7M 115G 14.7M /mnt/.ix-apps/app_mounts/radarr/config

Volume 1/ix-apps/app_mounts/thehuhchain 115M 115G 139K /mnt/.ix-apps/app_mounts/thehuhchain

Volume 1/ix-apps/app_mounts/thehuhchain/data 91.9M 115G 91.9M /mnt/.ix-apps/app_mounts/thehuhchain/data

Volume 1/ix-apps/app_mounts/thehuhchain/mariadb_data 22.6M 115G 22.6M /mnt/.ix-apps/app_mounts/thehuhchain/mariadb_data

Volume 1/ix-apps/docker 44.5G 115G 44.5G /mnt/.ix-apps/docker

Volume 1/ix-apps/truenas_catalog 197M 115G 197M /mnt/.ix-apps/truenas_catalog

It seems like the Nextcloud data is using an absurd 3 TB, which doesn’t make sense because both users are using only 51 GB???
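To see which snapshots hold the most space in a listing like the one above, the rows can be ranked by their USED column. The following is a minimal Python sketch that works on pasted text; `to_bytes` and `snapshots_by_used` are hypothetical helper names, and the sample rows are copied from the output above. It splits each row from the right so that the space in "Volume 1" does not break the parsing. It assumes the last column contains no spaces, which holds for snapshot rows because their mountpoint is "-".

```python
# A few rows copied from the listing above; names may contain spaces ("Volume 1").
SAMPLE = """\
Volume 1/ix-apps/app_mounts/nextcloud/data 3.27T 115G 1.70G /mnt/.ix-apps/app_mounts/nextcloud/data
Volume 1/ix-apps/app_mounts/nextcloud/data@1.6.7 33.2M - 1.85T -
Volume 1/ix-apps/app_mounts/nextcloud/data@1.6.9 74.1G - 2.30T -
Volume 1/ix-apps/app_mounts/nextcloud/data@1.6.12 946G - 3.15T -
Volume 1/ix-apps/app_mounts/plex/config@1.1.17 439M - 683M -
"""

UNITS = {"B": 1, "K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}

def to_bytes(text):
    """Convert a human-readable size such as '74.1G' or '0B' to bytes."""
    return float(text[:-1]) * UNITS[text[-1]]

def snapshots_by_used(listing):
    """Return (bytes, used, name) tuples for snapshot rows, largest first."""
    rows = []
    for line in listing.splitlines():
        if not line.strip():
            continue
        # Split from the right so spaces inside the dataset name survive.
        name, used, _avail, _refer, _mountpoint = line.rsplit(None, 4)
        if "@" in name:  # only snapshots have an @ in their name
            rows.append((to_bytes(used), used, name))
    return sorted(rows, reverse=True)

for _size, used, name in snapshots_by_used(SAMPLE):
    print(f"{used:>6}  {name}")
```

On a live system, something like zfs list -t snapshot -o name,used -s used should give a similar ranking directly, without any parsing.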

Mind you that Nextcloud doesn’t exist. Only Nextcloudmain exists.

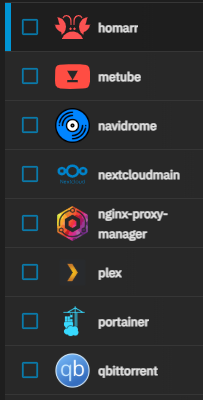

Here’s the full list of my apps, plus a WordPress server with a questionable name.

You got the output posted in CODE tags. It helps a lot when reading pre-formatted text.

Yes, this is one of the causes of space usage. And yes, the parent is taking up a lot of space.

As for why, I don’t know the NextCloud app, but snapshots work by preserving old data. So if you overwrite a file that was written into the parent dataset via the NextCloud app, the space used by the snapshot’s version of the file is not released. The snapshot therefore takes up more space.

As for the parent’s 3 TB of space, I can’t say, since I don’t know the NextCloud app…
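The way a snapshot keeps holding space after files are overwritten or deleted can be illustrated with a small toy model. This is only a sketch under simplifying assumptions (one block per file, hypothetical class and method names); it is not a description of ZFS internals.

```python
class ToyDataset:
    """Toy model only: a dataset maps file -> block ID; snapshots freeze that mapping."""

    def __init__(self):
        self.live = {}
        self.snapshots = {}
        self.next_id = 0

    def write(self, name):
        # Writing (or overwriting) a file always lands in a new block.
        self.next_id += 1
        self.live[name] = self.next_id

    def delete(self, name):
        del self.live[name]

    def snapshot(self, label):
        self.snapshots[label] = dict(self.live)

    def destroy_snapshot(self, label):
        del self.snapshots[label]

    def blocks_on_disk(self):
        # A block stays allocated while the live dataset or any snapshot references it.
        held = set(self.live.values())
        for frozen in self.snapshots.values():
            held.update(frozen.values())
        return len(held)

ds = ToyDataset()
for name in ("a.jpg", "b.jpg", "c.jpg"):
    ds.write(name)
ds.snapshot("1.6.12")
ds.delete("a.jpg")   # deleted from the live dataset, still held by the snapshot
ds.write("b.jpg")    # overwritten, the old version is still held by the snapshot
print(ds.blocks_on_disk())
ds.destroy_snapshot("1.6.12")
print(ds.blocks_on_disk())
```

The live dataset ends up with two files, yet the space of four blocks stays allocated until the snapshot is destroyed. That would be consistent with a dataset whose REFER is small while its USED is very large.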

So, knowing that the space is mainly being taken up by old snapshots, how can I remove them? 3 TB is an absurd amount.

I found a potential solution for deleting all snapshots of Volume\ 1.

Is it okay to use it, though? (I don’t mind deleting all the docker snapshots.)

sudo zfs destroy -nvr tank/.system@% (dry run: shows what it would delete)

sudo zfs destroy -vr tank/.system@% (actually deletes it)
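Since -n is the dry-run flag, the command without it is the one that really destroys snapshots. One hedged way to keep the two apart, and to avoid quoting mistakes with a dataset name that contains a space, is to build the argument list in a small helper that defaults to the dry run. This is only a sketch: `destroy_snapshots_cmd` is a hypothetical name, and the dataset path is just an example taken from the listing earlier in the thread.

```python
import shlex

def destroy_snapshots_cmd(dataset, dry_run=True):
    """Build an argv list for destroying all snapshots under a dataset (hypothetical helper)."""
    # -n: dry run, -v: verbose, -r: recurse into child datasets
    flags = "-nvr" if dry_run else "-vr"
    # "@%" selects every snapshot of the dataset.
    return ["zfs", "destroy", flags, f"{dataset}@%"]

preview = destroy_snapshots_cmd("Volume 1/ix-apps/app_mounts/nextcloud")
# shlex.join shows how the command would have to be quoted in a shell.
print(shlex.join(preview))
```

To actually run it as root, the list could be passed to subprocess.run(..., check=True), first with the default dry run and only then with dry_run=False.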

The GUI has the means to delete snapshots - why not use that?

My bad. I’ll delete the Snapshots and see what happens.

It seems like there are still some bclones left. Just as info, I’ve never intentionally made a bclone, so I’m planning to get rid of all of them.

How could I find the rest?

It’s the other way around.

Removing files (that share data blocks because of block-cloning) will not free up any space as long as another file still references those blocks.

The GUI dashboard does not show the pool’s actual available space or used capacity. For some reason, TrueNAS (since forever?) decided to display the root dataset’s properties on the pool’s dashboard, rather than the pool’s property itself.

Datasets are unaware of cloned blocks or deduplication that are shared across a pool. This is why querying the root dataset yields incorrect information about the pool’s capacity. It’s adding up the “used” space of its children and cloned blocks, rather than reporting the pool’s actual capacity.

A child dataset suffers the same “problem”. If you copy a large file multiple times, it will think its filesystem is “filling up”, when really no additional space is being consumed. Only the pool is aware of its own true capacity, both “used” and “free”.

Would you rather have a 10-GiB movie file consume 30 GiB of space over three “copies”?

Would you rather have a 10-GiB movie file consume 10 GiB of space over three “clones”?

You would rather not save 20 GiB of space?
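The arithmetic behind the movie example can be written out as a short sketch. It is a simplified illustration with a hypothetical function name; it assumes the datasets count every clone at full size while the pool stores the blocks only once.

```python
GIB = 1024**3

def clone_accounting(file_size, copies):
    """Toy arithmetic for one file that exists `copies` times as block clones."""
    referenced = file_size * copies  # what the datasets add up
    stored = file_size               # what the pool actually holds
    saved = referenced - stored      # space that was never written
    return referenced, stored, saved, referenced / stored

referenced, stored, saved, ratio = clone_accounting(10 * GIB, 3)
print(f"datasets see {referenced // GIB} GiB, pool stores {stored // GIB} GiB, "
      f"saved {saved // GIB} GiB, ratio {ratio:.2f}x")
```

This is the same gap that shows up between the root dataset's numbers on the dashboard and the pool's own figures.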

Block Clones are your friend. They don’t take up space, but save it. Block cloned files are totally different from ZFS Snapshots.

If you think you want to get rid of all block clones, I don’t know of a method to find them. Perhaps someone else will know.

As for disabling block cloning, you can disable the pool feature:

zpool set feature@block_cloning=disabled POOL_NAME

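Not sure if this will work when a pool already has block cloned files. As far as I know, OpenZFS does not let you switch a pool feature back to disabled once it has been enabled, so the command may simply be rejected.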

You can temporarily disable it with the ZFS module parameter:

echo 0 > /sys/module/zfs/parameters/zfs_bclone_enabled

This applies to the entire system, not a specific pool.

You would need to make it a Pre-Init script for it to apply after every reboot.
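As a sketch of what such a Pre-Init script could do, the following Python snippet writes the value to the module parameter named above and reads it back. The function names are hypothetical, writing requires root, and whether a Python script is a convenient Pre-Init format on a given TrueNAS version is an assumption; the one-line echo does the same job.

```python
from pathlib import Path

# Module parameter path taken from the command above.
PARAM = Path("/sys/module/zfs/parameters/zfs_bclone_enabled")

def set_bclone_enabled(enabled, param=PARAM):
    """Write 1 or 0 to the module parameter; needs root on a real system."""
    param.write_text("1" if enabled else "0")

def bclone_enabled(param=PARAM):
    """Return True if the parameter currently reads 1."""
    return param.read_text().strip() == "1"

# Only report the current state when run directly on a system that has the parameter.
if __name__ == "__main__" and PARAM.exists():
    print("block cloning enabled:", bclone_enabled())
```

Calling set_bclone_enabled(False) from a script that runs at boot would mirror the echo 0 command; the setting is system-wide and does not persist across reboots by itself.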

I just don’t see the reason to disable block-cloning if you want to save as much space as possible.

Thanks for the help @winnielinnie and @Arwen. It seems like I won’t keep it disabled, but I’m also pretty sure I’ll never use block cloning, since I usually move files and delete them from the original location. It’s not like I have infinite storage. As for the block clones, it seems I don’t need to find any more, since the snapshots were the only ones left.

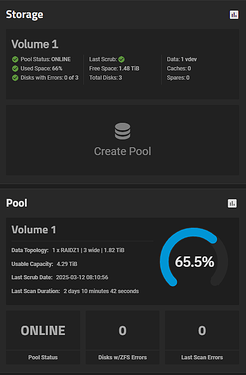

And it’s a huge difference.

(The summed-up solution, since I never found any forum posts about this online):

@Arwen

(Explanation and How to view Snapshots)

BCLONE, or block cloning, is a fairly new feature that is used when copying data. It actually saves space. Technology-wise, it is similar to hard links.

Any time you copy a file while block cloning is enabled and available, the data blocks are not actually copied. ZFS just creates a new file entry in the new location that points at the same blocks. Any change made through either entry is written to new blocks, so the changed parts become unique and are no longer part of the block clone series.

So, block cloning is GOOD and works like copy-on-write hard links.

Now, what might be eating up your space are ZFS snapshots. Any change to original files that are captured in a snapshot takes up additional space, because the snapshot keeps the old data. Snapshots are static; they never change until you destroy them.

To see all of the snapshots you have on your volume (Volume\ 1), use

zfs list -t all -r Volume\ 1

@NugentS

(How can I delete all or some snapshots?)

To delete snapshots, you can use the GUI under the Dataset tab. Just select the parent dataset and click on Manage Snapshots.

@winnielinnie

(Why does this happen?)

Datasets are unaware of cloned blocks or deduplication that are shared across a pool. This is why querying the root dataset yields incorrect information about the pool’s capacity. It’s adding up the “used” space of its children and cloned blocks, rather than reporting the pool’s actual capacity.

@winnielinnie @Arwen

(How to disable block cloning)

To disable block cloning, you can try disabling the pool feature (this may not work on a pool that already has block cloned files):

zpool set feature@block_cloning=disabled POOL_NAME

or temporarily disable it for the entire system, not just one pool:

echo 0 > /sys/module/zfs/parameters/zfs_bclone_enabled

To keep it disabled after every reboot, you will have to make that command a Pre-Init script.