ifconfig:

br-74b6ae837ae9: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.16.1.1 netmask 255.255.255.0 broadcast 172.16.1.255

inet6 fe80::42:14ff:fed8:6179 prefixlen 64 scopeid 0x20

inet6 fdd0:0:0:1::1 prefixlen 64 scopeid 0x0

ether 02:42:14:d8:61:79 txqueuelen 0 (Ethernet)

RX packets 698 bytes 183464 (179.1 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 653 bytes 227539 (222.2 KiB)

TX errors 0 dropped 2 overruns 0 carrier 0 collisions 0

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.16.0.1 netmask 255.255.255.0 broadcast 172.16.0.255

inet6 fdd0::1 prefixlen 64 scopeid 0x0

ether 02:42:8c:ef:42:59 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 24 overruns 0 carrier 0 collisions 0

enp6s0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.4.75 netmask 255.255.252.0 broadcast 192.168.7.255

inet6 fe80::dabb:c1ff:fe66:b491 prefixlen 64 scopeid 0x20

inet6 fd8a:aec5:80a3:1:dabb:c1ff:fe66:b491 prefixlen 64 scopeid 0x0

ether d8:bb:c1:66:b4:91 txqueuelen 1000 (Ethernet)

RX packets 5932 bytes 4956878 (4.7 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3623 bytes 1806541 (1.7 MiB)

TX errors 0 dropped 1 overruns 0 carrier 0 collisions 0

device memory 0x82100000-821fffff

incusbr0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.125.143.1 netmask 255.255.255.0 broadcast 0.0.0.0

inet6 fe80::216:3eff:fee0:4594 prefixlen 64 scopeid 0x20

inet6 fd42:ca0e:dea:e3ab::1 prefixlen 64 scopeid 0x0

ether 00:16:3e:e0:45:94 txqueuelen 1000 (Ethernet)

RX packets 834 bytes 197915 (193.2 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2179 bytes 2787889 (2.6 MiB)

TX errors 0 dropped 10 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10

loop txqueuelen 1000 (Local Loopback)

RX packets 2960 bytes 2727113 (2.6 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2960 bytes 2727113 (2.6 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

tapef52c2d1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

ether 96:20:a3:39:e1:7e txqueuelen 1000 (Ethernet)

RX packets 834 bytes 209591 (204.6 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2178 bytes 2787659 (2.6 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

vethb981618: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::c448:8cff:feaf:d349 prefixlen 64 scopeid 0x20

ether c6:48:8c:af:d3:49 txqueuelen 0 (Ethernet)

RX packets 698 bytes 193236 (188.7 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 666 bytes 228705 (223.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ip link:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: enp6s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether d8:bb:c1:66:b4:91 brd ff:ff:ff:ff:ff:ff

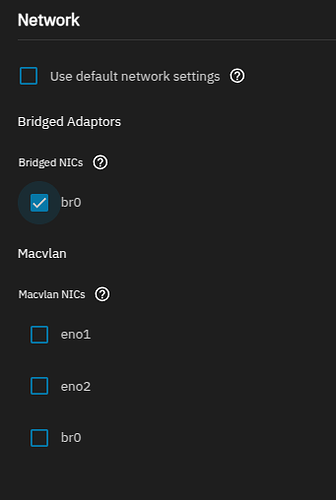

3: incusbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:16:3e:e0:45:94 brd ff:ff:ff:ff:ff:ff

5: br-74b6ae837ae9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:14:d8:61:79 brd ff:ff:ff:ff:ff:ff

6: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:8c:ef:42:59 brd ff:ff:ff:ff:ff:ff

8: vethb981618@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-74b6ae837ae9 state UP mode DEFAULT group default

link/ether c6:48:8c:af:d3:49 brd ff:ff:ff:ff:ff:ff link-netnsid 0

9: tapef52c2d1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master incusbr0 state UP mode DEFAULT group default qlen 1000

link/ether 96:20:a3:39:e1:7e brd ff:ff:ff:ff:ff:ff

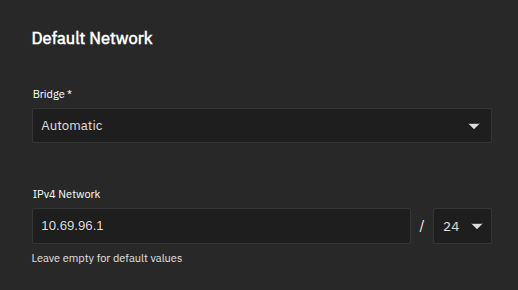

The LAN gateway is 192.168.4.1, and the VMs currently use the automatically generated default network, 10.125.143.1/24 (the address of incusbr0).
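To make the relationship between these subnets explicit, here is a minimal Python sketch using only the standard `ipaddress` module. The addresses and netmasks are copied from the ifconfig output above; the dictionary layout and the helper name `network_of` are just illustrative choices, not anything Incus or Docker provides.

```python
import ipaddress

# IPv4 address and netmask per interface, copied from the ifconfig output above.
interfaces = {
    "enp6s0": ("192.168.4.75", "255.255.252.0"),
    "incusbr0": ("10.125.143.1", "255.255.255.0"),
    "docker0": ("172.16.0.1", "255.255.255.0"),
    "br-74b6ae837ae9": ("172.16.1.1", "255.255.255.0"),
}


def network_of(address, netmask):
    """Return the IPv4 network that an address/netmask pair belongs to."""
    return ipaddress.ip_interface(f"{address}/{netmask}").network


networks = {name: network_of(*pair) for name, pair in interfaces.items()}

lan_gateway = ipaddress.ip_address("192.168.4.1")
lan = networks["enp6s0"]
vm_net = networks["incusbr0"]

# Show each interface's network and broadcast address.
for name, net in networks.items():
    print(f"{name}: {net} (broadcast {net.broadcast_address})")

# Is the gateway inside the LAN, and does the VM subnet collide with it?
print("gateway on LAN:", lan_gateway in lan)
print("VM subnet overlaps LAN:", vm_net.overlaps(lan))
```

With these values the host's LAN works out to 192.168.4.0/22, which contains the gateway, while the VM bridge sits on the separate 10.125.143.0/24 network. The VMs are therefore not directly on the LAN and depend on the host to route or NAT their traffic.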