Thanks. My guess is that what you’re doing simply isn’t possible with Scale 25.04. When I set up an instance, the default is a bridge. Once that’s set up, the only options for additional NICs are MACVLANs.

Thanks for the reply.

Have you just added apps to the ACL, or are you actually using the mapped container user to perform the actions?

I’m adding apps to the ACL via the TrueNAS web-application interface in the browser. In Instances > Configuration > Map User/Group IDs, both the apps user and the apps group are automatically mapped.

This will be allowed in 25.04.1.

That’s great!

Ok. I’ve finally had enough time to sit down and dig through the documentation. I believe what you’re describing is explained at the link which @djp-ix posted.

I’m still not clear on this. Are you performing actions and trying to access data in the container as the idmapped user (apps/568) or are you doing it as root or some other user? It needs to be the mapped container user sending permission requests to the corresponding user on the host.
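For anyone following along who is unfamiliar with ID mapping, here is a minimal Python sketch of how a Linux user-namespace map translates a container UID into a host UID. The entries follow the kernel's `/proc/<pid>/uid_map` layout (first ID inside the namespace, first ID outside, range length). Apart from 568, which is the apps UID mentioned above, every number in `EXAMPLE_UID_MAP` is a made-up illustration and not a value read from a TrueNAS system.

```python
from typing import List, Optional, Tuple

# Each entry: (first ID inside the container, first ID on the host, range length).
# Hypothetical values, chosen only to illustrate the lookup.
EXAMPLE_UID_MAP: List[Tuple[int, int, int]] = [
    (0, 2147000001, 568),      # container root and low IDs land in an unprivileged host range
    (568, 568, 1),             # apps is passed straight through to host UID 568
    (569, 2147000570, 64967),  # all remaining IDs are shifted as well
]


def container_to_host(uid: int,
                      uid_map: List[Tuple[int, int, int]] = EXAMPLE_UID_MAP) -> Optional[int]:
    """Return the host UID for a container UID, or None if the UID is unmapped."""
    for inside, outside, count in uid_map:
        if inside <= uid < inside + count:
            return outside + (uid - inside)
    return None


if __name__ == "__main__":
    for name, uid in (("root", 0), ("apps", 568)):
        print(f"container {name} ({uid}) -> host {container_to_host(uid)}")
```

Under a map like this, a process running as root inside the container reaches the host as a high, unprivileged UID, so an ACL entry for apps does not apply to it. Only a process running as apps (568) in the container is seen as apps on the host.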

Well, I’ve installed the Roon server using the installer. Installed this way, the server runs as root, so root would need access to the disk. I haven’t tried accessing the disk as the apps user, but I will. If that works, I’ll install the Roon server manually as I did under Core. Installed that way, Roon runs as user roon, if I remember correctly. I’ll then create a roon user on the TrueNAS host with matching IDs so the two can be mapped. The other option I’m exploring is using a bridge, as suggested by @Emo, so that I can simply mount the SMB share. I haven’t got that to work yet. It might have something to do with my router. I’ll be able to troubleshoot more this weekend.

In 25.04.1 you’ll be able to apply permissions on a dataset to a new built-in user, truenas_container_unpriv_root, and then access that dataset as root from Instances.

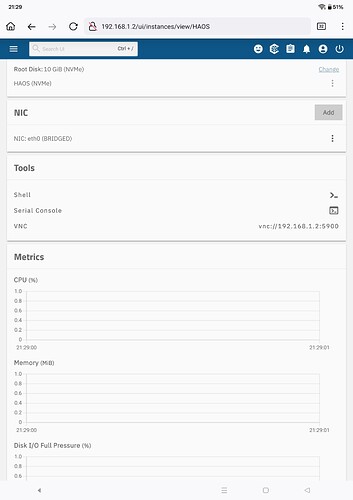

That’s what I did with 24.10 and Home Assistant in a VM. I had a bridge, which was assigned to the VM.

For some reason, when migrating I thought that macvlan was the better option. I was afraid a bridge would use the host IP and only a port for the VM, rather than a separate MAC that works with DHCP in the router.

Stopped the VM. Deleted the macvlan, created a bridge. Everything works now.

I just find it sad that the IP of the VM is not shown in the GUI.

I finally had some time to get the bridge configured with my containers using it. The instructions in “Setting Up a Network Bridge” on the TrueNAS Documentation Hub were not helpful. At https://www.youtube.com/watch?v=uPkoeWUfiHU, Capt Stux cuts through all of the clutter and demonstrates how to configure the bridge correctly and easily. One thing that’s a bit frustrating when using a bridge, however, is that the <hostname>.<domain> set in the general network settings is no longer reachable. One has to navigate to the TrueNAS machine and to individual containers using their IP addresses.

After having set up the bridge, I’ve discovered that it isn’t actually the solution to my problem. I can now SSH out to the TrueNAS host from containers, which is fine. However, my initial SSH attempts were only meant to troubleshoot mounting SMB or NFS shares, which is still not possible. I suppose that this is a privilege issue: SSH out to the host is allowed in unprivileged containers once a bridge has been set up, but mounting host shares is still prohibited. The actual solution to my problem, as others have suggested here, is to add a “disk” to the container.

I’ve been able to get the permissions correctly configured and mapped on a dataset that is also configured as an SMB share. When that dataset is added as a “disk” to the container running Roon, users within the container can now both read from and write to it. The ability to write to the “disk” is important, as it’s best to store the automated Roon backups outside of the container and on a different pool.
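A quick way to confirm this from inside the container is a small probe run as the user in question. The following is only a sketch: it lists the directory and creates a temporary file that is removed again straight away. The path `/mnt/music` is a hypothetical mount point for the added “disk,” so substitute your own.

```python
import os
import tempfile


def can_read_and_write(path: str) -> bool:
    """Return True if the current user can list `path` and create a file in it."""
    try:
        os.listdir(path)  # read check: raises OSError if listing is denied
        # Write check: the temporary file is deleted when the block exits.
        with tempfile.NamedTemporaryFile(dir=path) as handle:
            handle.write(b"probe")
            handle.flush()
        return True
    except OSError:
        return False


if __name__ == "__main__":
    # Hypothetical mount point of the added "disk" inside the container.
    print(can_read_and_write("/mnt/music"))
```

Running it once as root and once as the mapped user shows which of the two the host permissions actually cover.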

Added “disks” are going to do me just fine, especially now that TrueNAS Scale 25.04.1 makes it possible to add the same dataset as a “disk” to multiple containers. Thanks, @DjP-iX, for this information. I just upgraded this morning and can confirm that it works.

Thanks to everyone who’s provided feedback and support.

Did you try to ping it with <hostname>.local? It should work, at least for the TrueNAS host itself.

I use bridges, and I can access <hostname>.<domain>. However, my domain isn’t “local”, so this may not apply to your setup. Nevertheless, what does `hostname -d` show on your TrueNAS host and in your container? Is it the same as your “local domain” in both places?
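If it’s easier to run a script on both machines, here is a rough Python equivalent of that check. It is a sketch that only approximates `hostname -d`: it takes whatever `socket.getfqdn()` reports and keeps the part after the first dot, so the result can differ from the command’s output depending on how name resolution is configured. The helper name `domain_of` is made up for this example.

```python
import socket


def domain_of(fqdn: str) -> str:
    """Return everything after the first dot, or '' if the name has no dot."""
    _, _, domain = fqdn.partition(".")
    return domain


if __name__ == "__main__":
    fqdn = socket.getfqdn()
    print(f"fqdn={fqdn!r} domain={domain_of(fqdn)!r}")
```

Run it on the TrueNAS host and in the container. If the two domains differ, or one is empty, that would be a first place to look.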

Would be interested in hearing what you found unhelpful about the official doc compared to Stux’s presentation.

It’s now possible to navigate to <hostname>.<domain> set under Network > General. I’m not sure what was happening as I was using the same static IP as I had been before setting the bridge. Maybe the MAC address difference confused the router. It’s a mystery at this point.

Apologies for the late reply. I’m not exactly sure why the official documentation did not help. I was sure that I had followed the instructions, and I tried several times, but I was never able to get the bridge to work. It could be that I was doing something that wasn’t explicitly stated as a “do not do.” In Capt Stux’s video, if I remember correctly, the steps starting at 4:00 are key. He adds the static IP address to the NIC, then tests and saves. He then removes the static IP address but does not test and save before creating the bridge, during which he adds the interface to the bridge. Only then does he save.

My original problem has now been solved by the ability to add a dataset as a “disk” to multiple LXC instances, so I don’t actually need to mount SMB shares. Given that, are there any advantages to the bridge setup other than being able to SSH out to the TrueNAS host, which I don’t really need?