Sorry for what is probably a stupid question. I am a beginner with TrueNAS, but I am learning hard. I will write out all the commands I executed; maybe it will be useful to someone in a similar situation.

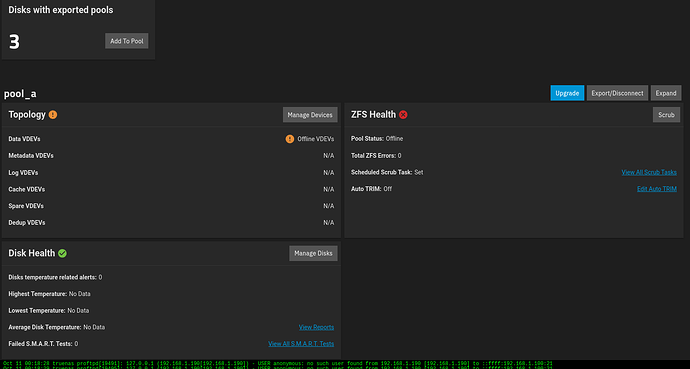

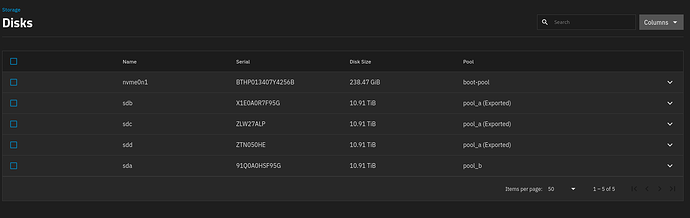

After a normal restart, my TrueNAS ElectricEel-24.10-RC.2 lost one pool called pool_a, and all three of its disks now show the status "pool_a (exported)". I checked the disks themselves and they seem to be OK:

lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sda 8:0 0 10.9T 0 disk

├─sda1 8:1 0 2G 0 part

└─sda2 8:2 0 10.9T 0 part

sdb 8:16 0 10.9T 0 disk

├─sdb1 8:17 0 2G 0 part

└─sdb2 8:18 0 10.9T 0 part

sdc 8:32 0 10.9T 0 disk

├─sdc1 8:33 0 2G 0 part

└─sdc2 8:34 0 10.9T 0 part

sdd 8:48 1 7.2G 0 disk

nvme0n1 259:0 0 238.5G 0 disk

├─nvme0n1p1 259:1 0 1M 0 part

├─nvme0n1p2 259:2 0 512M 0 part

├─nvme0n1p3 259:3 0 222G 0 part

└─nvme0n1p4 259:4 0 16G 0 part
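
lsblk only shows that the kernel sees the disks and their partitions; it says nothing about their health. In case it is useful to someone, here is a minimal sketch that asks each data disk for its SMART health summary. The device names sda, sdb, and sdc are taken from the output above. The `run` helper and the `DRY_RUN` variable are names made up for this example, and by default the script only prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: query SMART health for the three pool disks listed by lsblk.
# DISKS comes from the lsblk output in this post; adjust for other systems.
DISKS="sda sdb sdc"

# Hypothetical helper: print the command when DRY_RUN=1 (the default),
# otherwise execute it. smartctl usually needs root.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

for d in $DISKS; do
    # -H prints the overall health self-assessment,
    # -A prints the vendor attribute table (reallocated sectors and so on).
    run smartctl -H -A "/dev/$d"
done
```

Running it with `DRY_RUN=0` as root would execute the smartctl commands for real.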

I also checked the partition tables. All three disks look like the one below, which I hope is fine:

sudo fdisk -l

Disk /dev/sda: 10.91 TiB, 12000138625024 bytes, 23437770752 sectors

Disk model: ST12000NE0008-2P

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: 3BDF6087-7854-446B-84E8-9253EC5B7381

Device Start End Sectors Size Type

/dev/sda1 128 4194304 4194177 2G Linux swap

/dev/sda2 4194432 23437770718 23433576287 10.9T Solaris /usr & Apple ZFS

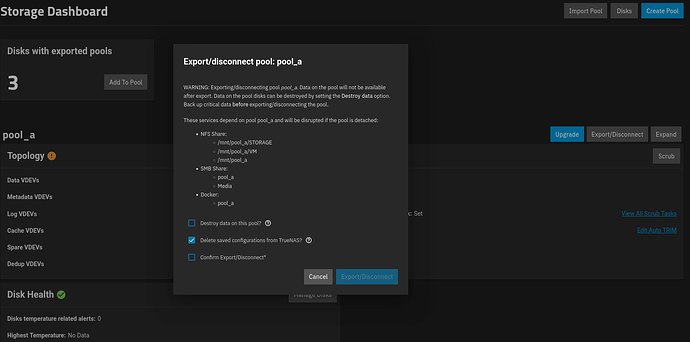

I tried to import the pool but I got an error message:

sudo zpool import pool_a

cannot import 'pool_a': insufficient replicas

Destroy and re-create the pool from

a backup source.

After that I imported the pool in read-only mode. The pool and its vdevs showed up in the web UI, but the pool itself was not mounted under /mnt:

sudo zpool import -o readonly=on pool_a

cannot mount '/pool_a': failed to create mountpoint: Read-only file system

Import was successful, but unable to mount some datasets
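
The mount error suggests that the pool was imported without an alternate root, so ZFS tried to create /pool_a directly on the root file system, which is read-only here. A minimal sketch of a read-only import that mounts under /mnt instead, assuming the pool is exported again first. `run` and `DRY_RUN` are helper names invented for this example, and the default is to only print the commands rather than run them.

```shell
#!/bin/sh
# Sketch: re-import pool_a read-only with an alternate root of /mnt.
# POOL and ALTROOT are taken from this post; change them for other setups.
POOL="pool_a"
ALTROOT="/mnt"

# Hypothetical helper: print the command when DRY_RUN=1 (the default),
# otherwise execute it. The zpool commands need root.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# The pool is currently imported without an altroot, so export it first.
run zpool export "$POOL"

# -o readonly=on keeps the import read-only;
# -R sets the altroot, so mountpoints are created below /mnt
# (for example /mnt/pool_a instead of /pool_a).
run zpool import -o readonly=on -R "$ALTROOT" "$POOL"
```

With `DRY_RUN=0` the two commands are executed instead of printed. Whether the datasets then mount cleanly still depends on the state of the pool.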

After that I queried the status again and got a list of errors:

sudo zpool status -v

pool: pool_a

state: ONLINE

status: One or more devices has experienced an error resulting in data

corruption. Applications may be affected.

action: Restore the file in question if possible. Otherwise restore the

entire pool from backup.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-8A

scan: resilvered 156M in 00:01:28 with 592 errors on Mon Oct 7 17:55:18 2024

config:

NAME STATE READ WRITE CKSUM

pool_a ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

2fc128e1-b472-42ea-8a56-715eb5305916 ONLINE 0 0 0

39d99900-6aa3-4c05-862e-73f24438a182 ONLINE 0 0 8

d370c168-7b3b-4c12-9421-af4dd222fc09 ONLINE 0 0 8

errors: Permanent errors have been detected in the following files:

<metadata>:<0x0>

<metadata>:<0x475>

<metadata>:<0x38e>

pool_a/reolink:/CAM/outdoor/2024/10/07/RLC-833A_00_20241007174308.mp4

pool_a/VM/HAOS:<0x1>
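
To make the device table easier to read, here is a small sketch that filters `zpool status` text down to the devices with non-zero error counters. `devices_with_errors` is a made-up helper name, and the filter assumes the counters are plain integers (abbreviated values such as 1.2K would be skipped). The sample input is copied from the status output above.

```shell
#!/bin/sh
# Sketch: list pool members whose READ/WRITE/CKSUM counters are non-zero.
# Reads `zpool status` text on stdin. Assumption: counters are plain
# integers; abbreviated values such as 1.2K are ignored by this filter.
devices_with_errors() {
    awk 'NF >= 5 && $3 ~ /^[0-9]+$/ && $4 ~ /^[0-9]+$/ && $5 ~ /^[0-9]+$/ &&
         ($3 + $4 + $5) > 0 {
             print $1, "read=" $3, "write=" $4, "cksum=" $5
         }'
}

# Demo with the config table from this post.
devices_with_errors <<'EOF'
NAME                                      STATE   READ WRITE CKSUM
pool_a                                    ONLINE     0     0     0
  raidz1-0                                ONLINE     0     0     0
    2fc128e1-b472-42ea-8a56-715eb5305916  ONLINE     0     0     0
    39d99900-6aa3-4c05-862e-73f24438a182  ONLINE     0     0     8
    d370c168-7b3b-4c12-9421-af4dd222fc09  ONLINE     0     0     8
EOF
```

For the sample above this prints the two members with cksum=8. On a live system the same function can be fed with `zpool status -v pool_a | devices_with_errors`.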

Unfortunately, I now don't know how to remove or repair this data, because the pool is not mounted. A scrub does nothing either. Is that because the pool is not mounted under /mnt?

Can I still fix this, or have I lost my only backup entirely?

The situation is very sad, because I have not made any replications or backups for a long time.