Inexperienced user here. Much hand holding required.

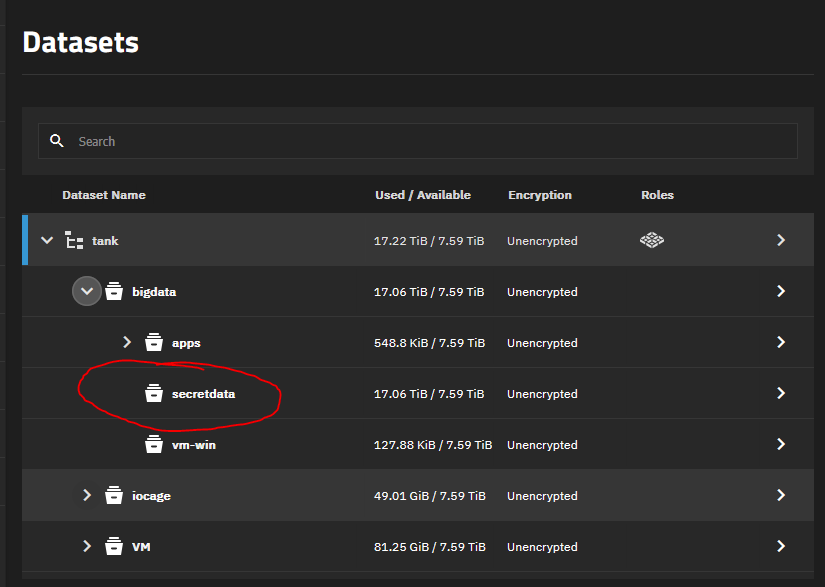

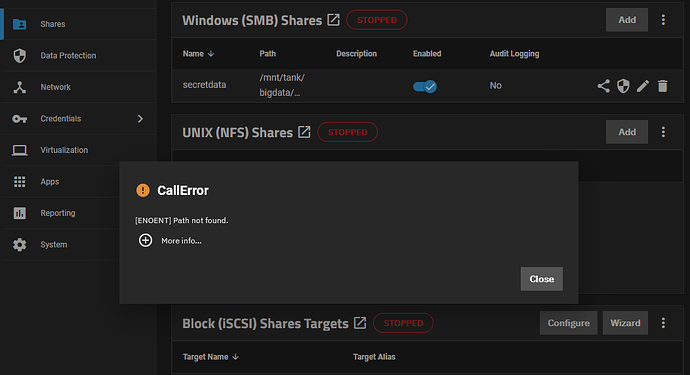

I recently migrated from CORE to SCALE (24.10.2) in order to extend my vdev with another drive. Once that was done, I started having issues with SMB hanging, so I decided to do a fresh install. When I try to import the pool from the web GUI, it gets stuck at 0% for hours. I then tried some google-fu to import it via the CLI, still with no success.

root@truenas[/home/truenas_admin]# zpool status -v

pool: boot-pool

state: ONLINE

config:

NAME STATE READ WRITE CKSUM

boot-pool ONLINE 0 0 0

sda3 ONLINE 0 0 0

errors: No known data errors

root@truenas[/home/truenas_admin]# zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

boot-pool 464G 2.63G 461G - - 0% 0% 1.00x ONLINE -

root@truenas[/home/truenas_admin]# zpool import

pool: tank

id: 9307007750265967810

state: ONLINE

status: The pool was last accessed by another system.

action: The pool can be imported using its name or numeric identifier and

the '-f' flag.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-EY

config:

tank ONLINE

raidz1-0 ONLINE

45c27aff-fc78-11ec-a7a0-bc5ff48ba3c8 ONLINE

45ab5b24-fc78-11ec-a7a0-bc5ff48ba3c8 ONLINE

cd7e41f9-4c94-441d-89b8-d45250d3150a ONLINE

d435051f-34bd-4606-8e44-1e2c58249fc1 ONLINE

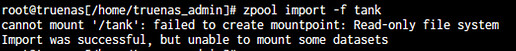

Running zpool import -f tank freezes my shell, and the pool still doesn't show up in the GUI. Running zpool import again afterwards shows:

root@truenas[/home/truenas_admin]# zpool import

no pools available to import

What should I try next?