Not true. Some SMR HDDs do indeed support TRIM; however, IMO it is of limited use for ZFS, and here’s why:

TRIM on SMR HDDs works in some ways similarly to, but also very differently from, TRIM on SSDs.

In both technologies the disk is divided into large areas that are written as a unit, and in both cases TRIM helps the firmware write more efficiently; once you get into the details, however, they work very differently indeed.

SSDs have cells containing many sectors and can read and write each sector individually; however, you can only write to an erased sector. When you want to overwrite a used sector, you need to:

- read the contents of the existing cell;

- change the sector you want to overwrite;

- write the modified contents to a completely erased cell;

- send the original cell to a background queue to be erased for reuse.

This need to rewrite an entire cell just to change one sector is called “write amplification”. To make this work, the firmware keeps a mapping from LBA ranges to physical cells, so that a cell’s data can move to a freshly erased cell.
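The read-modify-write cycle above can be sketched as a toy Python model. This is a minimal illustration, assuming a hypothetical `ToySSD` whose cells hold only four sectors (`SECTORS_PER_CELL` is made up; real cells are far larger) and where `None` stands for an erased sector. It is not how any real firmware is written, but it shows one logical overwrite costing a whole-cell write.

```python
from collections import deque

# Hypothetical toy value: real SSD cells (erase blocks) hold far more sectors.
SECTORS_PER_CELL = 4


class ToySSD:
    """Toy SSD: a sector can only be programmed while it is erased (None)."""

    def __init__(self, physical_cells):
        self.cells = [[None] * SECTORS_PER_CELL for _ in range(physical_cells)]
        self.free = deque(range(physical_cells))  # erased cells ready for writing
        self.erase_queue = deque()                # cells awaiting background erase
        self.mapping = {}                         # logical cell -> physical cell
        self.sectors_written = 0                  # physical sector programs

    def write_sector(self, lba, data):
        logical, offset = divmod(lba, SECTORS_PER_CELL)
        phys = self.mapping.get(logical)
        if phys is None:
            # First write to this logical cell: take a fresh erased cell.
            phys = self.free.popleft()
            self.mapping[logical] = phys
        if self.cells[phys][offset] is None:
            # Sector is still erased, so it can be written directly.
            self.cells[phys][offset] = data
            self.sectors_written += 1
            return
        # Overwrite: read the cell, change one sector, write it all elsewhere.
        contents = list(self.cells[phys])
        contents[offset] = data
        new = self.free.popleft()
        self.cells[new] = contents
        self.sectors_written += sum(s is not None for s in contents)
        self.mapping[logical] = new
        # The old copy goes to the background erase queue for reuse.
        self.erase_queue.append(phys)

    def read_sector(self, lba):
        logical, offset = divmod(lba, SECTORS_PER_CELL)
        phys = self.mapping.get(logical)
        return None if phys is None else self.cells[phys][offset]


ssd = ToySSD(physical_cells=8)
for lba in range(SECTORS_PER_CELL):
    ssd.write_sector(lba, f"v1-{lba}")  # fills logical cell 0: 4 sector writes
ssd.write_sector(2, "v2-2")             # one logical write, 4 more sector writes
print(ssd.sectors_written)              # 8
```

The design choice here (moving the cell through the LBA mapping rather than erasing in place) mirrors the mapping described in the text; the erase queue is never drained in this sketch.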

When you delete files, the TRIM command tells the SSD firmware that the relevant sectors are no longer in use, and the firmware can then:

- check whether every sector in a cell has been TRIMmed and, if so, send the cell for erasure;

- for partially empty cells, decide whether it is worth copying the non-TRIMmed sectors to an empty cell, so that the TRIMmed positions in that cell stay erased and ready to be written later, and the original cell can be erased.
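The two firmware decisions in the list above can be sketched as one background pass. This is a hypothetical illustration: `trim`, `background_pass`, the `TRIMMED` sentinel and the `relocate_below` threshold are invented for the example, and real firmware policies are proprietary and far more elaborate.

```python
from collections import deque

TRIMMED = object()  # sentinel: a sector the host has TRIMmed (stale data)


def trim(cell, offset):
    """Host TRIM: mark a written sector as no longer in use."""
    if cell[offset] is not None:
        cell[offset] = TRIMMED


def background_pass(cells, free, erase_queue, relocate_below=0.5):
    """One hypothetical firmware pass over the cells after TRIMs arrive.

    cells: list of cells, each a list of sectors (None = erased).
    relocate_below: hypothetical policy knob; a partially TRIMmed cell is
    relocated only if fewer than this fraction of its sectors are still live.
    Updating the LBA mapping after a relocation is left out for brevity.
    """
    skip = set(free) | set(erase_queue)  # snapshot: erased or already queued
    for phys, cell in enumerate(cells):
        if phys in skip:
            continue
        written = [s for s in cell if s is not None]
        live = [s for s in written if s is not TRIMMED]
        if written and not live:
            # Every written sector was TRIMmed: erase the whole cell.
            erase_queue.append(phys)
        elif live and len(live) / len(cell) < relocate_below and free:
            # Copy live sectors (same offsets) to an erased cell; TRIMmed
            # offsets stay erased there and can later be written directly.
            new = free.popleft()
            cells[new] = [None if s is TRIMMED else s for s in cell]
            erase_queue.append(phys)


cells = [["a", "b", "c", "d"], ["e", "f", "g", "h"], [None] * 4, [None] * 4]
free, erase_queue = deque([2, 3]), deque()
for off in range(4):
    trim(cells[0], off)  # cell 0 fully TRIMmed
for off in range(3):
    trim(cells[1], off)  # cell 1 has only "h" left live
background_pass(cells, free, erase_queue)
print(list(erase_queue), cells[2])  # [0, 1] [None, None, None, 'h']
```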

SMR HDDs are different: you can read sectors individually, but you can only write an entire cell. So to write a single sector into a cell that is in use, the drive must read the entire cell, change the sector and write the entire cell back again, much as an SSD does; but if the drive knows that the cell is completely empty, it can simply write to it without reading the existing contents, which is twice as fast.

SMR drives also have a CMR cache area where writes land first; the firmware then destages them in the background to the main SMR area. (If you are doing bulk writes of random blocks, you can fill the cache far faster than it can be destaged, and then everything slows down by a factor of, say, 100.)
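A back-of-the-envelope Python sketch of both effects described above. All figures (`BAND_MB`, `MEDIA_MB_S`, `CACHE_MB`, `DESTAGE_MB_S`) are hypothetical placeholders, not measurements of any real drive, and "band" here is the "cell" from the text. It shows the 2x cost of writing into a used band, and how long bulk writes can run before the CMR cache fills when writes arrive faster than they are destaged.

```python
# Hypothetical figures for illustration only, not measurements of any drive.
BAND_MB = 256      # size of one SMR band (the "cell" above)
MEDIA_MB_S = 200   # sequential media rate
CACHE_MB = 20_000  # CMR cache capacity
DESTAGE_MB_S = 30  # background destage rate to the SMR area


def band_write_seconds(band_mb, mb_per_s, band_empty):
    """Seconds to write one band: one pass if empty, read + write if not."""
    one_pass = band_mb / mb_per_s
    return one_pass if band_empty else 2 * one_pass


def seconds_until_cache_full(cache_mb, incoming_mb_s, destage_mb_s):
    """How long bulk writes can run before the CMR cache fills.

    Returns None when destaging keeps up, i.e. the cache never fills.
    """
    net_fill = incoming_mb_s - destage_mb_s
    return None if net_fill <= 0 else cache_mb / net_fill


print(band_write_seconds(BAND_MB, MEDIA_MB_S, band_empty=True))   # 1.28
print(band_write_seconds(BAND_MB, MEDIA_MB_S, band_empty=False))  # 2.56
print(seconds_until_cache_full(CACHE_MB, 150, DESTAGE_MB_S))
```

Once the cache is full, incoming writes can only proceed at roughly the destage rate, which is where the large slowdown comes from; this model does not try to reproduce the exact factor.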

Having thought about this, I consider it likely that, for SMR HDDs:

- TRIM is only effective when you have large contiguous empty areas of disk. This happens only when the disk is relatively new, or when you have created such areas by running a defrag. If you don’t defrag, it becomes increasingly likely that every cell has at least one sector in use, and then bulk write performance becomes universally poor; if you do defrag to create large areas of contiguous free space, bulk performance will be better. ZFS does not have any ability to defrag, so over time it is likely that every cell will have at least one used sector, TRIM will have no impact on performance, and bulk writes will slow to a crawl.

- The performance of SMR drives when resilvering redundant RAID1/5/6 arrays, ZFS mirrors or RAIDZ will depend heavily on whether the resilvering code TRIMs the entire drive before it starts writing, and on whether the writes are then done sequentially across the drive or randomly. Hardware RAID resilvers sequentially across the disk, so performance can still be reasonable. Now that ZFS resilvers mirrors in a similar way (to reduce seek times, not for SMR reasons), ZFS mirror resilvering on SMR drives with TRIM might complete in a reasonable time if TRIM is invoked first (though I haven’t seen any benchmarks or anecdotal evidence to support this). For ZFS RAIDZ resilvering the writes are done randomly, so resilver times on SMR drives (with or without TRIM) are usually dramatically longer.

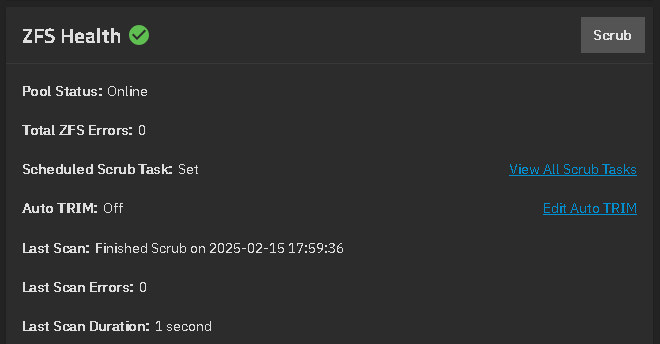
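The resilvering point can be illustrated with a toy Python cost model that counts full-band passes for the same set of writes issued sequentially versus in random order. This is a hypothetical sketch, not a model of ZFS or hardware RAID internals: `band_passes` assumes consecutive writes to the same band are coalesced into one band write, and that writing to a band already holding data costs an extra read pass. `trimmed_first=True` stands for the whole target drive having been TRIMmed before the resilver started.

```python
import random


def band_passes(write_order, sectors_per_band, trimmed_first=True):
    """Count full-band passes (reads + writes) to apply writes in this order.

    Hypothetical model: consecutive writes to the same band are coalesced into
    one band write; writing to a band that already holds data costs an extra
    read pass (read-modify-write).
    """
    has_data = set()  # bands written so far during this resilver
    passes = 0
    prev_band = None
    for lba in write_order:
        band = lba // sectors_per_band
        if band == prev_band:
            continue  # coalesced with the previous write to this band
        prev_band = band
        already_used = (not trimmed_first) or band in has_data
        passes += 2 if already_used else 1
        has_data.add(band)
    return passes


BANDS, SECTORS = 100, 64  # hypothetical, tiny drive
sequential = list(range(BANDS * SECTORS))
scattered = sequential[:]
random.Random(0).shuffle(scattered)

print(band_passes(sequential, SECTORS))                       # 100
print(band_passes(sequential, SECTORS, trimmed_first=False))  # 200
print(band_passes(scattered, SECTORS))                        # far more than 100
```

In this model, TRIM halves the cost of a sequential resilver, while random write order dominates the cost regardless of TRIM, which is consistent with the reasoning above.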

Finally, the zpool `autotrim` property simply issues a TRIM as and when ZFS returns blocks to the free pool, and only when the drive supports TRIM. So if the `zpool trim` command doesn’t recognise that the pool’s devices support TRIM, I doubt that autotrim will either.
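One way to check what ZFS itself thinks is `zpool status -t`, which reports per-device TRIM status. As I understand it, OpenZFS appends "(trim unsupported)" to devices that do not support TRIM; the Python sketch below assumes that wording and assumes the device name is the first field on each config line. The pool name `tank`, the helper names and the sample output are hypothetical.

```python
import subprocess


def trim_unsupported_devices(status_text):
    """Return device names that `zpool status -t` output marks as
    "(trim unsupported)" (assumed wording; name assumed in first column)."""
    return [line.split()[0] for line in status_text.splitlines()
            if "(trim unsupported)" in line]


def pool_trim_unsupported(pool):
    """Run `zpool status -t <pool>` and parse it (requires ZFS installed)."""
    result = subprocess.run(["zpool", "status", "-t", pool],
                            capture_output=True, text=True, check=True)
    return trim_unsupported_devices(result.stdout)


# Hypothetical sample output for a pool named "tank":
sample = """  pool: tank
 state: ONLINE
config:

\tNAME        STATE     READ WRITE CKSUM
\ttank        ONLINE       0     0     0
\t  mirror-0  ONLINE       0     0     0
\t    sda     ONLINE       0     0     0  (trim unsupported)
\t    sdb     ONLINE       0     0     0  (trim unsupported)

errors: No known data errors
"""
print(trim_unsupported_devices(sample))  # ['sda', 'sdb']
```

If every device in the pool shows up here, then by the reasoning above, setting `autotrim=on` is unlikely to do anything either.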

Since ZFS doesn’t work well with SMR drives, and the only types of HDD that support TRIM are SMR drives, I suspect that no attempt has been made to let ZFS detect TRIM support on HDDs.