Hi all,

Having some issues with checksum errors as I resilver my pool and would like some clarification to make sure I’m on the right path and not going to lose/corrupt data.

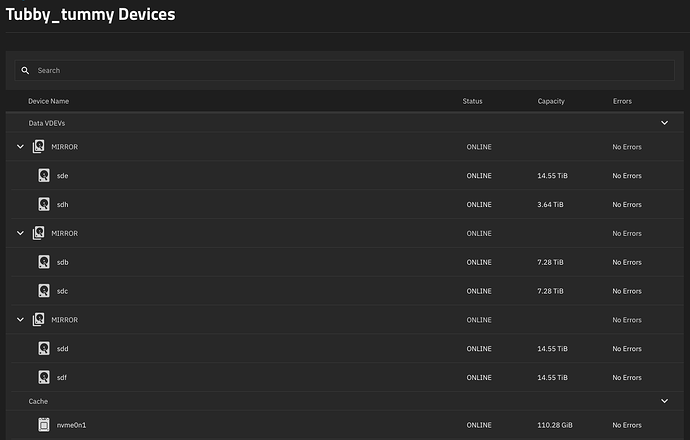

The situation: One of the vdevs in my pool is a 4-TB mirror, and I plan to swap both drives, one at a time, for 16-TB ones to expand the space on my pool. Full system information is at the end of this post. I have had checksum errors with this system before (without changing hardware) every few months, and usually a cable swap or reseat fixes it. I admit I haven’t been super rigorous about tracking this issue, but I just want to put it out there that this system has a history. All drives are <18 months old, so I think it’s the motherboard if anything, but that’s another story. The last of these errors was over 3 months ago, and the system has run continuously since then.

Sequence of events

- Ensure system is up to date for current release channel (Dragonfish-24.04.2.5) and that there are no pending alerts/errors

- “Offline” the 4-TB drive I intend to replace from the GUI storage menu

- Shut down system

- Physically remove the 4-TB drive and replace it with a 16-TB drive. I did this with minimal disruption to other system components, and the other 4-TB drive did not get touched at all.

- Boot system

- Replace the 4-TB drive with the 16-TB drive from the web GUI, which starts the resilvering process

- I notice the remaining 4-TB drive has two checksum errors. This worries me a little given the system’s history, but I decided to let the resilver complete

- Resilver finishes

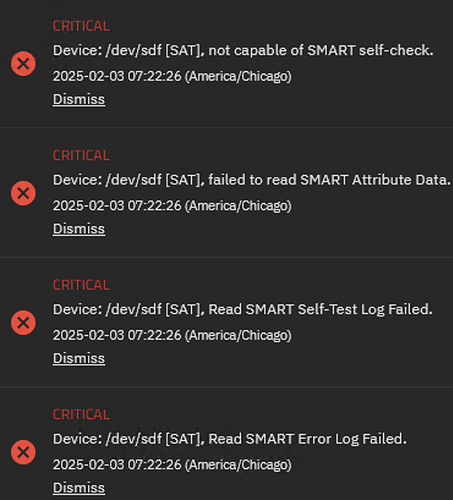

- The 4-TB reference drive and new 16-TB drive each have two checksum errors. I get an alert “One or more devices has experienced an error resulting in data corruption. Applications may be affected.”

- Considering how I have solved this problem in the past, I shut down the system, reseat the SATA cables for both drives in question, and boot

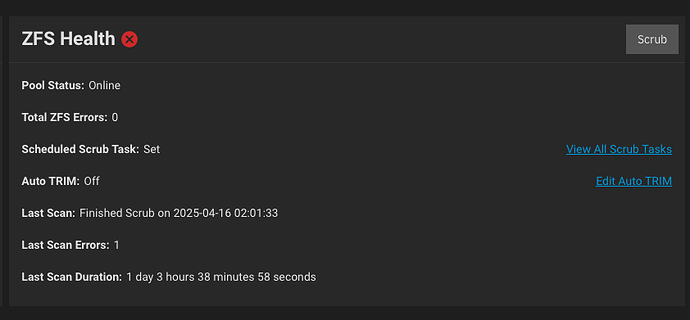

- The storage menu no longer shows checksum errors for either drive. However, the zpool status command (output below) shows that there is still an error

- I started a scrub right before writing this post, since I assumed that would be the next logical step.
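For anyone who prefers to read the steps above as commands, here is a rough sketch of what I understand the command-line equivalents to be. It only prints the commands (a dry run) rather than executing them. The two disk identifiers are placeholders, not real values from my system, and the TrueNAS GUI also partitions the new disk before replacing, which this sketch does not cover.

```shell
#!/bin/sh
# Placeholders: substitute the real pool name and device identifiers
# shown by `zpool status`. The two disk names below are hypothetical.
POOL="Tubby_Tummy"
OLD_DISK="old-4tb-partuuid"    # placeholder, not a real identifier
NEW_DISK="new-16tb-partuuid"   # placeholder, not a real identifier

# Print the commands instead of running them, so the plan can be reviewed.
print_replace_plan() {
    pool=$1; old=$2; new=$3
    echo "zpool offline $pool $old"
    echo "# power down, swap the physical drive, boot"
    echo "zpool replace $pool $old $new"
    echo "zpool status -v $pool"
    echo "zpool scrub $pool"
}

print_replace_plan "$POOL" "$OLD_DISK" "$NEW_DISK"
```

Printing instead of executing is deliberate: on TrueNAS the GUI is the supported path, so this is only meant to make the sequence easy to follow.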

I want to mention here that no data was written to the NAS during this process except for internal affairs (e.g. snapshots), and I still have the removed 4-TB drive and have not modified it. I also have a backup of the system but would really prefer to not rebuild a 28-TB pool over my 1-gig connection (yeah yeah I know get better networking).

My questions:

a) Considering the “reference” drive has not had its data changed, are the checksum errors an issue, assuming they don’t reappear during the scrub? My understanding is that checksum errors can be corrected by looking at another copy of the data (like on a parity drive), so while the parity is no longer online, the drive hasn’t had its data changed since it was running with its partner (when I shut the system down in step 3). I assume the “Permanent error” in the zpool status report is not going to correct itself after the scrub.

b) If the checksum errors do reappear on those drives, what do I do? I could try reseating cables, or can/should it be solved by reinstalling the other 4-TB drive so the two can reference each other, then retry the new drive installation/resilvering process? How would I go about telling ZFS that a drive it should already recognize is “back” so it knows the two 4-TBs should already match?

c) Why did the reboot clear the error from the alerts panel but not from the zpool status output at the command line? I ran the command right after the resilver and again after reseating/rebooting, and it seems weird to have the error cleared in only one place.

d) Is there anything I can do to prevent these checksum errors in the future? The drives are directly connected to the motherboard with Silverstone SATA cables. I’m sure there are better ones, but they’re not garbage. The system isn’t a hot, noisy, or vibration-heavy environment.
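Since cabling keeps coming up, one check that might help narrow it down is the SMART attribute 199 (UDMA_CRC_Error_Count), which many SATA drives report and which counts CRC errors on the link between drive and controller. Below is a small sketch that pulls the raw value out of `smartctl -A` output. It assumes the usual ten-column attribute table, and the device path in the usage comment is a placeholder.

```shell
#!/bin/sh
# Read `smartctl -A` output on stdin and print the raw value of
# attribute 199 (UDMA_CRC_Error_Count). Assumes the standard
# ten-column attribute table, where the raw value is column 10.
crc_error_count() {
    awk '$1 == "199" { print $10 }'
}

# Usage (device path is a placeholder):
#   smartctl -A /dev/sdX | crc_error_count
```

A count that rises between checks would point toward the cable, backplane, or port rather than the platters, though I have not confirmed that on this system.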

Thanks for reading, and I appreciate any help.

root@Tubby[~]# zpool status -v Tubby_Tummy

pool: Tubby_Tummy

state: ONLINE

status: One or more devices has experienced an error resulting in data corruption. Applications may be affected.

action: Restore the file in question if possible. Otherwise restore the entire pool from backup.

see: Message ID: ZFS-8000-8A — OpenZFS documentation

scan: scrub in progress since Mon Apr 14 22:22:35 2025

10.6T / 23.3T scanned at 5.00G/s, 1.13T / 23.3T issued at 546M/s

0B repaired, 4.84% done, 11:48:28 to go

config:

NAME STATE READ WRITE CKSUM

Tubby_Tummy ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

65b327f5-28de-11ee-b17a-047c16c6facd ONLINE 0 0 0

5ee564fd-4a3a-4d4d-826e-08b4bac65d84 ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

dcacc904-2e85-11ee-93a8-047c16c6facd ONLINE 0 0 0

dc9b3b3c-2e85-11ee-93a8-047c16c6facd ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

b3cab4b1-3626-11ef-a228-047c16c6facd ONLINE 0 0 0

b3dc73fe-3626-11ef-a228-047c16c6facd ONLINE 0 0 0

cache

nvme0n1p1 ONLINE 0 0 0

errors: Permanent errors have been detected in the following files:

Tubby_Tummy/Plex_Media@Auto_Tubby_Sch05_2024-08-04_00-00:<0x10178>
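The output above has two separate pieces of error information: the per-device READ/WRITE/CKSUM counters in the config table, and the list of affected files under `errors:`. As a rough sketch, the helper below summarizes both from `zpool status -v` text. The function name is my own, and it assumes device rows have exactly five whitespace-separated columns, as in the output above.

```shell
#!/bin/sh
# Summarize `zpool status -v` text read from stdin:
#  - devices whose READ/WRITE/CKSUM counters are not all zero
#  - entries listed after the "errors:" line
summarize_zpool_status() {
    awk '
        /^[ \t]*errors:/ { in_errors = 1; next }
        in_errors && NF > 0 {
            sub(/^[ \t]+/, "")          # trim leading indentation
            print "logged: " $0
            next
        }
        # Device rows: NAME STATE READ WRITE CKSUM, with numeric counters
        NF == 5 && $1 != "NAME" &&
        $3 ~ /^[0-9]/ && $4 ~ /^[0-9]/ && $5 ~ /^[0-9]/ &&
        ($3 != "0" || $4 != "0" || $5 != "0") {
            print "counters: " $1 " read=" $3 " write=" $4 " cksum=" $5
        }
    '
}

# Usage:
#   zpool status -v Tubby_Tummy | summarize_zpool_status
```

Run against the output above, this should report no device counters and a single logged entry, which is the mismatch I am asking about in question c).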

System Information

TrueNAS Scale Dragonfish-24.04.2.5

MSI Pro B550M-VC WiFi Motherboard

Ryzen 5 4600G CPU

32 GB ECC Memory

2x Kingston SA400S37 240-GB SSD (Boot drives)

1x Intel Optane 128-GB SSD (L2 ARC)

2x Seagate Ironwolf 4-TB HDDs (Swapping to 16-TB versions)

2x Seagate Ironwolf 8-TB HDDs

2x Seagate Ironwolf 16-TB HDDs

^1 pool, 3 mirrored vdevs, all connected directly to motherboard with SATA cables

EVGA 220-P2-0650-X1 PSU

Fractal Design Node 804 Case