Dear TrueNAS community,

Over the past couple of weeks I’ve been reading a lot about DIY NAS builds, file systems, ECC memory, OS options and more.

I’ve reached the point where I feel I have a broad understanding of what to take into account when building a NAS. However, I could still use some advice on choosing the vdev layout that best fits my specific situation.

I have a 2.5GbE home network with a handful of devices: a desktop PC for music production, photo editing and gaming, a laptop (also for music production), an Nvidia Shield and an Ubuntu server running various applications in Docker containers.

The main reasons I want to add a NAS to my home network are:

- Backing up personal documents and photos (files I won’t be accessing much)

- Syncing photos from my phone to the NAS (using Nextcloud)

- Storing media for my Plex server

- Storing music production projects and audio sample libraries, so I can work on them from both my desktop and my laptop

- Storing RAW photos for editing in Adobe Lightroom

Because I do a lot of photo editing and music production, browsing files should feel snappy, even over the network, so read performance for small files is important to me.

I’ve decided on a Mini-ITX build. The case I have in mind has 5 external 3.5” bays and 1 internal 2.5” bay. I’m aiming for 20 to 30 TB of NAS storage.

I can afford at most 4x 10TB drives at the moment, and I have the following ZFS layouts in mind:

**Option 1 | Start with 3 disks**

- One Raidz1 vdev with 3 disks (2 data + 1 parity). Later, I could expand the pool with a second vdev of 2 mirrored drives.

Pros:

- Room for expansion

- I can use all 5 bays in the near future

- Lower initial cost (3 drives instead of 4, compared to my other options)

Cons:

- If I expand the storage later, the pool will contain two different vdev types (a raidz1 vdev and a mirror vdev). I’m not sure how this affects read/write performance.

**Option 2 | Start with 4 disks** (and leave 1 bay open for another purpose, such as L2ARC)

- Raidz1 with 4 disks (3 data + 1 parity)

Pros:

- Storage efficiency (the capacity of 3 out of 4 disks is usable)

Cons:

- Not the “ideal” number of drives for raidz1 according to the “magic” (2^n)+p formula. (I’m not even sure this is a con with lz4 compression, since compressed block sizes vary anyway.)

- Can’t use the 5th bay for storage with data redundancy.
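To get a feel for how much the (2^n)+p rule actually matters, here is a rough sketch of RAIDZ space allocation, assuming 4 KiB sectors (ashift=12) and the default 128 KiB recordsize. As far as I understand it, it follows the same arithmetic as ZFS’s `vdev_raidz_asize()`: parity sectors per row of data sectors, then the total rounded up to a multiple of (parity + 1). It ignores compression and metadata, so treat the numbers as approximate.

```python
import math


def raidz_efficiency(width: int, parity: int, block_bytes: int, sector_bytes: int = 4096) -> float:
    """Fraction of a block's on-disk allocation that holds data on a RAIDZ vdev.

    Approximation of RAIDZ allocation (not ZFS source): each row of
    (width - parity) data sectors gets `parity` parity sectors, and the
    total is padded to a multiple of (parity + 1) sectors.
    """
    data = math.ceil(block_bytes / sector_bytes)
    parity_sectors = parity * math.ceil(data / (width - parity))
    total = data + parity_sectors
    # Padding: round the allocation up to a multiple of (parity + 1) sectors.
    total = math.ceil(total / (parity + 1)) * (parity + 1)
    return data / total


RECORD = 128 * 1024  # default recordsize (assumed)

for width in (3, 4, 5):
    ideal = (width - 1) / width
    actual = raidz_efficiency(width, parity=1, block_bytes=RECORD)
    print(f"Raidz1 {width}-wide: ideal {ideal:.1%}, with 128K records {actual:.1%}")
```

Under this model a 4-wide raidz1 stores about 72.7% data at 128K records instead of the ideal 75%, while 3-wide and 5-wide hit their ideal ratios exactly. With a 1M recordsize (often suggested for media datasets) the same calculation gives about 74.9%, so the penalty is small either way.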

**Option 3 | Start with 4 disks** (and leave 1 bay open for another purpose, such as L2ARC)

- Two mirror vdevs of 2 disks each, striped together in one pool (similar to RAID 10).

Pros:

- As I understand it, this layout has the best read/write performance, because random IOPS scale with the number of vdevs and mirrors can serve reads from either disk.

- Faster resilvering if a drive fails (not sure how big the difference is compared to a 4-disk Raidz1)

Cons:

- Lose 50% of storage capacity

- Can’t use the 5th bay for storage with data redundancy.
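A quick capacity comparison of the three options against the 20 to 30 TB target, assuming 10 TB drives and ignoring ZFS overhead (metadata, slop space, RAIDZ padding). Drive vendors count decimal terabytes while ZFS reports binary units (TiB), so both are shown. The 80% fill level is the commonly quoted rule of thumb, used here as an assumption.

```python
TB = 10**12
TIB = 2**40
DRIVE_TB = 10      # 10 TB drives, as in the post
FILL_LIMIT = 0.8   # rule-of-thumb maximum fill level (assumption)


def usable_tb(vdevs):
    """Sum data capacity over vdevs; each vdev is a (kind, disk_count) tuple.

    A raidz1 vdev keeps (n - 1) disks of data; a mirror keeps one disk's worth.
    ZFS overhead (metadata, slop space, RAIDZ padding) is ignored.
    """
    total = 0
    for kind, disks in vdevs:
        if kind == "raidz1":
            total += (disks - 1) * DRIVE_TB
        elif kind == "mirror":
            total += DRIVE_TB
        else:
            raise ValueError(f"unknown vdev type: {kind}")
    return total


layouts = {
    "Option 1 (3-disk raidz1)": [("raidz1", 3)],
    "Option 1 + later 2-disk mirror": [("raidz1", 3), ("mirror", 2)],
    "Option 2 (4-disk raidz1)": [("raidz1", 4)],
    "Option 3 (2x 2-disk mirrors)": [("mirror", 2), ("mirror", 2)],
}

for name, vdevs in layouts.items():
    tb = usable_tb(vdevs)
    tib = tb * TB / TIB
    print(f"{name}: {tb} TB = {tib:.1f} TiB, ~{tib * FILL_LIMIT:.1f} TiB at {FILL_LIMIT:.0%} full")
```

Under these assumptions, options 1 and 3 sit at the bottom of the target range (20 TB, about 18.2 TiB before overhead), while option 2, or option 1 after adding the mirror, reaches 30 TB (about 27.3 TiB).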

NAS build I’ve got in mind:

Case: Jonsbo N2 (ITX)

- 5x 3.5” and 1x 2.5” bays

MOBO: ASRock B550M-ITX/ac

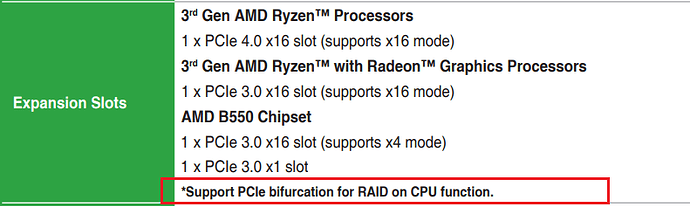

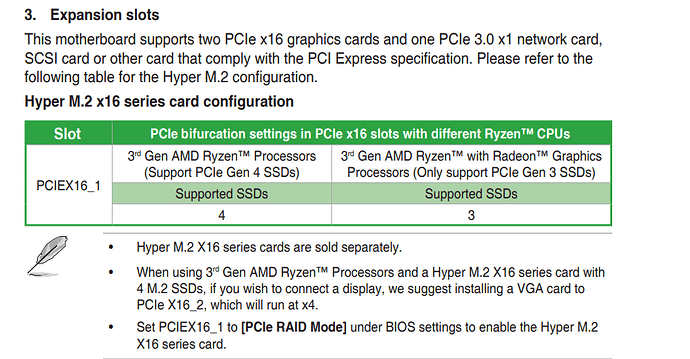

- 1x PCIe 4.0 x16 (runs at PCIe 3.0 with a Ryzen 4000G-series APU such as the 4650G)

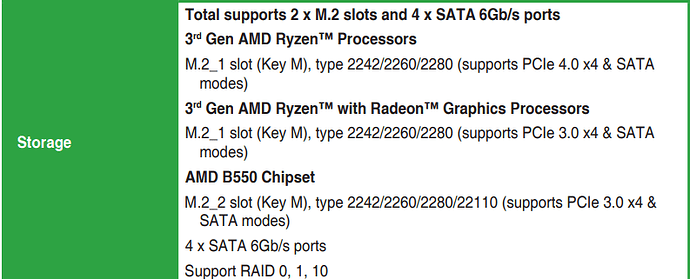

- 1x M.2 (PCIe 4.0 x4 + SATA; PCIe 3.0 with the 4650G), 4x SATA-600

CPU: Ryzen 5 Pro 4650G (already bought this)

RAM: Kingston KSM32ED8/32HC (will upgrade to 64GB total later)

Storage:

- 1x SSD (TrueNAS boot disk)

- 3x or 4x 10 TB 7200 RPM HDDs (probably WD Red Pro or Seagate IronWolf)

Right now I’m leaning towards option 3 because of the higher IOPS. However, I might not even notice the difference, since ZFS caches frequently read data in RAM (the ARC).
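The IOPS argument can be made concrete with a common rule of thumb for uncached random I/O: pool IOPS scale with the number of vdevs, a RAIDZ vdev delivers roughly the random IOPS of a single disk, and a mirror vdev writes at about one disk’s IOPS but can spread reads over all its disks. `DISK_IOPS = 150` is a hypothetical placeholder for a 7200 RPM drive, not a measurement, and the model ignores ARC and L2ARC hits entirely.

```python
DISK_IOPS = 150  # hypothetical random IOPS of one 7200 RPM HDD (assumption)


def vdev_iops(kind, disks):
    """Rule-of-thumb (read, write) random IOPS for a single vdev."""
    if kind == "raidz1":
        return DISK_IOPS, DISK_IOPS          # roughly one disk's worth
    if kind == "mirror":
        return disks * DISK_IOPS, DISK_IOPS  # reads can use every side of the mirror
    raise ValueError(f"unknown vdev type: {kind}")


def pool_iops(vdevs):
    """Approximate pool random IOPS as the sum over vdevs (assumes evenly spread I/O)."""
    reads = writes = 0
    for kind, disks in vdevs:
        r, w = vdev_iops(kind, disks)
        reads += r
        writes += w
    return reads, writes


layouts = {
    "Option 1 (3-disk raidz1)": [("raidz1", 3)],
    "Option 1 + later 2-disk mirror": [("raidz1", 3), ("mirror", 2)],
    "Option 2 (4-disk raidz1)": [("raidz1", 4)],
    "Option 3 (2x 2-disk mirrors)": [("mirror", 2), ("mirror", 2)],
}

for name, vdevs in layouts.items():
    r, w = pool_iops(vdevs)
    print(f"{name}: ~{r} read / ~{w} write IOPS (uncached)")
```

For the mixed layout in option 1, the even-spread assumption is optimistic: ZFS does not rebalance existing data when a vdev is added, and it tends to steer new writes toward the emptier vdev, so the older data stays on the raidz1 vdev.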

I prefer speed over storage capacity. Ideally, working from the NAS would feel like working from my local SSD. What do you guys think? Do you have any additional advice? Which option should I go for, or are there other options I haven’t thought about?
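Independently of the vdev layout, the 2.5GbE link caps sequential throughput. A rough sketch comparing the raw line rate (before Ethernet, TCP and SMB overhead) with a typical SATA SSD’s ~550 MB/s sequential read; the file sizes are hypothetical examples.

```python
LINK_GBIT = 2.5       # 2.5GbE link from the post
SATA_SSD_MB_S = 550   # typical SATA SSD sequential read (approximate)


def link_mb_s(gbit: float) -> float:
    """Raw line rate in MB/s (10^6 bytes), before protocol overhead."""
    return gbit * 1e9 / 8 / 1e6


def load_seconds(size_mb: float, mb_s: float) -> float:
    """Best-case transfer time for a file of size_mb at a given throughput."""
    return size_mb / mb_s


net = link_mb_s(LINK_GBIT)
print(f"2.5GbE ceiling: {net:.1f} MB/s vs SATA SSD ~{SATA_SSD_MB_S} MB/s")
for label, size_mb in [("hypothetical 50 MB RAW file", 50),
                       ("hypothetical 2 GB sample folder", 2000)]:
    print(f"{label}: >= {load_seconds(size_mb, net):.2f} s over the network, "
          f"~{load_seconds(size_mb, SATA_SSD_MB_S):.2f} s from a SATA SSD")
```

So for large sequential reads, the link rather than the pool is likely to be the limit once the pool can deliver around 300 MB/s. For browsing many small files, latency and ARC hit rate probably matter more than raw bandwidth.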

Thanks for taking the time to read my post!