I am not able to create a pool. It keeps throwing this error:

FAILED

('no such pool or dataset',)

with the following traceback:

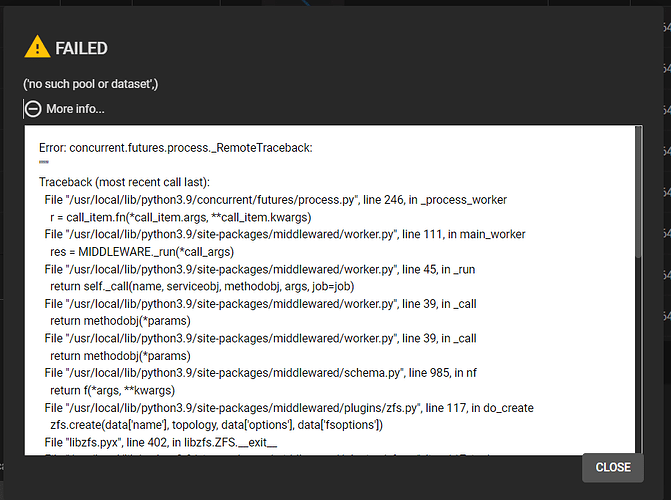

Error: concurrent.futures.process._RemoteTraceback:

"""

Traceback (most recent call last):

File "/usr/local/lib/python3.9/concurrent/futures/process.py", line 246, in _process_worker

r = call_item.fn(*call_item.args, **call_item.kwargs)

File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 111, in main_worker

res = MIDDLEWARE._run(*call_args)

File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 45, in _run

return self._call(name, serviceobj, methodobj, args, job=job)

File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 39, in _call

return methodobj(*params)

File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 39, in _call

return methodobj(*params)

File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 985, in nf

return f(*args, **kwargs)

File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/zfs.py", line 117, in do_create

zfs.create(data['name'], topology, data['options'], data['fsoptions'])

File "libzfs.pyx", line 402, in libzfs.ZFS.__exit__

File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/zfs.py", line 117, in do_create

zfs.create(data['name'], topology, data['options'], data['fsoptions'])

**File "libzfs.pyx", line 1376, in libzfs.ZFS.create**

**libzfs.ZFSException: no such pool or dataset**

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/usr/local/lib/python3.9/site-packages/middlewared/job.py", line 355, in run

await self.future

File "/usr/local/lib/python3.9/site-packages/middlewared/job.py", line 391, in __run_body

rv = await self.method(*([self] + args))

File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 981, in nf

return await f(*args, **kwargs)

File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/pool.py", line 735, in do_create

raise e

File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/pool.py", line 688, in do_create

z_pool = await self.middleware.call('zfs.pool.create', {

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1279, in call

return await self._call(

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1236, in _call

return await methodobj(*prepared_call.args)

File "/usr/local/lib/python3.9/site-packages/middlewared/service.py", line 496, in create

rv = await self.middleware._call(

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1244, in _call

return await self._call_worker(name, *prepared_call.args)

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1250, in _call_worker

return await self.run_in_proc(main_worker, name, args, job)

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1169, in run_in_proc

return await self.run_in_executor(self.__procpool, method, *args, **kwargs)

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1152, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

libzfs.ZFSException: ('no such pool or dataset',)

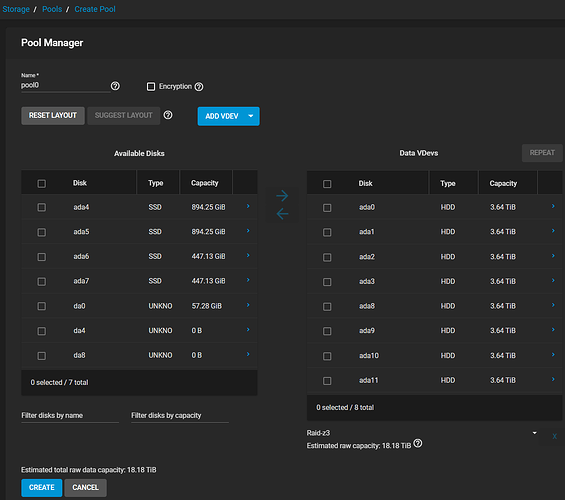

I tried to add two SSDs to the Cache VDEV. Even after removing one of them and trying again, pool creation still fails, so I am not sure the cache devices are the cause.

Any ideas, anyone?