Hello! I am setting up my home TrueNAS server (spec in my signature).

The main purpose of my server is home file storage with installed apps: nextcloud, collabora, home assistant. For this I want to use RAIDZ2 8 x 1TB HDD.

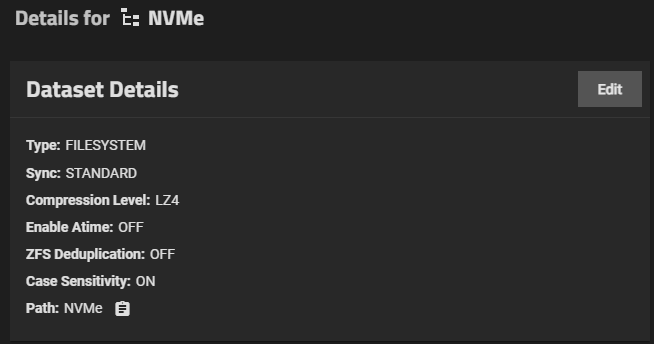

An additional task I want to solve is running several VMs; for this I installed 9 NVMe drives. For testing, I created a RAIDZ2 pool and installed a Windows 10 VM, but ran into low performance: for any action inside the VM, Task Manager shows 80-100% disk load. What are the best practices for configuring a pool and VMs for high performance?

Thanks for any help!

Use Mirrors instead of a RaidZ2 for the VM Pool.

To explain Farout’s answer…

VMs (usually) use virtual file systems inside a zVol. ZFS doesn’t understand what is happening inside a zVol and cannot provide any optimisations, so these are going to be small reads and writes to random locations.

The problems with RAIDZ are:

- Block size is the width of the data parts of RAIDZ1 - and this is likely to be far larger than the block size of the virtual disk, leading to read and write amplification.

- IOPS are per vDev and mirrors have more vDevs and so more IOPS - and reads from mirrors can be from either drive so you get twice as many read IOPS.
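
To put rough numbers on the two points above, here is a minimal Python sketch. The per-drive IOPS figure, the 128 KiB block and the 4 KiB guest I/O size are made-up illustrative assumptions, and the model (about one drive's worth of random IOPS per vDev, with reads scaling by mirror width) is only the rule of thumb described above, not a measurement of any real pool.

```python
# Rule-of-thumb model only; every number here is an illustrative assumption.

def pool_random_iops(vdevs: int, drive_iops: int, mirror_width: int = 1) -> dict:
    """Estimate random IOPS as roughly one drive's worth per vDev.

    On a mirror, a read can be served by any drive in the vDev, so read
    IOPS scale with mirror_width; a write must go to every drive.
    """
    return {
        "write": vdevs * drive_iops,
        "read": vdevs * drive_iops * mirror_width,
    }


def amplification(block_bytes: int, io_bytes: int) -> float:
    """Bytes touched per byte requested when a small guest I/O
    lands inside a larger block."""
    return max(block_bytes, io_bytes) / io_bytes


DRIVE_IOPS = 10_000  # hypothetical per-drive figure

# The same 8 drives, laid out two ways.
raidz2 = pool_random_iops(vdevs=1, drive_iops=DRIVE_IOPS)
mirrors = pool_random_iops(vdevs=4, drive_iops=DRIVE_IOPS, mirror_width=2)

print("1 x RAIDZ2 (8 wide):", raidz2)
print("4 x mirror (2 wide):", mirrors)
print("4 KiB guest I/O in a 128 KiB block:", amplification(128 * 1024, 4 * 1024), "x")
```

Real pools will not follow this exactly (caching, queue depth and the drives themselves all matter), but it shows why the same 8 drives can behave very differently depending on layout.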

Also, you probably use synchronous writes for virtual disks/zvols, which carry a large overhead. Putting only the VM's O/S onto a virtual drive (which needs sync=always) and keeping your data in normal ZFS datasets accessed by e.g. NFS limits sync=always to O/S writes, which are far fewer.

Finally, you should see whether the new LXC support in Fangtooth can allow you to replace Linux VMs with LXCs which can be far more efficient than a VM.

Thanks to all for the answers and clarifications

I followed your advice and did the following:

- Moved Zvol VM to another Pool (so as not to reinstall the VM operating system)

- Deleted Pool RAIDZ2

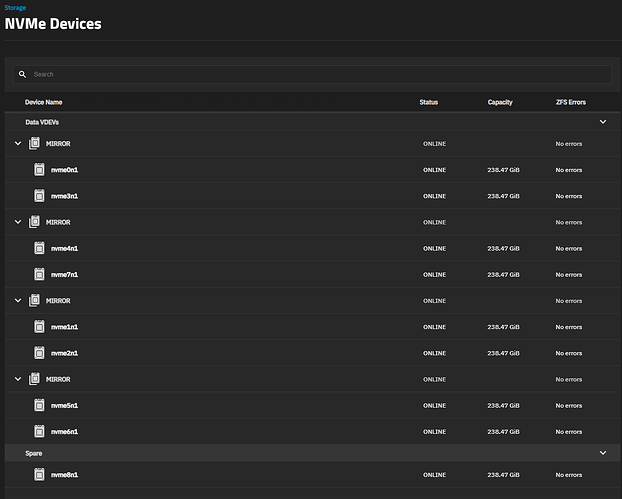

- Created Pool Mirror (configuration screenshot attached)

- Moved Zvol VM back to the new Pool Mirror

But nothing changed.

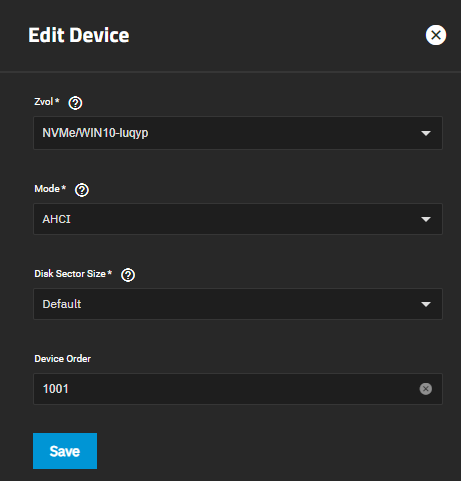

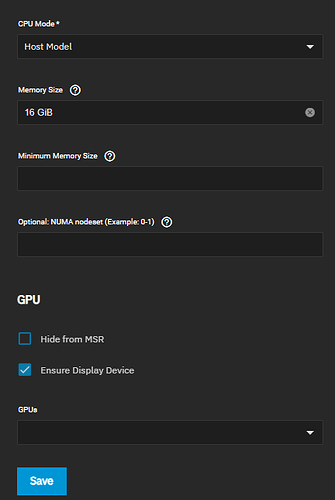

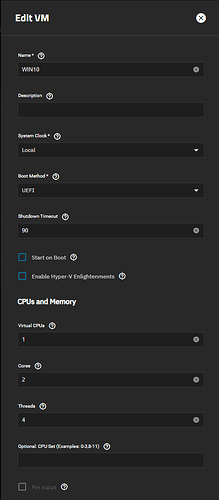

I am attaching screenshots of the current settings.

You’re using AHCI for an emulated storage controller which is probably contributing. It will probably require a reinstall, but changing this to virtio would likely help.

You should have enough cores on that 7551P that assigning 8 threads to the VM isn’t an issue.

Changed AHCI to virtio. The OS did not boot, so I reinstalled. During Windows 10 installation the disk was not displayed, so I loaded the ISO image with the virtio drivers, after which the installation was successful.

Installed all necessary virtio drivers and the latest OS updates. At first glance the VM works better; I will keep testing.

Thank you all very much for your help!

1152MiB/s is pretty darned low IMO for 4x NVMe vDevs.

It’s also what my VMs peaked at on a Xeon D when benchmarking memdisk performance…

@stux Any idea what the bottleneck might be?

I think it’s related to raw memcpy performance in the VM.

These results confirmed mine.

Note max mem perf is circa 2.2GB/s.

So half of that is 1.1GB/s, which is what you see if there are two memory copies in the data path.
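
The arithmetic behind that estimate, as a small Python sketch. The 2.2 GB/s figure is the one quoted above; the idea that each extra copy in the data path divides throughput evenly is a simplifying assumption, not something measured here.

```python
def effective_throughput(memcpy_gb_per_s: float, copies: int) -> float:
    """Throughput left over when every byte is copied `copies` times in series."""
    return memcpy_gb_per_s / copies


MAX_MEMCPY_GB_PER_S = 2.2  # figure quoted in the thread

one_copy = effective_throughput(MAX_MEMCPY_GB_PER_S, 1)
two_copies = effective_throughput(MAX_MEMCPY_GB_PER_S, 2)

print(f"1 copy:   {one_copy:.1f} GB/s")
print(f"2 copies: {two_copies:.1f} GB/s")
```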

I continue testing:

I created a VM (Cores - 4, Threads - 8, Memory Size - 32 GiB), installed Ubuntu 22 and launched “meta-vps-bench”.

I encountered a lot of errors during installation and launch:

“WARNING: the --test option is deprecated…”

“WARNING: --max-time is deprecated…”

The errors were related to the syntax change in the new version of sysbench. So I edited the files “setup.sh” and “bench.sh” to fix the errors and merged the output of the results into one file “meta-vps-bench.result”

I attach my test results and my files “setup.sh” and “bench.sh” (maybe they will be useful to someone).

bench.sh (5.3 KB)

setup.sh (863 Bytes)

meta-vps-bench.result.txt (14.7 KB)

My memory test results:

Total operations: 48999476 (4898039.74 per second)

47851.05 MiB transferred (4783.24 MiB/sec)
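
As a quick sanity check on those two lines, this Python sketch derives the implied size of each operation and converts the rate to GB/s. The input figures are copied from the output above; reading the result as the tool's per-operation block size is an assumption about how sysbench counts operations.

```python
# Figures copied from the sysbench memory output quoted above.
total_ops = 48_999_476
mib_transferred = 47_851.05
mib_per_sec = 4_783.24

# Implied amount of data moved per operation, in KiB.
kib_per_op = mib_transferred * 1024 / total_ops

# Same rate expressed in decimal GB/s, for comparison with other figures.
gb_per_sec = mib_per_sec * 1024 * 1024 / 1e9

print(f"{kib_per_op:.3f} KiB per operation")
print(f"{gb_per_sec:.2f} GB/s")
```

Each operation moved about 1 KiB, so this run measures small-block memory writes and is not directly comparable to a large sequential copy.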

I have no specific knowledge of your environment or of the tools you are using for these benchmarks, but as someone who at one point did performance analysis for a living, I should advise the following. To compare your results with other benchmarks or with specifications, or to draw any conclusions about performance, you need to understand what is happening under the covers (e.g. what the O/S does with caching or with zeros) and how the tool interfaces with the O/S (e.g. to avoid any caching).

Thanks for your reply.

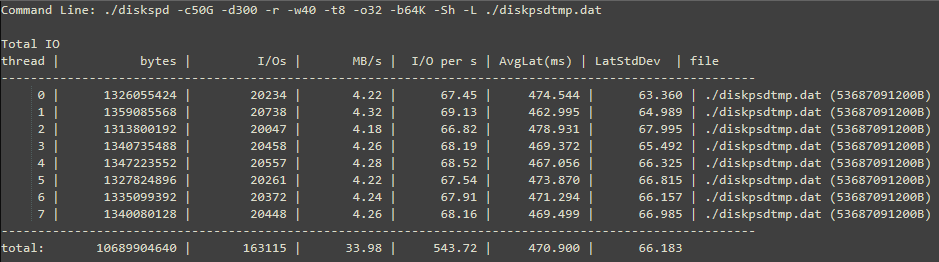

To rule out different behavior of Ubuntu and Windows in diskspd I went back to VM with Windows.

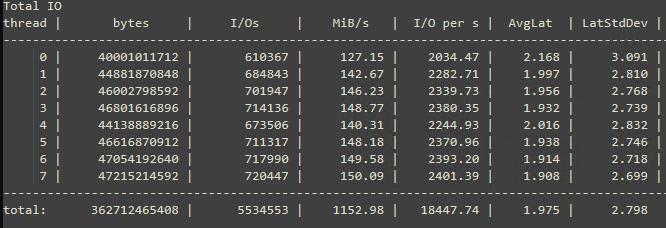

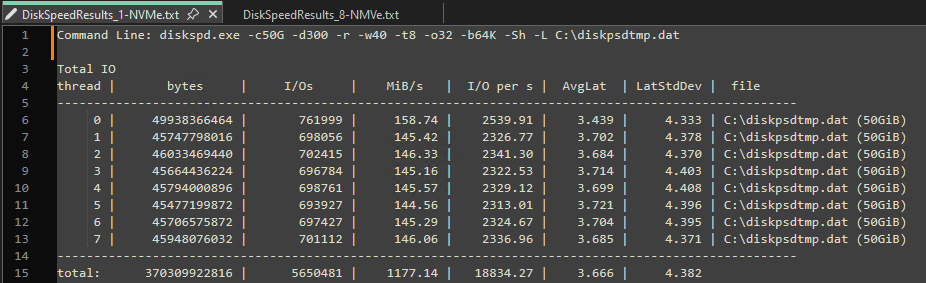

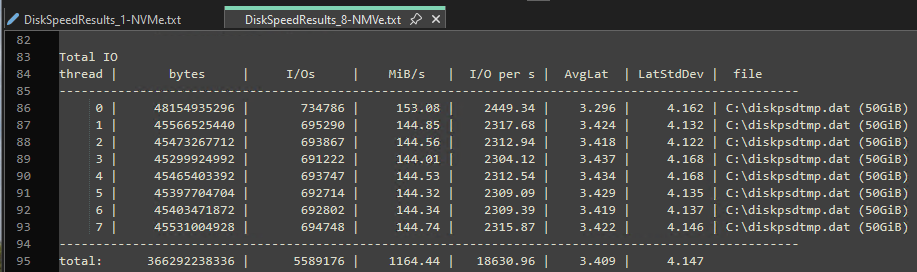

I created a new Stripe pool consisting of one NVMe disk and moved Zvol Windows 10 to it, results:

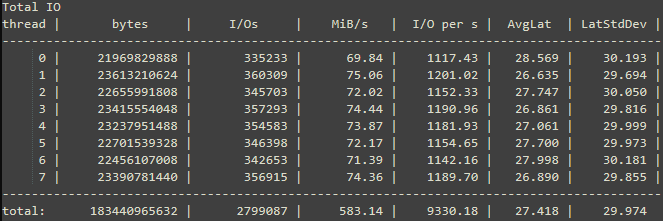

Then I moved Zvol Windows 10 back to Pool consisting of 8 NVMe (4 x MIRROR | 2 wide ) and the result is almost the same:

9 NVME for testing. I wish I was you. :). Thought my setup was good but this is the shizzle.

In fact, I was very lucky because I got all the HDDs and SSDs for free, almost new.

When most of them fail, I plan to buy one large PCI-E SSD for VMs.

Perhaps someone will be interested in the continuation of my story:

So far I have not been able to get a comfortable working experience in the Windows VM. Any disk access inside the machine (opening folders, copying) still comes with a delay; the speed is comparable to a system installed on a regular HDD.

For comparison, I installed ESXi 8 on one of the NVMe SSDs and created a VM with Windows. I got very high speed of Windows and instant response to any operations inside the VM. The difference is huge! Therefore, now I am considering the option with VM TrueNAS on ESXi with Passthrough SAS controller. At the moment, we need to solve the problem with the network adapter:

In ESXi, the virtual interfaces of my PCI-E Mikrotik router do not work. In TrueNAS Scale, this works. So I ordered Mellanox ConnectX-4 to connect ESXi and hence TrueNAS VMs to my LAN at high 10/25G speed.

My experience is that a LOG (SLOG) device is more important than RAIDz(n) vs mirror as long as the SLOG device is a very fast (at small random writes) SSD. Most consumer SSD devices are no better at small random writes than HDD.

I have been using Intel datacenter SSDs rated for 50,000+ I/Ops for 4kB random writes with very good luck on zpools for VMs. They do not need to be very large, but you need the very high I/Ops.

Look for the spec for small (4kB) random write performance on the SSD. If that is not spec’d, assume it is not great.

WHY is this? Whether the vdev is RAIDz or mirror, a sync write will have to be committed to non-volatile storage before the write call is returned to meet POSIX specs. A zpool without a separate LOG device will commit the write to an area at the start of the zpool and at a later time move the data to free space in the zpool. This operation fights with other operations for the vdev devices. By using a SLOG device, that device is dedicated to sync write operations (and later read operations as the data is moved into the zpool itself).

This is true but don’t underestimate the performance hit you may get from read and write amplification.