I am running TrueNAS 25.04.0 on Proxmox with 48GB DDR5 RAM and a 3950X, with 24 CPUs assigned.

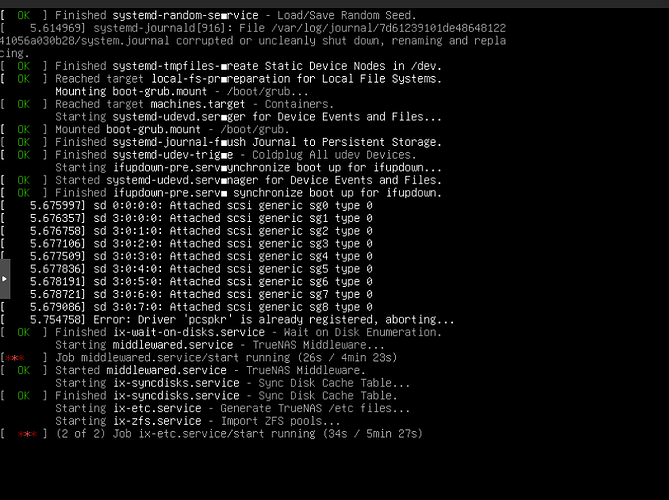

I have a RAIDZ2 vdev with 5x18TB drives. One drive developed bad sectors, so I replaced it and resilvered with no issues. Next, I added another drive with the intention of expanding the vdev, turning it into a 6x18TB pool. The expansion started, but at some point TrueNAS crashed and got stuck on boot. I removed the drive, booted, and it showed a degraded pool.

I ran both short and long SMART tests on the disk, and both passed. I manually removed and re-added the disk, which restarted the resilver. When that finished, TrueNAS crashed again during the expansion and got stuck on boot again. I have tried this multiple times with the same result.

I ran a scrub and everything is fine on the data front, but the pool is still degraded. I am unable to cancel the expansion or re-run it successfully.

Here is the output from `zpool status`:

      pool: Storage
     state: DEGRADED
    status: One or more devices could not be used because the label is missing or
            invalid. Sufficient replicas exist for the pool to continue
            functioning in a degraded state.
    action: Replace the device using 'zpool replace'.
       see: msg/ZFS-8000-4J
      scan: resilvered 230G in 00:57:04 with 0 errors on Sat Apr 26 18:07:16 2025
    expand: expansion of raidz2-0 in progress since Thu Apr 17 18:51:53 2025
            29.0T / 58.1T copied at 38.0M/s, 49.88% done, paused for resilver or clear
    config:

            NAME                                      STATE     READ WRITE CKSUM
            Storage                                   DEGRADED     0     0     0
              raidz2-0                                DEGRADED     0     0     0
                f88a42c3-7304-496d-ba86-0db7af32d212  ONLINE       0     0     0
                f95bcba6-ebcb-484c-8680-bed661af0b89  ONLINE       0     0     0
                13e1c816-a9a9-4035-9a81-eaf115f6d127  ONLINE       0     0     0
                e9a031aa-e5ca-4e56-8660-e93f3bb7b5c1  ONLINE       0     0     0
                3f4c9848-d7b6-4ce5-85af-f4217d979504  ONLINE       0     0     0
                7580203092224097384                   UNAVAIL      0     0     0  was /dev/disk/by-partuuid/0c754d23-17af-483c-8928-f67f70955149

    errors: No known data errors
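
For a rough sense of how much work the expansion still has left, here is a minimal Python sketch that parses the `expand:` progress line from the output above and estimates the remaining time. It assumes the `T` and `M` suffixes are binary units (TiB, MiB) and that the reported 38.0M/s rate would hold once the expansion resumes, which may not be the case. The names `EXPAND_LINE`, `to_bytes`, and `remaining_days` are made up for this example.

```python
import re

# Expand progress line copied from the zpool status output above.
EXPAND_LINE = "29.0T / 58.1T copied at 38.0M/s, 49.88% done, paused for resilver or clear"

# Binary unit multipliers; assumption: zpool prints T and M as TiB and MiB.
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def to_bytes(text):
    """Convert a size such as '29.0T' into a number of bytes."""
    return float(text[:-1]) * UNITS[text[-1]]


def remaining_days(line):
    """Estimate the days left for the expansion at the reported copy rate."""
    match = re.search(
        r"([\d.]+[KMGT]) / ([\d.]+[KMGT]) copied at ([\d.]+[KMGT])/s", line
    )
    if match is None:
        raise ValueError("not an expand progress line")
    copied, total, rate = (to_bytes(group) for group in match.groups())
    return (total - copied) / rate / 86400  # 86400 seconds per day


print(f"{remaining_days(EXPAND_LINE):.1f} days remaining")
```

With the numbers above this comes out to roughly nine days of copying still to go, so each crash during the expansion interrupts a very long-running operation.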