Sorry, I'm also a newbie here.

- OS Version: TrueNAS-SCALE-24.10.0.2
- Product: ProLiant MicroServer
- Model: AMD Turion™ II Neo N54L Dual-Core Processor
- Memory: 16 GiB
- NIC: 2.5Gb
- Disks: 4x 16TB Seagate ST16000NM001G-2KK103

Here is what `fdisk` sees:

```
truenas_admin@truenas:~$ sudo fdisk -l /dev/sda
Disk /dev/sda: 14.55 TiB, 16000900661248 bytes, 31251759104 sectors
Disk model: ST16000NM001G-2K
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 7FB75C4C-D652-4209-B220-46B46B624F5D

Device     Start         End     Sectors  Size Type
/dev/sda1   2048 31251757055 31251755008 14.6T Solaris /usr & Apple ZFS
```
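As a quick sanity check on the partition table, the fdisk numbers can be worked through by hand: sda1 runs from sector 2048 to 31251757055, so it covers all but 4096 sectors (2 MiB) of the disk. The sketch below just redoes that arithmetic with the values copied from the output. It only checks the layout; it says nothing about whether a valid ZFS label is inside the partition.

```python
# Values copied from the fdisk output above.
SECTOR_BYTES = 512                             # logical sector size
DISK_SECTORS = 31_251_759_104                  # whole /dev/sda
PART_START, PART_END = 2048, 31_251_757_055    # /dev/sda1, end sector is inclusive
TIB = 1024 ** 4

part_sectors = PART_END - PART_START + 1
outside = DISK_SECTORS - part_sectors          # 2048 before sda1 + 2048 after it
disk_tib = DISK_SECTORS * SECTOR_BYTES / TIB
part_tib = part_sectors * SECTOR_BYTES / TIB

print(f"sda1 sectors:         {part_sectors}")
print(f"sectors outside sda1: {outside} ({outside * SECTOR_BYTES // 1024**2} MiB)")
print(f"disk {disk_tib:.2f} TiB, sda1 {part_tib:.2f} TiB")
```

The computed sector count matches the `Sectors` column fdisk prints, and lsblk lists the same 14.6T partition size for sdb1, sdc1 and sde1, so at this resolution sda1 is laid out like the other data partitions.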

Here is what `blkid` sees:

```
truenas_admin@truenas:~$ sudo blkid
/dev/sdf3: LABEL="boot-pool" UUID="3370188939962827323" UUID_SUB="13189111619698390422" BLOCK_SIZE="4096" TYPE="zfs_member" PARTUUID="7e3b6cf8-eaa2-4d48-a443-40a145ecbf9e"
/dev/sdf2: LABEL_FATBOOT="EFI" LABEL="EFI" UUID="0D4D-2BA7" BLOCK_SIZE="512" TYPE="vfat" PARTUUID="4796eaee-887d-4982-b509-9917f97bf0b5"
/dev/sdd1: UUID_SUB="544723011062381214" BLOCK_SIZE="4096" TYPE="zfs_member" PARTLABEL="data" PARTUUID="b953b2f5-e269-43bf-9d20-b4d34e5ece63"
/dev/sdb1: LABEL="RAID" UUID="1901115498841768824" UUID_SUB="12922634147688021886" BLOCK_SIZE="4096" TYPE="zfs_member" PARTLABEL="data" PARTUUID="a83058de-032f-4f81-9c0a-f62bb50cc359"
/dev/sde1: LABEL="RAID" UUID="1901115498841768824" UUID_SUB="538098376802416322" BLOCK_SIZE="4096" TYPE="zfs_member" PARTLABEL="data" PARTUUID="bb9f4f4b-9457-4f2b-a398-b9eab36d5e96"
/dev/sdc1: LABEL="RAID" UUID="1901115498841768824" UUID_SUB="4407132540304298790" BLOCK_SIZE="4096" TYPE="zfs_member" PARTLABEL="data" PARTUUID="6fb1e42b-76a5-44a4-b63a-c608e643435a"
/dev/sdf1: PARTUUID="09d4a535-53ad-4f0f-86dc-bfa14b3ad6c6"
truenas_admin@truenas:~$
```

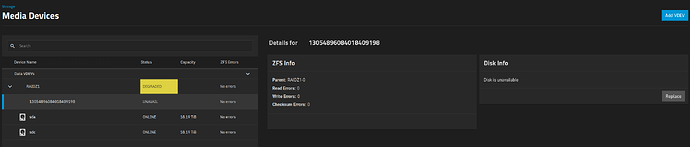
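Grouping the blkid lines above by pool name makes it easier to see which partitions identify themselves as members of `RAID`: only sdb1, sdc1 and sde1 do. sdd1 is a `zfs_member` with no LABEL, and sda1 does not produce a blkid line at all. A minimal sketch with the output pasted in as a string; `parse_blkid` is just a throwaway helper for illustration, not part of any tool.

```python
import re
from collections import defaultdict

# blkid output copied from above.
BLKID_OUTPUT = """\
/dev/sdf3: LABEL="boot-pool" UUID="3370188939962827323" UUID_SUB="13189111619698390422" BLOCK_SIZE="4096" TYPE="zfs_member" PARTUUID="7e3b6cf8-eaa2-4d48-a443-40a145ecbf9e"
/dev/sdf2: LABEL_FATBOOT="EFI" LABEL="EFI" UUID="0D4D-2BA7" BLOCK_SIZE="512" TYPE="vfat" PARTUUID="4796eaee-887d-4982-b509-9917f97bf0b5"
/dev/sdd1: UUID_SUB="544723011062381214" BLOCK_SIZE="4096" TYPE="zfs_member" PARTLABEL="data" PARTUUID="b953b2f5-e269-43bf-9d20-b4d34e5ece63"
/dev/sdb1: LABEL="RAID" UUID="1901115498841768824" UUID_SUB="12922634147688021886" BLOCK_SIZE="4096" TYPE="zfs_member" PARTLABEL="data" PARTUUID="a83058de-032f-4f81-9c0a-f62bb50cc359"
/dev/sde1: LABEL="RAID" UUID="1901115498841768824" UUID_SUB="538098376802416322" BLOCK_SIZE="4096" TYPE="zfs_member" PARTLABEL="data" PARTUUID="bb9f4f4b-9457-4f2b-a398-b9eab36d5e96"
/dev/sdc1: LABEL="RAID" UUID="1901115498841768824" UUID_SUB="4407132540304298790" BLOCK_SIZE="4096" TYPE="zfs_member" PARTLABEL="data" PARTUUID="6fb1e42b-76a5-44a4-b63a-c608e643435a"
/dev/sdf1: PARTUUID="09d4a535-53ad-4f0f-86dc-bfa14b3ad6c6"
"""

FIELD = re.compile(r'(\w+)="([^"]*)"')

def parse_blkid(text):
    """Map each device name (e.g. 'sdb1') to a dict of its KEY="value" fields."""
    devices = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        dev, _, rest = line.partition(":")   # split only at the first colon
        devices[dev.removeprefix("/dev/")] = dict(FIELD.findall(rest))
    return devices

devices = parse_blkid(BLKID_OUTPUT)

# Group the ZFS member partitions by pool name (blkid's LABEL field).
by_label = defaultdict(list)
for dev, fields in devices.items():
    if fields.get("TYPE") == "zfs_member":
        by_label[fields.get("LABEL", "(no LABEL)")].append(dev)

for label, devs in by_label.items():
    print(f"{label}: {sorted(devs)}")
print("sda1 reported by blkid:", "sda1" in devices)
```

`str.removeprefix` needs Python 3.9 or newer.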

Here is what `zpool status` says:

```
truenas_admin@truenas:~$ sudo zpool status -x
  pool: RAID
 state: DEGRADED
status: One or more devices could not be used because the label is missing or
        invalid. Sufficient replicas exist for the pool to continue
        functioning in a degraded state.
action: Replace the device using 'zpool replace'.
   see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-4J
  scan: scrub repaired 0B in 10:59:19 with 0 errors on Sat Nov 16 20:37:35 2024
expand: expanded raidz1-0 copied 28.2T in 2 days 01:31:58, on Sat Nov 16 09:38:16 2024
config:

        NAME                                      STATE     READ WRITE CKSUM
        RAID                                      DEGRADED     0     0     0
          raidz1-0                                DEGRADED     0     0     0
            a83058de-032f-4f81-9c0a-f62bb50cc359  ONLINE       0     0     0
            bb9f4f4b-9457-4f2b-a398-b9eab36d5e96  ONLINE       0     0     0
            6fb1e42b-76a5-44a4-b63a-c608e643435a  ONLINE       0     0     0
            2e58e50f-49bc-4ada-979d-68ed8582e70c  UNAVAIL      0     0     0
        cache
          b953b2f5-e269-43bf-9d20-b4d34e5ece63    ONLINE       0     0     0

errors: No known data errors
```
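The pool members in `zpool status` are listed by partition UUID, and the ONLINE ones match the PARTUUIDs blkid shows for sdb1, sde1, sdc1 and (as cache) sdd1. Cross-referencing the two outputs shows that the UNAVAIL member's ID does not appear anywhere in the blkid output. A small sketch with both outputs pasted in; `leaf_states` is a hypothetical helper that treats any row whose name looks like a UUID as a leaf device. Whether sda1 still carries that PARTUUID can't be told from these outputs alone.

```python
import re

# Rows copied from the config section of `zpool status -x` above.
CONFIG_ROWS = """\
RAID DEGRADED 0 0 0
  raidz1-0 DEGRADED 0 0 0
    a83058de-032f-4f81-9c0a-f62bb50cc359 ONLINE 0 0 0
    bb9f4f4b-9457-4f2b-a398-b9eab36d5e96 ONLINE 0 0 0
    6fb1e42b-76a5-44a4-b63a-c608e643435a ONLINE 0 0 0
    2e58e50f-49bc-4ada-979d-68ed8582e70c UNAVAIL 0 0 0
cache
  b953b2f5-e269-43bf-9d20-b4d34e5ece63 ONLINE 0 0 0
"""

# PARTUUIDs from the blkid output above, mapped to the partition that reported them.
BLKID_PARTUUIDS = {
    "7e3b6cf8-eaa2-4d48-a443-40a145ecbf9e": "sdf3",
    "4796eaee-887d-4982-b509-9917f97bf0b5": "sdf2",
    "b953b2f5-e269-43bf-9d20-b4d34e5ece63": "sdd1",
    "a83058de-032f-4f81-9c0a-f62bb50cc359": "sdb1",
    "bb9f4f4b-9457-4f2b-a398-b9eab36d5e96": "sde1",
    "6fb1e42b-76a5-44a4-b63a-c608e643435a": "sdc1",
    "09d4a535-53ad-4f0f-86dc-bfa14b3ad6c6": "sdf1",
}

UUID_RE = re.compile(r"[0-9a-f]{8}(-[0-9a-f]{4}){3}-[0-9a-f]{12}")

def leaf_states(text):
    """Return {name: state} for rows whose name looks like a partition UUID."""
    states = {}
    for line in text.splitlines():
        cols = line.split()
        if len(cols) >= 2 and UUID_RE.fullmatch(cols[0]):
            states[cols[0]] = cols[1]
    return states

states = leaf_states(CONFIG_ROWS)
for name, state in states.items():
    where = BLKID_PARTUUIDS.get(name, "not in blkid output")
    print(f"{state:8} {name} -> {where}")
```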

```
truenas_admin@truenas:~$ sudo zpool list
NAME        SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ  FRAG  CAP  DEDUP    HEALTH  ALTROOT
RAID       43.7T  29.5T  14.2T        -     14.5T    0%  67%  1.00x  DEGRADED  /mnt
boot-pool  56.5G  4.64G  51.9G        -         -    2%   8%  1.00x    ONLINE  -
```
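The `zpool list` figures can be checked against the disk size. For a raidz vdev, `zpool list` reports raw space with parity included, and it prints binary units (T meaning TiB). With one disk at about 14.55 TiB, the `RAID` SIZE of 43.7T lines up with three disks, and SIZE plus EXPANDSZ (58.2T) lines up with four. The sketch only does that arithmetic; it does not explain why the fourth disk's share appears under EXPANDSZ.

```python
TIB = 1024 ** 4
DISK_BYTES = 16_000_900_661_248  # per-disk capacity reported by fdisk and smartctl

# RAID row of `zpool list` above (zpool prints binary units, so T means TiB).
SIZE_T, EXPANDSZ_T = 43.7, 14.5

disk_tib = DISK_BYTES / TIB
three_disks = 3 * disk_tib
four_disks = 4 * disk_tib

print(f"one disk:    {disk_tib:.2f} TiB")
print(f"three disks: {three_disks:.2f} TiB  vs SIZE {SIZE_T}T")
print(f"four disks:  {four_disks:.2f} TiB  vs SIZE + EXPANDSZ {SIZE_T + EXPANDSZ_T:.1f}T")
```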

```
truenas_admin@truenas:~$ sudo smartctl -x /dev/sda
smartctl 7.4 2023-08-01 r5530 [x86_64-linux-6.6.44-production+truenas] (local build)
Copyright (C) 2002-23, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===
Model Family:     Seagate Exos X16
Device Model:     ST16000NM001G-2KK103
Serial Number:    ZL26DXG6
LU WWN Device Id: 5 000c50 0c6471213
Firmware Version: SN03
User Capacity:    16,000,900,661,248 bytes [16.0 TB]
Sector Sizes:     512 bytes logical, 4096 bytes physical
Rotation Rate:    7200 rpm
Form Factor:      3.5 inches
Device is:        In smartctl database 7.3/5528
ATA Version is:   ACS-4 (minor revision not indicated)
SATA Version is:  SATA 3.3, 6.0 Gb/s (current: 3.0 Gb/s)
Local Time is:    Sun Nov 17 04:07:11 2024 PST
SMART support is: Available - device has SMART capability.
SMART support is: Enabled
AAM feature is:   Unavailable
APM feature is:   Unavailable
Rd look-ahead is: Enabled
Write cache is:   Enabled
DSN feature is:   Disabled
ATA Security is:  Disabled, NOT FROZEN [SEC1]
Write SCT (Get) Feature Control Command failed: Connection timed out
Wt Cache Reorder: Unknown (SCT Feature Control command failed)

=== START OF READ SMART DATA SECTION ===
SMART overall-health self-assessment test result: PASSED

...and so on...
```

And finally, `lsblk`:

```
truenas_admin@truenas:~$ sudo lsblk
NAME   MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
sda      8:0    0  14.6T  0 disk
└─sda1   8:1    0  14.6T  0 part
sdb      8:16   0  14.6T  0 disk
└─sdb1   8:17   0  14.6T  0 part
sdc      8:32   0  14.6T  0 disk
└─sdc1   8:33   0  14.6T  0 part
sdd      8:48   0 953.9G  0 disk
└─sdd1   8:49   0 953.9G  0 part
sde      8:64   0  14.6T  0 disk
└─sde1   8:65   0  14.6T  0 part
sdf      8:80   1  57.3G  0 disk
├─sdf1   8:81   1     1M  0 part
├─sdf2   8:82   1   512M  0 part
└─sdf3   8:83   1  56.8G  0 part
truenas_admin@truenas:~$
```
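Putting lsblk next to blkid: there are four 14.6T partitions, but blkid reports `LABEL="RAID"` for only three of them. The sketch below makes the odd one out explicit. The set `RAID_LABELLED` is copied by hand from the blkid output, and `partition_sizes` is a throwaway helper that assumes no MOUNTPOINTS column is filled in, as is the case here. The result only suggests that sda1 is the member `zpool status` lists as UNAVAIL; the outputs in this post don't show sda1's PARTUUID, so it is not proof.

```python
# lsblk output copied from above (header plus device rows).
LSBLK = """\
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS
sda 8:0 0 14.6T 0 disk
└─sda1 8:1 0 14.6T 0 part
sdb 8:16 0 14.6T 0 disk
└─sdb1 8:17 0 14.6T 0 part
sdc 8:32 0 14.6T 0 disk
└─sdc1 8:33 0 14.6T 0 part
sdd 8:48 0 953.9G 0 disk
└─sdd1 8:49 0 953.9G 0 part
sde 8:64 0 14.6T 0 disk
└─sde1 8:65 0 14.6T 0 part
sdf 8:80 1 57.3G 0 disk
├─sdf1 8:81 1 1M 0 part
├─sdf2 8:82 1 512M 0 part
└─sdf3 8:83 1 56.8G 0 part
"""

# Partitions that blkid reports with LABEL="RAID".
RAID_LABELLED = {"sdb1", "sdc1", "sde1"}

def partition_sizes(text):
    """Return {partition: SIZE} for every row whose TYPE column is 'part'."""
    sizes = {}
    for line in text.splitlines()[1:]:  # skip the header row
        cols = line.split()
        if len(cols) >= 6 and cols[5] == "part":
            sizes[cols[0].lstrip("├└─")] = cols[3]  # drop the tree-drawing prefix
    return sizes

sizes = partition_sizes(LSBLK)
data_parts = sorted(p for p, size in sizes.items() if size == "14.6T")
unlabelled = sorted(set(data_parts) - RAID_LABELLED)

print("14.6T partitions:", data_parts)
print("without a RAID label in blkid:", unlabelled)
```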
