This is the beginning of my custom TrueNAS build using a Dell Optiplex 3000 Thin Client with an Intel N6005 processor. I will edit/update this post as the build progresses. Ultimately this is to be a low-power offsite backup, mirroring the data on my equally ill-advised virtualized TrueNAS Core on Hyper-V, which uses passed-through disks and has been running rock solid for over 4 years. Everything I am doing here will inevitably result in a swath of people telling me that what I am doing is shite: it’s not server grade, consumer SATA sucks, you’re not using ECC memory, it’s going to be unreliable, why are you doing this? I will only bother responding to the last item on that list: because it’s fun, and it’s really enjoyable to contradict everyone who insists TrueNAS is only for server-grade hardware. So you are welcome to point out all the things that make this build such a bad idea, but you would really be wasting your time. So let’s get into the details, shall we!

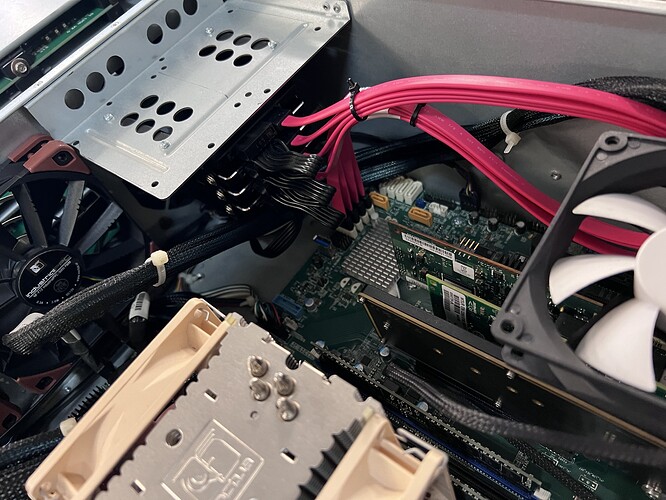

The thin client arrived with 4GB of RAM and two SODIMM slots. I immediately replaced the 4GB with a single 16GB DDR4-3200 SODIMM, which leaves room to add another 16GB at my leisure. I then installed a 10Gtek M.2 (M Key) to 6x SATA adapter. Since the adapter is longer than the stock M.2 2230 slot, I desoldered the standoff and used one of those double-sided adhesive hanging strips to hold the adapter down.

I initially installed TrueNAS Core on the built-in 32GB eMMC storage, but enabling the eMMC disabled the onboard M.2 NVMe slot, so I opted to install TrueNAS on a USB stick for the time being.

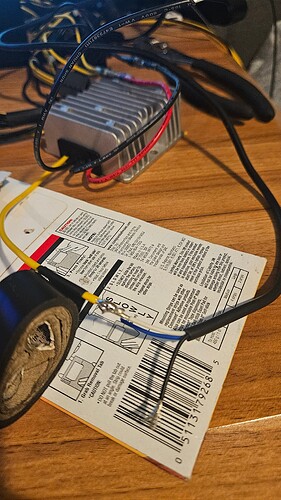

Next I had to address power. I plan to use 3.5in hard disks, so I need 3.3V, 5V and 12V power, and the included power brick only supplies 19.5V.

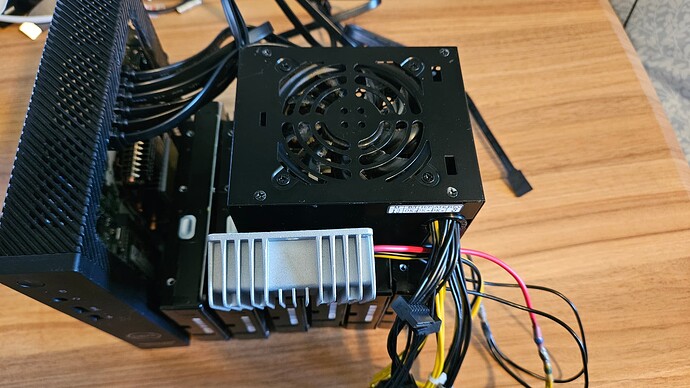

I had an old 400W power supply from an Akitio eGPU box that I dismantled so I could use its guts for a Thunderbolt to PCIe adapter, so I wired it up to supply power to both the hard drives and the thin client. There was one problem, however: the power supply only had 8-pin PCIe power connectors. Upon opening up the power supply I was able to quickly identify the 3.3V and 5V rails, to which I soldered some 5x SATA power cables in the correct pinout order.

Now I just needed to supply 19+V to the thin client. Using a 12V to 19V step-up adapter, I easily converted the 12V coming off one of the 8-pin PCIe cables to 19V and wired an appropriately sized barrel connector to its output.

After triple checking all the voltages I first plugged in one hard drive and verified it worked. I then took a deep breath and plugged the barrel connector into the thin client and voilà! It booted up into TrueNAS. Easy peasy!

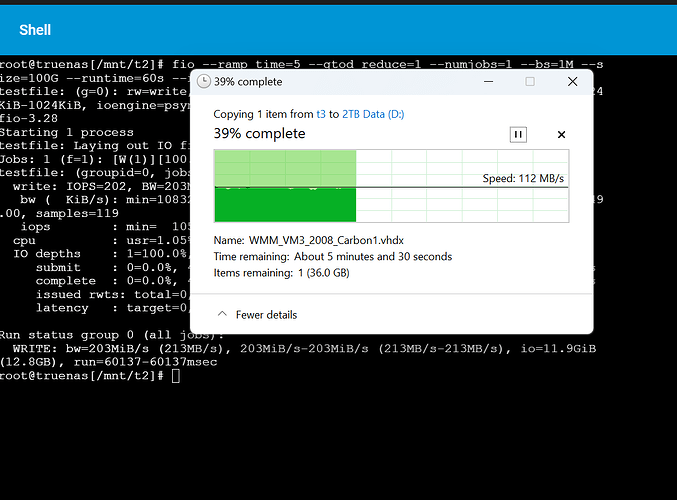

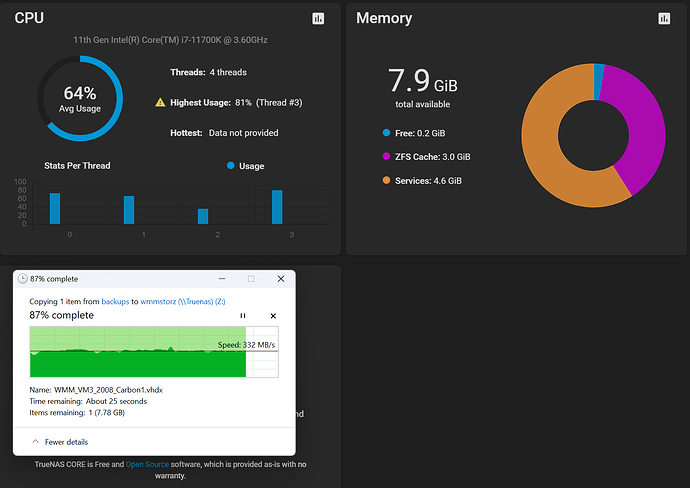

Since I have not yet received my 6 x 4TB 7200 RPM SATA drives, I attached four 2TB drives I had lying around, configured them in a RAIDZ1 pool, and tested the local throughput as well as the throughput via the onboard Realtek NIC. My initial tests yielded around 200MB/s locally and about 112MB/s over the 1GbE network from my Windows workstation.
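For context on the 112MB/s network figure, here is a rough Python sketch of the best-case TCP payload rate on gigabit Ethernet. It assumes a standard 1500-byte MTU, IPv4, and TCP with no options; none of those settings were checked on this box, so treat the result as a ballpark.

```python
# Rough TCP payload ceiling for 1GbE.
# Assumptions (not measured on this build): 1500-byte MTU, IPv4, no TCP options.
LINK_BITS_PER_SEC = 1_000_000_000

MTU = 1500                        # IP packet size in bytes
IP_TCP_HEADERS = 20 + 20          # IPv4 header + TCP header
WIRE_OVERHEAD = 8 + 14 + 4 + 12   # preamble/SFD + Ethernet header + FCS + inter-frame gap

payload = MTU - IP_TCP_HEADERS    # 1460 bytes of user data per frame
on_wire = MTU + WIRE_OVERHEAD     # 1538 bytes of wire time per frame


def tcp_ceiling_mb_per_s(link_bits_per_sec: int = LINK_BITS_PER_SEC) -> float:
    """Best-case TCP payload rate in MB/s (decimal megabytes)."""
    return link_bits_per_sec / 8 * payload / on_wire / 1e6


measured = 112.0                  # MB/s, the network result quoted in the post
ceiling = tcp_ceiling_mb_per_s()
print(f"ceiling ~{ceiling:.1f} MB/s, measured is {measured / ceiling:.0%} of that")
```

In other words, the 1GbE result is already close to what the wire can carry, so the network test says little about the pool itself.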

Neither scenario seemed to cause the CPU to spike excessively and it looks like I have some headroom to spare.

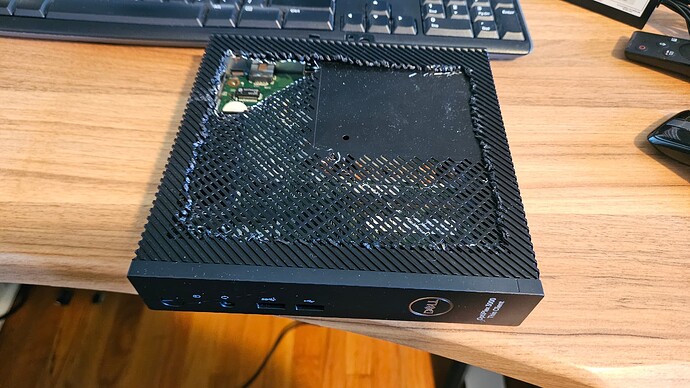

I’ve begun cutting away the top of the thin client chassis. I am first going to attempt to reuse the thin client chassis by cutting out most of the top except for about a 1" border, which will support a 3D printed housing extension. That extension will host the drives and a 3D printed chassis for them, the oversized step-up converter and the power supply. I could 3D print everything, and in the end I may.

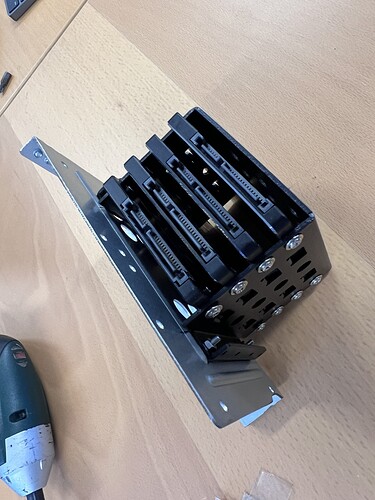

Next steps will be some further performance testing when I get my 4TB drives in. I also plan to 3D print a tool-less hard drive chassis with a DIY backplane and locking mechanism. Yes, I am custom janking a backplane in the jankiest fashion. I have also ordered an E key M.2 to PCIe adapter and a 10GbE card with an AQC113C chipset, which should be able to run at near full speed over the PCIe Gen 3 x1 M.2 slot originally used for WiFi. To my understanding this card won’t work with TrueNAS Core, so I may need to use SCALE for this build.
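To put a number on "near full speed", here is a small sketch comparing the raw bandwidth of a PCIe Gen 3 x1 link with Ethernet line rates. It only looks at raw link rates and ignores packet and protocol overhead on both sides, so real-world results would land somewhat lower.

```python
# How much of 10GbE can a PCIe Gen 3 x1 slot feed?
# Raw link rates only; PCIe and Ethernet protocol overhead are ignored here.
GEN3_GT_PER_S = 8.0         # gigatransfers per second, per lane
GEN3_ENCODING = 128 / 130   # 128b/130b line encoding used by PCIe Gen 3


def pcie_gen3_mb_per_s(lanes: int) -> float:
    """Raw PCIe Gen 3 bandwidth in MB/s for the given lane count."""
    return GEN3_GT_PER_S * 1e9 * GEN3_ENCODING / 8 / 1e6 * lanes


def ethernet_mb_per_s(gbit: float) -> float:
    """Raw Ethernet line rate in MB/s."""
    return gbit * 1e9 / 8 / 1e6


slot = pcie_gen3_mb_per_s(lanes=1)
for gbit in (2.5, 10):
    line = ethernet_mb_per_s(gbit)
    share = min(slot / line, 1.0)
    print(f"{gbit}GbE: line rate {line:.0f} MB/s, x1 slot covers {share:.0%}")
```

By this estimate the x1 slot can carry roughly four fifths of 10GbE line rate, and all of 2.5GbE with room to spare.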

Yes I know, currently I am only seeing 200 MB/s read and write speeds locally, but I think I can better that. And while this will be a remote backup over a 1Gbps FIOS WAN on both ends, I’d like to do the initial sync locally over 10GbE. I imagine I will end up taking this back home, blowing this thing away and starting from scratch several times, so not having to wait days to copy all the data initially will be nice. However, if in the end I can’t get more than 2.5GbE speeds over 10GbE, I will just swap in a 2.5GbE card, call it a day, and use the 10GbE card for something else.
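A quick sketch of what the initial sync might cost in time. The 20 TB data size is purely an assumption (five data drives' worth of 4TB disks, completely full); the 112MB/s and 200MB/s rates are the figures measured above, and the 2.5GbE and 10GbE rates are raw line rates.

```python
# Estimate initial-sync time, limited by the slower of the network and the pool.
DATA_TB = 20            # assumption: hypothetical full pool, not a measured size
POOL_MB_PER_S = 200     # local pool throughput measured so far

# MB/s: 1GbE is the measured figure, the other two are raw line rates.
links = {"1GbE": 112, "2.5GbE": 312.5, "10GbE": 1250}


def sync_hours(data_tb: float, link_mb_per_s: float, pool_mb_per_s: float) -> float:
    """Hours to copy data_tb when limited by the slower of link and pool."""
    rate = min(link_mb_per_s, pool_mb_per_s)
    return data_tb * 1e6 / rate / 3600   # 1 TB = 1e6 MB (decimal units)


for name, rate in links.items():
    print(f"{name}: {sync_hours(DATA_TB, rate, POOL_MB_PER_S):.1f} h")
```

With the pool at 200MB/s, anything faster than 1GbE is limited by the pool rather than the link, so 2.5GbE and 10GbE come out the same until local throughput improves.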

Update Day 2

I am still waiting on the 6x4TB 7200RPM 3.5" drives to be delivered.

I did however fix my initial hack job on the case where I started cutting the top open.

I used my Dremel to cut out a portion of the top, leaving all the latching mechanisms in place.

As you can see, it leaves plenty of space for the SATA cables to pass through.

Below are some pictures of the hardware arranged to give an idea of the desired placement of individual components and to visualize the overall footprint of the finished NAS…

In the end it looks like it will be comparable in size to most 6-bay off-the-shelf NAS systems. Next is to make some measurements and design the backplane and enclosure. I envision a tool-less enclosure where the drives just slide into the backplane without any caddy, with some kind of spring-loaded tab to lock each drive into place. To save on space I may put the PCIe slot on the top outside of the case, since the only time I am likely to use 10GbE or the PCIe slot is when I bring the NAS onsite to restore or back up large amounts of data.

Update 2 Day 2

Now with the 6 drives connected I ran the local speed test again on a RAIDZ1 and got a similar result to my 2TB drives, maxing out around 220MBps. Curious whether the ASM1166 was overheating, as its heatsink was very hot to the touch, I blew a fan over it, which gave me another 20-30MBps. I checked the CPU usage and it was relatively low, averaging about 25% across all 4 cores.

So the next question I wanted to answer was whether there was some sort of port multiplier crap going on. To answer this I created a 6x4TB stripe to benchmark. This yielded very different results, topping out at around 950MBps with a fan on it and 930+MBps without a fan. I know this is a PCIe Gen 3 board, and one lane of that would max out at 984MBps.

I recall this adapter being an x2 adapter, and I may already be pushing the limits of the 6 drives in a striped array. So I ran lspci and the results were consistent with this performance test…

Capabilities: [80] Express (v2) Endpoint, MSI 00…

LnkCap: Port #0, Speed 8GT/s, Width x2…

LnkSta: Speed 8GT/s (ok), Width x2 (ok)…
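For reading lines like these, here is a minimal Python sketch that maps the reported speed and width to a PCIe generation and a raw bandwidth figure. The helper name `link_bandwidth` and the lookup table are illustrative and not part of lspci; the numbers are raw link rates before protocol overhead.

```python
import re

# Per-lane signalling rate (GT/s) -> (PCIe generation, line-encoding efficiency)
PCIE_RATES = {
    2.5: (1, 8 / 10),
    5.0: (2, 8 / 10),
    8.0: (3, 128 / 130),
    16.0: (4, 128 / 130),
}


def link_bandwidth(line: str) -> tuple[int, int, float]:
    """Parse an lspci LnkCap/LnkSta line; return (generation, lanes, raw MB/s)."""
    m = re.search(r"Speed\s+([\d.]+)GT/s.*?Width\s+x(\d+)", line)
    if m is None:
        raise ValueError(f"no link speed/width found in: {line!r}")
    gt_per_s, lanes = float(m.group(1)), int(m.group(2))
    gen, efficiency = PCIE_RATES[gt_per_s]
    mb_per_s = gt_per_s * 1e9 * efficiency / 8 / 1e6 * lanes
    return gen, lanes, mb_per_s


gen, lanes, mb = link_bandwidth("LnkSta: Speed 8GT/s (ok), Width x2 (ok)")
print(f"PCIe Gen {gen} x{lanes}: ~{mb:.0f} MB/s raw")
```

Fed the LnkSta line above, this reports a Gen 3 x2 link with a raw ceiling of a little under 2000 MB/s.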

The link is running at 8GT/s with a width of x2, which is PCIe Gen 3 x2. That gives a raw ceiling of roughly 1,970MBps, about double what the stripe is delivering, so the link itself is not the limit. So am I leaving any performance on the table? Is the ASM1166 bottlenecking my drives? Well, looking at real-world benchmarks from other users, these drives average a sustained write speed of about 159MBps. Multiply that by 6 and you get 954MBps, which is almost exactly what I am seeing here. Clearly there doesn’t seem to be any port multiplication going on with this M.2 to SATA adapter, and I am getting the full benefit of the drive speeds, so no complaints there.
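The same per-drive figure gives a rough yardstick for the RAIDZ1 pool. The sketch below uses the common rule of thumb that a RAIDZ vdev streams at about (drives minus parity) times the per-drive rate; that is an idealized assumption for large sequential I/O, and real pools usually land below it depending on record size and workload.

```python
# Ideal streaming ceilings derived from the per-drive figure quoted above.
PER_DRIVE_MB_PER_S = 159   # sustained rate reported by other users of these drives
DRIVES = 6


def stripe_ceiling(drives: int, per_drive: float) -> float:
    """Plain stripe: every drive carries user data."""
    return drives * per_drive


def raidz_ceiling(drives: int, per_drive: float, parity: int = 1) -> float:
    """Rule of thumb: only the non-parity share of each stripe is user data."""
    return (drives - parity) * per_drive


measured = {"stripe": 950, "raidz1": 220}   # MB/s, from the tests in this post
ideal = {
    "stripe": stripe_ceiling(DRIVES, PER_DRIVE_MB_PER_S),
    "raidz1": raidz_ceiling(DRIVES, PER_DRIVE_MB_PER_S),
}
for layout, ceiling in ideal.items():
    print(f"{layout}: ideal {ceiling} MB/s, measured {measured[layout] / ceiling:.0%} of ideal")
```

By this yardstick the stripe sits right at its ceiling while the RAIDZ1 pool reaches well under a third of its own, which points away from the drives and the SATA adapter.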

Next is to figure out what is bottlenecking my RAIDZ1 config. In my desktop I have 4x5TB drives in a ZFS RAIDZ1 under Hyper-V and I get 337MBps from fio locally.

And I get a pretty steady 350MBps over SMB from the VM host.

Those drives are Seagate Terascale drives and don’t appear to have better performance specs than the Dell drives I ordered. This makes me think that maybe 4 drives will perform better. Let’s see…

Four drives didn’t help too much and 5 drives gave me about 300MB/s. Interestingly, my virtualized TrueNAS on my desktop only has 8GB of memory and seems to be performing better. Not much better, but a little. Based on my stripe test, I don’t think the bottleneck comes from using 6 drives specifically or from a single bad drive in the bunch. Perhaps I am incurring latency from only using a single stick of 16GB PC4-3200. It certainly does not appear to be purely CPU bound. I’ve accomplished enough for one day. If anyone has any suggestions other than saying I shouldn’t be using the hardware I’m using, I’m all ears. In the end, the performance isn’t bad and is more than sufficient for an offsite backup over a 1GbE WAN. Either way, I just want to figure out where the bottleneck is actually coming from. A good night’s rest always brings new ideas.

Send in the clowns!!!
