Exactly which commands have you tried, and what was the result?

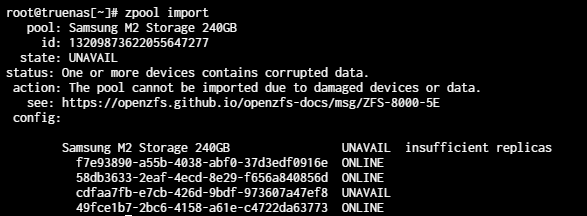

I tried zpool import (“pool name/id”) with various arguments like -f and -F, but no dice. Basically the ones I linked in the original post up there.

Adding new drives does not move the data. Data written before the expansion is still entirely on the old drives; data written after the expansion is striped across all drives, probably mostly on the new ones, and is lost if any drive is unrecoverable.

Okay, well, this is great news, because I added the drives and they essentially died within a day or two, without any new data being added to the server (maybe a few Nextcloud photos, but who cares; they are still on my phone and can be re-added later if this issue is fixed).

That depends on what the situation is. What is the hardware condition of each drive?

It might be possible to roll back to a state where the pool can be imported, discarding some data. Otherwise, you could attach the drives to a Windows machine and try Klennet ZFS Recovery: scanning is free, but if it finds something to recover, the license will cost you $500.
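For reference, a rollback attempt of the kind mentioned above could look roughly like the sketch below. It assumes OpenZFS's `zpool import` recovery options: `-F` (rewind to an earlier transaction group), `-n` (together with `-F`, only report whether recovery would work), `-N` (do not mount datasets) and `-o readonly=on` (do not write to the disks). The pool name `tank` is a placeholder, and the `run` and `rollback` helpers are made up for illustration. By default the script only prints the commands; whether a rewind can help at all when a whole drive is missing is not something it can promise.

```shell
#!/bin/sh
# Placeholder pool name (assumption): use the name or numeric id
# that a plain `zpool import` listing reports.
POOL="${POOL:-tank}"

# Print commands by default; set DRY_RUN=0 to really execute them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# rollback check  -> ask whether a rewind could make the pool importable
# rollback import -> perform the rewind import, read-only and unmounted
rollback() {
    case "$1" in
        check)  run zpool import -F -n "$POOL" ;;
        import) run zpool import -o readonly=on -N -F "$POOL" ;;
        *)      echo "usage: rollback check|import" >&2; return 1 ;;
    esac
}

# Default to the harmless check step.
rollback "${1:-check}"
```

The two steps are kept separate on purpose, so the output of the check can be read before anything is actually imported, and ideally only after the original drives have been cloned.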

All of them work except the two new ones I added. Both were apparently DOA when they were sold to me. One is recognized by my mobo but doesn’t seem to actually work. The other is completely dead: not recognized by the mobo, and it makes horrible sounds. I know, this is my fault. I should have checked the drives, but I trusted the seller.

So, if I remove the SSD and the 4TB drive (the ones that were in my NAS originally, with ALL of my important data on them) and install them in my PC, I should be able to just clone them onto new drives for backups? I assume I can use Macrium or something?

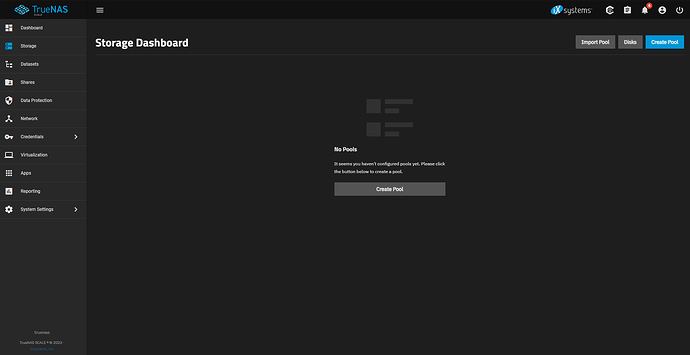

In any case: buy some good drives, to clone the existing drives before attempting any potentially destructive operations, and/or to hold any data you might recover. And to make a new pool that is well designed and safer from the start.
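As an illustration of the cloning step, here is a minimal sketch of a raw disk-to-disk copy. It assumes a Linux machine with GNU `dd` (rather than a Windows tool such as Macrium); the device paths `/dev/sdX` and `/dev/sdY` are placeholders that have to be checked against the real disks, and the `run` and `clone_disk` helpers are made up for this example. By default it only prints the command it would run.

```shell
#!/bin/sh
# Placeholder device paths (assumptions): confirm which disk is which
# (for example with `lsblk`) before running anything for real.
SRC="${SRC:-/dev/sdX}"   # original pool member, only read from
DST="${DST:-/dev/sdY}"   # new drive of equal or larger size; it is overwritten

# Print commands by default; set DRY_RUN=0 to really execute them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

clone_disk() {
    # Refuse the most obvious mistake: copying a disk onto itself.
    if [ "$1" = "$2" ]; then
        echo "source and destination are the same device" >&2
        return 1
    fi
    # Block-level copy of the whole device; conv=fsync flushes the
    # written data to the target before dd exits.
    run dd if="$1" of="$2" bs=1M conv=fsync status=progress
}

clone_disk "$SRC" "$DST"
```

A block-level copy like this duplicates the ZFS labels along with the data, so the clone is an exact stand-in for the original drive and recovery attempts can be made on the copy instead.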

So, I essentially just clone the data from my old drives to new ones (that way I have a backup in case something gets messed up). How do I go about re-importing the pool? If I disconnect the faulty drive, it still shows up as UNAVAILABLE in the zpool import output, even though it isn’t connected to the NAS. Also, when I try zpool import (enter name of pool), it says “pool does not exist” or something along those lines. Is that because I exported it?
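For the re-import question, a sketch of the discovery steps, under some assumptions: a Linux-based system where disks appear under `/dev/disk/by-id`, and OpenZFS's `zpool import`, which lists importable pools when given no pool name and accepts either a pool name or the numeric id from that listing. The `run`, `find_pools` and `import_by_id` helpers are made up for illustration, and by default the commands are only printed.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to really execute them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# List exported pools that ZFS can see, first in the default search
# locations, then by scanning the by-id device directory explicitly.
find_pools() {
    run zpool import
    run zpool import -d /dev/disk/by-id
}

# Import using the numeric id shown in the listing, read-only and
# without mounting datasets, so nothing on the disks is changed.
import_by_id() {
    run zpool import -o readonly=on -N "$1"
}

find_pools
```

Once the listing shows the pool, `import_by_id <id>` with the id copied from it sidesteps any doubt about the exact pool name. The listing is built from the labels stored on the drives that are still attached, which would explain why a disconnected member can still appear in it as unavailable.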