I’ve recently moved from CORE to SCALE (latest Dragonfish). I did a brand new installation and am re-applying the CORE configuration from screenshots I captured beforehand. The same network configuration fails to resolve DNS names on SCALE, and I am baffled as to why.

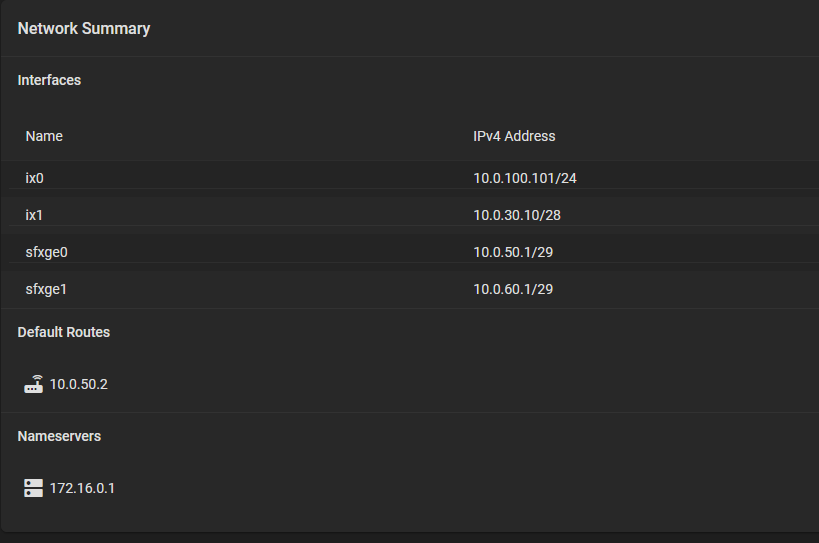

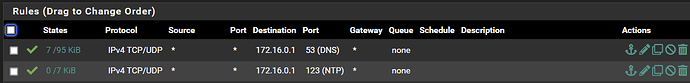

Here is my CORE network configuration, which worked without a problem (i.e. it could reach the Internet and fetch updates).

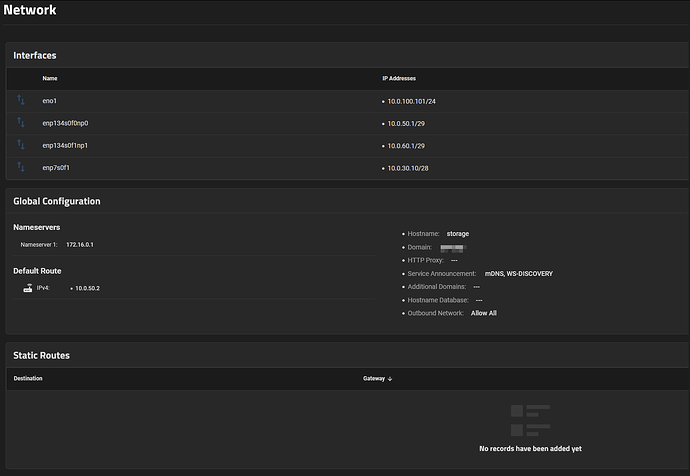

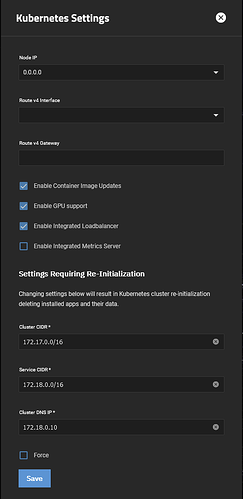

Here is the SCALE configuration that no longer works.

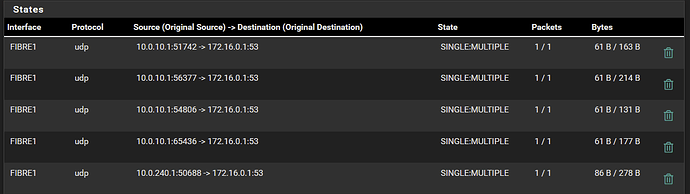

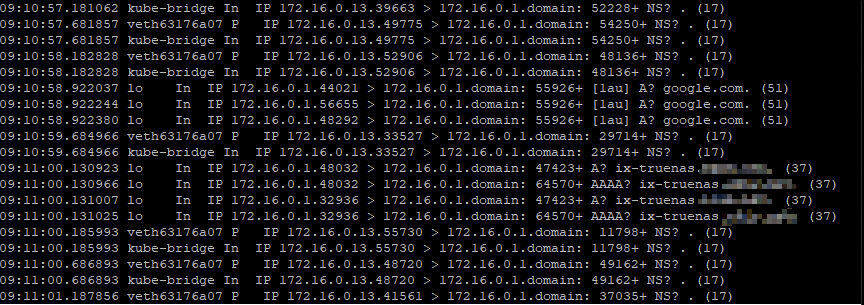

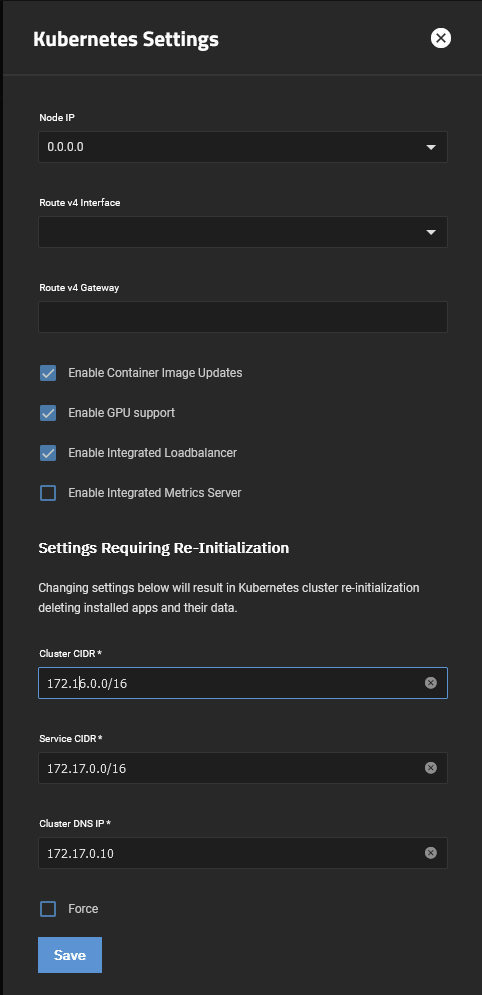

Extra info:

- `172.16.0.1:53` is where the pfSense firewall’s DNS Resolver service is running
- `10.0.50.2` is where the PVI interface gateway is defined (inter-VLAN routing)
- there are no firewall changes between the previous CORE and the new SCALE installation
- this setup works for the rest of the network and did work for CORE, but no longer works on SCALE

I can ping both the PVI gateway and the firewall - basic connectivity works.

```
admin@storage[~]$ ping 172.16.0.1
PING 172.16.0.1 (172.16.0.1) 56(84) bytes of data.
64 bytes from 172.16.0.1: icmp_seq=1 ttl=64 time=0.041 ms
64 bytes from 172.16.0.1: icmp_seq=2 ttl=64 time=0.058 ms
64 bytes from 172.16.0.1: icmp_seq=3 ttl=64 time=0.055 ms
64 bytes from 172.16.0.1: icmp_seq=4 ttl=64 time=0.065 ms

--- 172.16.0.1 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3070ms
rtt min/avg/max/mdev = 0.041/0.054/0.065/0.008 ms
```

```
admin@storage[~]$ ping 10.0.50.2
PING 10.0.50.2 (10.0.50.2) 56(84) bytes of data.
64 bytes from 10.0.50.2: icmp_seq=1 ttl=64 time=0.804 ms
64 bytes from 10.0.50.2: icmp_seq=2 ttl=64 time=0.817 ms
64 bytes from 10.0.50.2: icmp_seq=3 ttl=64 time=0.865 ms
64 bytes from 10.0.50.2: icmp_seq=4 ttl=64 time=0.925 ms

--- 10.0.50.2 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3056ms
rtt min/avg/max/mdev = 0.804/0.852/0.925/0.047 ms
```

Digging google.com through the default nameserver doesn’t work.

```
admin@storage[~]$ dig google.com
;; communications error to 172.16.0.1#53: connection refused
;; communications error to 172.16.0.1#53: connection refused
;; communications error to 172.16.0.1#53: connection refused

; <<>> DiG 9.18.19-1~deb12u1-Debian <<>> google.com
;; global options: +cmd
;; no servers could be reached
```

…but it does work through Google’s DNS.

```
admin@storage[~]$ dig @8.8.8.8 google.com

; <<>> DiG 9.18.19-1~deb12u1-Debian <<>> @8.8.8.8 google.com
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 27401
;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 512
;; QUESTION SECTION:
;google.com.                    IN      A

;; ANSWER SECTION:
google.com.             153     IN      A       142.250.69.206

;; Query time: 16 msec
;; SERVER: 8.8.8.8#53(8.8.8.8) (UDP)
;; WHEN: Sat Jun 22 00:33:13 MDT 2024
;; MSG SIZE  rcvd: 55
```

I’ve checked /etc/resolv.conf and everything looks fine:

```
admin@storage[~]$ cat /etc/resolv.conf
domain <redacted>
nameserver 172.16.0.1
```

Also, I don’t see anything listening on port 53. Should there be something?

```
admin@storage[~]$ netstat -an | grep "LISTEN "
tcp        0      0 127.0.0.1:6999          0.0.0.0:*               LISTEN
tcp        0      0 127.0.0.1:6444          0.0.0.0:*               LISTEN
tcp        0      0 127.0.0.1:10010         0.0.0.0:*               LISTEN
tcp        0      0 10.0.60.1:5357          0.0.0.0:*               LISTEN
tcp        0      0 10.0.30.10:5357         0.0.0.0:*               LISTEN
tcp        0      0 127.0.0.1:10248         0.0.0.0:*               LISTEN
tcp        0      0 127.0.0.1:10257         0.0.0.0:*               LISTEN
tcp        0      0 127.0.0.1:10259         0.0.0.0:*               LISTEN
tcp        0      0 10.0.50.1:5357          0.0.0.0:*               LISTEN
tcp        0      0 10.0.50.1:179           0.0.0.0:*               LISTEN
tcp        0      0 10.0.50.1:50051         0.0.0.0:*               LISTEN
tcp        0      0 10.0.100.101:5357       0.0.0.0:*               LISTEN
tcp        0      0 127.0.0.1:50051         0.0.0.0:*               LISTEN
tcp        0      0 0.0.0.0:6000            0.0.0.0:*               LISTEN
tcp        0      0 0.0.0.0:443             0.0.0.0:*               LISTEN
tcp        0      0 0.0.0.0:445             0.0.0.0:*               LISTEN
tcp        0      0 0.0.0.0:139             0.0.0.0:*               LISTEN
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN
tcp        0      0 0.0.0.0:111             0.0.0.0:*               LISTEN
tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN
tcp        0      0 0.0.0.0:3260            0.0.0.0:*               LISTEN
tcp6       0      0 ::1:179                 :::*                    LISTEN
tcp6       0      0 :::29652                :::*                    LISTEN
tcp6       0      0 :::29653                :::*                    LISTEN
tcp6       0      0 :::29642                :::*                    LISTEN
tcp6       0      0 :::29643                :::*                    LISTEN
tcp6       0      0 :::29644                :::*                    LISTEN
tcp6       0      0 :::20244                :::*                    LISTEN
tcp6       0      0 :::10250                :::*                    LISTEN
tcp6       0      0 :::6443                 :::*                    LISTEN
tcp6       0      0 :::443                  :::*                    LISTEN
tcp6       0      0 :::445                  :::*                    LISTEN
tcp6       0      0 :::139                  :::*                    LISTEN
tcp6       0      0 :::22                   :::*                    LISTEN
tcp6       0      0 :::111                  :::*                    LISTEN
tcp6       0      0 :::80                   :::*                    LISTEN
tcp6       0      0 :::3260                 :::*                    LISTEN
```

My latest thinking is to try opening port 53 manually via iptables, but I really don’t want to do that as it feels very wrong.

Am I missing something really basic here? What else could I try? Any thoughts are appreciated.

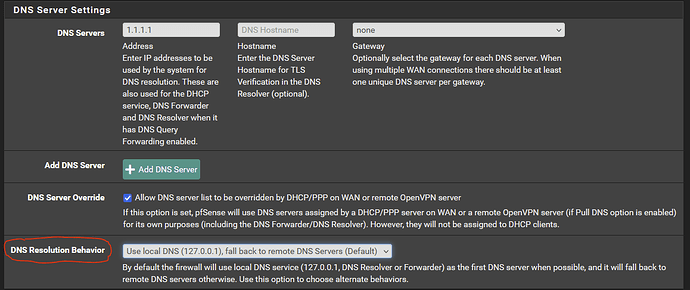

EDIT: Internet connectivity is restored if I add 8.8.8.8 as the secondary nameserver, but I don’t want to do that because the DNS Resolver on the firewall is capable of doing the extra lookup to public DNS itself.

I’ve also triple-checked to see if there are any remnant firewall rules - there are none. I’ve also made sure that DNS lookups from the IP range of PVI (10.0.50.x) are allowed.

This firewall config works under CORE but doesn’t under SCALE.

EDIT 2: Adding the kernel IP routing table. This also looks OK to me.

```
admin@storage[~]$ netstat -r
Kernel IP routing table
Destination     Gateway         Genmask         Flags   MSS Window  irtt Iface
default         10.0.50.2       0.0.0.0         UG        0 0          0 enp134s0f0np0
10.0.30.0       0.0.0.0         255.255.255.240 U         0 0          0 enp7s0f1
10.0.50.0       0.0.0.0         255.255.255.248 U         0 0          0 enp134s0f0np0
10.0.60.0       0.0.0.0         255.255.255.248 U         0 0          0 enp134s0f1np1
10.0.100.0      0.0.0.0         255.255.255.0   U         0 0          0 eno1
172.16.0.0      0.0.0.0         255.255.0.0     U         0 0          0 kube-bridge
```
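
One way to double-check a routing table like this is to work out which entry the kernel would select for a given destination, since the most specific matching prefix wins. The following is a minimal sketch in Python, not a diagnosis. The `ROUTES` list is transcribed by hand from the `netstat -r` output above (genmasks converted to prefix lengths), and `select_route` is a hypothetical helper name, not part of any TrueNAS tooling.

```python
import ipaddress

# Routes transcribed from the `netstat -r` output in this post.
# Genmasks are written as prefix lengths (255.255.255.248 -> /29, etc.).
ROUTES = [
    ("0.0.0.0/0", "enp134s0f0np0"),      # default via 10.0.50.2
    ("10.0.30.0/28", "enp7s0f1"),
    ("10.0.50.0/29", "enp134s0f0np0"),
    ("10.0.60.0/29", "enp134s0f1np1"),
    ("10.0.100.0/24", "eno1"),
    ("172.16.0.0/16", "kube-bridge"),
]


def select_route(address):
    """Return (network, interface) for the longest-prefix route containing address."""
    ip = ipaddress.ip_address(address)
    matches = []
    for cidr, iface in ROUTES:
        net = ipaddress.ip_network(cidr)
        if ip in net:
            matches.append((net, iface))
    # The default route (/0) always matches, so `matches` is never empty here.
    return max(matches, key=lambda m: m[0].prefixlen)


for addr in ("172.16.0.1", "10.0.50.2", "8.8.8.8"):
    net, iface = select_route(addr)
    print(f"{addr} -> {net} via {iface}")
# 172.16.0.1 -> 172.16.0.0/16 via kube-bridge
# 10.0.50.2 -> 10.0.50.0/29 via enp134s0f0np0
# 8.8.8.8 -> 0.0.0.0/0 via enp134s0f0np0
```

On the box itself, `ip route get 172.16.0.1` should report the same decision directly. If the nameserver address matches a directly attached interface other than the one facing the firewall, queries to it may never leave the host, which would be worth ruling out. The 0.041 ms round trip in the ping to 172.16.0.1 above would be consistent with a locally generated reply, though that is only an inference.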