Hi all. I wanted to share my experience with this issue. I am getting help on Discord for it right now and we have made progress. I was asked to share everything we have done so far, in the hope that it helps other people. For context, I am running Ollama in Docker.

I upgraded from Dragonfish to ElectricEel-24.10.2.

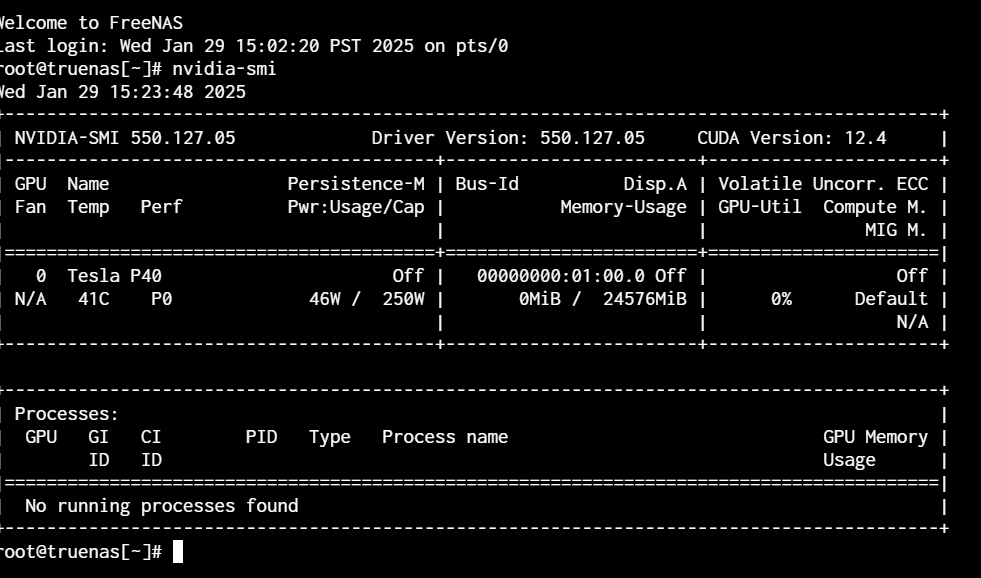

This did not go well, to say the least. Like all of you, I found the Nvidia drivers were missing. I could not install them because apt was disabled. nvidia-smi was not installed, but I could see my GPU in the settings under Isolate GPU. So I reached out on Discord, GitHub, and the forums.

I gotta say, everyone on Discord (Kryssa and HoneyBadger) has been a gem. Thank you all so much for all your help!

So this is what we did.

Kryssa asked me to run this from the Host OS Shell:

midclt call -job docker.update '{"nvidia": true}'
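One thing to watch if you copy that command from a forum post: the forum software can turn the quotes into curly "smart" quotes, and the JSON argument will not parse with those. Here is a minimal sketch of the same call with plain ASCII quotes. The `payload` variable and the `command -v` guard are my own additions for illustration, not part of what Kryssa gave me.

```shell
#!/bin/sh
# JSON argument for docker.update. Use straight quotes only:
# single quotes for the shell, double quotes inside the JSON.
payload='{"nvidia": true}'

if command -v midclt >/dev/null 2>&1; then
    # Same call as in the post, run from the TrueNAS host shell.
    midclt call -job docker.update "$payload"
else
    # Guard (my addition): midclt only exists on the TrueNAS host.
    echo "midclt not found: run this from the TrueNAS host shell" >&2
fi
```

In my case the apps disappeared from the UI right after this ran and only came back after a restart, so do not panic if you see the same.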

Then all my apps disappeared. I was worried…

I need not have worried: I restarted and everything came back!

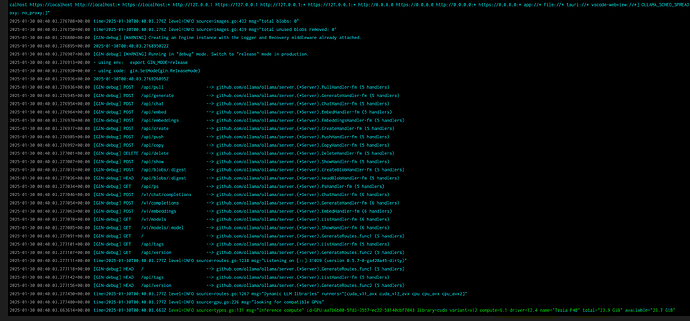

nvidia-smi was now working and listing my Tesla P40.

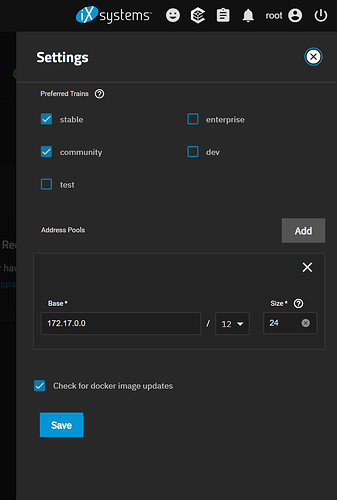
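If you want a quick yes/no check instead of reading the full nvidia-smi table, something like this should do it. It is only a sketch: the `check_nvidia` function name and the three status words are made up for this example. It relies on `nvidia-smi -L`, which prints one line per detected GPU starting with "GPU".

```shell
#!/bin/sh
# Report whether the Nvidia driver tools are installed and can see a GPU.
# check_nvidia and its status strings are hypothetical names for this sketch.
check_nvidia() {
    if ! command -v nvidia-smi >/dev/null 2>&1; then
        echo "missing"   # nvidia-smi is not installed (my state right after the upgrade)
        return 0
    fi
    # nvidia-smi -L lists detected GPUs, one per line, e.g. "GPU 0: <name> (UUID: ...)"
    if nvidia-smi -L 2>/dev/null | grep -q '^GPU '; then
        echo "ok"
    else
        echo "no-gpu"    # tools are installed but no GPU was reported
    fi
}

status=$(check_nvidia)
echo "nvidia-smi status: $status"
```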

However, apps → config → settings still did not show a checkbox for the Nvidia drivers. Weird!

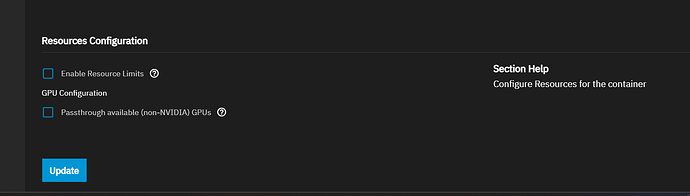

I also cannot assign the GPU to any of my Docker apps, because the GPU is not listed.

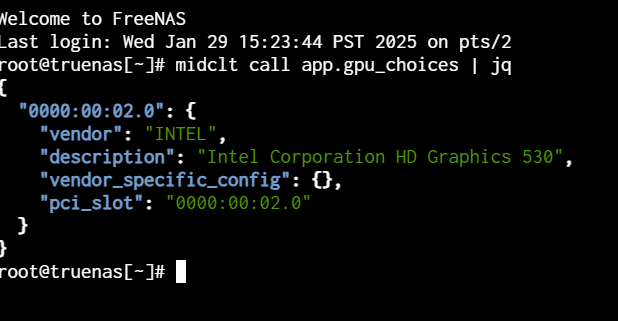

Based on something recommended here I ran this on the Host OS:

Only my iGPU was showing up. Eek.

HoneyBadger said they were going to look into this further. I have offered to send my card to them for testing and offered access to the system as well.

I will keep everyone up to date on the progress we make.

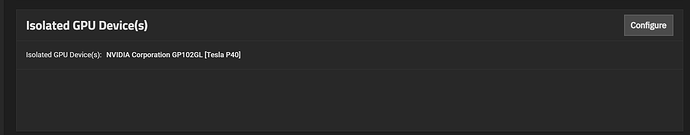

Update: I noticed my GPU was listed as isolated for some reason! This was new, as I had never set it to be isolated! To remove it I had to make sure NOTHING was selected in the drop-down in the config menu and then save.

The nvidia drivers checkbox now shows up!

Update 2: The saga continues…

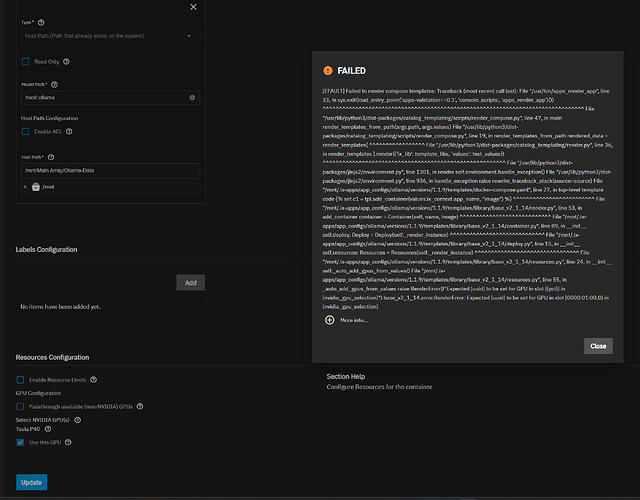

I did another restart, then stopped and started the container, and the GPU started to show under the Docker config!!! I was so excited, but alas my hopes were dashed again by this error.

Update 3: Success? Kinda?

So I tried unchecking and rechecking the Nvidia drivers checkbox a few times. I tried restarting a few times. No success. Then I tried a new instance of Ollama and the GPU worked!!! What!!!

So this part of it seems to be some kind of bug with Docker apps migrated from the old train, maybe? I dunno. It just means I need to remake my Docker apps now.
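For anyone comparing an old migrated app against a fresh one, here is a rough way to check from the host shell whether a running container can see the GPU. Treat it as a sketch under assumptions: the default container name `ollama` is a placeholder (look up the real one with `docker ps`), the `gpu_in_container` helper is made up for this example, and it assumes nvidia-smi is available inside the container, which is normally the case when the Nvidia runtime passes the GPU through.

```shell
#!/bin/sh
# Container to test. "ollama" is a placeholder; use the name shown by `docker ps`.
container="${1:-ollama}"

# Hypothetical helper: succeeds if nvidia-smi inside the container lists a GPU.
gpu_in_container() {
    docker exec "$1" nvidia-smi -L 2>/dev/null | grep -q '^GPU '
}

if ! command -v docker >/dev/null 2>&1; then
    result="docker-missing"
elif gpu_in_container "$container"; then
    result="gpu-visible"
else
    # Also reached when the container does not exist or is stopped.
    result="gpu-not-visible"
fi

echo "$container: $result"
```

Running it once against the migrated container and once against the new instance should show the same difference I saw in the UI.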