Stux

June 4, 2024, 2:41am

21

So, I have made one piece of progress.

I was unable to get my pool to mount. /dev/vdb was… well… not formatted.

Rolling back did not help.

It appears somewhere along the line with all my trials, the sector size on the disk device got set to 512B instead of 4096.
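For anyone wanting to check the same thing from inside the guest, here is a minimal sketch that reads the logical block size Linux reports for a disk. It assumes the usual sysfs layout (`/sys/block/<dev>/queue/logical_block_size`). The function name, the `vdb` default and the `sysfs_root` parameter are illustrative only, not anything TrueNAS ships.

```python
from pathlib import Path


def logical_block_size(device: str = "vdb", sysfs_root: str = "/sys/block") -> int:
    """Return the logical block size (bytes) the kernel reports for a disk.

    `device` is the bare kernel name (e.g. "vdb"), not the /dev path.
    `sysfs_root` exists only so the function can be pointed at a fake tree.
    """
    path = Path(sysfs_root) / device / "queue" / "logical_block_size"
    return int(path.read_text().strip())


if __name__ == "__main__":
    try:
        size = logical_block_size("vdb")
    except (OSError, ValueError) as exc:
        print(f"could not read block size: {exc}")
    else:
        # Compare against the size the disk had when it was partitioned
        # (4096 in the post above).
        print(f"vdb logical block size: {size} bytes")
```

If this prints 512 for a disk that was originally presented with 4096-byte sectors, that would match what I saw.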

That’s one thing.

Stux

June 4, 2024, 3:02am

22

Sigh.

Just cloned the boot volume zvol, then did a clean install from iso of 24.04.1.1

(was never asked to re-use pre-existing config on disk)

Same hang.

Stux

June 4, 2024, 3:16am

23

So, I clean installed 24.04.0 and it booted right up. I then uploaded a config… and it restarted without timing out…

…

but once I upload my config… then it times out at shutdown.

must be something that’s configured.

that’s interesting.

The config is fairly minimal.

Stux

June 4, 2024, 4:04am

24

I had a disabled ubuntu VM in the TrueNAS VM.

deleting the VM means TrueNAS doesn’t block at restart time.

I suspect there is a bug in the libvirt stuff…

…

after deleting the VM, I am able to do an online update without the update hanging on restart… and then timing out as above.

@John

Stux

June 4, 2024, 4:14am

25

Update still hangs on boot

…

when it hangs, 3 cores (of 4) are spinning at 100%

658604 libvirt+ 20 0 9900.8m 8.2g 30700 S 301.7 6.5 19:54.27 qemu-system-x86
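As a quick sanity check on the "3 cores" reading, here is a small sketch that turns the %CPU column of that `top` line into a core count. It assumes top's default column order and its default Irix mode, where 100% means one fully busy core; `busy_cores` is just an illustrative helper name.

```python
def busy_cores(top_line: str) -> float:
    """Convert the %CPU column of one `top` process line into a core count.

    Assumes the default column order
    (PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND)
    and Irix mode, where 100% equals one fully busy core.
    """
    fields = top_line.split()
    cpu_percent = float(fields[8])  # ninth column is %CPU
    return cpu_percent / 100.0


line = "658604 libvirt+ 20 0 9900.8m 8.2g 30700 S 301.7 6.5 19:54.27 qemu-system-x86"
print(f"{busy_cores(line):.1f} cores busy")  # prints "3.0 cores busy"
```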

Stux

June 4, 2024, 6:32am

26

It’s the config, I think. Something is tripping up the startup when the config is applied.

And it happens on two different VMs

And if I reset to defaults… then I can upgrade… and as soon as I apply the config it will hang

Stux

June 4, 2024, 8:30am

27

So, that’s interesting.

reset to defaults, create root account, create a bridge, upgrade… hang.

reset to defaults, upgrade… no hang.

Stux

June 4, 2024, 9:05am

28

setting root only… no hang.

set root and static ip… hang.

guess it’s time to try rebooting the hypervisor.

Stux

June 4, 2024, 9:13am

29

That worked btw.

no it didn’t.

back to the random freezes

That is interesting and it seems reproducible. Hopefully you find “The” solution. You really do this forum a huge service, thank you.

Stux

June 4, 2024, 11:10am

31

Wonder why it works for you, but definitely not for me. Even a clean install.

What is your NIC device?

John

June 4, 2024, 1:20pm

32

Dumb question, and I’m not sure if I have an idea, but what does “setting root” mean? Set password, set shell? …I really don’t have much of an idea, but I’ll look something up and see if I can pull on that thread a little bit to see what happens and get back to you.

You think your config has something to do with it. Have you tried viewing with the DB viewer?

Stux

June 4, 2024, 1:30pm

33

I mean setting a “root user” account password in the installer.

I had been testing by minimizing the changes from default.

The problem is it doesn’t fail 100% of the time, leading to false positives, so I had begun to think that using a root account vs an admin account makes a difference.

The latest results are I can do a clean install, with a boot device and no pool. Set either an admin password or a root password and it will hang. Typically while generating some keys.

When set to 8GiB of ram

But not 100% of the time.

It works 100% of the time with 24.04.0.

John

June 4, 2024, 2:10pm

34

Okay, my possible dumb idea was ‘run0’ and Systemd 256. I’m going on the concept of my previous thought about “read only”.

This could be a time suck so I’d verify the versions first before going down this path.
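A rough sketch of that version check, assuming `systemctl --version` prints a first line of the form `systemd <major> (<package version>)`. The helper names are made up for illustration, and the sample strings in the comments are only examples of the format.

```python
import re
import subprocess


def parse_systemd_version(first_line: str) -> int:
    """Extract the major version from the first line of `systemctl --version`,
    which looks like 'systemd 255 (255.5-1.fc41)'."""
    match = re.match(r"systemd (\d+)", first_line)
    if match is None:
        raise ValueError(f"unrecognised version line: {first_line!r}")
    return int(match.group(1))


def installed_systemd_version() -> int:
    """Run `systemctl --version` and return the major version number."""
    out = subprocess.run(
        ["systemctl", "--version"], capture_output=True, text=True, check=True
    ).stdout
    return parse_systemd_version(out.splitlines()[0])


if __name__ == "__main__":
    try:
        version = installed_systemd_version()
    except (OSError, subprocess.CalledProcessError, ValueError, IndexError) as exc:
        print(f"could not determine systemd version: {exc}")
    else:
        # run0 and the linked issue only matter from systemd 256 onwards.
        print(f"systemd {version}; 256 or newer: {version >= 256}")
```

If the reported version is below 256, this path is probably not worth the time.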

opened 05:53PM - 26 Apr 24 UTC

closed 07:03PM - 27 Apr 24 UTC

pid1

not-our-bug

downstream/fedora

I'm trying to figure out the intermittent Testing Farm fails, and there's something very wrong going on with systemd when upgrading it from v255 to v256-rc. It can be easily reproduced on latest Rawhide:

```

# rpm -q systemd

systemd-255.5-1.fc41.x86_64

# koji download-build --arch x86_64 --arch noarch systemd-256~rc1-1.fc41

...

# dnf upgrade ./*.rpm

...

Dependencies resolved.

===============================================================================================================================================================================================================================================

Package Architecture Version Repository Size

===============================================================================================================================================================================================================================================

Upgrading:

systemd x86_64 256~rc1-1.fc41 @commandline 5.2 M

systemd-libs x86_64 256~rc1-1.fc41 @commandline 726 k

systemd-networkd x86_64 256~rc1-1.fc41 @commandline 707 k

systemd-oomd-defaults noarch 256~rc1-1.fc41 @commandline 28 k

systemd-pam x86_64 256~rc1-1.fc41 @commandline 394 k

systemd-resolved x86_64 256~rc1-1.fc41 @commandline 309 k

systemd-udev x86_64 256~rc1-1.fc41 @commandline 2.3 M

Transaction Summary

===============================================================================================================================================================================================================================================

Upgrade 7 Packages

Total size: 9.5 M

Is this ok [y/N]: y

Downloading Packages:

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Upgrading : systemd-libs-256~rc1-1.fc41.x86_64 1/14

Upgrading : systemd-pam-256~rc1-1.fc41.x86_64 2/14

Upgrading : systemd-resolved-256~rc1-1.fc41.x86_64 3/14

Running scriptlet: systemd-resolved-256~rc1-1.fc41.x86_64 3/14

Upgrading : systemd-networkd-256~rc1-1.fc41.x86_64 4/14

Running scriptlet: systemd-networkd-256~rc1-1.fc41.x86_64 4/14

Upgrading : systemd-256~rc1-1.fc41.x86_64 5/14

Running scriptlet: systemd-256~rc1-1.fc41.x86_64 5/14

Upgrading : systemd-udev-256~rc1-1.fc41.x86_64 6/14

Running scriptlet: systemd-udev-256~rc1-1.fc41.x86_64 6/14

Upgrading : systemd-oomd-defaults-256~rc1-1.fc41.noarch 7/14

Cleanup : systemd-oomd-defaults-255.5-1.fc41.noarch 8/14

Running scriptlet: systemd-networkd-255.5-1.fc41.x86_64 9/14

Cleanup : systemd-networkd-255.5-1.fc41.x86_64 9/14

Running scriptlet: systemd-networkd-255.5-1.fc41.x86_64 9/14

Running scriptlet: systemd-udev-255.5-1.fc41.x86_64 10/14

Cleanup : systemd-udev-255.5-1.fc41.x86_64 10/14

Running scriptlet: systemd-udev-255.5-1.fc41.x86_64 10/14

Cleanup : systemd-255.5-1.fc41.x86_64 11/14

Running scriptlet: systemd-255.5-1.fc41.x86_64 11/14

Reexecute daemon failed: Transport endpoint is not connected

Cleanup : systemd-libs-255.5-1.fc41.x86_64 12/14

Cleanup : systemd-pam-255.5-1.fc41.x86_64 13/14

Running scriptlet: systemd-resolved-255.5-1.fc41.x86_64 14/14

Cleanup : systemd-resolved-255.5-1.fc41.x86_64 14/14

Running scriptlet: systemd-resolved-255.5-1.fc41.x86_64 14/14

Running scriptlet: systemd-resolved-256~rc1-1.fc41.x86_64 14/14

Running scriptlet: systemd-resolved-255.5-1.fc41.x86_64 14/14

Reload daemon failed: Transport endpoint is not connected

Job for systemd-resolved.service failed because the control process exited with error code.

See "systemctl status systemd-resolved.service" and "journalctl -xeu systemd-resolved.service" for details.

Job for systemd-userdbd.service failed because the control process exited with error code.

See "systemctl status systemd-userdbd.service" and "journalctl -xeu systemd-userdbd.service" for details.

Job for systemd-journald.service failed because the control process exited with error code.

See "systemctl status systemd-journald.service" and "journalctl -xeu systemd-journald.service" for details.

Job for systemd-udevd.service failed because the control process exited with error code.

See "systemctl status systemd-udevd.service" and "journalctl -xeu systemd-udevd.service" for details.

Failed to start jobs: Transport endpoint is not connected

Reload daemon failed: Transport endpoint is not connected

Failed to start jobs: Transport endpoint is not connected

```

systemd is at this point completely unusable:

```

# systemctl log-target kmsg

# systemctl status systemd-journald

× systemd-journald.service - Journal Service

Loaded: loaded (/usr/lib/systemd/system/systemd-journald.service; static)

Drop-In: /usr/lib/systemd/system/service.d

└─10-timeout-abort.conf

Active: failed (Result: exit-code) since Fri 2024-04-26 17:49:22 UTC; 1min 41s ago

Duration: 7min 31.408s

Invocation: aeb8d9430f254c02bb7eb285c6d2d500

TriggeredBy: × systemd-journald.socket

× systemd-journald-audit.socket

× systemd-journald-dev-log.socket

Docs: man:systemd-journald.service(8)

man:journald.conf(5)

Main PID: 2796 (code=exited, status=127)

FD Store: 0 (limit: 4224)

CPU: 540us

# systemctl restart systemd-journald

Job for systemd-journald.service failed because the control process exited with error code.

See "systemctl status systemd-journald.service" and "journalctl -xeu systemd-journald.service" for details.

[ +0.000013] systemd[1]: Starting systemd-journald.service - Journal Service...

...

[ +0.000068] systemd[1]: Received SIGCHLD from PID 2890 (16).

[ +0.000036] systemd[1]: Child 2890 (16) died (code=exited, status=127/n/a)

[ +0.000076] systemd[1]: init.scope: Child 2890 belongs to init.scope.

[ +0.000006] systemd[1]: systemd-journald.service: Child 2890 belongs to systemd-journald.service.

[ +0.000016] systemd[1]: systemd-journald.service: Main process exited, code=exited, status=127/n/a

[ +0.002222] systemd[1]: systemd-journald.service: Failed with result 'exit-code'.

[ +0.001730] systemd[1]: systemd-journald.service: Service will restart (restart setting)

[ +0.000009] systemd[1]: systemd-journald.service: Changed start -> failed-before-auto-restart

[ +0.000054] systemd[1]: varlink-74: Sending message: {"parameters":{"cgroups":[{"mode":"auto","path":"/system.slice/systemd-

[ +0.000303] systemd[1]: systemd-journald.service: Job 2279 systemd-journald.service/start finished, result=failed

[ +0.000008] systemd[1]: Failed to start systemd-journald.service - Journal Service.

```

I'll need to dig a bit deeper to see what's going on, but I'm opening this in the meantime, because I have a feeling @AdamWill is hitting something very similar in Fedora's OpenQA.

Also, typing on my phone so not at a laptop.

Stux

June 4, 2024, 11:49pm

35

Well, you don’t really need dracut in the initrd at all anymore to have a working initrd. Except for certain kinds of exotic storage

Quoting Poettering…

Wonder if ZFS counts as exotic

I shall be ordering more memory so I can embark on this journey also! See if I have the same issue if not resolved by the time I get it and install it, probably a couple weeks. I’ll probably use 16GB ram though for the VM.

I won’t be updating like you though, so it will be different than yours. I guess I could briefly try the same version as you before continuing on to Electric Eel once the docker stuff makes it to the Beta.

Stux

June 5, 2024, 4:27am

37

Here’s another example of something that sounds very similar, but with Proxmox as the Hypervisor.

I have a Proxmox hypervisor (8.2.2) running a single VM for TrueNAS. Disks are on a SATA controller that is passed through in hardware. It was on Cobia until a month or so ago, and then upgraded to Dragonfish 24.04.0. All running fine.

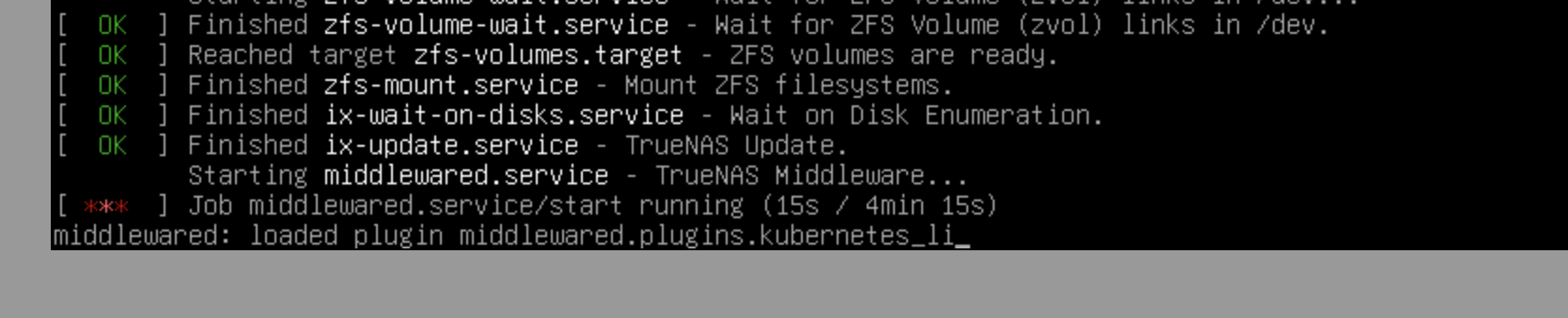

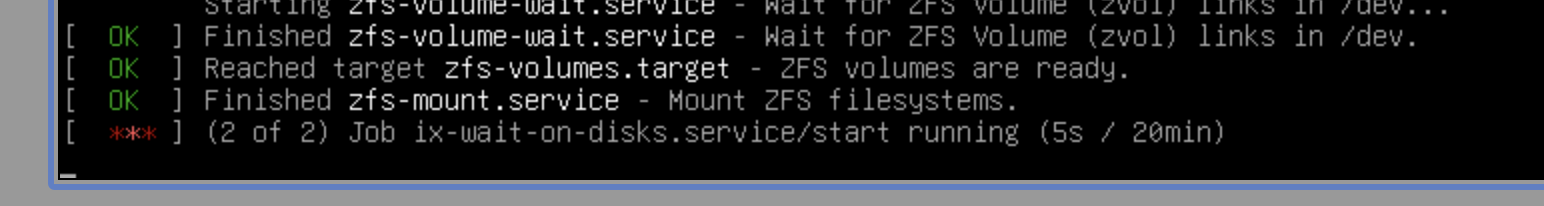

This afternoon, I tried the upgrade to 24.04.1.1, and it hung after rebooting, just after the Finished zfs-mount.service line. CPU usage on the VM was pegged at 67%; the vm has 3 CPU cores allocated, so this is 2 cores at 100%. It stayed this way for 30 mins, unr…

Stux

June 5, 2024, 4:28am

38

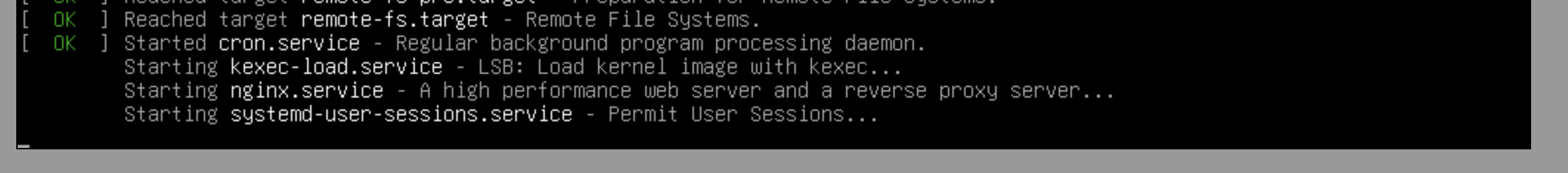

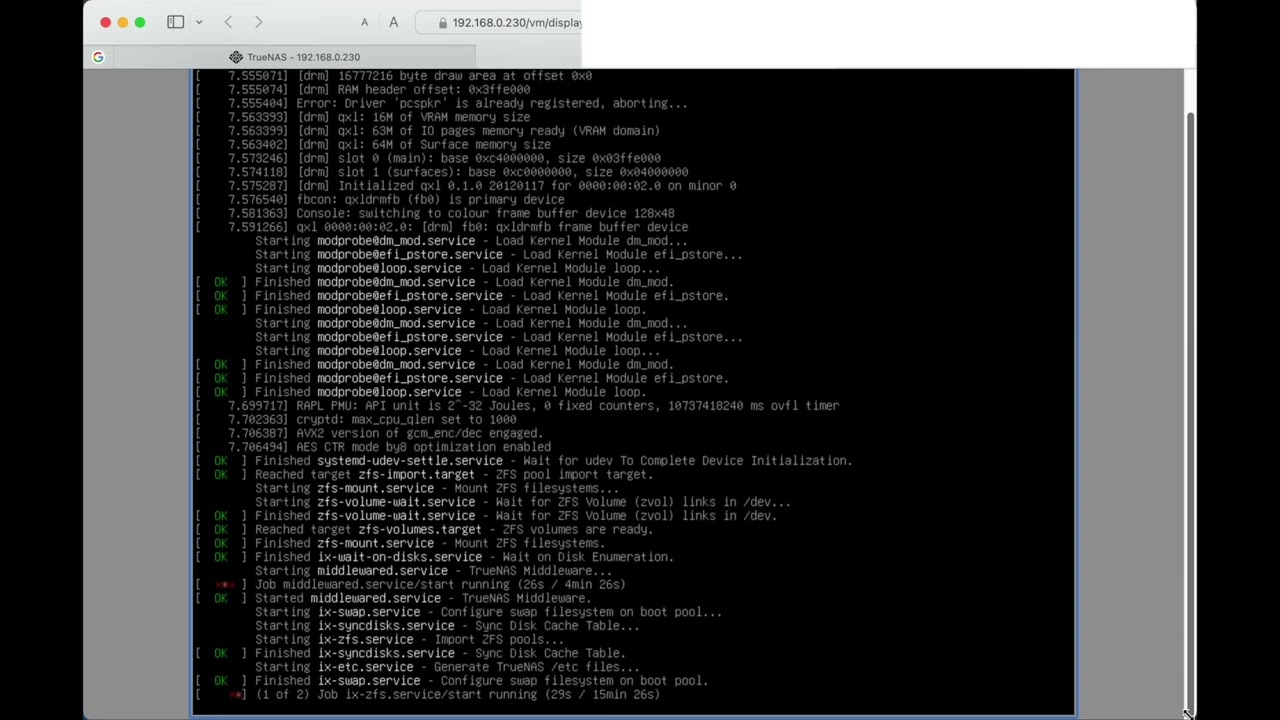

Here is a reproduction video I made.

It would be good if anyone else could reproduce…

All you need to do is create an 8GB VM with a 32GB boot zvol…

A clean install of TrueNAS 24.04.1.1 will hang when running in a VM with 8GB of RAM. It works fine on all previous versions of TrueNAS (Scale, Core and FreeNAS), including 24.04.0.

In the video, on a TrueNAS 23.10.2 system with 128GB of RAM and a Xeon E5-2699A v4 CPU, I set up a VM with 8GB of RAM, 4 cores, a VirtIO NIC on a bridged interface, and a VirtIO 32GB boot zvol.

I then clean install 24.04.1.1 from verified ISO, and reboot it 6 times. It hangs on startup 3 out of 6 times.

I then clean install 24.04.0, and reboot it 5 times, it starts correctly 5x in a row.
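Because the hang is intermittent, it is worth asking how likely 5 clean boots in a row would be by pure luck. A back-of-the-envelope sketch, assuming each boot hangs independently and that 24.04.0 would hang at the same 3-in-6 rate if it had the same bug (a small sample, so treat the numbers as rough). Both function names are illustrative.

```python
def chance_of_clean_streak(hang_rate: float, boots: int) -> float:
    """Probability of `boots` consecutive clean boots if each boot hangs
    independently with probability `hang_rate`."""
    return (1.0 - hang_rate) ** boots


def boots_needed(hang_rate: float, max_miss_chance: float = 0.05) -> int:
    """Smallest run of clean boots that makes a missed bug less likely
    than `max_miss_chance`."""
    if not 0.0 < hang_rate <= 1.0 or not 0.0 < max_miss_chance < 1.0:
        raise ValueError("hang_rate must be in (0, 1], max_miss_chance in (0, 1)")
    boots = 1
    while chance_of_clean_streak(hang_rate, boots) >= max_miss_chance:
        boots += 1
    return boots


observed_hang_rate = 3 / 6  # 24.04.1.1: 3 hangs in 6 reboots
print(chance_of_clean_streak(observed_hang_rate, 5))  # 0.03125
print(boots_needed(observed_hang_rate))  # 5
```

So under those assumptions there is only about a 3% chance that 24.04.0 has the same problem and simply got lucky 5 times.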

The ISOs are shasum OK. I have also confirmed this behaviour on a Dragonfish 24.04.1.1 system running on a Xeon D-1541 with 32GB of RAM.

Stux

June 5, 2024, 5:02am

39

I may have missed the real important details, among everything else, but need to ask:

Which specific vendor/version Hypervisor and any other details, not to be assumed?