DELL PowerEdge T440

2 x Intel(R) Xeon(R) Silver 4108 CPU @ 1.80GHz

192 GB DDR4 2133 MT/s

Dell HBA330 Adp Storage Controller in Slot 4 FW: 16.17.01.00 (CPU1)

4 x 1.8TB 7.2k SAS 12Gbps

3 x 500GB CT500MX SSDs

3 x 500GB CT500P5PSSD8 NVMe on a PCIe Gen3 x16 card (Slot 3 - CPU2)

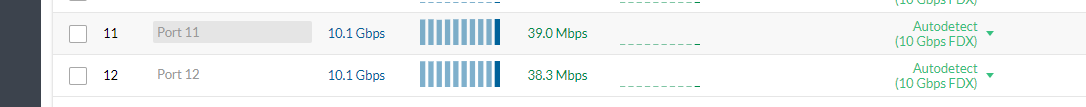

NC552SFP 2-port 10Gb Server Adapter (CPU1)

Test Pool

3 x NVMe stripe

Simple local dd test writing zeros to the pool: 3.3GB/s - 3.7GB/s

sync; dd if=/dev/zero of=testfile bs=1M count=8000; sync

8000+0 records in

8000+0 records out

8388608000 bytes transferred in 2.265634 secs (3702544059 bytes/sec)
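As a quick sanity check on the figure dd reports, the rate can be recomputed from the byte count and elapsed time printed above. This is only a sketch: the variable names are my own, and it assumes "GB/s" in this post means decimal gigabytes (10^9 bytes).

```python
# Values copied from the dd output above.
block_size = 1024 * 1024        # bs=1M
block_count = 8000              # count=8000
bytes_written = 8_388_608_000   # as reported by dd
elapsed_s = 2.265634            # as reported by dd

# dd's byte count should equal bs * count.
assert block_size * block_count == bytes_written

rate_bytes_per_s = bytes_written / elapsed_s
rate_gb_per_s = rate_bytes_per_s / 1e9       # decimal GB/s (assumed unit)
rate_gib_per_s = rate_bytes_per_s / 2**30    # binary GiB/s, for comparison

print(f"{rate_gb_per_s:.2f} GB/s ({rate_gib_per_s:.2f} GiB/s)")
```

The decimal result lines up with the upper end of the 3.3GB/s - 3.7GB/s range quoted for the local test.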

iperf3 test from a remote host

[root@ol1 ~]# iperf3 -c 10.10.10.4

Connecting to host 10.10.10.4, port 5201

[ 5] local 10.10.10.2 port 36652 connected to 10.10.10.4 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 1.15 GBytes 9.90 Gbits/sec 0 1.54 MBytes

[ 5] 1.00-2.00 sec 1.15 GBytes 9.90 Gbits/sec 0 1.54 MBytes

[ 5] 2.00-3.00 sec 1.15 GBytes 9.89 Gbits/sec 0 1.54 MBytes

[ 5] 3.00-4.00 sec 1.15 GBytes 9.90 Gbits/sec 0 1.54 MBytes

[ 5] 4.00-5.00 sec 1.15 GBytes 9.89 Gbits/sec 0 1.54 MBytes

iperf3 -c 10.10.20.4

Connecting to host 10.10.20.4, port 5201

[ 5] local 10.10.20.2 port 59606 connected to 10.10.20.4 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 1.16 GBytes 9.92 Gbits/sec 0 1.54 MBytes

[ 5] 1.00-2.00 sec 1.15 GBytes 9.90 Gbits/sec 0 1.54 MBytes

[ 5] 2.00-3.00 sec 1.15 GBytes 9.90 Gbits/sec 0 1.54 MBytes

[ 5] 3.00-4.00 sec 1.15 GBytes 9.90 Gbits/sec 0 1.54 MBytes

Given all of the above, my KVM hosts' iSCSI datastores, connected via the 2-port 10Gb adapter with multipath (both paths active with I/O), cap at 1.6GB/s no matter what.

That figure comes from the same dd command, run from a KVM host.

I also tested with 2 x KVM hosts (same config, same dd test, run concurrently), and again the total aggregate throughput from both hosts caps at 1.6GB/s.

With two hosts, I was able to saturate the TNAS network interfaces, but only for short spikes. The average was around 770MB/s.

Not bad, but I still cannot break the 2GB/s psychological barrier.
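For context, here is a rough back-of-the-envelope comparison of the 1.6GB/s cap against what the two 10Gb links could carry in the best case. It is a sketch under assumptions: it takes the roughly 9.90 Gbits/sec per link from the iperf3 runs above as the usable rate, assumes multipath spreads I/O evenly over both paths, treats GB as 10^9 bytes, and ignores iSCSI protocol overhead. The function and variable names are hypothetical.

```python
def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert gigabits per second to (decimal) gigabytes per second."""
    return gbit_per_s / 8


per_link_gbit = 9.90     # measured by iperf3 on each subnet
links = 2                # 2-port 10Gb adapter, both paths active
observed_gbyte = 1.6     # cap seen from the KVM hosts

per_link_gbyte = gbit_to_gbyte(per_link_gbit)
ceiling_gbyte = per_link_gbyte * links       # best case over both paths
utilisation = observed_gbyte / ceiling_gbyte

print(f"per link : {per_link_gbyte:.3f} GB/s")
print(f"ceiling  : {ceiling_gbyte:.3f} GB/s")
print(f"observed : {observed_gbyte:.1f} GB/s ({utilisation:.0%} of ceiling)")
```

Under those assumptions the two links top out a little under 2.5GB/s, so the 1.6GB/s cap is about two thirds of what the network alone should allow.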

Any ideas would be much appreciated.

Thank you