Hi again,

Thank you all for your input; it is much appreciated.

It helped me confirm that the problem lies with iSCSI MPIO.

As many of you suggested, I used fio, which gives much more information.

Tested locally:

WRITE: bw=3617MiB/s (3793MB/s), 1822MiB/s-1830MiB/s (1910MB/s-1919MB/s), io=21.9GiB (23.5GB), run=6069-6187msec

From a single KVM host, the best I can do:

Run status group 0 (all jobs):

WRITE: bw=998MiB/s (1047MB/s), 499MiB/s-499MiB/s (523MB/s-524MB/s), io=36.2GiB (38.9GB), run=37103-37124msec

I also tried a fio sequential read:

Run status group 0 (all jobs):

READ: bw=1155MiB/s (1211MB/s), 1155MiB/s-1155MiB/s (1211MB/s-1211MB/s), io=136GiB (145GB), run=120128-120128msec

It is clear that the total bandwidth is capped at that of a single 10 Gb/s NIC, even though I set MPIO on Linux to load balance: the 1211 MB/s read result works out to about 9.7 Gb/s. I see a 50/50 traffic split across both NICs, but the combined throughput never exceeds 10 Gb/s.

I then tested with both KVM hosts concurrently and finally got full speed (about 2.3 GiB/s combined):

KVM1:

Run status group 0 (all jobs):

READ: bw=1155MiB/s (1211MB/s), 1155MiB/s-1155MiB/s (1211MB/s-1211MB/s), io=136GiB (145GB), run=120128-120128msec

KVM2:

Run status group 0 (all jobs):

READ: bw=1170MiB/s (1227MB/s), 1170MiB/s-1170MiB/s (1227MB/s-1227MB/s), io=138GiB (148GB), run=120568-120568msec

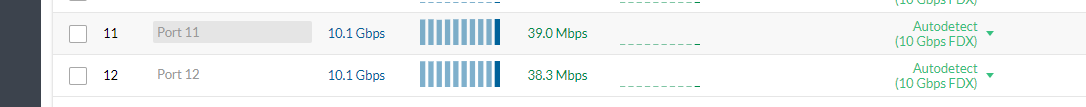

My TrueNAS nic ports:

So @Davvo, even though the post is from 2016, I have not yet found a solid document or forum thread on how to set up MPIO (multipath.conf) with TrueNAS to get full active/active iSCSI performance.

Having said that, if anyone has successfully managed to set up Linux MPIO with TrueNAS (not active/standby), please share your multipath.conf file.

I am currently testing with path_grouping_policy set to multibus.
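
For reference, a minimal sketch of what that setting looks like in /etc/multipath.conf. Only path_grouping_policy comes from my current test; the other values (round-robin selector, rr_min_io_rq, user_friendly_names) are assumptions added for illustration and are not a tested TrueNAS configuration.

```
defaults {
    # From the test above: put all paths into one priority group
    # so I/O is spread across them instead of active/standby.
    path_grouping_policy multibus

    # Assumption: plain round-robin path selector.
    path_selector "round-robin 0"

    # Assumption: switch to the next path after every request.
    rr_min_io_rq 1

    # Assumption: use mpathN aliases instead of WWIDs.
    user_friendly_names yes
}
```

After editing the file, multipathd has to reload its configuration, and `multipath -ll` should then list both paths under a single path group.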

Thank you all for your input, and again, I apologize if I said anything wrong.