I feel overwhelmed with TrueNAS. I switched from a Synology NAS because I wanted more control over my physical hardware, and it's a big learning curve.

I am trying to find the best way to duplicate my data from one dataset to another dataset. I don't want a snapshot. I want a live copy whose files I can physically see, unless I've misunderstood and a snapshot is an actual copy of the files? I use Windows. I want the peace of mind of seeing my backed-up files myself, not just the system telling me that replication has completed. I want to be able to navigate to the backup location and see those files in their native format, if that makes sense.

I have searched for days and seen people mention Syncthing and rsync. But across the different versions of the operating system, things are either missing, work slightly differently, or are extremely confusing.

In the end I want two copies of my data on two separate datasets and one copy in the cloud. The cloud copy is a whole other issue. I was using Microsoft OneDrive, and from what I have read it is no longer supported unless you jump through some hoops. I would appreciate any help or good articles.

At the end of the day, there’s no point having “Two separate copies” on the same pool. If the pool breaks, the data is just as gone.

Ergo, you may as well just store the blocks once.

Maybe what you really want is a separate pool, and then replicate the dataset from pool A to pool B.

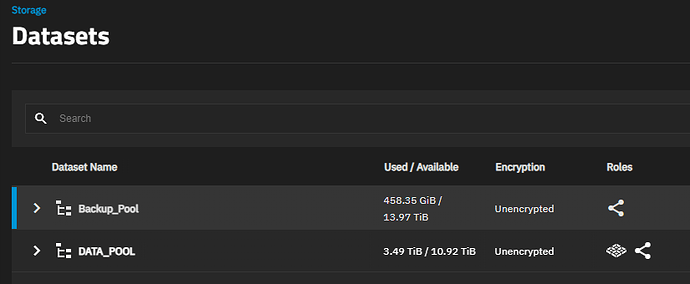

The two datasets would be on separate pools of 3 drives each. Sorry for not mentioning this. I do understand they are on the same machine.

Is it okay to run periodic replication, say, as often as every minute?

I personally take 5-minute snapshots and replicate to a backup pool every hour. (I.e., I have 5-minute, hourly, daily, weekly and monthly snapshots, each with a different retention and naming schema. But I replicate the hourly/daily/weekly/monthly snapshots to the same location with the hourly task.)
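Here is a rough illustration of how separate naming schemas and retention periods can coexist with a single replication task. It is a toy Python model and does not use any TrueNAS API. In TrueNAS itself you configure all of this in the web UI. The prefixes (`auto-5min`, `auto-hourly`, etc.), the lifetimes and the timestamp format are hypothetical. They were chosen only to mirror the setup described above.

```python
from datetime import datetime, timedelta

# Hypothetical snapshot tasks: naming-schema prefix -> how long to keep them.
# The lifetimes are made-up examples, not recommendations.
LIFETIMES = {
    "auto-5min": timedelta(hours=2),
    "auto-hourly": timedelta(days=2),
    "auto-daily": timedelta(weeks=2),
    "auto-weekly": timedelta(weeks=8),
    "auto-monthly": timedelta(days=365),
}
STAMP = "%Y-%m-%d_%H-%M"  # hypothetical timestamp part of each snapshot name


def parse(name: str) -> tuple[str, datetime]:
    """Split a snapshot name into (schema prefix, creation time)."""
    for prefix in LIFETIMES:
        if name.startswith(prefix + "-"):
            return prefix, datetime.strptime(name[len(prefix) + 1:], STAMP)
    raise ValueError(f"unknown naming schema: {name}")


def expired(names: list[str], now: datetime) -> list[str]:
    """Snapshots that are older than the lifetime of their own schema."""
    result = []
    for name in names:
        prefix, created = parse(name)
        if now - created > LIFETIMES[prefix]:
            result.append(name)
    return result


def to_replicate(names: list[str]) -> list[str]:
    """The hourly replication task sends every schema except the 5-minute one."""
    return [n for n in names if parse(n)[0] != "auto-5min"]


snaps = [
    "auto-5min-2024-05-01_11-55",
    "auto-5min-2024-05-01_09-00",
    "auto-hourly-2024-05-01_11-00",
    "auto-daily-2024-05-01_00-00",
]
now = datetime(2024, 5, 1, 12, 0)
print(expired(snaps, now))
print(to_replicate(snaps))
```

With these sample names, only the 3-hour-old 5-minute snapshot has outlived its (hypothetical) two-hour lifetime. The replication list skips both 5-minute snapshots and keeps the hourly and daily ones.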

You could mount the backup pool dataset share as “backup” with read-only permissions if you wanted to verify its contents.

But practically, you can't run the replication more often than once a minute.

I would be fine with a backup once a day. My only concern is whether a snapshot is a copy of the exact file, or some kind of special system file that only the system understands. For example, say I back up text file A to the new drive, share that drive and try to open text file A. Will it open? Or can't the file be viewed because it's in some kind of different file system? I performed a replication backup and shared that location, and nothing showed up. The drive appeared empty.

A snapshot is a complete (immutable) filesystem (at a certain point in time.)

It is not file-based.

And the reason each snapshot doesn’t consume the total “represented” space of the filesystem itself, is because the live filesystem and snapshots are pointing to the same blocks of data that already exist.
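Here is a toy model in Python that makes the block-sharing idea concrete. It is not how ZFS is implemented internally (real ZFS works with on-disk block pointers, not Python dictionaries). The class names `Pool` and `Filesystem` and the snapshot name `may01` are made up for illustration. The model only shows the principle. A snapshot records which blocks the filesystem points to, and copy-on-write sends new writes to new blocks, so the snapshot keeps seeing the old data.

```python
from dataclasses import dataclass, field
from typing import Optional


# Toy model (NOT real ZFS code): a pool is a store of numbered blocks.
@dataclass
class Pool:
    blocks: dict[int, bytes] = field(default_factory=dict)
    next_id: int = 0

    def write_block(self, data: bytes) -> int:
        # Copy-on-write: new data always goes into a fresh block;
        # existing blocks are never overwritten in place.
        block_id = self.next_id
        self.blocks[block_id] = data
        self.next_id += 1
        return block_id


# A filesystem maps file names to block IDs; a snapshot is a frozen copy
# of that map, so it points at the same blocks instead of duplicating data.
@dataclass
class Filesystem:
    pool: Pool
    files: dict[str, int] = field(default_factory=dict)
    snapshots: dict[str, dict[str, int]] = field(default_factory=dict)

    def write(self, name: str, data: bytes) -> None:
        self.files[name] = self.pool.write_block(data)

    def snapshot(self, snap_name: str) -> None:
        # Only the name -> block map is copied; no file data is copied.
        self.snapshots[snap_name] = dict(self.files)

    def read(self, name: str, snap_name: Optional[str] = None) -> bytes:
        view = self.files if snap_name is None else self.snapshots[snap_name]
        return self.pool.blocks[view[name]]

    def blocks_in_use(self) -> set[int]:
        # Unique blocks referenced by the live filesystem or any snapshot.
        used = set(self.files.values())
        for view in self.snapshots.values():
            used |= set(view.values())
        return used


fs = Filesystem(Pool())
fs.write("a.txt", b"version 1")
fs.snapshot("may01")
print(len(fs.blocks_in_use()))  # 1: live filesystem and snapshot share the block
fs.write("a.txt", b"version 2")
print(fs.read("a.txt"), fs.read("a.txt", "may01"))
print(len(fs.blocks_in_use()))  # 2: only the changed data takes new space
```

Right after the snapshot, both views point at one block. After the edit, the live file and the snapshot each reference their own block, and the snapshot still returns the old contents.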

Thank you for explaining, that makes more sense. So replication is not what I'm looking for, then? What can I do to have a duplicate of my files on my second data pool?

It is what you are looking for.

You don’t get any more “exact” than a replicated snapshot of a filesystem.

EDIT: Ars Technica wrote a primer on ZFS, which I think will demystify some of these concepts. I highly recommend reading it, and then coming back to this thread. It’s written to be understood at a practical level (for end-users), and is visual.

The snapshots themselves allow you to view the data as per the time when the snapshot was taken.

If you then replicate the snapshot to another pool, the pool contains an exact copy of the data included in the original snapshot.
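Here is a toy Python model of why the destination ends up with a real, independent copy. It is not ZFS code, and the function names `replicate` and `mount` are hypothetical. It assumes a snapshot is just a map from file names to block IDs. Replicating copies every block the snapshot references into the destination pool. "Mounting" the result shows ordinary file names and contents, which is roughly what an SMB share of the received dataset would show.

```python
# Toy model (NOT real ZFS code): replicating a snapshot copies the actual
# data of every block it references, plus the name -> block map.
def replicate(src_blocks: dict[int, bytes],
              snapshot: dict[str, int]) -> tuple[dict[int, bytes], dict[str, int]]:
    dst_blocks: dict[int, bytes] = {}
    dst_snapshot: dict[str, int] = {}
    for name, block_id in snapshot.items():
        new_id = len(dst_blocks)                     # next free block on the destination
        dst_blocks[new_id] = src_blocks[block_id]    # copy the data itself
        dst_snapshot[name] = new_id
    return dst_blocks, dst_snapshot


def mount(blocks: dict[int, bytes], snapshot: dict[str, int]) -> dict[str, bytes]:
    # What browsing the dataset would show: plain file names with their contents.
    return {name: blocks[block_id] for name, block_id in snapshot.items()}


src_blocks = {0: b"Quarterly report", 1: b"Holiday photo bytes"}
snap = {"report.txt": 0, "photo.jpg": 1}
dst_blocks, dst_snap = replicate(src_blocks, snap)
src_blocks.clear()  # simulate losing the main pool entirely
print(mount(dst_blocks, dst_snap))
```

The source blocks are wiped after replication, yet every file is still readable from the destination. Nothing on the destination depends on the original pool.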

I understand that. My only concern is relying on snapshots that need the operating system to restore a copy. With two copies of your data on two drives, if one drive fails you have another copy that doesn't need a piece of software to restore the file.

I will give this a read

No “special software” or “operating system” is required. Anything that understands ZFS (TrueNAS, practically all Linux distros, FreeBSD, etc) can import a ZFS pool.

For “ease of use”, though, most end-users don't deal with ZFS directly. TrueNAS uses ZFS on the backend, but users interact with their data via SMB over the local network. This means that once you connect to an SMB share, you browse through your files “as if” they are local files. Windows? Linux? Mac? Doesn't matter.

So in your case, if you have snapshots being replicated to the “backup” pool, then at any time you can share certain datasets in this backup pool via SMB, which allows you to browse and open the files like usual.

(The only caveat is, it’s good practice to use the “read-only” option for such shares, since you do not want to write anything new to a “backup” pool. If you want to “promote” it to your new “main” pool? Sure, you can keep using it like normal.)

EDIT: To put it another way. Let’s say your most recent snapshot of the main pool was on May 1st, 2024. And this snapshot was (incrementally) replicated to the backup pool. (Let’s say on the same day, May 1st.)

If you open up your NAS server, physically remove the drives of the “main” pool, and then throw them over a cliff, you will still have an exact replica of the entire filesystem on your backup pool… as they existed on May 1st, 2024.

(This only applies to datasets that were snapshotted and replicated to the backup pool. It does not cover datasets in your pool for which you never created or replicated snapshots.)
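The "(incrementally) replicated" part can be sketched with another toy Python model. It is not ZFS code. The functions `incremental_send` and `receive`, the snapshot names `may01`/`may02` and the file contents are all hypothetical. For simplicity it assumes the backup pool reuses the source's block IDs. The point is that once the backup holds the older snapshot, a later replication only needs the blocks that changed in between.

```python
# Toy model (NOT real ZFS code) of incremental replication.
def incremental_send(src_blocks, old_snap, new_snap):
    # Send only blocks the new snapshot references that the old one did not.
    old_ids = set(old_snap.values())
    changed = {bid: src_blocks[bid] for bid in new_snap.values() if bid not in old_ids}
    return changed, dict(new_snap)


def receive(dst_blocks, dst_snapshots, stream, snap_name):
    # The backup pool already holds the older blocks; add the new ones
    # and record the new snapshot's name -> block map.
    changed_blocks, new_map = stream
    dst_blocks.update(changed_blocks)
    dst_snapshots[snap_name] = new_map


src_blocks = {0: b"draft", 1: b"photo"}
may01 = {"notes.txt": 0, "photo.jpg": 1}
# After may01, notes.txt is edited; copy-on-write puts it in a new block.
src_blocks[2] = b"final"
may02 = {"notes.txt": 2, "photo.jpg": 1}

# The backup pool already holds everything from the may01 replication.
dst_blocks = {0: b"draft", 1: b"photo"}
dst_snapshots = {"may01": dict(may01)}

stream = incremental_send(src_blocks, may01, may02)
print(sorted(stream[0]))  # [2]: only the edited file's block is sent
receive(dst_blocks, dst_snapshots, stream, "may02")
```

After the receive, the backup can serve both points in time. `may02` shows the edited file, and `may01` still shows the draft.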

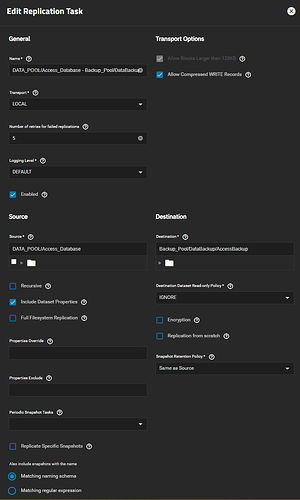

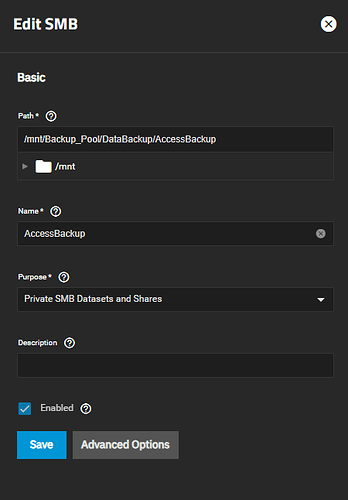

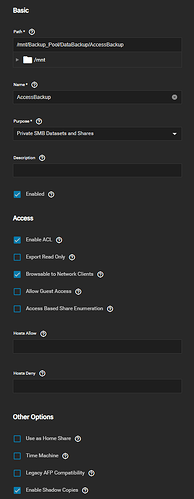

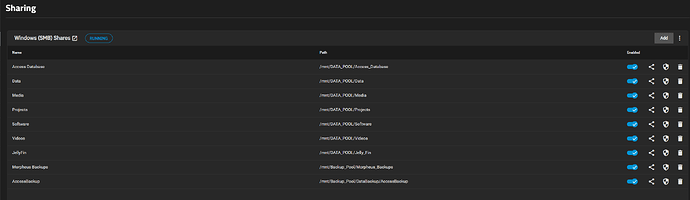

That would be great, and that's what I thought too. I tried that and it wasn't working, so I must be doing something wrong. The replication task completed and I shared that location. When I opened that network location via SMB it was empty, but the dataset says there are three snapshots.

Your screenshot is cropped at the bottom.

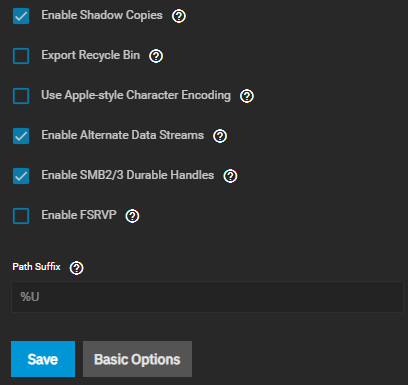

Also, how did you “share” the backup dataset?

EDIT: You should set “Destination Dataset Read-only Policy” to SET. It’s not related to this, but good practice for backups.

What client are you using to browse the backup share? Windows? Linux? Mac?

Does the user have sufficient permissions?

What is the output of this:

```
zfs list -r -t filesystem -o space
```

I only use Windows 11. I wouldn't know where to enter that command. I'm assuming it's a Linux command? Sorry for the ignorance, but I appreciate the help.

I have made sure to give myself full control permissions on all shares.

It's a command you run directly on the NAS server. You can use the “Shell” tool, which should be in the menu pane.