Hi,

I am building a new all-in-one (AIO) VMware host, currently running ESXi 8 U2.

Given that Core is going to … die a slow death …, I thought I would give Scale a try for this build.

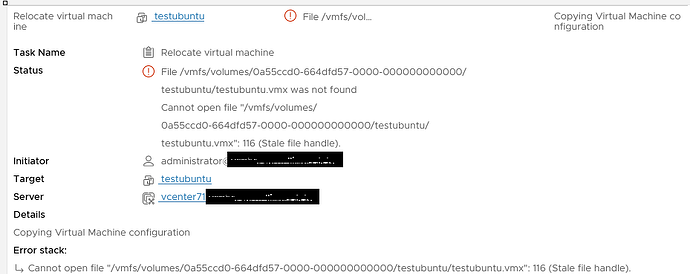

I installed everything as usual, set up NFS, mounted my share (NFSv4) on ESXi and tried to move a VM onto it:

```
esxcli storage nfs41 add -H 172.16.10.32,172.16.11.32 -s /mnt/tank7/nfs -v tn15_nfs
```

Unfortunately, no go. The vmkernel log shows:

```
2024-04-06T19:23:47.324Z In(182) vmkernel: cpu6:2098542 opID=23545234)NFS41: NFS41FileOpenFile:2911: Open of 0x430a56466360 with openFlags 0x1 failed: Stale file handle
2024-04-06T19:23:47.324Z Wa(180) vmkwarning: cpu6:2098542 opID=23545234)WARNING: NFS41: NFS41FileOpOpenFile:3627: Open of obj 0x430a56466360 (fhID 0x134877) failed: Stale file handle
2024-04-06T19:23:47.324Z In(182) vmkernel: cpu6:2098542 opID=23545234)NFS41: NFS41FileOpenFile:2911: Open of 0x430a56466360 with openFlags 0x1 failed: Stale file handle
2024-04-06T19:23:47.324Z Wa(180) vmkwarning: cpu6:2098542 opID=23545234)WARNING: NFS41: NFS41FileOpOpenFile:3627: Open of obj 0x430a56466360 (fhID 0x144877) failed: Stale file handle
2024-04-06T19:23:47.324Z In(182) vmkernel: cpu6:2098542 opID=23545234)NFS41: NFS41FileOpenFile:2911: Open of 0x430a56466360 with openFlags 0x1 failed: Stale file handle
2024-04-06T19:23:47.324Z Wa(180) vmkwarning: cpu6:2098542 opID=23545234)WARNING: NFS41: NFS41FileOpOpenFile:3627: Open of obj 0x430a56466360 (fhID 0x154877) failed: Stale file handle
2024-04-06T19:23:47.338Z Wa(180) vmkwarning: cpu6:2098542 opID=23545234)WARNING: NFS41: NFS41FileDoRemove:5286: Could not remove "testubuntu" (task process failure) : Directory not empty
2024-04-06T19:23:47.338Z In(182) vmkernel: cpu6:2098542 opID=23545234)VmMemXfer: vm 2098542: 2461: Evicting VM with path:/vmfs/volumes/0a55ccd0-664dfd57-0000-000000000000/testubuntu/testubuntu.vmx
2024-04-06T19:23:47.338Z In(182) vmkernel: cpu6:2098542 opID=23545234)VmMemXfer: 209: Creating crypto hash
2024-04-06T19:23:47.338Z In(182) vmkernel: cpu6:2098542 opID=23545234)VmMemXfer: vm 2098542: 2475: Could not find MemXferFS region for /vmfs/volumes/0a55ccd0-664dfd57-0000-000000000000/testubuntu/testubuntu.vmx
2024-04-06T19:23:47.507Z In(182) vmkernel: cpu1:2098561 opID=6dbd4a2b)World: 12324: VC opID lud1c16k-60790-auto-1awn-h5:70016329-2e-01-2d-3e43 maps to vmkernel opID 6dbd4a2b
2024-04-06T19:23:47.507Z In(182) vmkernel: cpu1:2098561 opID=6dbd4a2b)VmMemXfer: vm 2098561: 2461: Evicting VM with path:/vmfs/volumes/0a55ccd0-664dfd57-0000-000000000000/testubuntu/testubuntu.vmx
2024-04-06T19:23:47.507Z In(182) vmkernel: cpu1:2098561 opID=6dbd4a2b)VmMemXfer: 209: Creating crypto hash
2024-04-06T19:23:47.507Z In(182) vmkernel: cpu1:2098561 opID=6dbd4a2b)VmMemXfer: vm 2098561: 2475: Could not find MemXferFS region for /vmfs/volumes/0a55ccd0-664dfd57-0000-000000000000/testubuntu/testubuntu.vmx
```

I verified that the mount was fine (it was), that permissions were fine (yes, I could create and delete a folder without problems), and so on.

The same problem has been mentioned before, e.g. as a necro in this older thread.

There is also a thread on the VMware forums, but I found no solution anywhere.

I tried tweaking a few Scale settings but couldn’t find anything helpful.

I saw a suggestion to set an alias, but mounting already worked fine for me.

This was on TrueNAS-SCALE-23.10.1, I think (that's the version I installed, and I don't think I updated afterwards).

I then gave up, quickly installed TrueNAS Core (TNC, TrueNAS-13.0-U6.1) on the same (virtual) hardware, set up NFSv4 identically, used the same mount command on ESXi and… it just worked.

While I am fine running Core instead of Scale, I am surprised that this has not been discussed more widely.

Or does it just work for most people on Scale?

Cheers