Hi guys,

So after a recent drive failure I need to build a backup server. Something I’ve been putting off for the longest time.

My current server is about to be 10 years old and it’s:

Supermicro MBD-X11SSM-F-O

Intel Xeon E3-1230 v5 quad core 3.40 GHz

It’s served me well, but since I haven’t paid attention to hardware requirements in 10 years, I was hoping I could get some quick advice on building a new server.

Basically, my new server will be my main one (used for storing important data - that’s all) and the old server will be used to back up critical files so I have a duplicate onsite.

The new pool I’m planning will be around 8x or 10x 26TB drives, so around 150-200 TB (it really depends on how much the motherboard/CPU/RAM eats into my budget).
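To show where my 150-200 TB estimate comes from, here is a rough sketch of the arithmetic. It assumes a single vdev with two drives' worth of parity (RAIDZ2-style); that layout is only my assumption and not something I've settled on. The function name and parameters are just illustrative, and real usable space will be lower once filesystem overhead and TB vs TiB are accounted for.

```python
DRIVE_TB = 26  # size of each drive, in TB


def raw_and_usable_tb(drive_count, parity_drives=2, drive_tb=DRIVE_TB):
    """Return (raw, usable) capacity in TB for one vdev.

    Assumption: parity_drives=2 models a RAIDZ2-style layout.
    Filesystem overhead and TB/TiB conversion are ignored.
    """
    raw = drive_count * drive_tb
    usable = (drive_count - parity_drives) * drive_tb
    return raw, usable


for n in (8, 10):
    raw, usable = raw_and_usable_tb(n)
    print(f"{n} x {DRIVE_TB} TB: raw {raw} TB, roughly {usable} TB after parity")
```

With 8 drives that gives 208 TB raw and about 156 TB after parity; with 10 drives, 260 TB raw and about 208 TB.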

Ideally I’d like a motherboard with an onboard 10Gb NIC, but if the cost of the motherboard greatly exceeds the cost of buying a separate 10Gb NIC, then it’s no big deal.

Is the Supermicro X12SPI-TF ATX server board a good choice? Is it overkill? It looks to be available for around $600.

Any recommendations for motherboards, or a ballpark figure for what I should expect to spend, would be great.

I just don’t want to spend $600 if that much is not required.

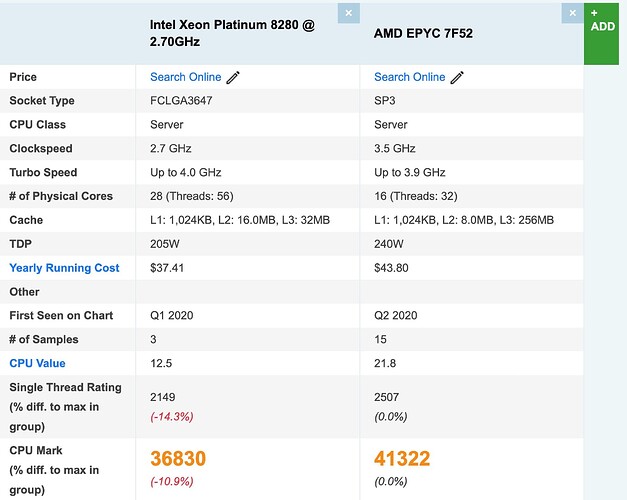

As for the CPU, is there a sweet spot? With power in mind as costs per kWh increase, I want to keep it as efficient as possible (but at the same time I don’t want it to affect performance).

Same as with the motherboard, I don’t mind investing in something if TrueNAS will actually use it, but I also don’t want to just burn money on overkill.

For RAM, is there also a sweet spot for the speed? Or just get the fastest I can afford?

Is 1GB of RAM per 1TB of storage still the recommended advice?
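For reference, this is what that rule of thumb would mean for the pool sizes I'm looking at. It's only a sketch: I'm assuming the rule is applied to raw capacity, and the function name and the `gb_per_tb` parameter are made up for illustration.

```python
def ram_gb_by_rule(pool_tb, gb_per_tb=1.0):
    """RAM in GB suggested by the '1GB per 1TB' rule of thumb.

    Assumption: pool_tb is raw pool capacity in TB.
    """
    return pool_tb * gb_per_tb


DRIVE_TB = 26  # size of each drive, in TB

for drive_count in (8, 10):
    pool_tb = drive_count * DRIVE_TB
    print(f"{drive_count} drives ({pool_tb} TB raw): {ram_gb_by_rule(pool_tb):.0f} GB RAM by the rule")
```

Taken literally, that works out to 208 GB of RAM for 8 drives and 260 GB for 10, which is why I'm asking whether the rule still holds at this size.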

Thanks for your time, and for any recommendations.