@pinoli not using NFS, only SMB, and only manually, to look at the file system from my Windows PC.

@Dave I am not using any PVC storage, only host paths.

@winnielinnie did not reboot yet, will do and report back

15 min after reboot, no swapping, fingers crossed

More important than that is whether this circumvents the slowdowns, lockups and/or UI sluggishness over time.

Similar behavior to mine, and I am seeing more and more similar issues popping up, which makes me believe it’s a SCALE 24 bug. This is my first TrueNAS experience, though. I didn’t extensively test 22, 23, or 24RC1, and they didn’t show this behavior; it only appeared once I committed to 24 and started really using it.

I “suspect”, without any evidence, that it could be the way they tuned Dragonfish to use RAM dynamically, and that some memory leak or bug eventually occurs during this dynamic adjustment.

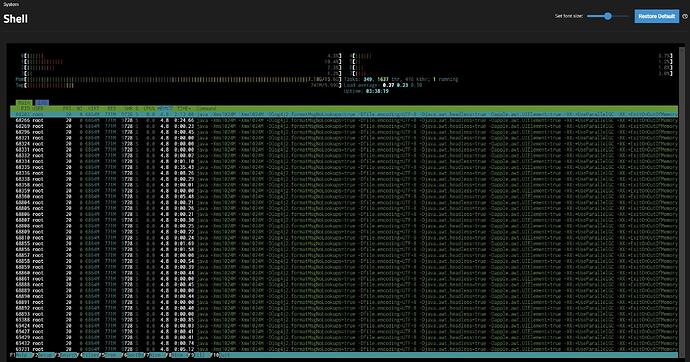

When your Web UI locks up, your swap usage is almost identical to mine, about 1.6GB/8GB. I have 1TB RAM and it locks up at 900ish GB ARC with 5% of total RAM left (50~60GB free), and I see “asyncio_loop” start chewing up swap. You can monitor it by running top, enabling the swap column with “f”, and pressing “s” to sort by swap usage.
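For a non-interactive view of the same information, per-process swap can also be read from `/proc`. This is a minimal sketch, assuming a Linux system that exposes `VmSwap` in `/proc/<pid>/status`; the function name `swap_by_process` and its optional directory argument are hypothetical and only there for illustration:

```shell
#!/bin/sh
# Print "<swap kB> <pid> <name>" for every process that has pages in swap,
# largest first. Reads the VmSwap field of /proc/<pid>/status (Linux only).
swap_by_process() {
    proc_root="${1:-/proc}"   # hypothetical override, handy for testing
    for status in "$proc_root"/[0-9]*/status; do
        [ -r "$status" ] || continue
        awk '/^Name:/   {name = $2}
             /^Pid:/    {pid  = $2}
             /^VmSwap:/ {swap = $2}
             END { if (swap > 0) print swap, pid, name }' "$status" 2>/dev/null
    done | sort -rn
}

# Show the ten biggest swap users.
swap_by_process | head -n 10
```

If the middleware is the culprit, a process such as “asyncio_loop” should show up near the top of this list.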

Can confirm, limiting ARC to 50% of ram was the solution, i.e. rolling back to cobia behaviour.

EDIT: fixed

What a “surprise”.

I was really trying to avoid this by keeping everything stock and debugging, but at some point I need to give in to the “hacky” approach, which is a bit sad.

For those looking for a similar solution. Add this to your init/shutdown scripts:

echo 68720000000 > /sys/module/zfs/parameters/zfs_arc_max

Add it at post-init

Adjust the number 68720000000 to the amount of RAM you want to assign. The value is in bytes; 68720000000 is roughly 64 GiB in my case (exactly 64 GiB would be 68719476736). Use Wolfram Alpha for your own calculations.
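The byte value can also be computed in the shell itself rather than with an external calculator. A minimal sketch, assuming a shell with 64-bit integer arithmetic; `gib_to_bytes` is a hypothetical helper name, not something shipped with TrueNAS or ZFS:

```shell
#!/bin/sh
# Hypothetical helper: convert a whole number of GiB into bytes.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

gib_to_bytes 64    # prints 68719476736

# To apply the result (as root, e.g. from a post-init script):
# echo "$(gib_to_bytes 64)" > /sys/module/zfs/parameters/zfs_arc_max
```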

The irony is that the “hack” was overriding the default of 50%. Prior to Dragonfish, SCALE followed OpenZFS’s upstream default of the 50% limit.[1] So in a sense, you just set it back to the default behavior.

@SnowReborn: Did you try this, followed by a reboot? Change the numbers accordingly to suit your system’s total physical RAM:

[1] zfs_arc_max default for Linux and FreeBSD

UPDATE: To simplify resetting it to the default, without having to calculate 50% of your RAM, you can set the value to “0” in your Startup Init command, and then reboot.

echo 0 > /sys/module/zfs/parameters/zfs_arc_max

You might have better luck with a “Pre Init” command.

If that doesn’t work, then go ahead and manually set the value in your Startup Init command. See @cmplieger’s post above. (You might not be able to “set” it to “0”, since SCALE likely sets it to a different value shortly after bootup. And it doesn’t accept setting it to “0” on a “running system”.)

Never mind. Only use @cmplieger’s method to return to Cobia’s behavior. See this post as to why.
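Whichever value is written, it can help to check afterwards what the ZFS module actually reports, since something else may have overridden it after boot. A minimal sketch, assuming the standard OpenZFS-on-Linux locations for the module parameter and the `arcstats` kstat file; the helper name `arc_c_max` is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: extract c_max (the effective ARC ceiling, in bytes)
# from an arcstats-style file with "name type data" columns.
arc_c_max() {
    awk '$1 == "c_max" {print $3}' "${1:-/proc/spl/kstat/zfs/arcstats}"
}

param=/sys/module/zfs/parameters/zfs_arc_max
if [ -r "$param" ]; then
    # Value requested via the module parameter (0 means "use the default").
    echo "zfs_arc_max: $(cat "$param")"
    # Ceiling the ARC is currently operating under.
    echo "c_max:       $(arc_c_max)"
fi
```

If `c_max` does not match what the init script wrote, the setting was probably changed again later in the boot process.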

Maybe for some, but there are other reports like this one where there is tons of free RAM. I saw a few posts somewhere where people have even reset the ARC to the default 50% and the problem still occurs. The thread below has several examples which perhaps indicate a different issue that still manifests in the UI. Hopefully they can find the issue(s).

That’s why I never install a .0 release!

https://forums.truenas.com/t/very-slow-webui-login-apps-etc-scale-dragonfish-rc

To truly “reset” it, you must reboot the system. So that’s an important caveat.

I know, agree of course. Whether or not they have, someone has to follow up. But that other thread is much more concerning to me as it indicates more of a middleware problem. I mean a guy with 1TB ram and tons and tons of free memory even? Hopefully enough bug reports will be filed to assist IX in finding the culprit(s).

I certainly wouldn’t be surprised if arc played a role in some of them. The conditions of how/when that happens could hopefully be sorted one day. It’s one reason I will keep my swap space for those memory issues temporarily needing to be resolved. But many of these reports indicate it may be something else too. High middleware CPU, just sitting there? I believe I even saw other reports of a middleware memory leak too. Might be many issues.

Don’t forget in the Beta, many people did not experience these issues too. So, it shouldn’t be a case of it’s a problem for all. Some did, like Truecharts documenter.

Thank you, I’m having similar issues after recently upgrading from Cobia to Dragonfish. From day one the UI has been unresponsive, to the point that I mostly cannot log in to it. Terminal is fine.

Also receiving warning emails multiple times a day:

Failed to check for alert ScrubPaused: Failed connection handshake

Failed to check for alert ZpoolCapacity: Failed connection handshake

All new since dragonfish.

Server is only 16GB ram, but doesn’t serve apps, only ZFS and SMB are actively used (i.e. just a storage server)

RAM usage was 15.6 GB and 900 MB SWAP in dragonfish looking at htop.

I’ve performed the zfs_arc_max change to 50% of RAM as a pre-init script and now it’s running much better (UI is responsive, no issues logging in), and the emails have also stopped being sent.

We’re seeing a pattern here.

Also, somewhat related (or maybe not), but I can’t help but notice this, still being on Core myself:

The fact that any swap is being used at all means that memory is not being handled gracefully. But levels of 1 to 2 GiB of swap for a simple server with only ZFS + SMB?

No one’s gaming or doing intensive number crunching or editing 4K videos. This is a server…

Now the question is: is it the swap in itself that breaks stuff, or is the buffer that ARC leaves too small?

Ask the upstream ZFS developers why they set the default maximum ARC size for FreeBSD at “1 GiB less than total RAM”, whereas for Linux it is “50% of total RAM”.

Swap being used in these cases of Dragonfish is likely only a symptom and/or correlates to the flaky memory management if the ARC is not restricted.

in my setup i didn’t set up swap. i use 16gb of ddr4.

the only issue i noticed in the recent tn build was some sort of java memory error when i ran htop

have 20 active docker containers.

the ui slowdown seems to be gone for me. i had done a fresh reinstall of truenas.

was wondering if i needed to upgrade to 16x2 for 32gb ddr4 dual channel. but things seem to just work for my setup so i held off on that.

for docker containers, some of the apps i add a hard memory limit. if you set limit too low some of these containers will crash. so it takes some fine tuning to know the limit to set.

There’s another pattern emerging. This is the 4th or 5th report I’ve read between here and Reddit where the problem is claimed to be resolved by a fresh install (something I like to do). I still think there are several issues here, not one.

It would be interesting to hear back as time passes: is it still OK after a few days or a week? Did they also change the ARC limit?

Thanks for the tip! I knew this from lawrence’s system guide, but I was afraid to change it on 24 because I didn’t want to cause unintended consequences. My friend also suggested just turning off swap completely with sudo swapoff -a, but I am not ready to test things on my live server just yet. :S But yeah, reverting to 50% doesn’t “seem” like it’s going to cause any issues, and I am indeed tempted to try it. But why 50% specifically? Because it’s what 23 uses by default? What if you manually set it to 90% or 85%? Then we could isolate whether Dragonfish is going insane because more than 50% is allocated to ARC, or whether it’s just Dragonfish’s dynamic ARC allocator at fault.
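For anyone wanting to run that kind of comparison, the candidate limits can be derived from the installed RAM instead of being worked out by hand. A minimal sketch, assuming a Linux `/proc/meminfo` (where `MemTotal` is reported in kB and is slightly lower than the physically installed RAM); `mem_total_kb` and `arc_limit_bytes` are hypothetical helper names:

```shell
#!/bin/sh
# Read MemTotal (in kB) from a meminfo-style file; defaults to /proc/meminfo.
mem_total_kb() {
    awk '/^MemTotal:/ {print $2}' "${1:-/proc/meminfo}"
}

# Hypothetical helper: PERCENT ($2) of a kB amount ($1), expressed in bytes.
arc_limit_bytes() {
    echo $(( $1 * 1024 * $2 / 100 ))
}

if [ -r /proc/meminfo ]; then
    total_kb=$(mem_total_kb)
    for pct in 50 85 90; do
        echo "$pct% of RAM = $(arc_limit_bytes "$total_kb" "$pct") bytes"
    done
fi
```

Each printed value could then be written to /sys/module/zfs/parameters/zfs_arc_max in turn, to see whether the lockups depend on the limit itself.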