I think it’s viable to try, but there is a small possibility that it ends up disastrous with looped-back memory, assuming my understanding is correct. I think swappiness 0 is safer than the zmem approach. Besides, “regular” swapping shouldn’t cause the issue we are observing; if we really are experiencing very unusual swapping, then zmem will be constantly swapping as well.

eh… i am not sure swappiness is the workaround atm, but fyi:

/proc/sys/vm/swappiness

I might be wrong.
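For anyone wanting to check this from a shell, here is a minimal sketch. It assumes a standard Linux /proc layout, and the function name `show_swappiness` is made up for illustration. It only reads the value; the commands that change it are left as comments because they need root.

```shell
#!/bin/sh
# Print the current swappiness value (60 is the usual Linux default).
# The file path can be overridden, which is handy for testing.
show_swappiness() {
    file="${1:-/proc/sys/vm/swappiness}"
    if [ -r "$file" ]; then
        echo "vm.swappiness = $(cat "$file")"
    else
        echo "cannot read $file" >&2
        return 1
    fi
}

show_swappiness || true

# To change it until the next reboot (needs root), either of these works:
#   sysctl -w vm.swappiness=0
#   echo 0 > /proc/sys/vm/swappiness
```

Note that a swappiness of 0 does not turn swap off; it only makes the kernel much more reluctant to use it.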

What’s known to work is limiting ARC to 50%.

This is my main concern, since there might exist a “perfect storm combination” of Linux + ZFS + ARC configured to mimic FreeBSD + constantly swapping

It’s the “constantly swapping” that seems to be the canary in the coal mine. Currently, it means thrashing your SSD (or HDD). With zram, it would probably mean constantly compressing / decompressing in RAM (though a reasonable “limit” on the zram size should be enforced).

So in the meantime there seem to be two temporary solutions:

- Reset the ARC’s high-water to Linux’s default at 50% of RAM

- or, completely disable swap
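As a rough sketch of the first option, the 50% figure can be computed from `MemTotal` in /proc/meminfo (reported in KiB). The helper name `arc_bytes_for_pct` is hypothetical, and the actual write to `zfs_arc_max` is commented out because it needs root and a loaded zfs module.

```shell
#!/bin/sh
# Print PCT percent of MemTotal, in bytes.
# MemTotal in /proc/meminfo is given in kB (KiB), hence the * 1024.
# "%.0f" is used instead of "%d" so large values print correctly on any awk.
arc_bytes_for_pct() {
    pct="$1"
    meminfo="${2:-/proc/meminfo}"
    awk -v pct="$pct" '/^MemTotal:/ { printf "%.0f\n", $2 * 1024 * pct / 100 }' "$meminfo"
}

ARC_BYTES=$(arc_bytes_for_pct 50)
echo "50% of RAM: ${ARC_BYTES} bytes"

# Applying it (root only, not persistent across reboots):
#   echo "${ARC_BYTES}" > /sys/module/zfs/parameters/zfs_arc_max
```

On a machine with exactly 16 GiB of `MemTotal`, this prints 8589934592.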

I believe there might be a solution that can incorporate both: figure out why SCALE is aggressively swapping under normal loads, and rather than just disabling swap altogether (which could result in OOM for some use-cases), have zram fill the role of swap instead, which (hopefully) shouldn’t be used much, if at all. (But at least it will be there as a safety cushion to prevent OOM if the system is nearing its limits.)

Thank you but how do I get there from/in the CLI… I’m a newbie here.

I don’t agree that swap was not an issue in Cobia. My system was swapping even then with 50% of RAM free. It’s a simple 16GB home file server with 2 users making requests once in a while. I saw lots of swapping whenever I was copying several files to it.
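To put a number on “lots of swapping”, the kernel’s cumulative swap counters (`pswpin` and `pswpout` in /proc/vmstat, counted in pages) can be sampled twice and compared. This is only a sketch; the function names are made up for illustration.

```shell
#!/bin/sh
# Print "pswpin pswpout": pages swapped in and out since boot.
read_swap_counters() {
    file="${1:-/proc/vmstat}"
    awk 'BEGIN { i = 0; o = 0 }
         $1 == "pswpin"  { i = $2 }
         $1 == "pswpout" { o = $2 }
         END { print i, o }' "$file"
}

# Sample the counters twice, INTERVAL seconds apart, and print the difference.
swap_activity() {
    interval="${1:-5}"
    file="${2:-/proc/vmstat}"
    [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }
    set -- $(read_swap_counters "$file")
    in1=$1
    out1=$2
    sleep "$interval"
    set -- $(read_swap_counters "$file")
    echo "swapped in: $(( $1 - in1 )) pages, swapped out: $(( $2 - out1 )) pages in ${interval}s"
}

swap_activity 1 || true
```

Running it during a large file copy and again while idle should show whether the copy itself is what triggers the swapping. Pages are typically 4 KiB on x86-64.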

I’ve found an okay solution is to do the following:

- Set zfs_arc_max to 90% of my memory (121437160243)

- Set zfs_arc_sys_free to 8GiB (8589934592)

- swapoff -a

Even under high memory pressure this seems to be working well to avoid OOM conditions and will just flush ARC when available system memory drops below 8GiB to get itself back within limits. I don’t use a massive amount of memory for services (31 of 120GiB as of right now) so leaving 8 or even 16GiB free is good for me. Though I imagine it could work with less.

I’ve been using this script, which I picked up from somewhere, probably the old TrueNAS forums at some point, and modified a little.

#!/bin/sh

PATH="/bin:/sbin:/usr/bin:/usr/sbin:${PATH}"

export PATH

ARC_PCT="90"

SYS_FREE_GIG="8"

ARC_BYTES=$(grep '^MemTotal' /proc/meminfo | awk -v pct=${ARC_PCT} '{printf "%d", $2 * 1024 * (pct / 100.0)}')

echo ${ARC_BYTES} > /sys/module/zfs/parameters/zfs_arc_max

echo zfs_arc_max: ${ARC_BYTES}

SYS_FREE_BYTES=$((${SYS_FREE_GIG}*1024*1024*1024))

echo ${SYS_FREE_BYTES} > /sys/module/zfs/parameters/zfs_arc_sys_free

echo zfs_arc_sys_free: ${SYS_FREE_BYTES}

swapoff -a

Getting swapped isn’t an issue in itself. Right now in Dragonfish there’s abnormal swapping that causes issues like the web UI freezing, 100% CPU usage, a very hot SSD, throttled transfers, etc. You can change the values in the CLI, but they won’t be persistent. If you want them to persist, go to System Settings → Advanced and add a post-init command there, so that TrueNAS applies it automatically on each reboot.

I believe this is just a coincidence. By default, the ARC in Dragonfish is allowed to use RAM minus 1GB, like FreeBSD. That said, in practice it won’t actually grow to within 1GB of total RAM; it will leave around 5~10% free. Seeing that you are using 45GB for services, if you reduce the actual usage of your services, apps, etc., you are very likely to see your ARC go up. The 15GB-ish free is expected behavior for the default Dragonfish dynamic ARC allocation.

Then how do we revert to 50%? Where should I do that, and how do I get there?

See above, or via this link:

Thank you, it worked

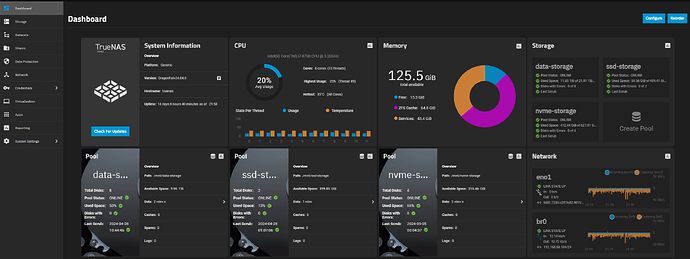

I just started seeing this on my system that I upgraded from CORE to SCALE. When I was running the release candidate this was not occurring, but after upgrading to the official release my system started to go unresponsive every night. My monitoring system (Nagios) would show CPU hitting 100% and the system processes slowly increasing until eventually it would start timing out. The WebUI and SSH would go unresponsive, and the only way to recover was to hard reset the system through the IPMI module. When it came back up there were no events in the logs to debug with, as all logging just stopped at the same time the CPU hit 100%. I’ve implemented this fix and rebooted the system. Hoping this will address it.

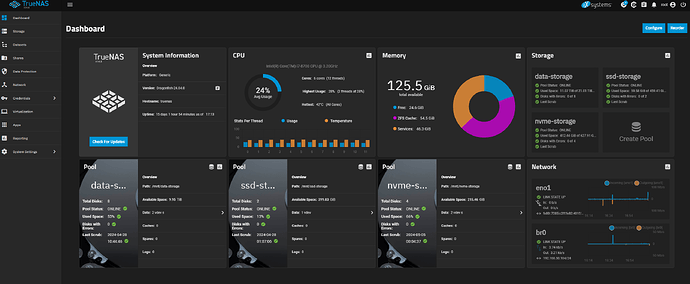

In an update to my post above, I found something interesting. It seems that ZFS kernel settings are getting preserved across CORE to SCALE upgrades. So, users who performed upgrades should check the SYSCTL section under System Settings → Advanced. It seems that the upgrade carried over the vfs.zfs.arc_max setting, which for me was set to 95% of my system memory. I only found this after I had used the script discussed here to set the parameter directly in the mentioned files. The system let me do that, but I noticed no effect until I disabled the setting in SYSCTL. Could this be a possible bug, in that these settings should NOT be preserved from CORE to SCALE? I mention this as I’ve seen talk that a fresh install doesn’t have this issue or resolves it.

I mention this as I’ve seen talk that a fresh install doesn’t have this issue or resolves it.

I don’t believe this is what causes the issue, as I am running from an initial install of SCALE (upgraded from Bluefin to Cobia to Dragonfish), so nothing could have been carried over from CORE.

In any case, the default for Dragonfish is very high:

ARC size (current): 71.1 % 88.7 GiB

Target size (adaptive): 71.0 % 88.6 GiB

Min size (hard limit): 3.2 % 3.9 GiB

Max size (high water): 31:1 124.7 GiB
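The same four figures can be pulled straight from the kernel’s ARC statistics. The sketch below assumes the usual OpenZFS-on-Linux location, /proc/spl/kstat/zfs/arcstats, where `size`, `c`, `c_min` and `c_max` are the current, target, minimum and maximum ARC sizes in bytes. The helper name `arc_stat_gib` is made up for illustration.

```shell
#!/bin/sh
# Print one arcstats value converted from bytes to GiB (one decimal place).
# Data lines in arcstats have the form: "<name> <type> <value>".
arc_stat_gib() {
    name="$1"
    file="${2:-/proc/spl/kstat/zfs/arcstats}"
    awk -v name="$name" '$1 == name { printf "%.1f\n", $3 / (1024 * 1024 * 1024) }' "$file"
}

if [ -r /proc/spl/kstat/zfs/arcstats ]; then
    for stat in size c c_min c_max; do
        echo "${stat}: $(arc_stat_gib "$stat") GiB"
    done
else
    echo "arcstats not found (is the zfs module loaded?)" >&2
fi
```

Comparing `c_max` before and after changing zfs_arc_max is a quick way to confirm that a new limit actually took effect.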

This seems to be a swapping issue specific to Dragonfish. I ran zfs_arc_max=95% and zfs_arc_sys_free=1GiB (the equivalent values in bytes) on Cobia for a period of time, as part of some testing done in late 2023. That worked fine; the issues started only after the upgrade.

So, to be sure: you were running at 95% on Cobia (not just testing), and it all changed on Dragonfish while you are still at 95%? Is that correct, @essinghigh? That’s interesting.

To clarify, my CORE system has it set at 95% also with no issues. What I am suggesting is that something in the latest SCALE Dragonfish release version has an issue with this setting. As I mentioned I didn’t have this issue until I moved to the official release. The Dragonfish RC didn’t have any issues at all for me.

I’d been running 95% for probably 1-2 weeks on Cobia around the time the thread started, then I switched to 90% with zfs_arc_sys_free at 8GiB and ran that consistently with no issues until the upgrade to Dragonfish. With the same config on Dragonfish, with swap enabled, I can reproduce the issue. Wasn’t able to on Cobia.

If I disable swap on Dragonfish it runs fine, so seems to be some issue with the system swapping way too aggressively instead of resizing ARC properly.

I think in future I’ll run without swap and with a defined zfs_arc_sys_free value, as I have more than enough memory to not run into an OOM condition (and swap is generally something to be avoided except as a safety net).

EDIT: to add some clarifications, what I’m running right now to test is Dragonfish defaults (arc_max=0 & sys_free=0) with swap disabled, and that works fine.
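A quick way to confirm which state a system is in is sketched below. It assumes the standard module parameter directory and /proc/swaps, and the function names are hypothetical. A value of 0 for either parameter means the built-in default is in use.

```shell
#!/bin/sh
# Print the value of a ZFS module parameter, or "n/a" when it cannot be read.
zfs_param() {
    dir="${2:-/sys/module/zfs/parameters}"
    if [ -r "$dir/$1" ]; then
        cat "$dir/$1"
    else
        echo "n/a"
    fi
}

# Count active swap areas: /proc/swaps has one header line,
# followed by one line per active swap device or file.
active_swap_count() {
    file="${1:-/proc/swaps}"
    awk 'NR > 1 { n++ } END { print n + 0 }' "$file"
}

echo "zfs_arc_max:       $(zfs_param zfs_arc_max)"
echo "zfs_arc_sys_free:  $(zfs_param zfs_arc_sys_free)"
echo "active swap areas: $(active_swap_count)"
```

With Dragonfish defaults and swap disabled, the expectation is 0, 0 and 0 respectively.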

To add to what @essinghigh says, I upgraded my system from CORE to Cobia and ran it for about a week, but had all kinds of issues with the apps. The forums said that updating to the Dragonfish RC would resolve them, and it did. The system was stable for a month while I sorted out my install of Nextcloud and some VMs that I have on the system. When the official Dragonfish was released I updated and ran fine for about 3-4 days. After that is when the symptoms started for me. Now it happens just about every night when I replicate my datastores between my two systems for backup. The SCALE system goes dark and the CORE system just keeps on trucking. Also, only the WebUI and other core services are affected. All my VMs and apps keep right on trucking with no issue.

To sum up what I have done to date on my SCALE Dragonfish system:

/sys/module/zfs/parameters/zfs_arc_max set to 50% of memory

/sys/module/zfs/parameters/zfs_arc_sys_free set to 16G

disabled vfs.zfs.arc_max in System Settings → Advanced → Sysctl

I’ll run this overnight and see what happens.