Years after SMR HDDs were stealthily introduced into NAS HDD lines, we are still seeing users with them. Most don’t seem to know they have them.

To be clear, when Seagate introduced its first SMR HDDs in 2013, the Archive line, some people knew about the various issues and still bought & used them. That includes me, as Seagate’s Archive 8TB SMR was a reasonably priced 8TB drive. Back then, there were few 8TB options, and all the others were noticeably more expensive.

One person deliberately chose Seagate Archives for a ZFS pool to reduce cost. That user ran into some problems, like the expected long disk replacements. That user even thought the ZFS developers should write new code to support SMR HDDs.

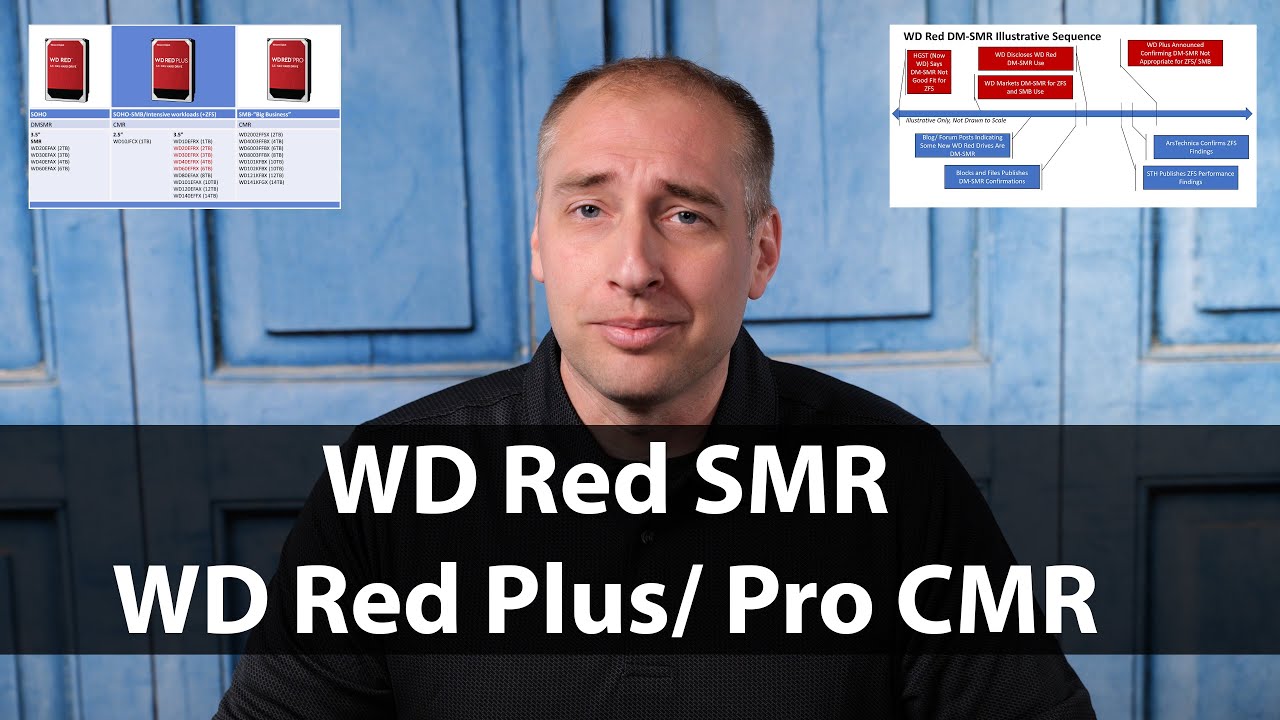

There are 3 types of SMR HDDs:

- DM-SMR - Drive Managed, which is what most consumers will have either bought or know about. Examples are new WD Red, Seagate Archive & Barracuda.

- HA-SMR - Host Aware, meaning the drive exposes shingle information allowing the host to know if a re-write of a track is needed.

- HM-SMR - Host Managed, where the host deals with the re-writing of shingles.
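For anyone on a Linux-based system who wants to check what their drives report, here is a minimal sketch. It assumes the kernel exposes the `queue/zoned` attribute under `/sys/block` (values `none`, `host-aware`, `host-managed`). The function names and label strings are my own, purely for illustration. The important caveat: a DM-SMR drive hides its shingling from the host, so it reports `none` just like a conventional drive, and this check cannot catch it.

```python
from pathlib import Path

# Maps the Linux sysfs "zoned" values to the labels used in the list above.
# The label text is an illustrative choice, not anything the kernel prints.
ZONED_LABELS = {
    "host-managed": "HM-SMR",
    "host-aware": "HA-SMR",
    # DM-SMR drives hide shingling, so they are indistinguishable here.
    "none": "not zoned (conventional or DM-SMR)",
}


def classify_zoned(value: str) -> str:
    """Translate one sysfs 'zoned' value into a human-readable label."""
    value = value.strip()
    return ZONED_LABELS.get(value, f"unknown ({value!r})")


def scan_block_devices(sys_block: str = "/sys/block") -> dict[str, str]:
    """Return {device name: label} for every device with a 'zoned' attribute."""
    results: dict[str, str] = {}
    root = Path(sys_block)
    if not root.is_dir():
        return results  # not Linux, or sysfs is not mounted
    for dev in sorted(root.iterdir()):
        attr = dev / "queue" / "zoned"
        if attr.is_file():
            results[dev.name] = classify_zoned(attr.read_text())
    return results


if __name__ == "__main__":
    for name, label in scan_block_devices().items():
        print(f"{name}: {label}")
```

For the DM-SMR case you still have to look up the exact model number against the manufacturer's published lists.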

Here is a more complete explanation:

Here is a recent thread where someone seems to have used Seagate Barracudas in a ZFS pool:

So, do any of you want to express your love, hate or indifference to SMR hard disks?