RAIDZ2, 1 vdev, 6-wide, 12TB drives.

Started with four 12TB drives. Since then I have replaced one drive (the first and only resilver) and recently expanded the vdev by adding two drives, for a total of six.

    root@nas1[~]# zpool list pool1
    NAME    SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ  FRAG  CAP  DEDUP  HEALTH  ALTROOT
    pool1  65.5T  8.15T  57.3T        -         -    6%  12%  1.00x  ONLINE  /mnt

    root@nas1[~]# zpool status pool1 -v
      pool: pool1
     state: ONLINE
      scan: scrub repaired 352K in 03:54:59 with 0 errors on Fri Apr 4 03:10:03 2025
    expand: expanded raidz2-0 copied 7.99T in 11:08:26, on Thu Apr 3 01:52:58 2025
    config:

        NAME                                      STATE     READ WRITE CKSUM
        pool1                                     ONLINE       0     0     0
          raidz2-0                                ONLINE       0     0     0
            8d977df4-68d2-424a-b9f2-5368f111bd34  ONLINE       0     0     0
            sdd2                                  ONLINE       0     0     0
            sdg2                                  ONLINE       0     0     0
            sdh2                                  ONLINE       0     0     0
            11ea30fd-ed9b-4ef8-8931-dec798f8ca30  ONLINE       0     0     0
            299865cb-98fa-4359-ad04-2dcf3171caa2  ONLINE       0     0     0

    errors: No known data errors

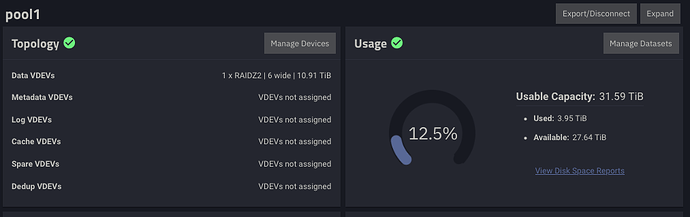

Going by the ZFS Capacity Calculator on the TrueNAS Documentation Hub, I would have expected to see ~40TB usable rather than ~32TB.
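For reference, here is a rough sketch of the arithmetic behind that expectation. It is only a back-of-the-envelope estimate: it assumes the "T" in the `zpool` output means TiB, and it ignores padding, metadata, and slop-space overhead, which is presumably why the calculator lands nearer ~40 than the nominal figure below. The helper name `raidz_nominal_usable_tib` is made up for illustration, and the last figure (current raw size scaled by the original 4-wide data fraction) is just a comparison I tried, not something I know to be how ZFS reports space.

```python
TB = 10**12   # decimal terabyte, as printed on the drive label
TiB = 2**40   # binary tebibyte, assumed to be what zpool prints as "T"


def raidz_nominal_usable_tib(drives: int, parity: int, drive_tb: float) -> float:
    """Raw capacity scaled by the data fraction (drives - parity) / drives.

    Ignores padding, metadata, and slop space, so real usable space is lower.
    """
    raw_tib = drives * drive_tb * TB / TiB
    return raw_tib * (drives - parity) / drives


# Raw size of six 12TB drives; should line up with SIZE in `zpool list`.
raw_tib = 6 * 12 * TB / TiB

# Nominal usable space for a 6-wide RAIDZ2 (4 data + 2 parity).
six_wide = raidz_nominal_usable_tib(6, 2, 12)

# Comparison only (assumption): the same raw size scaled by the
# data fraction of the original 4-wide RAIDZ2 layout (2 data + 2 parity).
four_wide_ratio = raw_tib * (4 - 2) / 4

print(f"raw size:                  {raw_tib:.1f} TiB")
print(f"6-wide RAIDZ2 nominal:     {six_wide:.1f} TiB")
print(f"raw x 4-wide ratio (2/4):  {four_wide_ratio:.1f} TiB")
```

The raw figure matches the 65.5T that `zpool list` reports. The last figure comes out close to the ~32TB I am actually seeing, though I do not know whether that is related.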

Have I missed something? If so, would appreciate an education. Thanks!