Sure. There are indeed some tutorials out there, but here is my attempt to simplify:

Step 0: Before you install the drive, record the PSID (Physical Security ID) printed on the drive’s label. You typically need it to initialize the drive, and if you ever need to reset/erase a drive whose password you’ve lost, you won’t have to take your machine apart to read it.

Then, after identifying your SED drive, e.g. via:

sedutil-cli --scan

set a few environment variables:

export PSID_NO_DASHES=<your_PSID_from_the_drive_label>

export SEDPassword=<your_password> # ideally long and random, but avoid symbols

export SEDDevice=/dev/<your_SED_drive>
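The `PSID_NO_DASHES` name suggests sedutil-cli expects the PSID without the dashes some labels print. A minimal sketch, assuming a POSIX shell: the hypothetical helpers `strip_psid_dashes` and `check_sed_device` strip dashes/spaces from the label value and stop early if `$SEDDevice` is not a block device, so a typo doesn’t reach the destructive steps below.

```shell
# Hypothetical helper: drop dashes and spaces from a PSID copied off the label.
strip_psid_dashes() {
    printf '%s' "$1" | tr -d ' -'
}

# Hypothetical helper: stop early if the SED device path is not a block device.
check_sed_device() {
    if [ ! -b "$1" ]; then
        echo "error: $1 is not a block device" >&2
        return 1
    fi
}

# Example usage (before Step 1):
#   export PSID_NO_DASHES="$(strip_psid_dashes '<PSID_as_printed_on_label>')"
#   check_sed_device "$SEDDevice" || exit 1
```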

Step 1: “Factory reset” the drive (assume this erases the drive; you should be starting with an empty drive) with this command:

sedutil-cli --PSIDrevert $PSID_NO_DASHES $SEDDevice

Step 2: Initialize the drive with a password with this command:

sedutil-cli --initialsetup $SEDPassword $SEDDevice

Step 3: Enable locking range 0 (the whole drive):

sedutil-cli --enablelockingrange 0 $SEDPassword $SEDDevice
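Steps 1 to 3 can be chained into one function so a failed step stops the rest. This is a sketch under two assumptions: the three environment variables are set as above, and sedutil-cli signals failure with a non-zero exit status. The function name `sed_initial_setup` is hypothetical, and the typed-back confirmation is just one way to guard the erase.

```shell
# Sketch: Steps 1-3 in order, stopping at the first failure.
# Assumes PSID_NO_DASHES, SEDPassword and SEDDevice are set as shown above.
# WARNING: Step 1 (--PSIDrevert) erases everything on $SEDDevice.
sed_initial_setup() {
    # Abort with a message if any required variable is unset or empty.
    : "${PSID_NO_DASHES:?set PSID_NO_DASHES}" "${SEDPassword:?set SEDPassword}" "${SEDDevice:?set SEDDevice}"

    # Require the device path to be typed back before the destructive revert.
    printf 'Type %s to ERASE it and continue: ' "$SEDDevice"
    read -r answer
    if [ "$answer" != "$SEDDevice" ]; then
        echo "aborted: nothing was changed" >&2
        return 1
    fi

    sedutil-cli --PSIDrevert "$PSID_NO_DASHES" "$SEDDevice" || return 1        # Step 1
    sedutil-cli --initialsetup "$SEDPassword" "$SEDDevice" || return 1         # Step 2
    sedutil-cli --enablelockingrange 0 "$SEDPassword" "$SEDDevice" || return 1 # Step 3
}
```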

Your drive is now set up with your chosen password for full-drive encryption, and you can lock and unlock it by setting locking range 0 to either “lk” (locked) or “rw” (read/write):

sedutil-cli --setlockingrange 0 lk $SEDPassword $SEDDevice

sedutil-cli --setlockingrange 0 rw $SEDPassword $SEDDevice

As you lock/unlock, you can check the drive’s lock status with this command:

sedutil-cli --query $SEDDevice | grep "$SEDDevice\|Lock"

which will show either

Locked = Y

or

Locked = N
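For scripting, the same check can be wrapped in a small function. A sketch, assuming the query output contains the `Locked = Y` / `Locked = N` text shown above; the name `sed_lock_state` is hypothetical, and it deliberately ignores sedutil-cli’s exit status and only inspects the text.

```shell
# Hypothetical helper: report "locked", "unlocked" or "unknown" for one device,
# by looking for the "Locked = Y" / "Locked = N" text in `sedutil-cli --query`.
sed_lock_state() {
    out=$(sedutil-cli --query "$1" 2>/dev/null)
    case "$out" in
        *"Locked = Y"*) echo locked ;;
        *"Locked = N"*) echo unlocked ;;
        *) echo unknown; return 1 ;;
    esac
}

# Example usage: unlock only if the drive currently reports locked.
#   if [ "$(sed_lock_state "$SEDDevice")" = locked ]; then
#       sedutil-cli --setlockingrange 0 rw "$SEDPassword" "$SEDDevice"
#   fi
```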

By design, the drive locks automatically at power-off or on any power interruption, so in practice you’ll mostly need only the unlock command:

sedutil-cli --setlockingrange 0 rw $SEDPassword $SEDDevice

However, “implementing SEDs with the sedutil-cli utility” isn’t a very elegant solution.

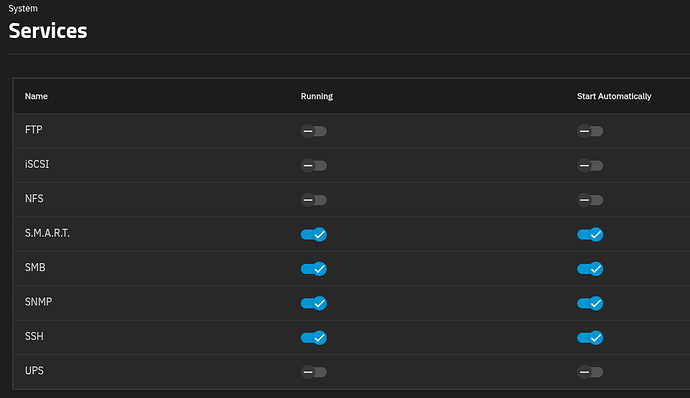

There currently isn’t a UI option to stop the apps environment from starting automatically:

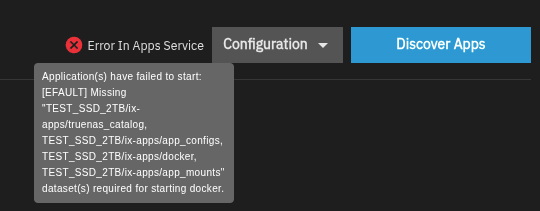

So, when you boot up and the app service/Docker looks for its drive, the drive will still be locked, and therefore effectively missing, so the service will fail to start.

You’ll then have to unlock your drives manually and restart the app service, which will hopefully get everything up and running. I suppose running systemctl disable docker.service is an option here, but I haven’t experimented that far yet. Needless to say, the UX here isn’t the usual TrueNAS ease of use.
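The manual recovery described here (unlock, then restart the app service) could be scripted roughly as below. This is an untested sketch: `SED_DEVICES` is a hypothetical space-separated list that falls back to `$SEDDevice`, and the unit name `docker.service` is an assumption taken from the systemctl idea above; on TrueNAS the middleware may expect the apps service to be restarted from the UI instead.

```shell
# Sketch (untested on TrueNAS): unlock each SED, verify it now reports
# "Locked = N", and only then restart the container service.
# SED_DEVICES is hypothetical; it falls back to $SEDDevice.
# The unit name docker.service is an assumption.
sed_unlock_and_restart() {
    devices=${SED_DEVICES:-${SEDDevice:?set SEDDevice or SED_DEVICES}}
    for dev in $devices; do
        sedutil-cli --setlockingrange 0 rw "$SEDPassword" "$dev"
        if ! sedutil-cli --query "$dev" | grep -q "Locked = N"; then
            echo "error: $dev did not report Locked = N" >&2
            return 1
        fi
    done
    systemctl restart docker.service
}
```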

What I have seen so far: Electric Eel still supports adding SED passwords in the UI, and when I test-upgraded a system already configured for SEDs to Fangtooth, the SED passwords were still in the UI, so I’m not sure the functionality is really “disabled”.

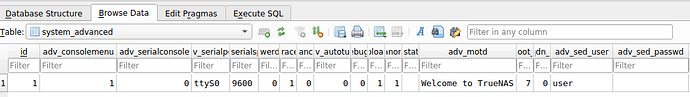

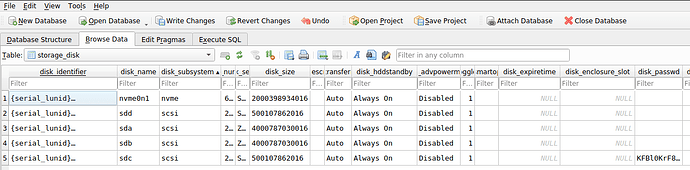

Likewise, the config database has table/fields for:

storage_disk | disk_password

and

system_advanced | adv_sed_password

and I’m not sure whether, if those were set (or restored from a config backup) on Fangtooth+, the SED passwords would reappear in the UI as well. Again, I haven’t experimented that far yet.
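A read-only way to see whether those fields are populated (for example after restoring a config on Fangtooth) might look like this. It is a sketch under assumptions: `/data/freenas-v1.db` is assumed to be the config database path, the table/column names are the ones listed above, and the helper name `sed_password_status` is hypothetical. It only reports whether values are non-empty and never prints them.

```shell
# Read-only sketch: report whether SED passwords are stored in the config DB.
# /data/freenas-v1.db is an assumed default path; pass another path as $1.
# Only checks for non-empty values; the passwords themselves are not printed.
sed_password_status() {
    db=${1:-/data/freenas-v1.db}
    disks=$(sqlite3 -readonly "$db" \
        "SELECT COUNT(*) FROM storage_disk WHERE COALESCE(disk_password, '') <> '';") || return 1
    global=$(sqlite3 -readonly "$db" \
        "SELECT CASE WHEN COALESCE(adv_sed_password, '') <> '' THEN 'set' ELSE 'empty' END FROM system_advanced;") || return 1
    echo "disks with disk_password set: $disks"
    echo "adv_sed_password: $global"
}
```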

Also, per the Jira ticket that initiated the SED change, supposedly the “api will still be allowed so this is not removing the feature from the community”, although I’m not sure what that means in practice. Maybe there is still a CLI/API way to set

storage_disk | disk_password

and/or

system_advanced | adv_sed_password

… which would preserve the functionality.
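If the API route is what the Jira comment means, setting the global SED password from a shell might look like the sketch below. This is an assumption, not a confirmed procedure: it relies on `midclt call` and on `system.advanced.update` accepting a `sed_passwd` field as in the Electric Eel API, and it is untested on Fangtooth. The function name `set_global_sed_password` is hypothetical.

```shell
# Hypothetical sketch, untested: set the global SED password via the
# middleware API instead of the UI. "system.advanced.update" and its
# "sed_passwd" field are assumed from the Electric Eel API; verify they
# still exist on your release before relying on this.
set_global_sed_password() {
    pw=${1:?usage: set_global_sed_password <password>}
    # JSON is built with printf, so a password containing " or \ would
    # produce invalid JSON (another reason to avoid symbols in it).
    midclt call system.advanced.update "$(printf '{"sed_passwd": "%s"}' "$pw")"
}
```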

For now, I’m stuck on Electric Eel because of this, which is a shame, as I’d like to try out Instances. In the meantime, the Docker apps in Electric Eel will keep me busy.

Overall, I think nerfing SED support was a mistake, and I hope it gets reverted. There is a lot of unwarranted bias against SEDs, but even LUKS/cryptsetup now supports Opal as of 2.7.0, despite some early “this will never happen” sentiment, so the bias seems to be fading. Even the TrueNAS Jira “issues” related to SEDs seemed unfair, namely dinging SEDs for not tolerating sketchy power (e.g., NAS-129366, NAS-132518), which is a feature, not a bug. Sketchy power is a potential security event; it shouldn’t be tolerated for the sake of convenience or fault resilience.

I’m not sure about the “sharp edges” alluded to in NAS-133442, but if they mean that a power loss locks an SED, or that abusing your system by repeatedly power-cycling it gives erratic results, I’d close those tickets as “works as designed”.