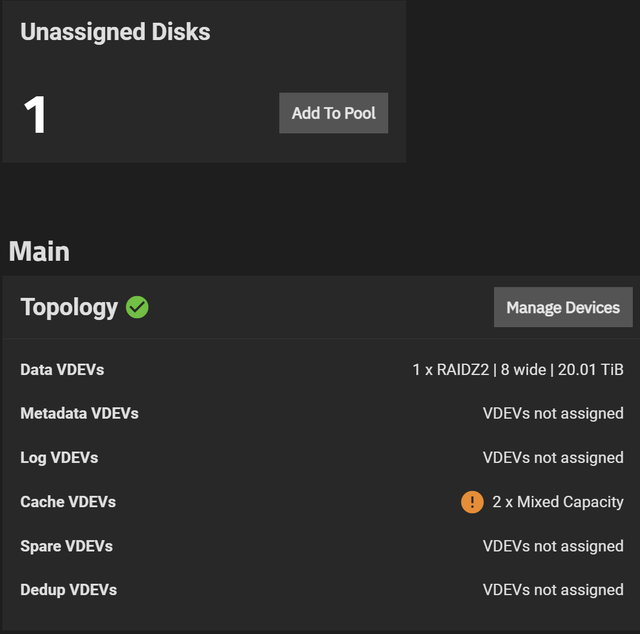

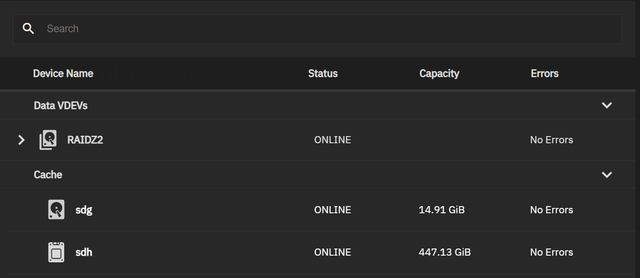

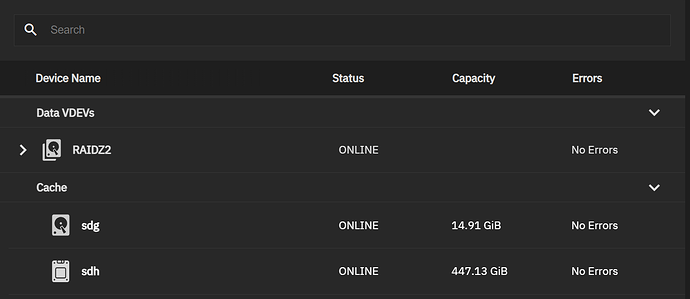

I wanted a RAID solution for home, so I bought a Mini R. It has 8 20 TiB HDDs, a 14.9 GiB HDD, a 14.9 GiB SSD, & a 447 GiB SSD. I put the 20 TiB drives in a RAIDZ2. The 14.9 GiB SSD is for the Write Cache/Log. The 447 GiB SSD was assigned to Read Cache. This leaves the 14.9 GiB HDD unused. Where do I put it? Or have I messed up elsewhere? I’m not sure which of the reporting commands I should run, since they all seem geared toward later stages of the process, so if you would like the output of one, please let me know. I was going to include some screenshots, but it seems I am not allowed to do so at this time. They were just pictures of my drive list & topology, which I have already described.

You can put those images on an image sharing site and share the link.

Also, just because you have 12 bays doesn’t mean you have to populate them all.

I’d say putting those 20TB drives in Z2 (as you did) is good. You don’t have to fill all the bays.

If the empty trays don’t have something to cover the inside, get something so the airflow doesn’t just go through the empty trays.

My 2 cents

hidden image via link ?

BBcode full image

how to get image url

New users aren’t allowed to add images or links.

[url=https://ibb.co/WW6GMRH][img]https://i.ibb.co/SXP5MZ6/2024-10-19-17-26-52-True-NAS-truenas-local-Brave.png[/img][/url]

[url=https://ibb.co/PtKg5w2][img]https://i.ibb.co/Tr6tHmQ/2024-10-19-17-28-45-True-NAS-truenas-local-Brave.png[/img][/url]

[url=https://ibb.co/PYw1gDm][img]https://i.ibb.co/cvD2QYg/2024-10-19-17-29-13-True-NAS-truenas-local-Brave.png[/img][/url]

is not the way it should be.

Maybe someone with more experience can tell you why not and what is best.

…again, I’d leave those 4 extra slots empty (for future ideas that I’m sure will come to you in time).

Most users do NOT need either an sLOG (Write cache/log) or an L2ARC (read cache). These are for specific use cases, and often will not improve performance and may even detract from it.

sLOG is not a write cache or a log - it is a temporary staging area for a specific sub-class of writes - synchronous writes. Synchronous writes are only used for specific workloads, and they are always much slower than asynchronous writes, so wherever possible you should use asynchronous writes. If the workloads that need synchronous writes have data that can all be put on SSD, that is preferable to using sLOG.
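For anyone unsure what a synchronous write looks like from the application side, here is a minimal Python sketch. The function names and file names are made up for illustration. The buffered version returns as soon as the OS has accepted the data, while the `fsync` version waits until the OS reports the data as committed to stable storage; on ZFS it is that second kind of request that goes through the intent log, and therefore through an sLOG if one is attached.

```python
import os
import tempfile


def write_async(path: str, data: bytes) -> None:
    """Buffered write: returns once the OS has accepted the data."""
    with open(path, "wb") as f:
        f.write(data)


def write_sync(path: str, data: bytes) -> None:
    """Synchronous-style write: waits for the OS to commit the data."""
    with open(path, "wb") as f:
        f.write(data)
        f.flush()             # push Python's own buffer down to the OS
        os.fsync(f.fileno())  # block until the OS reports the data as stable


# Hypothetical demo in a throwaway directory.
with tempfile.TemporaryDirectory() as d:
    write_async(os.path.join(d, "async.bin"), b"hello")
    write_sync(os.path.join(d, "sync.bin"), b"hello")
```

Typical sources of synchronous writes are databases, VM disks and some NFS or iSCSI clients; ordinary file copies over SMB are usually asynchronous.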

L2ARC is not a read cache - ZFS uses your system’s spare memory as a read cache as standard (which is why ZFS systems have much more memory than the O/S needs to run). You should only use an L2ARC if you have 64GB or more of memory, otherwise it is likely to slow your system down. If you can add more memory, that is likely to be better than an L2ARC, but if your memory is maxed out and if your measured cache hit ratio is below 99% then an L2ARC might be beneficial.
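As a rough way to get that measured hit ratio, the sketch below parses text in the `name type data` layout that OpenZFS on Linux exposes in `/proc/spl/kstat/zfs/arcstats` and computes hits / (hits + misses). The sample text and its numbers are invented for illustration, and the function names are my own; on a real system you would read the file instead.

```python
def parse_arcstats(text: str) -> dict[str, int]:
    """Parse 'name type data' rows into a {name: value} dict."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        # Data rows have exactly three fields with a numeric value;
        # the two header rows do not match and are skipped.
        if len(parts) == 3 and parts[2].isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


def hit_ratio(stats: dict[str, int]) -> float:
    """ARC hit ratio as a fraction between 0 and 1."""
    total = stats.get("hits", 0) + stats.get("misses", 0)
    return stats.get("hits", 0) / total if total else 0.0


# Invented sample data, not taken from this system.
SAMPLE = """13 1 0x01 147 39984 4153421773 1234567890
name                            type data
hits                            4    990
misses                          4    10
"""

stats = parse_arcstats(SAMPLE)
print(f"{hit_ratio(stats):.2%}")  # prints 99.00%
```

The counters are cumulative since boot, so the ratio is only meaningful after the system has been running its normal workload for a while.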

IMO you would be better off removing both SSDs from your HDD RAIDZ2 pool (both sLOG and L2ARC can be removed without needing to rebuild the pool) and using the SSDs for other purposes.

A few questions about your system:

- What are you using for a boot drive?

- I am unclear why you have a 14.9GB HDD? Do they even make them this small?

It would help if you could run the following commands and copy and paste the results here:

- `sudo zpool status -v`

- `sudo zpool import`

- `lsblk -bo NAME,MODEL,PTTYPE,TYPE,START,SIZE,ROTA,PARTTYPENAME,PARTUUID`

- EDIT: `lspci` (to try to help determine what the small HDD is)

Finally as an indication, I would probably use the non-RAIDZ2 drives as follows:

- 14.9GB SSD - boot drive

- 447GB SSD - apps, VMs - backed up using replication to the HDD pool since it is not mirrored.

- 14.9GB HDD - remove and chuck in the bin to free up a slot - and buy either another 447GB drive to mirror the existing one, or buy a 20TB drive to act as a hot standby for the RAIDZ2 pool.

Agreed, that’s an odd one. Could it in fact be a SATADOM?

Either way, not very useable anymore.

If it is then you have 2x 14.9GB SSDs which would be suitable for a mirrored boot drive.

I think the SSD drives for cache are different from what was reported. Go configure a Mini R with eight conventional HDDs and then add a read cache and a write cache. The configuration tool shows both as 480GB High Performance SSDs in the tool tips. The write cache is overprovisioned to 16GB.

I am guessing this was ordered with both options. Original Poster, please post the entire specs of the ordered TrueNAS Mini R. We don’t know if you ordered it with 32 or 64GB of RAM either.
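The sizes quoted in this thread line up once you allow for decimal versus binary units: drives are sold in GB/TB (powers of 10), while the TrueNAS UI reports GiB/TiB (powers of 2). A quick sketch of the arithmetic, using the nominal 16GB, 480GB and 22TB figures (the helper names are my own):

```python
GIB = 2**30  # bytes in one gibibyte
TIB = 2**40  # bytes in one tebibyte


def gb_to_gib(gb: float) -> float:
    """Convert decimal gigabytes to binary gibibytes."""
    return gb * 10**9 / GIB


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes to binary tebibytes."""
    return tb * 10**12 / TIB


print(f"{gb_to_gib(16):.1f} GiB")   # 14.9 GiB
print(f"{gb_to_gib(480):.1f} GiB")  # 447.0 GiB
print(f"{tb_to_tib(22):.2f} TiB")   # 20.01 TiB
```

So a "14.9 GiB" device is a 16GB one, "447 GiB" is a 480GB SSD, and "20 TiB" is a 22TB hard drive.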

I did not list the boot drive. It is a separate SSD.

zpool status -v

root@Maomao[~]# zpool status -v

pool: Main

state: ONLINE

config:

NAME STATE READ WRITE CKSUM

Main ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

2e65447e-53f6-4806-92aa-b78a2320adc6 ONLINE 0 0 0

13c4f80d-a241-4235-9ea9-733220b23212 ONLINE 0 0 0

263b3c64-2eec-4811-80d7-31d2d69b4277 ONLINE 0 0 0

9fa30ff9-1363-4a71-bb68-9f3807ea9409 ONLINE 0 0 0

b1044e84-8c3d-4672-901e-d6f2804c7bf3 ONLINE 0 0 0

230fad11-ee6e-4a72-b3f9-f920464ade6b ONLINE 0 0 0

a98aa3d9-156b-4e15-8086-41f55436530d ONLINE 0 0 0

8b066482-6b4e-43e3-9837-7c9139e73b2d ONLINE 0 0 0

cache

18d016eb-e283-4afe-afd0-440a37500ad9 ONLINE 0 0 0

9ddba27c-6636-4f7b-94ed-eaa2d04840b8 ONLINE 0 0 0

errors: No known data errors

pool: boot-pool

state: ONLINE

scan: scrub repaired 0B in 00:00:10 with 0 errors on Sun Oct 20 03:45:12 2024

config:

NAME STATE READ WRITE CKSUM

boot-pool ONLINE 0 0 0

nvme0n1p3 ONLINE 0 0 0

errors: No known data errors

zpool import

root@Maomao[~]# zpool import

no pools available to import

lsblk -bo NAME,MODEL,PTTYPE,TYPE,START,SIZE,ROTA,PARTTYPENAME,PARTUUID

root@Maomao[~]# lsblk -bo NAME,MODEL,PTTYPE,TYPE,START,SIZE,ROTA,PARTTYPENAME,PARTUUID

NAME MODEL PTTYPE TYPE START SIZE ROTA PARTTYPENAME PARTUUID

sda Micron_5400_MTFDDAK480TGA disk 16013942784 0

sdb WDC WD221KFGX-68B9KN0 gpt disk 22000969973760 1

└─sdb1 gpt part 4096 22000966828544 1 Solaris /usr & Apple ZFS 2e65447e-53f6-4806-92aa-b78a2320adc6

sdc WDC WD221KFGX-68B9KN0 gpt disk 22000969973760 1

└─sdc1 gpt part 4096 22000966828544 1 Solaris /usr & Apple ZFS 13c4f80d-a241-4235-9ea9-733220b23212

sdd WDC WD221KFGX-68B9KN0 gpt disk 22000969973760 1

└─sdd1 gpt part 4096 22000966828544 1 Solaris /usr & Apple ZFS 263b3c64-2eec-4811-80d7-31d2d69b4277

sde WDC WD221KFGX-68B9KN0 gpt disk 22000969973760 1

└─sde1 gpt part 4096 22000966828544 1 Solaris /usr & Apple ZFS 9fa30ff9-1363-4a71-bb68-9f3807ea9409

sdf WDC WD221KFGX-68B9KN0 gpt disk 22000969973760 1

└─sdf1 gpt part 4096 22000966828544 1 Solaris /usr & Apple ZFS b1044e84-8c3d-4672-901e-d6f2804c7bf3

sdg WDC WD221KFGX-68B9KN0 gpt disk 16013942784 1

└─sdg1 gpt part 4096 16011756032 1 Solaris /usr & Apple ZFS 9ddba27c-6636-4f7b-94ed-eaa2d04840b8

sdh Micron_5400_MTFDDAK480TGA gpt disk 480103981056 0

└─sdh1 gpt part 4096 480101007872 0 Solaris /usr & Apple ZFS 18d016eb-e283-4afe-afd0-440a37500ad9

sdi WDC WD221KFGX-68B9KN0 gpt disk 22000969973760 1

└─sdi1 gpt part 4096 22000966828544 1 Solaris /usr & Apple ZFS 230fad11-ee6e-4a72-b3f9-f920464ade6b

sdj WDC WD221KFGX-68B9KN0 gpt disk 22000969973760 1

└─sdj1 gpt part 4096 22000966828544 1 Solaris /usr & Apple ZFS a98aa3d9-156b-4e15-8086-41f55436530d

sdk WDC WD221KFGX-68B9KN0 gpt disk 22000969973760 1

└─sdk1 gpt part 4096 22000966828544 1 Solaris /usr & Apple ZFS 8b066482-6b4e-43e3-9837-7c9139e73b2d

nvme0n1 iXTSUN3SCEQ120R1 gpt disk 120034123776 0

├─nvme0n1p1 gpt part 4096 1048576 0 BIOS boot 9792f794-2d14-4d7d-8720-cabe7dec74fc

├─nvme0n1p2 gpt part 6144 536870912 0 EFI System 0570deb9-a506-4317-b458-3fa8edb20ff5

├─nvme0n1p3 gpt part 34609152 102314221056 0 Solaris /usr & Apple ZFS f35f35cd-eed6-4748-affe-a9e7e7852057

└─nvme0n1p4 gpt part 1054720 17179869184 0 Linux swap 20985b27-07ad-48c4-ba82-3bc7421b00ce

└─nvme0n1p4 crypt 17179869184 0

lspci

root@Maomao[~]# lspci

00:00.0 Host bridge: Intel Corporation Atom Processor C3000 Series System Agent (rev 11)

00:04.0 Host bridge: Intel Corporation Atom Processor C3000 Series Error Registers (rev 11)

00:05.0 Generic system peripheral [0807]: Intel Corporation Atom Processor C3000 Series Root Complex Event Collector (rev 11)

00:06.0 PCI bridge: Intel Corporation Atom Processor C3000 Series Integrated QAT Root Port (rev 11)

00:09.0 PCI bridge: Intel Corporation Atom Processor C3000 Series PCI Express Root Port #0 (rev 11)

00:10.0 PCI bridge: Intel Corporation Atom Processor C3000 Series PCI Express Root Port #6 (rev 11)

00:11.0 PCI bridge: Intel Corporation Atom Processor C3000 Series PCI Express Root Port #7 (rev 11)

00:12.0 System peripheral: Intel Corporation Atom Processor C3000 Series SMBus Contoller - Host (rev 11)

00:13.0 SATA controller: Intel Corporation Atom Processor C3000 Series SATA Controller 0 (rev 11)

00:14.0 SATA controller: Intel Corporation Atom Processor C3000 Series SATA Controller 1 (rev 11)

00:15.0 USB controller: Intel Corporation Atom Processor C3000 Series USB 3.0 xHCI Controller (rev 11)

00:16.0 PCI bridge: Intel Corporation Atom Processor C3000 Series Integrated LAN Root Port #0 (rev 11)

00:18.0 Communication controller: Intel Corporation Atom Processor C3000 Series ME HECI 1 (rev 11)

00:1f.0 ISA bridge: Intel Corporation Atom Processor C3000 Series LPC or eSPI (rev 11)

00:1f.2 Memory controller: Intel Corporation Atom Processor C3000 Series Power Management Controller (rev 11)

00:1f.4 SMBus: Intel Corporation Atom Processor C3000 Series SMBus controller (rev 11)

00:1f.5 Serial bus controller: Intel Corporation Atom Processor C3000 Series SPI Controller (rev 11)

01:00.0 Co-processor: Intel Corporation Atom Processor C3000 Series QuickAssist Technology (rev 11)

03:00.0 Non-Volatile memory controller: Phison Electronics Corporation PS5013 E13 NVMe Controller (rev 01)

04:00.0 PCI bridge: ASPEED Technology, Inc. AST1150 PCI-to-PCI Bridge (rev 03)

05:00.0 VGA compatible controller: ASPEED Technology, Inc. ASPEED Graphics Family (rev 30)

06:00.0 Ethernet controller: Intel Corporation Ethernet Connection X553/X557-AT 10GBASE-T (rev 11)

06:00.1 Ethernet controller: Intel Corporation Ethernet Connection X553/X557-AT 10GBASE-T (rev 11)

- TrueNAS Mini 12 x 3.5" SATA hot-swap bays, 350W Power Supply - 100-240V 50/60Hz input power (auto-switching). Includes Short Rail Kit - 19" to 26.6" rackmount depth

- TrueNAS SCALE is the hyper-converged version of TrueNAS Open Storage. Built on OpenZFS data protection and management, TrueNAS SCALE provides block, file, and object storage, and support for VMs and Kubernetes.

- Standard Enterprise Drives

- Eight-Core 2.2GHz CPU, 64GB ECC DDR4 RAM, 2 x USB 2.0 Ports (Rear), 1 x USB 3.0 Port (Rear), 1GbE IPMI Port, 1x Internal TrueNAS M.2 Boot Device

- Dual 10GbE Base-T ports (100 Meters Max on CAT6A) - Integrated. 2x Cat6 cables included

- 9 TrueNAS Mini 22TB Enterprise (RED PRO) SATA3 256MB Cache HDD

- 1 TrueNAS Mini High-Performance SSD Read Cache

- 1 TrueNAS Mini High-Performance SSD Write Cache - overprovisioned to 16GB

I think I see the problem. That one drive should be reporting as another 22T, not 16G
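The mismatch can also be spotted mechanically from the `lsblk` output above. This is only a sketch: the function name is made up, and it relies on the assumption that overprovisioning can only shrink a disk, so any disk smaller than the largest one of the same model is worth a look. The sizes are copied from a subset of the rows in the thread.

```python
from collections import defaultdict


def find_shrunken(disks):
    """Return names of disks smaller than the largest disk of the same model."""
    largest = defaultdict(int)
    for _name, model, size in disks:
        largest[model] = max(largest[model], size)
    return [name for name, model, size in disks if size < largest[model]]


# (name, model, size in bytes), taken from the lsblk output in this thread.
disks = [
    ("sda", "Micron_5400_MTFDDAK480TGA", 16013942784),
    ("sdb", "WDC WD221KFGX-68B9KN0", 22000969973760),
    ("sdc", "WDC WD221KFGX-68B9KN0", 22000969973760),
    ("sdg", "WDC WD221KFGX-68B9KN0", 16013942784),
    ("sdh", "Micron_5400_MTFDDAK480TGA", 480103981056),
]

print(find_shrunken(disks))  # ['sda', 'sdg']
```

`sda` is expected here, since the order lists a write cache SSD overprovisioned to 16GB. `sdg` is the surprise: a 22TB model reporting the same 16GB size.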

That’s a bingo, looks like /dev/sdg got resized down to 16G. Did you add it as a write cache (“log”) device at some point?

You should be able to fix this though.

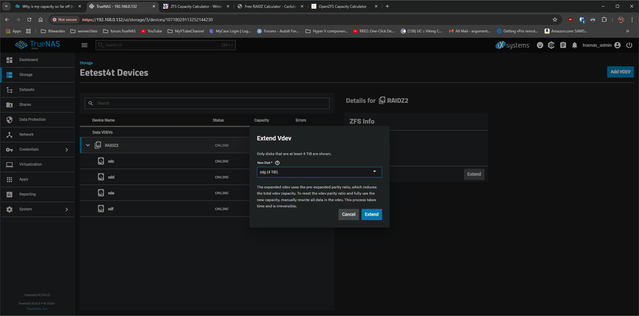

- From your Storage → Manage Devices option where this screenshot was taken: select your `sdg` spinning disk device and DETACH it from the cache vdev.

- Drop to the command line (System → Shell) and enter the command `sudo disk_resize sdg`, which should reset it back to the proper 22T size.

- If you don’t have any data on that pool, you could export/destroy it and build again. If you do have data, you can EXTEND the 8x22T RAIDZ2 vdev with your fixed 22T drive now.

- Finally, add the 16G SSD `sda` as a `log` type vdev, not a `cache`.

Should be all fixed up then.
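For a rough idea of what the ninth drive is worth, RAIDZ usable space is approximately (number of disks minus parity disks) times the disk size. The sketch below is back-of-the-envelope only: it ignores ZFS metadata, padding and reserved space, and the function name is my own.

```python
def raidz_usable_tb(n_disks: int, parity: int, disk_tb: float) -> float:
    """Approximate usable capacity of one RAIDZ vdev, in decimal TB."""
    if n_disks <= parity:
        raise ValueError("need more disks than parity devices")
    return (n_disks - parity) * disk_tb


print(raidz_usable_tb(8, 2, 22))  # 132
print(raidz_usable_tb(9, 2, 22))  # 154
```

So going from 8 to 9 drives in RAIDZ2 adds roughly one more drive’s worth (about 22TB) of usable space. Real-world figures will come out lower than this estimate.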

After talking to iX, no one knows why I was sent an overprovisioned drive.

I destroyed the Pool, resized the drive to 20TiB and recreated the Pool.

Thank you to everyone.